Graphics Processing Unit (GPU) Memory Hierarchy

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

PowerVR SGX Series5XT IP Core Family

The PowerVR™ SGX Series5XT Graphics Processing Unit (GPU) IP core family is a series of highly efficient graphics acceleration IP cores that meet the multimedia requirements of the next generation of consumer, communications and computing applications. The PowerVR SGX Series5XT architecture is fully scalable for a wide range of area and performance requirements, enabling it to target markets from low cost feature-rich mobile multimedia products to very high performance consoles and computing devices. The family incorporates the second-generation Universal Scalable Shader Engine (USSE2™), with a feature set that exceeds the requirements of OpenGL 2.0 and Microsoft Shader Model 3, enabling 2D, 3D and general purpose (GP-GPU) processing in a single core.

Features
• Most comprehensive IP core family and roadmap in the industry
• USSE2 delivers twice the peak floating point and instruction throughput of Series5 USSE
• YUV and colour space accelerators for improved performance
• Upgraded PowerVR Series5XT shader-driven tile-based deferred rendering (TBDR) architecture
• Multi-processor options enable scalability to higher performance
• Support for all industry standard mobile and desktop graphics APIs and operating systems
• Fully backwards compatible with PowerVR MBX and SGX Series5

PowerVR SGX Family
• Series5XT: SGX543MP1-16, SGX544MP1-16, SGX554MP1-16
• Series5: SGX520, SGX530, SGX531, SGX535, SGX540, SGX545

Benefits
• Extensive product line supports all area/performance requirements

Multi-standard API and OS: OpenGL

ATI Radeon™ HD 4870 Computation Highlights

AMD: Entering the Golden Age of Heterogeneous Computing
Michael Mantor, Senior GPU Compute Architect / Fellow, AMD Graphics Product Group

The 4 pillars of massively parallel compute offload
• Performance: Moore's Law → 2x < 18 months; frequency/power/complexity wall
• Power: parallel → opportunity for growth
• Price
• Programming models

The GPU is the first successful massively parallel COMMODITY architecture with a programming model that managed to tame 1000's of parallel threads in hardware to perform useful work efficiently.

Quick recap of where we are: perf, power, price
ATI Radeon™ HD 4850: 4x performance/W and performance/mm² in a year.
[Chart: ATI Radeon™ X1800 XT, X1900 XTX, X1950 PRO, HD 2900 XT, HD 3850, HD 4850]
Source of GigaFLOPS per watt: maximum theoretical performance divided by maximum board power. Source of GigaFLOPS per $: maximum theoretical performance divided by price as reported on www.buy.com as of 9/24/08.

ATI Radeon™ HD 4850: Designed to Perform in Single Slot
• SP compute power: 1.0 TFLOPS
• DP compute power: 200 GFLOPS
• Core clock speed: 625 MHz
• Stream processors: 800
• Memory type: GDDR3
• Memory capacity: 512 MB
• Max board power: 110 W
• Memory bandwidth: 64 GB/s

ATI Radeon™ HD 4870: First Graphics with GDDR5
• SP compute power: 1.2 TFLOPS
• DP compute power: 240 GFLOPS
• Core clock speed: 750 MHz
• Stream processors: 800
• Memory type: GDDR5, 3.6 Gbps
• Memory capacity: 512 MB
• Max board power: 160 W
• Memory bandwidth: 115.2 GB/s

ATI Radeon™ HD 4870 X2: Incredible Balance of Performance, Power, Price
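The headline figures quoted on these slides can be cross-checked with simple arithmetic. The Python sketch below is illustrative only. It assumes each stream processor retires one multiply-add (2 FLOPs) per clock and that the HD 4870 uses a 256-bit memory bus; neither figure appears in the excerpt, and the function names are hypothetical.

```python
# Figures taken from the slide excerpt: 800 stream processors per card,
# core clocks of 625 MHz (HD 4850) and 750 MHz (HD 4870),
# board power of 110 W and 160 W, GDDR5 at 3.6 Gbps per pin.

# Assumption (not stated in the excerpt): one multiply-add per clock,
# counted as 2 floating-point operations.
FLOPS_PER_SP_PER_CLOCK = 2

# Assumption (not stated in the excerpt): 256-bit memory interface.
ASSUMED_BUS_WIDTH_BITS = 256


def peak_gflops(stream_processors, core_mhz,
                flops_per_clock=FLOPS_PER_SP_PER_CLOCK):
    """Theoretical single-precision peak in GFLOPS."""
    return stream_processors * flops_per_clock * core_mhz / 1000.0


def bandwidth_gb_per_s(gbps_per_pin, bus_width_bits=ASSUMED_BUS_WIDTH_BITS):
    """Peak memory bandwidth in GB/s: per-pin rate times bus width in bytes."""
    return gbps_per_pin * bus_width_bits / 8.0


def gflops_per_watt(gflops, board_power_w):
    """The slide's efficiency metric: peak performance over max board power."""
    return gflops / board_power_w


if __name__ == "__main__":
    for name, mhz, watts in [("HD 4850", 625, 110), ("HD 4870", 750, 160)]:
        gflops = peak_gflops(800, mhz)
        print(name, gflops, "GFLOPS,",
              round(gflops_per_watt(gflops, watts), 2), "GFLOPS/W")
    print("HD 4870 bandwidth:", bandwidth_gb_per_s(3.6), "GB/s")
```

Under these assumptions the computed peaks match the quoted 1.0 and 1.2 TFLOPS, and the bandwidth matches the quoted 115.2 GB/s.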

An Evolution of Mobile Graphics

AN EVOLUTION OF MOBILE GRAPHICS
Michael C. Shebanow, Vice President, Advanced Processor Lab, Samsung Electronics
July 20, 2013

DISCLAIMER
• The views herein are my own
• They do not represent Samsung's vision nor product plans

Outline
• The Mobile Market
• Review of GPU Tech
• GPU Efficiency
• User Experience
• Tech Challenges
• Summary

The Rise of the Mobile GPU & Connectivity: A NEW WORLD COMING?

DISCRETE GPU MARKET
Flattening

MOBILE GPU MARKET
[Chart: smart phones, "phablets", tablets]
• In 2012, an estimated 800+ million mobile GPUs shipped
  • ~123M tablets
  • ~712M smart phones
• Will easily exceed 1B in the coming years
• Trend:
  • Discrete GPU relatively flat
  • Mobile is growing rapidly

WW INTERNET TRAFFIC
• Source: Cisco VNI
• Internet traffic growth rate is staggering
• 2012 total traffic is 13.7 GB per person per month
• 2012 smart phone traffic at 0.342 GB per person per month
• 2017 smart phone traffic expected at 2.7 GB per person per month

| Year | IP traffic (TB/sec) | Growth rate | Mobile INET traffic (TB/sec) |
|------|---------------------|-------------|------------------------------|
| 2005 | 0.9  |     | 0.00 |
| 2006 | 1.5  | 65% | 0.00 |
| 2007 | 2.5  | 61% | 0.01 |
| 2008 | 3.8  | 54% | 0.01 |
| 2009 | 5.6  | 45% | 0.04 |
| 2010 | 7.8  | 40% | 0.10 |
| 2011 | 10.6 | 36% | 0.23 |
| 2012 | 12.4 | 17% | 0.34 |

WHERE ARE WE HEADED?
• Enormous quantity of GPUs
• Large amount of interconnectivity
• Better I/O

GPU Pipelines: A BRIEF REVIEW OF GPU TECH

MOBILE GPU PIPELINE ARCHITECTURES
• Tile-based immediate mode rendering (TBIMR): IA → VS → CCV → RS → PS → ROP
• Tile-based deferred rendering (TBDR): IA → VS → CCV → scene → RS → PS → ROP

IA = input assembler, VS = vertex shader, CCV = cull, clip, viewport transform, RS = rasterization,

In re Graphics Processing Units Antitrust Litigation: Third Consolidated and Amended Class Action Complaint

Case M:07-cv-01826-WHA, Document 249, Filed 11/08/2007, Page 1 of 34

BOIES, SCHILLER & FLEXNER LLP
WILLIAM A. ISAACSON (pro hac vice)
5301 Wisconsin Ave. NW, Suite 800
Washington, D.C. 20015
Telephone: (202) 237-2727
Facsimile: (202) 237-6131
Email: [email protected]

BOIES, SCHILLER & FLEXNER LLP
JOHN F. COVE, JR. (CA Bar No. 212213)
DAVID W. SHAPIRO (CA Bar No. 219265)
KEVIN J. BARRY (CA Bar No. 229748)
1999 Harrison St., Suite 900
Oakland, CA 94612
Telephone: (510) 874-1000
Facsimile: (510) 874-1460
Email: [email protected], [email protected], [email protected]
Attorneys for Plaintiff Jordan Walker

BOIES, SCHILLER & FLEXNER LLP
PHILIP J. IOVIENO (pro hac vice)
ANNE M. NARDACCI (pro hac vice)
10 North Pearl Street, 4th Floor
Albany, NY 12207
Telephone: (518) 434-0600
Facsimile: (518) 434-0665
Email: [email protected], [email protected]
Interim Class Counsel for Direct Purchaser Plaintiffs

UNITED STATES DISTRICT COURT
NORTHERN DISTRICT OF CALIFORNIA

IN RE GRAPHICS PROCESSING UNITS ANTITRUST LITIGATION
Case No.: M:07-CV-01826-WHA
MDL No. 1826
This Document Relates to: ALL DIRECT PURCHASER ACTIONS

THIRD CONSOLIDATED AND AMENDED CLASS ACTION COMPLAINT FOR VIOLATION OF SECTION 1 OF THE SHERMAN ACT, 15 U.S.C. § 1
JURY TRIAL DEMANDED

Plaintiffs Jordan Walker, Michael Bensignor, d/b/a Mike's Computer Services, Fred Williams, and Karol Juskiewicz, on behalf of themselves and all others similarly situated in the United States, bring this action for damages and injunctive relief under the federal antitrust laws against Defendants named herein, demanding trial by jury, and complaining and alleging as follows:

NATURE OF THE CASE

1.

Driver Riva Tnt2 64

Driver Riva TNT2 64. The following products are supported by the drivers: TNT2, TNT2 Pro, TNT2 Ultra, TNT2 Model 64 (M64), TNT2 Model 64 (M64) Pro, Vanta, Vanta LT, GeForce. The NVIDIA TNT2™ was the first chipset to offer a bit frame buffer for better quality visuals at higher resolutions, bit color for TNT2 M64 Memory Speed. NVIDIA no longer provides hardware or software support for the NVIDIA Riva TNT GPU. The last Forceware unified display driver which. version now. NVIDIA RIVA TNT2 Model 64/Model 64 Pro is the first family of high performance. Drivers > Video & Graphic Cards. NVIDIA RIVA TNT2 Model 64/Model 64 Pro: The first chipset to offer a bit frame buffer for better quality visuals. Subcategory: Video Drivers. Update your computer's drivers using DriverMax, the free driver update tool - Display Adapters - NVIDIA - NVIDIA RIVA TNT2 Model 64/Model 64 Pro Computer. (In Windows 7 RC1 there were built-in TNT2 drivers.) Use the links on this page to download the latest version of NVIDIA RIVA TNT2 Model 64/Model 64 Pro (Microsoft Corporation) drivers. All drivers available for. NVIDIA RIVA TNT2 Model 64/Model 64 Pro - Driver Download. Updating your drivers with Driver Alert can help your computer in a number of ways. From adding. Nvidia RIVA TNT2 M64 specs and specifications. Price comparisons for the Nvidia RIVA TNT2 M64 and also where to download RIVA TNT2 M64 drivers. Windows 7 and Windows Vista both fail to recognize the Nvidia Riva TNT2 (Model 64/Model 64 Pro), which means you are restricted to a low.

(GPU) Computing

Graphics Processing Unit (GPU) computing

This section describes the graphics processing unit (GPU) computing feature of OptiSystem.

Note: The GPU computing feature is only configurable with OptiSystem Version 11 (or higher).

What is GPU computing?
GPU computing, or GPGPU, takes advantage of a computer's graphics processing card to speed up general purpose scientific and engineering computing tasks.

Compute Unified Device Architecture (CUDA) implementation for OptiSystem
NVIDIA revolutionized GPGPU and accelerated computing when it introduced a new parallel computing architecture: Compute Unified Device Architecture (CUDA). CUDA is both a hardware and software architecture for issuing and managing computations within the GPU, thus allowing it to operate as a generic data-parallel computing device. CUDA allows the programmer to take advantage of the parallel computing power of an NVIDIA graphics card to perform general purpose computations.

OptiSystem CUDA implementation
The OptiSystem model for GPU computing uses a central processing unit (CPU) and a GPU together in a heterogeneous co-processing computing model. The sequential part of the application runs on the CPU and the computationally intensive part is accelerated by the GPU. In the OptiSystem GPU programming model, the application has been modified to map the compute-intensive kernels to the GPU. The remainder of the application remains on the CPU.

CUDA parallel computing architecture
The NVIDIA CUDA parallel computing architecture is enabled on GeForce®, Quadro®, and Tesla™ products. Whereas GeForce and Quadro are designed for consumer graphics and professional visualization respectively, the Tesla product family is designed from the ground up for parallel computing, offers exclusive computing features, and is the recommended choice for the OptiSystem GPU.

NVIDIA Quadro P4000

NVIDIA Quadro P4000
• Graphics processor: GP104
• Cores: 1792
• TMUs: 112
• ROPs: 64
• Memory size: 8192 MB
• Memory type: GDDR5
• Bus width: 256 bit

The Quadro P4000 is a professional graphics card by NVIDIA, launched in February 2017. Built on the 16 nm process, and based on the GP104 graphics processor, the card supports DirectX 12.0. The GP104 graphics processor is a large chip with a die area of 314 mm² and 7,200 million transistors. Unlike the fully unlocked GeForce GTX 1080, which uses the same GPU but has all 2560 shaders enabled, NVIDIA has disabled some shading units on the Quadro P4000 to reach the product's target shader count. It features 1792 shading units, 112 texture mapping units and 64 ROPs. NVIDIA has placed 8,192 MB of GDDR5 memory on the card, connected through a 256-bit memory interface. The GPU operates at a frequency of 1227 MHz, which can be boosted up to 1480 MHz; the memory runs at 1502 MHz. We recommend the NVIDIA Quadro P4000 for gaming with highest details at resolutions up to, and including, 5760x1080. Being a single-slot card, the NVIDIA Quadro P4000 draws power from one 6-pin power connector, with power draw rated at 105 W maximum. Display outputs include: 4x DisplayPort. The Quadro P4000 is connected to the rest of the system using a PCIe 3.0 x16 interface. The card measures 241 mm in length, and features a single-slot cooling solution.

Graphics Processor
• GPU Name: GP104
• Architecture: Pascal
• Process Size: 16 nm
• Transistors: 7,200

Graphics Card
• Released: Feb 6th, 2017
• Production Status: Active
• Bus Interface: PCIe 3.0 x16

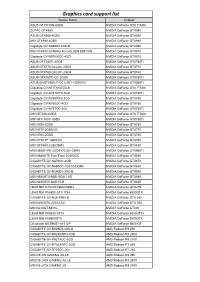

Graphics Card Support List

Graphics card support list

| Device Name | Chipset |
|-------------|---------|
| ASUS GTXTITAN-6GD5 | NVIDIA GeForce GTX TITAN |
| ZOTAC GTX980 | NVIDIA GeForce GTX980 |
| ASUS GTX980-4GD5 | NVIDIA GeForce GTX980 |
| MSI GTX980-4GD5 | NVIDIA GeForce GTX980 |
| Gigabyte GV-N980D5-4GD-B | NVIDIA GeForce GTX980 |
| MSI GTX970 GAMING 4G GOLDEN EDITION | NVIDIA GeForce GTX970 |
| Gigabyte GV-N970IXOC-4GD | NVIDIA GeForce GTX970 |
| ASUS GTX780TI-3GD5 | NVIDIA GeForce GTX780Ti |
| ASUS GTX770-DC2OC-2GD5 | NVIDIA GeForce GTX770 |
| ASUS GTX760-DC2OC-2GD5 | NVIDIA GeForce GTX760 |
| ASUS GTX750TI-OC-2GD5 | NVIDIA GeForce GTX750Ti |
| ASUS ENGTX560-Ti-DCII/2D1-1GD5/1G | NVIDIA GeForce GTX560Ti |
| Gigabyte GV-NTITAN-6GD-B | NVIDIA GeForce GTX TITAN |
| Gigabyte GV-N78TWF3-3GD | NVIDIA GeForce GTX780Ti |
| Gigabyte GV-N780WF3-3GD | NVIDIA GeForce GTX780 |
| Gigabyte GV-N760OC-4GD | NVIDIA GeForce GTX760 |
| Gigabyte GV-N75TOC-2GI | NVIDIA GeForce GTX750Ti |
| MSI NTITAN-6GD5 | NVIDIA GeForce GTX TITAN |
| MSI GTX 780Ti 3GD5 | NVIDIA GeForce GTX780Ti |
| MSI N780-3GD5 | NVIDIA GeForce GTX780 |
| MSI N770-2GD5/OC | NVIDIA GeForce GTX770 |
| MSI N760-2GD5 | NVIDIA GeForce GTX760 |
| MSI N750 TF 1GD5/OC | NVIDIA GeForce GTX750 |
| MSI GTX680-2GB/DDR5 | NVIDIA GeForce GTX680 |
| MSI N660Ti-PE-2GD5-OC/2G-DDR5 | NVIDIA GeForce GTX660Ti |
| MSI N680GTX Twin Frozr 2GD5/OC | NVIDIA GeForce GTX680 |
| GIGABYTE GV-N670OC-2GD | NVIDIA GeForce GTX670 |
| GIGABYTE GV-N650OC-1GI/1G-DDR5 | NVIDIA GeForce GTX650 |
| GIGABYTE GV-N590D5-3GD-B | NVIDIA GeForce GTX590 |
| MSI N580GTX-M2D15D5/1.5G | NVIDIA GeForce GTX580 |
| MSI N465GTX-M2D1G-B | NVIDIA GeForce GTX465 |
| LEADTEK GTX275/896M-DDR3 | NVIDIA GeForce GTX275 |
| LEADTEK PX8800 GTX TDH | NVIDIA GeForce 8800GTX |
| GIGABYTE GV-N26-896H-B | |

AMD Radeon E8860

Components for AMD's Embedded Radeon™ E8860 GPU
coreavi.com. Revision: 13Nov2020

INTRODUCTION
The E8860 Embedded Radeon GPU available from CoreAVI comprises temperature screened GPUs, safety certifiable OpenGL®-based drivers, and safety certifiable GPU tools which have been pre-integrated and validated together to significantly de-risk the challenges typically faced when integrating hardware and software components. The platform is an off-the-shelf foundation upon which safety certifiable applications can be built with confidence.

Figure 1: CoreAVI Support for E8860 GPU

EXTENDED TEMPERATURE RANGE
CoreAVI provides extended temperature versions of the E8860 GPU to facilitate its use in rugged embedded applications. CoreAVI functionally tests the E8860 over -40C Tj to +105C Tj, increasing the manufacturing yield for hardware suppliers while reducing supply delays to end customers.

E8860 GPU LONG TERM SUPPLY AND SUPPORT
CoreAVI has provided consistent and dedicated support for the supply and use of the AMD embedded GPUs within the rugged Mil/Aero/Avionics market segment for over a decade. With the E8860, CoreAVI will continue that focused support to ensure that the software, hardware and long-life support are provided to meet the needs of customers' system life cycles. CoreAVI has extensive environmentally controlled storage facilities which are used to store the GPUs supplied to the Mil/Aero/Avionics marketplace, ensuring that a ready supply is available for the duration of any program. CoreAVI also provides the post Last Time Buy storage of GPUs and is often able to provide additional quantities of components when COTS hardware partners receive increased volume for existing products/systems requiring additional inventory.

Graphics: Mesa, AMDVLK, Adreno and Protected Xe Path

Published on Tux Machines (http://www.tuxmachines.org)

Graphics: Mesa, AMDVLK, Adreno and Protected Xe Path
By Roy Schestowitz. Submitted on Monday 8th of February 2021 11:48:11 PM. Filed under Graphics/Benchmarks [1]

Panfrost Gallium3D Lands Its New Bifrost Scheduler In Mesa 21.1 - Phoronix [2]
Hitting Mesa 21.1 this morning is a scheduler implementation for Panfrost Gallium3D, the open-source Arm Mali graphics driver. Lead Panfrost developer Alyssa Rosenzweig has been working to implement a scheduler in Panfrost for the Arm Bifrost graphics code path. The scheduler has been in the works for a number of months, is passing the relevant conformance tests, and has now been merged.

AMDVLK 2021.Q1.3 Brings Performance Tuning For War Thunder - Phoronix [3]
AMDVLK 2021.Q1.3 is out this morning as the latest snapshot of the official open-source AMD Radeon Vulkan driver for Linux systems that is derived from their shared platform driver sources. AMDVLK 2021.Q1.3 is on the lighter side, with AMDVLK 2021.Q1.2 having arrived just over one week ago. Of the two listed driver changes, AMDVLK 2021.Q1.3 is rebuilt against the Vulkan API 1.2.168 headers.

Freedreno's MSM DRM Driver Adds More Adreno Support, Speedbin Capability For Linux 5.12 - Phoronix [4]
The MSM Direct Rendering Manager driver, originally developed as part of the Freedreno effort for open-source Qualcomm Adreno graphics on Linux and now supported by the likes of Google and Qualcomm's Code Aurora engineers, has some notable changes in store for the next Linux kernel cycle.

A Configurable General Purpose Graphics Processing Unit for Power, Performance, and Area Analysis

Iowa State University Capstones, Theses and Dissertations: Creative Components, Summer 2019

A configurable general purpose graphics processing unit for power, performance, and area analysis
Garrett Lies

Part of the Digital Circuits Commons

Recommended Citation: Lies, Garrett, "A configurable general purpose graphics processing unit for power, performance, and area analysis" (2019). Creative Components. 329. https://lib.dr.iastate.edu/creativecomponents/329

A Configurable General Purpose Graphics Processing Unit for Power, Performance, and Area Analysis
by Garrett Joseph Lies

A Creative Component submitted to the graduate faculty in partial fulfillment of the requirements for the degree of MASTER OF SCIENCE
Major: Computer Engineering (Very-Large Scale Integration)
Program of Study Committee: Joseph Zambreno, Major Professor

The student author, whose presentation of the scholarship herein was approved by the program of study committee, is solely responsible for the content of this dissertation/thesis. The Graduate College will ensure this dissertation/thesis is globally accessible and will not permit alterations after a degree is conferred.

Iowa State University, Ames, Iowa, 2019
Copyright © Garrett Joseph Lies, 2019. All rights reserved.

TABLE OF CONTENTS
• LIST OF TABLES
• LIST OF FIGURES
• ACKNOWLEDGMENTS
• ABSTRACT
• CHAPTER 1.

On the Energy Efficiency of Graphics Processing Units for Scientific

On the Energy Efficiency of Graphics Processing Units for Scientific Computing
S. Huang, S. Xiao, W. Feng
Department of Computer Science, Virginia Tech
{huangs, shucai, wfeng}@vt.edu

Abstract
The graphics processing unit (GPU) has emerged as a computational accelerator that dramatically reduces the time to discovery in high-end computing (HEC). However, while today's state-of-the-art GPU can easily reduce the execution time of a parallel code by many orders of magnitude, it arguably comes at the expense of significant power and energy consumption. For example, the NVIDIA GTX 280 video card is rated at 236 watts, which is as much as the rest of a compute node, thus requiring a 500-W power supply. As a consequence, the GPU has been viewed as a "non-green" computing solution.

This paper seeks to characterize, and perhaps debunk, the notion of a "power-hungry GPU" via an empirical study of the performance, power, and energy characteristics of GPUs for scientific computing.

[...] massively parallel, multi-threaded multiprocessor design, combined with an appropriate data-parallel mapping of an application to it.

The emergence of more general-purpose programming environments, such as Brook+ [1] and CUDA [2], has made it easier to implement large-scale applications on GPUs. The CUDA programming model from NVIDIA was created for developing applications on NVIDIA's GPU cards, such as the GeForce 8 series. Similarly, Brook+ supports stream processing on the AMD/ATi GPU cards. For the purposes of this paper, we use CUDA atop an NVIDIA GTX 280 video card for our scientific computation.

GPGPU research has focused predominantly on accelerating scientific applications. This paper not only accelerates scientific applications via GPU computing, but it also does so in the context of characterizing
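The trade-off described in this abstract comes down to energy being power multiplied by time. The Python sketch below is a rough illustration, not the authors' method. It takes the 236 W rating from the abstract, reads "as much as the rest of a compute node" as a 236 W node, and assumes both draw their rated power for the whole run. The function names and the 100-second baseline runtime are hypothetical.

```python
GPU_RATED_W = 236.0   # GTX 280 rating quoted in the abstract
NODE_REST_W = 236.0   # assumption: rest of the node draws about the same


def energy_joules(power_w, seconds):
    """Energy consumed at constant power: E = P * t."""
    return power_w * seconds


def break_even_speedup(node_power_w, gpu_power_w):
    """Speedup at which CPU-only and CPU+GPU runs use equal energy.

    CPU-only energy is P_node * t; GPU-assisted energy is
    (P_node + P_gpu) * t / s. Setting them equal gives
    s = (P_node + P_gpu) / P_node.
    """
    return (node_power_w + gpu_power_w) / node_power_w


if __name__ == "__main__":
    s = break_even_speedup(NODE_REST_W, GPU_RATED_W)
    baseline_seconds = 100.0  # hypothetical CPU-only runtime
    cpu_only = energy_joules(NODE_REST_W, baseline_seconds)
    with_gpu = energy_joules(NODE_REST_W + GPU_RATED_W, baseline_seconds / s)
    print("break-even speedup:", s)
    print("CPU-only energy (J):", cpu_only)
    print("GPU-assisted energy at break-even (J):", with_gpu)
```

Under these simplifying assumptions, any speedup above the break-even value means the GPU-assisted run consumes less total energy despite its higher power draw.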