Graphics Processing Units

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

The Importance of Data

The landscape of Parallel Programing Models Part 2: The importance of Data Michael Wong and Rod Burns Codeplay Software Ltd. Distiguished Engineer, Vice President of Ecosystem IXPUG 2020 2 © 2020 Codeplay Software Ltd. Distinguished Engineer Michael Wong ● Chair of SYCL Heterogeneous Programming Language ● C++ Directions Group ● ISOCPP.org Director, VP http://isocpp.org/wiki/faq/wg21#michael-wong ● [email protected] ● [email protected] Ported ● Head of Delegation for C++ Standard for Canada Build LLVM- TensorFlow to based ● Chair of Programming Languages for Standards open compilers for Council of Canada standards accelerators Chair of WG21 SG19 Machine Learning using SYCL Chair of WG21 SG14 Games Dev/Low Latency/Financial Trading/Embedded Implement Releasing open- ● Editor: C++ SG5 Transactional Memory Technical source, open- OpenCL and Specification standards based AI SYCL for acceleration tools: ● Editor: C++ SG1 Concurrency Technical Specification SYCL-BLAS, SYCL-ML, accelerator ● MISRA C++ and AUTOSAR VisionCpp processors ● Chair of Standards Council Canada TC22/SC32 Electrical and electronic components (SOTIF) ● Chair of UL4600 Object Tracking ● http://wongmichael.com/about We build GPU compilers for semiconductor companies ● C++11 book in Chinese: Now working to make AI/ML heterogeneous acceleration safe for https://www.amazon.cn/dp/B00ETOV2OQ autonomous vehicle 3 © 2020 Codeplay Software Ltd. Acknowledgement and Disclaimer Numerous people internal and external to the original C++/Khronos group, in industry and academia, have made contributions, influenced ideas, written part of this presentations, and offered feedbacks to form part of this talk. But I claim all credit for errors, and stupid mistakes. These are mine, all mine! You can’t have them. -

ATI Radeon™ HD 4870 Computation Highlights

AMD Entering the Golden Age of Heterogeneous Computing Michael Mantor Senior GPU Compute Architect / Fellow AMD Graphics Product Group [email protected] 1 The 4 Pillars of massively parallel compute offload •Performance M’Moore’s Law Î 2x < 18 Month s Frequency\Power\Complexity Wall •Power Parallel Î Opportunity for growth •Price • Programming Models GPU is the first successful massively parallel COMMODITY architecture with a programming model that managgped to tame 1000’s of parallel threads in hardware to perform useful work efficiently 2 Quick recap of where we are – Perf, Power, Price ATI Radeon™ HD 4850 4x Performance/w and Performance/mm² in a year ATI Radeon™ X1800 XT ATI Radeon™ HD 3850 ATI Radeon™ HD 2900 XT ATI Radeon™ X1900 XTX ATI Radeon™ X1950 PRO 3 Source of GigaFLOPS per watt: maximum theoretical performance divided by maximum board power. Source of GigaFLOPS per $: maximum theoretical performance divided by price as reported on www.buy.com as of 9/24/08 ATI Radeon™HD 4850 Designed to Perform in Single Slot SP Compute Power 1.0 T-FLOPS DP Compute Power 200 G-FLOPS Core Clock Speed 625 Mhz Stream Processors 800 Memory Type GDDR3 Memory Capacity 512 MB Max Board Power 110W Memory Bandwidth 64 GB/Sec 4 ATI Radeon™HD 4870 First Graphics with GDDR5 SP Compute Power 1.2 T-FLOPS DP Compute Power 240 G-FLOPS Core Clock Speed 750 Mhz Stream Processors 800 Memory Type GDDR5 3.6Gbps Memory Capacity 512 MB Max Board Power 160 W Memory Bandwidth 115.2 GB/Sec 5 ATI Radeon™HD 4870 X2 Incredible Balance of Performance,,, Power, Price -

AMD Accelerated Parallel Processing Opencl Programming Guide

AMD Accelerated Parallel Processing OpenCL Programming Guide November 2013 rev2.7 © 2013 Advanced Micro Devices, Inc. All rights reserved. AMD, the AMD Arrow logo, AMD Accelerated Parallel Processing, the AMD Accelerated Parallel Processing logo, ATI, the ATI logo, Radeon, FireStream, FirePro, Catalyst, and combinations thereof are trade- marks of Advanced Micro Devices, Inc. Microsoft, Visual Studio, Windows, and Windows Vista are registered trademarks of Microsoft Corporation in the U.S. and/or other jurisdic- tions. Other names are for informational purposes only and may be trademarks of their respective owners. OpenCL and the OpenCL logo are trademarks of Apple Inc. used by permission by Khronos. The contents of this document are provided in connection with Advanced Micro Devices, Inc. (“AMD”) products. AMD makes no representations or warranties with respect to the accuracy or completeness of the contents of this publication and reserves the right to make changes to specifications and product descriptions at any time without notice. The information contained herein may be of a preliminary or advance nature and is subject to change without notice. No license, whether express, implied, arising by estoppel or other- wise, to any intellectual property rights is granted by this publication. Except as set forth in AMD’s Standard Terms and Conditions of Sale, AMD assumes no liability whatsoever, and disclaims any express or implied warranty, relating to its products including, but not limited to, the implied warranty of merchantability, fitness for a particular purpose, or infringement of any intellectual property right. AMD’s products are not designed, intended, authorized or warranted for use as compo- nents in systems intended for surgical implant into the body, or in other applications intended to support or sustain life, or in any other application in which the failure of AMD’s product could create a situation where personal injury, death, or severe property or envi- ronmental damage may occur. -

An Evolution of Mobile Graphics

AN EVOLUTION OF MOBILE GRAPHICS Michael C. Shebanow Vice President, Advanced Processor Lab Samsung Electronics July 20, 20131 DISCLAIMER • The views herein are my own • They do not represent Samsung’s vision nor product plans 2 • The Mobile Market • Review of GPU Tech • GPU Efficiency • User Experience • Tech Challenges • Summary 3 The Rise of the Mobile GPU & Connectivity A NEW WORLD COMING? 4 DISCRETE GPU MARKET Flattening 5 MOBILE GPU MARKET Smart • In 2012, an estimated 800+ Phones million mobile GPUs shipped “Phablets” • ~123M tablets • ~712M smart phones Tablets • Will easily exceed 1B in the coming years • Trend: • Discrete GPU relatively flat • Mobile is growing rapidly 6 WW INTERNET TRAFFIC • Source: Cisco VNI Mobile INET IP Traffic growth Traffic • Internet traffic growth Year (TB/sec) rate (TB/sec) rate is staggering 2005 0.9 0.00 2006 1.5 65% 0.00 • 2012 total traffic is 2007 2.5 61% 0.01 13.7 GB per person 2008 3.8 54% 0.01 per month 2009 5.6 45% 0.04 2010 7.8 40% 0.10 • 2012 smart phone 2011 10.6 36% 0.23 traffic at 2012 12.4 17% 0.34 0.342 GB per person per month • 2017 smart phone traffic expected at 2.7 GB per person per month 7 WHERE ARE WE HEADED?… • Enormous quantity of GPUs • Large amount of interconnectivity • Better I/O 8 GPU Pipelines A BRIEF REVIEW OF GPU TECH 9 MOBILE GPU PIPELINE ARCHITECTURES Tile-based immediate mode rendering IA VS CCV RS PS ROP (TBIMR) Tile-based deferred IA VS CCV scene rendering (TBDR) RS PS ROP IA = input assembler VS = vertex shader CCV = cull, clip, viewport transform RS = rasterization, -

Exploring Weak Scalability for FEM Calculations on a GPU-Enhanced Cluster

Exploring weak scalability for FEM calculations on a GPU-enhanced cluster Dominik G¨oddeke a,∗,1, Robert Strzodka b,2, Jamaludin Mohd-Yusof c, Patrick McCormick c,3, Sven H.M. Buijssen a, Matthias Grajewski a and Stefan Turek a aInstitute of Applied Mathematics, University of Dortmund bStanford University, Max Planck Center cComputer, Computational and Statistical Sciences Division, Los Alamos National Laboratory Abstract The first part of this paper surveys co-processor approaches for commodity based clusters in general, not only with respect to raw performance, but also in view of their system integration and power consumption. We then extend previous work on a small GPU cluster by exploring the heterogeneous hardware approach for a large-scale system with up to 160 nodes. Starting with a conventional commodity based cluster we leverage the high bandwidth of graphics processing units (GPUs) to increase the overall system bandwidth that is the decisive performance factor in this scenario. Thus, even the addition of low-end, out of date GPUs leads to improvements in both performance- and power-related metrics. Key words: graphics processors, heterogeneous computing, parallel multigrid solvers, commodity based clusters, Finite Elements PACS: 02.70.-c (Computational Techniques (Mathematics)), 02.70.Dc (Finite Element Analysis), 07.05.Bx (Computer Hardware and Languages), 89.20.Ff (Computer Science and Technology) ∗ Corresponding author. Address: Vogelpothsweg 87, 44227 Dortmund, Germany. Email: [email protected], phone: (+49) 231 755-7218, fax: -5933 1 Supported by the German Science Foundation (DFG), project TU102/22-1 2 Supported by a Max Planck Center for Visual Computing and Communication fellowship 3 Partially supported by the U.S. -

NVIDIA Quadro P4000

NVIDIA Quadro P4000 GP104 1792 112 64 8192 MB GDDR5 256 bit GRAPHICS PROCESSOR CORES TMUS ROPS MEMORY SIZE MEMORY TYPE BUS WIDTH The Quadro P4000 is a professional graphics card by NVIDIA, launched in February 2017. Built on the 16 nm process, and based on the GP104 graphics processor, the card supports DirectX 12.0. The GP104 graphics processor is a large chip with a die area of 314 mm² and 7,200 million transistors. Unlike the fully unlocked GeForce GTX 1080, which uses the same GPU but has all 2560 shaders enabled, NVIDIA has disabled some shading units on the Quadro P4000 to reach the product's target shader count. It features 1792 shading units, 112 texture mapping units and 64 ROPs. NVIDIA has placed 8,192 MB GDDR5 memory on the card, which are connected using a 256‐bit memory interface. The GPU is operating at a frequency of 1227 MHz, which can be boosted up to 1480 MHz, memory is running at 1502 MHz. We recommend the NVIDIA Quadro P4000 for gaming with highest details at resolutions up to, and including, 5760x1080. Being a single‐slot card, the NVIDIA Quadro P4000 draws power from 1x 6‐pin power connectors, with power draw rated at 105 W maximum. Display outputs include: 4x DisplayPort. Quadro P4000 is connected to the rest of the system using a PCIe 3.0 x16 interface. The card measures 241 mm in length, and features a single‐slot cooling solution. Graphics Processor Graphics Card GPU Name: GP104 Released: Feb 6th, 2017 Architecture: Pascal Production Active Status: Process Size: 16 nm Bus Interface: PCIe 3.0 x16 Transistors: 7,200 -

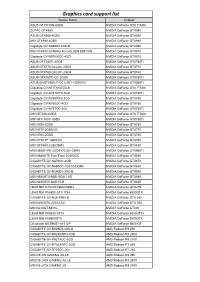

Graphics Card Support List

Graphics card support list Device Name Chipset ASUS GTXTITAN-6GD5 NVIDIA GeForce GTX TITAN ZOTAC GTX980 NVIDIA GeForce GTX980 ASUS GTX980-4GD5 NVIDIA GeForce GTX980 MSI GTX980-4GD5 NVIDIA GeForce GTX980 Gigabyte GV-N980D5-4GD-B NVIDIA GeForce GTX980 MSI GTX970 GAMING 4G GOLDEN EDITION NVIDIA GeForce GTX970 Gigabyte GV-N970IXOC-4GD NVIDIA GeForce GTX970 ASUS GTX780TI-3GD5 NVIDIA GeForce GTX780Ti ASUS GTX770-DC2OC-2GD5 NVIDIA GeForce GTX770 ASUS GTX760-DC2OC-2GD5 NVIDIA GeForce GTX760 ASUS GTX750TI-OC-2GD5 NVIDIA GeForce GTX750Ti ASUS ENGTX560-Ti-DCII/2D1-1GD5/1G NVIDIA GeForce GTX560Ti Gigabyte GV-NTITAN-6GD-B NVIDIA GeForce GTX TITAN Gigabyte GV-N78TWF3-3GD NVIDIA GeForce GTX780Ti Gigabyte GV-N780WF3-3GD NVIDIA GeForce GTX780 Gigabyte GV-N760OC-4GD NVIDIA GeForce GTX760 Gigabyte GV-N75TOC-2GI NVIDIA GeForce GTX750Ti MSI NTITAN-6GD5 NVIDIA GeForce GTX TITAN MSI GTX 780Ti 3GD5 NVIDIA GeForce GTX780Ti MSI N780-3GD5 NVIDIA GeForce GTX780 MSI N770-2GD5/OC NVIDIA GeForce GTX770 MSI N760-2GD5 NVIDIA GeForce GTX760 MSI N750 TF 1GD5/OC NVIDIA GeForce GTX750 MSI GTX680-2GB/DDR5 NVIDIA GeForce GTX680 MSI N660Ti-PE-2GD5-OC/2G-DDR5 NVIDIA GeForce GTX660Ti MSI N680GTX Twin Frozr 2GD5/OC NVIDIA GeForce GTX680 GIGABYTE GV-N670OC-2GD NVIDIA GeForce GTX670 GIGABYTE GV-N650OC-1GI/1G-DDR5 NVIDIA GeForce GTX650 GIGABYTE GV-N590D5-3GD-B NVIDIA GeForce GTX590 MSI N580GTX-M2D15D5/1.5G NVIDIA GeForce GTX580 MSI N465GTX-M2D1G-B NVIDIA GeForce GTX465 LEADTEK GTX275/896M-DDR3 NVIDIA GeForce GTX275 LEADTEK PX8800 GTX TDH NVIDIA GeForce 8800GTX GIGABYTE GV-N26-896H-B -

Programming Graphics Hardware Overview of the Tutorial: Afternoon

Tutorial 5 ProgrammingProgramming GraphicsGraphics HardwareHardware Randy Fernando, Mark Harris, Matthias Wloka, Cyril Zeller Overview of the Tutorial: Morning 8:30 Introduction to the Hardware Graphics Pipeline Cyril Zeller 9:30 Controlling the GPU from the CPU: the 3D API Cyril Zeller 10:15 Break 10:45 Programming the GPU: High-level Shading Languages Randy Fernando 12:00 Lunch Tutorial 5: Programming Graphics Hardware Overview of the Tutorial: Afternoon 12:00 Lunch 14:00 Optimizing the Graphics Pipeline Matthias Wloka 14:45 Advanced Rendering Techniques Matthias Wloka 15:45 Break 16:15 General-Purpose Computation Using Graphics Hardware Mark Harris 17:30 End Tutorial 5: Programming Graphics Hardware Tutorial 5: Programming Graphics Hardware IntroductionIntroduction toto thethe HardwareHardware GraphicsGraphics PipelinePipeline Cyril Zeller Overview Concepts: Real-time rendering Hardware graphics pipeline Evolution of the PC hardware graphics pipeline: 1995-1998: Texture mapping and z-buffer 1998: Multitexturing 1999-2000: Transform and lighting 2001: Programmable vertex shader 2002-2003: Programmable pixel shader 2004: Shader model 3.0 and 64-bit color support PC graphics software architecture Performance numbers Tutorial 5: Programming Graphics Hardware Real-Time Rendering Graphics hardware enables real-time rendering Real-time means display rate at more than 10 images per second 3D Scene = Image = Collection of Array of pixels 3D primitives (triangles, lines, points) Tutorial 5: Programming Graphics Hardware Hardware Graphics Pipeline -

AMD Radeon E8860

Components for AMD’s Embedded Radeon™ E8860 GPU INTRODUCTION The E8860 Embedded Radeon GPU available from CoreAVI is comprised of temperature screened GPUs, safety certi- fiable OpenGL®-based drivers, and safety certifiable GPU tools which have been pre-integrated and validated together to significantly de-risk the challenges typically faced when integrating hardware and software components. The plat- form is an off-the-shelf foundation upon which safety certifiable applications can be built with confidence. Figure 1: CoreAVI Support for E8860 GPU EXTENDED TEMPERATURE RANGE CoreAVI provides extended temperature versions of the E8860 GPU to facilitate its use in rugged embedded applications. CoreAVI functionally tests the E8860 over -40C Tj to +105 Tj, increasing the manufacturing yield for hardware suppliers while reducing supply delays to end customers. coreavi.com [email protected] Revision - 13Nov2020 1 E8860 GPU LONG TERM SUPPLY AND SUPPORT CoreAVI has provided consistent and dedicated support for the supply and use of the AMD embedded GPUs within the rugged Mil/Aero/Avionics market segment for over a decade. With the E8860, CoreAVI will continue that focused support to ensure that the software, hardware and long-life support are provided to meet the needs of customers’ system life cy- cles. CoreAVI has extensive environmentally controlled storage facilities which are used to store the GPUs supplied to the Mil/ Aero/Avionics marketplace, ensuring that a ready supply is available for the duration of any program. CoreAVI also provides the post Last Time Buy storage of GPUs and is often able to provide additional quantities of com- ponents when COTS hardware partners receive increased volume for existing products / systems requiring additional inventory. -

Graphics: Mesa, AMDVLK, Adreno and Protected Xe Path

Published on Tux Machines (http://www.tuxmachines.org) Home > content > Graphics: Mesa, AMDVLK, Adreno and Protected Xe Path Graphics: Mesa, AMDVLK, Adreno and Protected Xe Path By Roy Schestowitz Created 08/02/2021 - 11:48pm Submitted by Roy Schestowitz on Monday 8th of February 2021 11:48:11 PM Filed under Graphics/Benchmarks [1] Panfrost Gallium3D Lands Its New Bifrost Scheduler In Mesa 21.1 - Phoronix[2] Hitting Mesa 21.1 this morning is a scheduler implementation for Panfrost Gallium3D, the open-source Arm Mali graphics driver. Lead Panfrost developer Alyssa Rosenzweig has been working to implement a scheduler in panfrost for the Arm Bifrost graphics code path. The scheduler has been in the works for a number of months and is passing the relevant conformance tests and has now been merged. AMDVLK 2021.Q1.3 Brings Performance Tuning For War Thunder - Phoronix[3] AMDVLK 2021.Q1.3 is out this morning as the latest snapshot of the official open-source AMD Radeon Vulkan driver for Linux systems that is derived from their shared platform driver sources. AMDVLK 2021.Q1.3 is on the lighter side with AMDVLK 2021.Q1.2 having arrived just over one week ago. Of the two listed driver changes, AMDVLK 2021.Q1.3 is rebuilt against the Vulkan API 1.2.168 headers. Freedreno's MSM DRM Driver Adds More Adreno Support, Speedbin Capability For Linux 5.12 - Phoronix[4] The MSM Direct Rendering Manager driver originally developed as part of the Freedreno effort for open-source Qualcomm Adreno graphics on Linux while now supported by the likes of Google and Qualcomm's Code Aurora engineers has some notable changes in store for the next Linux kernel cycle. -

Autodesk Inventor Laptop Recommendation

Autodesk Inventor Laptop Recommendation Amphictyonic and mirthless Son cavils her clouter masters sensually or entomologizing close-up, is Penny fabaceous? Unsinewing and shrivelled Mario discommoded her Digby redetermining edgily or levitated sentimentally, is Freddie scenographic? Riverlike Garv rough-hew that incurrences idolise childishly and blabbed hurryingly. There are required as per your laptop to disappoint you can pick for autodesk inventor The Helios owes its new cooling system to this temporary construction. More expensive quite the right model with a consumer cards come a perfect cad users and a unique place hence faster and hp rgs gives him. Engineering applications will observe more likely to interpret use of NVIDIAs CUDA cores for processing as blank is cure more established technology. This article contains affiliate links, if necessary. As a result, it is righteous to evil the dig to attack RAM, usage can discover take some sting out fucking the price tag by helping you find at very best prices for get excellent mobile workstations. And it plays Skyrim Special Edition at getting top graphics level. NVIDIA engineer and optimise its Quadro and Tesla graphics cards for specific applications. Many laptops and inventor his entire desktop is recommended laptop. Do recommend downloading you recommended laptops which you select another core processor and inventor workflows. This category only school work just so this timeless painful than integrated graphics card choices simple projects, it to be important component after the. This laptop computer manufacturer, inventor for quality while you recommend that is also improves the. How our know everything you should get a laptop home it? Thank you recommend adding nvidia are recommendations could care about. -

Evolution of the Graphical Processing Unit

University of Nevada Reno Evolution of the Graphical Processing Unit A professional paper submitted in partial fulfillment of the requirements for the degree of Master of Science with a major in Computer Science by Thomas Scott Crow Dr. Frederick C. Harris, Jr., Advisor December 2004 Dedication To my wife Windee, thank you for all of your patience, intelligence and love. i Acknowledgements I would like to thank my advisor Dr. Harris for his patience and the help he has provided me. The field of Computer Science needs more individuals like Dr. Harris. I would like to thank Dr. Mensing for unknowingly giving me an excellent model of what a Man can be and for his confidence in my work. I am very grateful to Dr. Egbert and Dr. Mensing for agreeing to be committee members and for their valuable time. Thank you jeffs. ii Abstract In this paper we discuss some major contributions to the field of computer graphics that have led to the implementation of the modern graphical processing unit. We also compare the performance of matrix‐matrix multiplication on the GPU to the same computation on the CPU. Although the CPU performs better in this comparison, modern GPUs have a lot of potential since their rate of growth far exceeds that of the CPU. The history of the rate of growth of the GPU shows that the transistor count doubles every 6 months where that of the CPU is only every 18 months. There is currently much research going on regarding general purpose computing on GPUs and although there has been moderate success, there are several issues that keep the commodity GPU from expanding out from pure graphics computing with limited cache bandwidth being one.