Orchestral Maneuvers in Surround Issue 32

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Doctor Atomic

What to Expect from Doctor Atomic

THE WORK: DOCTOR ATOMIC. Music by John Adams. Libretto by Peter Sellars, adapted from original sources. First performed on October 1, 2005, in San Francisco.

NEW PRODUCTION: Alan Gilbert, Conductor; Penny Woolcock, Production; Julian Crouch, Set Designer; Catherine Zuber, Costume Designer; Brian MacDevitt, Lighting Designer; Andrew Dawson, Choreographer; Leo Warner and Mark Grimmer for Fifty Nine Productions, Video Designers; Mark Grey, Sound Designer.

Opera has always dealt with larger-than-life emotions and scenarios. But in recent decades, composers have used the power of opera to investigate society and ethical responsibility on a grander scale. With one of the first American operas of the 21st century, composer John Adams took up just such an investigation. His Doctor Atomic explores a momentous episode in modern history: the invention and detonation of the first atomic bomb. The opera centers on Dr. J. Robert Oppenheimer, the brilliant physicist who oversaw the Manhattan Project, the government project to develop atomic weaponry. Scientists and soldiers were secretly stationed in Los Alamos, New Mexico, for the duration of World War II; Doctor Atomic focuses on the days and hours leading up to the first test of the bomb on July 16, 1945. In his memoir Hallelujah Junction, the American composer writes, “The manipulation of the atom, the unleashing of that formerly inaccessible source of densely concentrated energy, was the great mythological tale of our time.” As with all mythological tales, this one has a complex and fascinating hero at its center. Not just a scientist, Oppenheimer was a supremely cultured man of literature, music, and art. He was conflicted about his creation and exquisitely aware of the potential for devastation he had a hand in designing. -

Press Release: (Pdf Format)

FOR IMMEDIATE RELEASE CONTACT: Robert Cable, Stanford Live 650-736-0091; [email protected] PHOTOS: http://live.stanford.edu/press LOS ANGELES CHILDREN’S CHORUS CELEBRATES AMERICAN SONG AT BING, FEATURING WORLD PREMIERE OF THE PLENTIFUL PEACH BY MARK GREY The April 19 performance will include a guest appearance by the Stanford Chamber Chorale Stanford, CA, March 25, 2015 – The famed Los Angeles Children’s Chorus (LACC), led by Artistic Director Anne Tomlinson, makes its debut at Bing Concert Hall with a program entitled “Celebrating American Song,” featuring the world premiere of The Plentiful Peach by the Palo Alto-raised composer Mark Grey and Bay Area librettist Niloufar Talebi, on Sunday, April 19 at 2:30 p.m. Commissioned by the LACC, the work is adapted from a story by the great Iranian writer, Samad Behrangi (1939-1968). The Plentiful Peach is the coming-of-age story of a peach and the brave children who secretly grow her, against the master’s odds. The LACC also presents the Cherokee hymn Sgwa ti’ni se sdi Yi Howa; Henry Mollicone’s Spanish Ave Maria; Let Us Sing! arranged by Linda Tutas Haugen; and Meir Finkelstein’s L’dor vador arranged by Rebecca Thompson and featuring soloist Madeleine Lew. In addition, the Stanford Chamber Chorale, under the direction of Stephen M. Sano, makes a guest appearance performing separately as well as combining forces with the LACC to perform Randall Thompson’s Pueri Hebraeorum and the American folk song Shenandoah arranged by James Erb. “Los Angeles Children’s Chorus is honored to bring its artistry to Bing Concert Hall and further expand our valued relationship with Stanford University,” says Tomlinson. -

Read Program

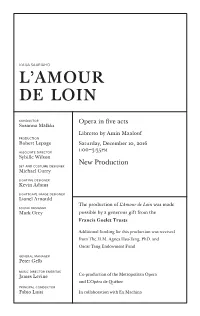

KAIJA SAARIAHO: L’Amour de Loin. Opera in five acts. Libretto by Amin Maalouf. Saturday, December 10, 2016, 1:00–3:35 PM. New Production. Conductor: Susanna Mälkki; production: Robert Lepage; associate director: Sybille Wilson; set and costume designer: Michael Curry; lighting designer: Kevin Adams; lightscape image designer: Lionel Arnould; sound designer: Mark Grey. General manager: Peter Gelb; music director emeritus: James Levine; principal conductor: Fabio Luisi. The production of L’Amour de Loin was made possible by a generous gift from the Francis Goelet Trusts. Additional funding for this production was received from The H.M. Agnes Hsu-Tang, PhD. and Oscar Tang Endowment Fund. Co-production of the Metropolitan Opera and L’Opéra de Québec, in collaboration with Ex Machina. 2016–17 SEASON. The 3rd Metropolitan Opera performance of Kaija Saariaho’s L’Amour de Loin. Conductor: Susanna Mälkki. In order of vocal appearance: Jaufré Rudel, Eric Owens; the Pilgrim, Tamara Mumford*; Clémence, Susanna Phillips. This performance is being broadcast live over The Toll Brothers–Metropolitan Opera International Radio Network, sponsored by Toll Brothers, America’s luxury homebuilder®, with generous long-term support from The Annenberg Foundation, The Neubauer Family Foundation, the Vincent A. Stabile Endowment for Broadcast Media, and contributions from listeners worldwide. There is no Toll Brothers–Metropolitan Opera Quiz in List Hall today. This performance is also being broadcast live on Metropolitan Opera Radio on SiriusXM channel 74. This afternoon’s performance is being transmitted live in high definition to movie theaters worldwide. The Met: Live in HD series is made possible by a generous grant from its founding sponsor, The Neubauer Family Foundation. -

Iolanta Bluebeard's Castle

PETER TCHAIKOVSKY: Iolanta, and BÉLA BARTÓK: Bluebeard’s Castle. Iolanta: Lyric opera in one act. Libretto by Modest Tchaikovsky, based on the play King René’s Daughter by Henrik Hertz. Bluebeard’s Castle: Opera in one act. Libretto by Béla Balázs, after a fairy tale by Charles Perrault. Saturday, February 14, 2015, 12:30–3:45 PM. New Production. Conductor: Valery Gergiev; production: Mariusz Treliński; set designer: Boris Kudlička; costume designer: Marek Adamski; lighting designer: Marc Heinz; choreographer: Tomasz Wygoda; video projection designer: Bartek Macias; sound designer: Mark Grey; dramaturg: Piotr Gruszczyński. General manager: Peter Gelb; music director: James Levine; principal conductor: Fabio Luisi. The productions of Iolanta and Bluebeard’s Castle were made possible by a generous gift from Ambassador and Mrs. Nicholas F. Taubman. Additional funding was received from Mrs. Veronica Atkins; Dr. Magdalena Berenyi, in memory of Dr. Kalman Berenyi; and the National Endowment for the Arts. Co-production of the Metropolitan Opera and Teatr Wielki–Polish National Opera. The 5th Metropolitan Opera performance of Peter Tchaikovsky’s Iolanta. Conductor: Valery Gergiev. In order of vocal appearance: Marta, Mzia Nioradze; Duke Robert, Aleksei Markov; Iolanta, Anna Netrebko; Vaudémont, Piotr Beczala; Brigitte, Katherine Whyte; Laura, Cassandra Zoé Velasco; Bertrand, Matt Boehler; Alméric, Keith Jameson. This performance is being broadcast live over The Toll Brothers–Metropolitan Opera International Radio Network, sponsored by Toll Brothers, America’s luxury homebuilder®, with generous long-term support from The Annenberg Foundation, The Neubauer Family Foundation, the Vincent A. Stabile Endowment for Broadcast Media, and contributions from listeners worldwide. There is no Toll Brothers–Metropolitan Opera Quiz in List Hall today. -

William Dazeley Baritone

William Dazeley Baritone William Dazeley is a graduate of Jesus College, Cambridge. He studied singing at the Guildhall School of Music and Drama, where he received several notable prizes including the prestigious Gold Medal, the Decca/Kathleen Ferrier Prize and the Richard Tauber Prize and won such competitions as the Royal Overseas League Singing Competition and the Walther Grüner International Lieder Competition. Engagements in the current season include MARCELLO La Boheme for Grange Park Opera, the world premiere of LIKE FLESH for the Opera de Lille and MUSIKLEHRER Ariadne auf Naxos for the Opera de Montpellier. Most recently, William sang DON ALFONSO Così fan tutte for the Royal Danish Opera, FATHER Hansel & Gretel in his company debut for Grange Park Opera, PROSECUTOR Frankenstein in the world premiere of Mark Grey’s opera Frankenstein at La Monnaie, COUNT Capriccio for Garsington Opera and PANDOLFE Cendrillon for Glyndebourne on Tour for whom he also appeared as CLAUDIUS in Brett Dean’s new award winning Hamlet. William has appeared in many of the world’s opera houses, where roles have included COUNT Cherubin, MARCELLO La Bohème, GUGLIELMO Così fan tutte, ANTHONY Sweeney Todd, YELETSKY Queen of Spades, MERCUTIO Romeo et Juliette and FIGARO Il Barbiere di Siviglia Royal Opera House, Covent Garden, PAPAGENO The Magic Flute English National Opera, ALFONSO Così fan tutte, Yeletsky, TITLE ROLE Don Giovanni, POSA Don Carlos, FANINAL Der Rosenkavalier, DANILO The Merry Widow, MR GEDGE Albert Herring Opera North, L’AMI The Fall of the -

Doctor Atomic Page 1 of 2 Opera Assn

San Francisco War Memorial 2005-2006 Doctor Atomic Page 1 of 2 Opera Assn. Opera House World Premiere Commission and Production Sponsorship made possible by Roberta Bialek Doctor Atomic (in English) Opera in two acts by John Adams Libretto by Peter Sellars Based on original sources Commissioned by San Francisco Opera Additional sponsorship: Jane Bernstein and Bob Ellis; Richard N. Goldman; Leslie & George Hume; The Flora C. Thornton Foundation; The Wallace Alexander Gerbode Foundation; An Anonymous Donor. Additional support: The National Endowment for the Arts; The Opera Fund, a program of OPERA America. Conductor CAST Donald Runnicles Edward Teller Richard Paul Fink* Director J. Robert Oppenheimer Gerald Finley* Peter Sellars Robert Wilson Thomas Glenn* Set designer Kitty Oppenheimer Kristine Jepson* Adrianne Lobel General Leslie Groves Eric Owens* Costume Designer Jack Hubbard James Maddalena* Dunya Ramicova Captain James Nolan Jay Hunter Morris* Lighting Designer Pasqualita Beth Clayton* James F. Ingalls Peter Oppenheimer Seth Durant* Choreographer Cloud Flower Blossom Bliss Dowman* Lucinda Childs Cynthia Drayer* Sound Designer Brook Broughton* Mark Grey Katherine Wells* Musical Preparation Adelle Eslinger Carol Isaac *Role debut †U.S. opera debut Donato Cabrera PLACE AND TIME: (Specified in scene breakdown) Paul Harris Ernest Fredric Knell Svetlana Gorzhevskaya Chorus Director Ian Robertson Assistant Stage Director Kathleen Belcher Elizabeth Burgess Stage Manager Lisa Anderson Dance Master Lawrence Pech Dance Captain Hope Mohr Costume -

MUSIC of CONSCIENCE May 22–June 8, 2019

FOR IMMEDIATE RELEASE Updated: May 20, 2019 April 25, 2019 Contact: Deirdre Roddin (212) 875-5700; [email protected] MUSIC OF CONSCIENCE May 22–June 8, 2019 MUSIC DIRECTOR JAAP VAN ZWEDEN To Conduct WORLD PREMIERE–Philharmonic Co-Commission of DAVID LANG’s prisoner of the state John CORIGLIANO’s Symphony No. 1 SHOSTAKOVICH’s Chamber Symphony and BEETHOVEN’s Symphony No. 3, Eroica NIGHTCAP: Curated by John Corigliano Kravis Nightcap Series at the Stanley H. Kaplan Penthouse Hosted by Nadia Sirota; Performances by New York Philharmonic Musicians SOUND ON: “Response” GROW @ Annenberg Sound ON Series at The Appel Room, Jazz at Lincoln Center World Premiere–Philharmonic Commission by Gabriella Smith Performed by New York Philharmonic Musicians FREE PUBLIC DISCUSSIONS AND PERFORMANCES LGBT Community Center and Insights at the Atrium PANELS FROM THE AIDS MEMORIAL QUILT TO BE DISPLAYED NEW YORK PHILHARMONIC ARCHIVES EXHIBIT The New York Philharmonic will conclude the 2018–19 subscription season with Music of Conscience, May 22–June 8, 2019, three weeks of concerts and events exploring the ways in which composers have used music to respond to the social and political issues of their times. Music Director Jaap van Zweden will conduct all three orchestral programs: the World Premiere of David Lang’s opera prisoner of the state, a retelling of Beethoven’s Fidelio; John Corigliano’s Symphony No. 1, his “personal response to the AIDS crisis”; and Shostakovich’s Chamber Symphony, about his struggles under Stalin, alongside Beethoven’s Eroica Symphony, originally dedicated to Napoleon until the composer angrily redacted the inscription. Music of Conscience will also feature two new-music programs, an archival exhibit, panels from the AIDS Memorial Quilt on display, and free public discussions and performances. -

World Premiere of Deep Blue Sea by Bill T. Jones

World Premiere of Deep Blue Sea by Bill T. Jones, Featuring the Renowned Choreographer in Performance with 100 Dancers and Community Members, Opens at Park Avenue Armory this April New Armory Commission Features Visual Environment Designed by Elizabeth Diller and Peter Nigrini April 14 – 25, 2020 Bill T. Jones, work-in-progress performance of Deep Blue Sea at LUMBERYARD, 2019. Photo: Maria Baranova New York, NY – February 4, 2020 – This April, renowned director, choreographer, and dancer Bill T. Jones presents and performs in the world premiere of his monumental new work, Deep Blue Sea, at Park Avenue Armory, marking Jones’ first time performing in fifteen years. Using deconstructed texts from Martin Luther King Jr.’s “I Have a Dream” and Herman Melville’s Moby Dick, the highly personal work explores the interplay of single and group identities and the pursuit of the elusive “we” during fractious times. The work commences with a solo by Jones and builds into a collective performance featuring 100 community members and dancers led by Jones and the Bill T. Jones/Arnie Zane Company. Conceived for the Armory’s Wade Thompson Drill Hall, Deep Blue Sea magnifies the vast space through a design by Elizabeth Diller of the renowned architectural firm Diller Scofidio + Renfro and projections by Tony Award-nominated projection designer Peter Nigrini (Beetlejuice, Fela!), with a sonic backdrop created by composer Nick Hallett and music producer Hprizm aka High Priest with Holland Andrews. Deep Blue Sea is commissioned by the Armory and Manchester International Festival in collaboration with the Holland Festival, and produced and developed in collaboration with New York Live Arts. -

Dracula: the Music and Film

October 1999 Brooklyn Academy of Music 1999 Next Wave Festival BAMcinematek Brooklyn Philharmonic 651 ARTS Jennifer Bartlett, House: Large Grid, 1998 BAM Next Wave Festival sponsored by PHILIP MORRIS COMPANIES INC. Brooklyn Academy of Music: Bruce C. Ratner, Chairman of the Board; Karen Brooks Hopkins, President; Joseph V. Melillo, Executive Producer; presents in association with Universal Pictures Dracula: the Music and Film. BAM Opera House, October 26 and 27, 1999, at 7:30 p.m. Running time: approximately 1 hour and 25 minutes, with no intermission. Original Music: Philip Glass. Performed by Philip Glass and Kronos Quartet: Violin David Harrington; Violin John Sherba; Viola Hank Dutt; Cello Jennifer Culp. Conductor Michael Riesman; Music Production Kurt Munkasci; Scenery and Lighting Designer John Michael Deegan; Sound Designer Mark Grey; Producer Linda Greenberg; Tour Management Pomegranate Arts. © Dunvagen Music Publishers, Inc. Dracula: The Music and Film has been made possible with the generous support of Universal Family & Home Entertainment Productions, and Universal Studios Home Video. Technical support for the development of Dracula: The Music and Film was provided by The John Harms Center for the Arts, Englewood, New Jersey. Film sound equipment donated by Dolby Laboratories, Inc. Additional loud speakers provided by Meyer Sound Laboratories, Berkeley, California. Production Manager Doug Witney; Audio Engineer Mark Grey; Production Stage Manager, Lighting Supervisor Larry Neff; Company Manager Carol Patella; Music Production Euphorbia Productions; Stylist Kasia Walicka Maimone; Assistant Stylist Stacy Saltzman; Press Representation Annie Ohayon and Reyna Mastrosimone, Annie Ohayon Media (New York, New York). Kronos Quartet: Managing Director Janet Cowperthwaite; Associate Director Laird Rodet; Technical Director Larry Neff; Business Manager Sandie Schaaf; Office Manager Leslie Mainer; Assistant to Managing Director Ave Maria Hackett; Recording Projects Coordinator Sidney Chen PO. -

Echoes of the Avant-Garde in American Minimalist Opera

ECHOES OF THE AVANT-GARDE IN AMERICAN MINIMALIST OPERA Ryan Scott Ebright A dissertation submitted to the faculty at the University of North Carolina at Chapel Hill in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the Department of Music. Chapel Hill 2014 Approved by: Mark Katz, Tim Carter, Brigid Cohen, Annegret Fauser, Philip Rupprecht © 2014 Ryan Scott Ebright ALL RIGHTS RESERVED ABSTRACT Ryan Scott Ebright: Echoes of the Avant-garde in American Minimalist Opera (Under the direction of Mark Katz) The closing decades of the twentieth century witnessed a resurgence of American opera, led in large part by the popular and critical success of minimalism. Based on repetitive musical structures, minimalism emerged out of the fervid artistic intermingling of mid-twentieth-century American avant-garde communities, where music, film, dance, theater, technology, and the visual arts converged. Within opera, minimalism has been transformational, bringing a new, accessible musical language and an avant-garde aesthetic of experimentation and politicization. Thus, minimalism’s influence invites a reappraisal of how opera has been and continues to be defined and experienced at the turn of the twenty-first century. “Echoes of the Avant-garde in American Minimalist Opera” offers a critical history of this subgenre through case studies of Philip Glass’s Satyagraha (1980), Steve Reich’s The Cave (1993), and John Adams’s Doctor Atomic (2005). This project employs oral history and archival research as well as musical, dramatic, and dramaturgical analyses to investigate three interconnected lines of inquiry. The first traces the roots of these operas to the aesthetics and practices of the American avant-garde communities with which these composers collaborated early in their careers. -

Los Angeles Master Chorale

FOR IMMEDIATE USE Press contact: Libby Huebner (562) 799-6055 [email protected] LOS ANGELES MASTER CHORALE PRESENTS WORLD PREMIERE OF MARK GREY’S MUGUNGHWA: ROSE OF SHARON FEATURING VIOLINIST JENNIFER KOH AS PART OF CHOIR’S ACCLAIMED “LA IS THE WORLD” INITIATIVE HIGHLIGHTING CITY’S VIBRANT MULTI-CULTURAL INFLUENCES All-Korean Program, “Stories from Korea,” Conducted by Music Director Grant Gershon, also Includes Hyowon Woo’s Me-Na-Ri, and Beloved Korean Classic Arirang Fantasie Sunday, March 6, 2011, 7 PM, at Walt Disney Concert Hall Kogi Korean BBQ Truck Rolls up to Disney Hall Prior to Performance from 4-7 PM Renowned violinist Jennifer Koh joins Gershon and the Los Angeles Master Chorale to perform the world premiere of a piece by Mark Grey, named one of the Los Angeles Times “2008 Faces to Watch,” Sunday, March 6, 2011, 7 p.m., at Disney Hall. The new work, Mugunghwa: Rose of Sharon, with text based on the extraordinary verses of Korean poet and engineer Namsoo Kim, who fled from a North Korean prison at the outbreak of the Korean War, was commissioned by the Chorale and is the fourth piece to be premiered by the choir as part of its notable “LA Is the World” initiative, which was launched in 2007. This installment begins phase two of the initiative, conceived by Gershon as a collaboration among composers, master musicians and the choir to expand the choral literature with works that mirror LA’s vibrant multi-cultural fabric. Also on the all-Korean program, entitled “Stories from Korea,” is a stunning piece for three choirs entitled Me-Na-Ri by Hyowon Woo, who is the composer-in-residence of the world-renowned Incheon City Chorale and is recognized as one of the most brilliant young Korean composers on the scene today. -

Available Light

Friday and Saturday, February 3–4, 2017, 8pm Zellerbach Hall Available Light Music Choreography Stage Design John Adams Lucinda Childs Frank O. Gehry Lighting Design Costume Design Sound Design Beverly Emmons Kasia Walicka Maimone Mark Grey John Torres Performed by Lucinda Childs Dance Company Katie Dorn, Katherine Helen Fisher, Sarah Hillmon, Anne Lewis, Sharon Milanese, Benny Olk, Patrick John O’Neill, Matt Pardo, Lonnie Poupard Jr., Caitlin Scranton, Shakirah Stewart Produced by Pomegranate Arts Linda Brumbach, executive producer The revival of Available Light was commissioned by Cal Performances, University of California, Berkeley; Festspielhaus St. Pölten; FringeArts, Philadelphia, with the support of The Pew Center for Arts & Heritage; Glorya Kaufman Presents Dance at the Music Center and The Los Angeles Philharmonic Association; International Summer Festival Kampnagel, Hamburg; Onassis Cultural Centre – Athens; Tanz Im August, Berlin; and Théâtre de la Ville – Paris and Festival d’Automne à Paris. The revival of Available Light was developed at MASS MoCa (Massachusetts Museum of Contemporary Art). Light Over Water by John Adams is used by arrangement with Hendon Music, Inc., a Boosey & Hawkes company, publisher and copyright owner. This evening’s performance will be performed without intermission and will last approximately 55 minutes. This performance is made possible, in part, by Patron Sponsors Gail and Daniel Rubinfeld, and Patrick McCabe. PROGRAM NOTES Production History: The Museum of Contemporary Art in Los Angeles originally commissioned Available Light in 1983 during a period when the museum was under construction and working in site-specific locations. John Adams (composer): Composer, conductor, and creative thinker, John Adams occupies a unique position in the world of American music. His works, both operatic and symphonic, stand out among con -