Recommend Songs with Data from Spotify Using Spectral Clustering


Recommended publications


Classic Albums: The Berlin/Germany Edition

Course Title: Classic Albums: The Berlin/Germany Edition

Course Number: REMU-UT 9817 D01, Spring 2019

Syllabus last updated on: 23-Dec-2018

Lecturer Contact Information

Course Details: Wednesdays, 6:15pm to 7:30pm (14 weeks)

Location: NYU Berlin Academic Center, Room BLAC 101

Prerequisites: No prerequisites

Units earned: 2 credits

Course Description: A classic album is one that has been deemed by many, or even just by a select influential few, to be a standard-bearer within or outside its genre. In this class, a companion to the Classic Albums class offered in New York, we will look at and listen to a selection of classic albums recorded in Berlin, or in Germany more broadly. We will also examine how the city and country shaped them, from David Bowie's famous Berlin trilogy of 1977–79 to Ricardo Villalobos' minimal house masterpiece Alcachofa. We will deconstruct the music and production of these albums, place them in their full social and political context, and explore the range of reasons why they have garnered classic status. Artists, producers and engineers involved in the making of these albums will be invited to discuss their seminal works with the students. Along the way, we will also consider the history of German electronic music. In particular, we will look at how electronic music developed in Germany before the advent of house and techno in the late 1980s. We will also look at the arrival of techno, a new musical movement, and new technology in Berlin and Germany in the turbulent years after the Fall of the Berlin Wall in 1989, up to the present.

Study Buddy: Where the Music Lives

Rhythm, Blues and Clues. Michael Presser, Executive Director, presents…

Searchin: Help the musical note find its home. Word list: ALBUM, BLUES, BROADWAY, CASSETTE, DION, ELVIS, GUITAR, IPOD, PHONOGRAPH, RADIO, RECORD, RHYTHM, ROCK, ROLL.

Our Mission: Inside Broadway is a professional New York City based children’s theatre company committed to producing Broadway’s classic musicals in a contemporary light for young audiences. 630 Ninth Avenue, Suite 802, New York, NY 10036. Telephone: 212-245-0710.

New Campus Phone System to Be Installed

New student health store provides birth control (page 2). Delaware bounces back at Boston (page 15).

Student demonstrators condemn Columbus' 'discovery'

By Donna Murphy and Lori Salotto, News Editors

Yesterday, about 20 members of the Student Environmental Action Coalition (SEAC) staged brief demonstrations around campus, denouncing Columbus Day. The protestors marched to a melancholy drum beat across campus, dressed as trees, natives and oppressors, reenacting what they believed to be the initial interactions between Europeans and original Americans.

…that Columbus did not discover America but instead opened the way for the devastation of native American culture and environment.

"Their culture goes with the land hand in hand," she added. "The land was their culture; their spirit and soul."

Mark Glyde (AS SR), another member of SEAC, said the holiday represents 499 years of destruction to native cultures. "The United States has broken every treaty we ever made with the indigenous people of this land," he said. This is also true for recent treaties between the white government and existing tribes, he said.

"We want to make people aware that Native Americans continue to struggle for their rights," Glyde said.

"In Columbus' log," Glyde said, "he notes how friendly the people he encountered in this land were and how easy it would be to enslave them."

…Columbus Day is not a day of pride, but one of shame."

Jack Ellis, chairman of the history department, said, "The real issue is not who discovered America, but the impact of the voyage." The collision of native American and Western cultures had a devastating impact on the biological, economical, social and political aspects of the nation, he said.

"What many refer to as the Age of Discovery was in fact the Age of Collision, an era of confrontation between cultures and continents from which neither the Old nor the New World ever recovered." (William Graves, editor of National Geographic magazine)

For years, school history books portrayed Christopher Columbus as a cross-continental hero.

Historical Society of the Upper Mojave Desert Received the Honor Because of the Successful Work Our Volunteers Did to Renovate Our Historic USO Building

Historical Society of the Upper Mojave Desert, 230 W. Ridgecrest Blvd. • P. O. Box 2001, Ridgecrest, CA 93556 • 760-375-8456

Vol. 29 No. 6, June 2014. To see our schedule of events, visit us at www.hsumd.org or on Facebook at hsumd.

Bring Photos, Share Stories on June 17

Come enjoy an evening of nostalgia on Tuesday, June 17, at 7 p.m. at the Historic USO Building, 230 W. Ridgecrest Blvd. The meeting will feature an ice cream social and viewing of historic local pictures. HSUMD members and the public are invited to bring their own pictures to share. We last had a similar social in 2010, and it brought forth many interesting pictures, including those shown here from Don Snyder and Gene Schneider. So please look in your collection of photos and bring those showing interesting people, events and buildings of the local area. See p. 3.

Photo captions: Volunteers help at the opening of the new Maturango Museum in Ridgecrest, 1986 (Gene Schneider collection). Don Snyder is the little blonde boy astride a pony, circa 1954; in the background you can see B Mountain, complete with “B.” (Don Snyder collection)

Field Trip to Cerro Gordo: June 14

For the last field trip before summer, we’ll return to Cerro Gordo on June 14. Lots of exciting things have been happening up on “Fat Hill,” and it’s time to get caught up. The Hoist House has been rebuilt and is functional again, and we’ve been promised a tour! Robert, the caretaker, has been busy restoring other buildings. The Crapo House is now open as a small gift shop, visitors center, and antique shop, which has both of Cecile Vargo’s books for sale if you don’t already have them.

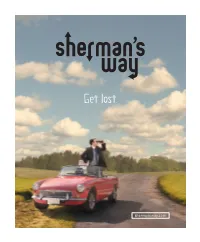

SW Press Booklet PRINT3

STARRY NIGHT ENTERTAINMENT presents, starring JAMES LE GROS (“The Last Winter,” “Drugstore Cowboy”), ENRICO COLANTONI (“Just Shoot Me,” “Galaxy Quest”), MICHAEL SHULMAN (“Can of Worms,” “Little Man Tate”) and BROOKE NEVIN (“The Comebacks,” “Infestation”), with DONNA MURPHY (“The Nanny Diaries”), THOMAS IAN NICHOLAS (“American Pie Trilogy”) and LACEY CHABERT (“Mean Girls”). Edited by CHRISTOPHER GAY; cinematography by JOAQUIN SEDILLO; written by TOM NANCE; directed by CRAIG SAAVEDRA. Copyright Sherman’s Way, LLC. All Rights Reserved.

SHERMAN’S WAY

Sherman’s Way starts with two strangers forced into a road trip of convenience, only to veer off the path into a quirky exploration of friendship, fatherhood and the annoying task of finding one’s place in the world, a world in which one wrong turn can change your destination.

The discord begins when Sherman (Michael Shulman), a young, uptight Ivy Leaguer, finds himself stranded on the West Coast with Palmer (James Le Gros), an eccentric stranger and washed-up, middle-aged former athlete. Sherman is trying to make it down to Beverly Hills in time for a career-making internship at a prestigious law firm. The two couldn't be more incompatible. Palmer is a reckless charmer with a zest for life; Sherman is an arrogant snob with a sense of entitlement. Palmer is content reliving his past; Sherman is focused solely on his future. Neither is really living in the present. The only thing this odd couple seems to share is a refusal to accept responsibility for their lives.

Director Craig Saavedra brings his witty sensibility to this poignant look at fatherhood and friendship. The film features indie stalwart James Le Gros in an unapologetic performance that manages to bring undeniable charm to an otherwise abrasive character.

The Wiki Music Dataset: A Tool for Computational Analysis of Popular Music

Fabio Celli, Profilio Company s.r.l., via sommarive 18, 38123 Trento, Italy. Email: fabio@profilio.co

Abstract: Is it possible to use algorithms to find trends in the history of popular music? And is it possible to predict the characteristics of future music genres? In order to answer these questions, we produced a hand-crafted dataset with the intent to put together features about style, psychology, sociology and typology, annotated by music genre and indexed by time and decade. We collected a list of popular genres by decade from Wikipedia and scored music genres based on Wikipedia descriptions. Using statistical and machine learning techniques, we find trends in the musical preferences and use time series forecasting to evaluate the prediction of future music genres.

Keywords: Popular Music, Computational Music analysis, Wikipedia, Natural Language Processing, dataset

I. …

…harmonic and timbral properties that brought changes in music sound around 1964, 1983 and 1991 [14]. Besides these research fields, there is a trend in the psychology of music that studies how musical preferences are reflected in the dimensions of personality [11]. From this kind of research emerged the MUSIC model [20], which found that genre preferences can be decomposed into five factors: Mellow (relaxed, slow, and romantic), Unpretentious (easy, soft, well-known), Sophisticated (complex, intelligent or avant-garde), Intense (loud, aggressive, and tense) and Contemporary (catchy, rhythmic or danceable). Is it possible to find trends in the characteristics of the genres? And is it possible to predict the characteristics of future genres? To answer these questions, we produced a hand-crafted dataset with the intent to put together MUSIC, style and sonic features, …

Is Rock Music in Decline? A Business Perspective

Jose Dailos Cabrera Laasanen. Helsinki Metropolia University of Applied Sciences, Bachelor of Business Administration, International Business and Logistics. 1405484, 22nd March 2018.

Abstract

Author(s): Jose Dailos Cabrera Laasanen

Title: Is Rock Music in Decline? A Business Perspective

Number of Pages: 45

Date: 22.03.2018

Degree: Bachelor of Business Administration

Degree Programme: International Business and Logistics

Instructor(s): Michael Keaney, Senior Lecturer

Rock music has great importance in the recent history of humankind, and it is interesting to understand the reasons for its decline, if that decline actually exists. Its legacy will never disappear, and it will always be a great influence on new artists. Even so, it is important to find out why the genre has become what it is now and what future can be expected for it. This project focuses on the analysis of some important business aspects related to rock music and its decline, if it exists. The collapse of Gibson guitars will be analyzed, because if rock music is in decline, then the collapse of Gibson is good evidence of this. The performance of independent and major record labels through history will also be analyzed to better understand the health of the genre. The same applies to music festivals, which today seem to be increasing in popularity at the expense of smaller types of live-music events.

Keywords: Rock, music, legacy, influence, artists, reasons, expected, future, genre, analysis, business, collapse, …

Crossroads Film and Television Program List

This resource list will help expand your programmatic options for the Crossroads exhibition. Work with your local library, schools, and daycare centers to introduce age-appropriate books that focus on themes featured in the exhibition. Help libraries and bookstores to host book clubs, discussion programs or other learning opportunities, or develop a display with books on the subject. This list is not exhaustive or even all-encompassing; it will simply get you started.

Rural themes appeared in feature-length films from the beginning of silent movies. The subject matter appealed to audiences, many of whom had relatives or direct experience with life in rural America. Historian Hal Barron explores rural melodrama in “Rural America on the Silent Screen,” Agricultural History 80 (Fall 2006), pp. 383–410. Over the decades, film and television series dramatized, romanticized, sensationalized, and even trivialized rural life, landscapes and experiences. Audiences remained loyal, tuning in to series syndicated on non-network channels. Rural themes still appear in films and series, and treatments of the subject matter range from realistic to sensational.

FEATURE LENGTH FILMS

The following films are listed alphabetically and by Crossroads exhibit theme. Each film can be a basis for discussions of topics relevant to your state or community. Selected films are those that critics found compelling and that remain accessible.

Identity

Bridges of Madison County (1995): In rural Iowa in 1965, Italian war bride Francesca Johnson begins to question her future when National Geographic photographer Robert Kincaid pulls into her farm to ask for directions to Roseman Bridge while her husband and children are away at the state fair.

Tony Award Winner Laura Benanti Returns to Feinstein’s at the Nikko with Tales from Soprano Isle

FOR IMMEDIATE RELEASE

Media Contact: Kevin Kopjak | Charles Zukow Associates | 415.296.0677 | [email protected]

TONY AWARD WINNER LAURA BENANTI RETURNS TO FEINSTEIN’S AT THE NIKKO WITH TALES FROM SOPRANO ISLE

THREE PERFORMANCES ONLY: JULY 29–31, 2016

SAN FRANCISCO – Fresh off her Tony Award-nominated role in Roundabout Theatre Company’s critically acclaimed Broadway revival of She Loves Me, Tony Award winner Laura Benanti returns to Feinstein’s at the Nikko with her new show, Tales from Soprano Isle. There will be three performances only: Friday, July 29 (8 p.m.), Saturday, July 30 (7 p.m.) and Sunday, July 31 (3 p.m.). In this humorous evening, Benanti will perform songs from throughout her celebrated career, as well as share stories and personal anecdotes from her life on and off the stage!

Tickets for Laura Benanti range in price from $75 to $95 and are available now by calling 866.663.1063 or visiting www.feinsteinsatthenikko.com.

Tony Award winner Laura Benanti can currently be seen starring opposite Zachary Levi in Roundabout Theatre Company’s critically acclaimed Broadway revival of She Loves Me. For this role she has received a 2016 Tony Award nomination, a Drama Desk Award nomination, an Outer Critics Circle Award nomination and a Drama League Distinguished Performance Award nomination. On TV, she most recently portrayed Alura Zor-El in the CBS action-drama “Supergirl.” In 2014, she played songbird Sadie Stone in ABC’s hit drama “Nashville” and also appeared in recurring arcs on CBS's “The Good Wife” and HBO's “Nurse Jackie.” In addition to her television work and her highly praised performance as Elsa Schrader in NBC's “The Sound of Music LIVE,” Ms. …

Popular Music in Germany: The History of Electronic Music in Germany

Course Title: Popular Music in Germany: The History of Electronic Music in Germany

Course Number: REMU-UT.9811001 (Sample Syllabus)

Instructors’ Contact Information: Heiko Hoffmann, [email protected]

Course Details: Wednesdays, 6:15pm to 7:30pm

Location of class: NYU Berlin Academic Center, Room “Prenzlauer Berg” (tbc)

Prerequisites: No prerequisites

Units earned: 2 credits

Course Description: From Karlheinz Stockhausen and Kraftwerk to Giorgio Moroder, D.A.F. and the Euro Dance of Snap!, the first nine weeks of class consider the history of German electronic music prior to the Fall of the Wall in 1989. We will particularly look at how electronic music developed in Germany before the advent of house and techno in the late 1980s. One focus will be on regional scenes. These include the Düsseldorf school of electronic music in the 1970s, with groups such as Cluster, Neu! and Can; the Berlin school of synthesizer pioneers like Tangerine Dream, Klaus Schulze and Manuel Göttsching; and Giorgio Moroder's Sound of Munich. Students will be expected to competently identify key musicians and recordings of this creative period. The second half of the course looks more specifically at the arrival of techno, a new musical movement, and new technology in Berlin and Germany in the turbulent years after the Fall of the Berlin Wall, up to the present. Indeed, post-Wall East Berlin, full of abandoned spaces and buildings and deserted office blocks, was the perfect breeding ground for the youth culture that would dominate the 1990s. It led techno pioneers and artists from the East and the West to take over and set up shop.

Khelt with Head Office in Edmonton, Alta., of Any Trust Company in the Prov- Be Looking Forward to the Announce- Nized

THE JEWISH POST, Thursday, October 1, 1964, Pages Ten and Eleven

The Shaarey Tzedec Men's Club will hold a men's stag Wednesday, Oct. 7, at 6:30 p.m. in the Hall. Rabbi Benjamin Eisenberg, new spiritual leader of the congregation, will be guest speaker. The membership fee of $5 includes all refreshments.

… home of Mr. and Mrs. Leo Paperny, 1021 Hillcrest, to open the total Youth welfare campaign of the I. L. Peretz School. Co-chairmen for the 1964-65 campaign are A. Pearlman and Leon Krygier. Committee chairmen are: social, Mrs. Dave Berger; phoning, S. Pearlman; invitation, Mrs. A. Gold; publicity, Lou Pearlman and Mrs. …

… funds raised through the United Jewish Appeal. Many agencies studied are outside the sphere of financial support by Jewish Welfare or UJA monies. They are included in order to provide an over-all picture of the community's resources. In the past, members have toured …

By TANYA GELFAND (Mrs. Gelfand will be pleased to accept organizational or local news and personal notes for inclusion in this column. Her phone number is …V 9-2166.)

The Sha'arey Tzedec Sisterhood held its membership tea in the …

“Post”-Marked HOLLYWOOD, BARNEY GLAZER'S …

Advertisement text (fragments): Birthday and Wedding Cakes at Their Best; “Made to Order”; Belgian Pastry Shop; French Pastries Our Specialty; Rother's Fine Furniture; Superb Styling; Re-Upholstering and Re-Building by Expert Craftsmen; Free Estimates; Phone 772-2181, Evenings JU 6-6782; 498 Portage Avenue; 1101 Corydon Ave.; Calgary.

Morgan Woodward ’48

The University of Texas at Arlington

Morgan Woodward (AA ’48) has probably been shot more than any other UT Arlington alumnus. Chances are you’ve seen him shot in episodes of “Gunsmoke,” “Bonanza,” “Wagon Train,” or many of the other 250 television shows and movies he appeared in from 1956 to 1997. Woodward, 84, usually played bad guys. “I was 6’3”, 221 pounds … bigger than practically everybody else,” Woodward said, “and with a face that is not really all that pleasant.”

Woodward’s best-known role came in Cool Hand Luke (1967), in which he played Boss Godfrey, the silent, stone-faced guard behind the mirrored sunglasses who personified evil. Woodward’s performance was lauded by critics, his salary doubled, and in 1969 Newsweek named him one of the six most wanted bad guys in Hollywood.

… Hutch,” “The Waltons,” “Fantasy Island,” “Hill Street Blues,” “The Dukes of Hazzard,” “The X-Files,” and seven seasons as Marvin Anderson on “Dallas.” Woodward retired in 1997.

By all accounts Woodward’s career in Hollywood was a success, although what he really wanted to be was an opera singer. During lean times in Hollywood, Woodward even wondered if he might make a better living as a watchmaker. After winning lifetime achievement awards and the prestigious Golden Boot Award, however, he appears satisfied with his body of work.

Last year Woodward decided to give something back. He established the Morgan Woodward Distinguished Professorship in Film and Video with a $250,000 gift that will be matched by the University’s …