Mozfest Is One Part of a Much Larger Movement — a Global Community of Coders, Activists, Researchers, and Artists Working to Make the Internet a Healthier Place

Recommended publications

It Quarter N. 2 2016

Year 4, number 2, September 2016. ISSN 2284-0001. IN THIS ISSUE. 3 FIRST PAGE: Food and agri-food sector: the Observatory is online. 7 STATISTICS: Growth of new registrations; Annual growth; Top regions; Entity types. 9 .IT PARADE: School/work programme: students at CNR; DNSSEC; Academy 2016; IF2016 is coming; Ludoteca .it: school year 2015/2016; Researchers’ Night 2016; Ludoteca’s presentations. 13 HIGHLIGHTS: Small and medium enterprises on the Net. 14 FOCUS: The new Registrars’ contract. 16 NEWS FROM ABROAD: CENTR and Net Neutrality; Court of justice: “data retention”; Court of justice: IP addresses; The IANA transition; Root Zone Maintainer Agreement; Post-transition IANA. 19 EVENTS: RIPE; ICANN; IETF; CENTR; IGF 2016; IF 2016. EDITORIAL COORDINATOR: Anna Vaccarelli. EDITORIAL COMMITTEE: Maurizio Martinelli, Rita Rossi, Anna Vaccarelli, Daniele Vannozzi. GRAPHIC AND PAGING: Giuliano Kraft, Francesco Gianetti. PHOTO CREDITS: Fotolia (www.fotolia.it), Francesco Gianetti. EDITORIAL BOARD: Francesca Nicolini (editorial coordinator), Giorgia Bassi, Arianna Del Soldato, Stefania Fabbri, Beatrice Lami, Adriana Lazzaroni, Maurizio Martinelli, Rita Rossi, Gian Mario Scanu, Gino Silvatici, Chiara Spinelli. DATA SOURCE: Unità sistemi e sviluppo tecnologico del Registro .it. DATA PROCESSING: Lorenzo Luconi Trombacchi. EDITED BY: Unità relazioni esterne, media, comunicazione e marketing del Registro .it, Via G. Moruzzi, 1, I-56124 Pisa, tel. +39 050 313 98 11, fax +39 050 315 27 13, e-mail: [email protected], website: http://www.registro.it/. HEAD OF .IT REGISTRY: Domenico Laforenza. FIRST PAGE: Food and agri-food sector: the Observatory is online. Maurizio Martinelli. The food and agri-food industry is one of the pillars of the Italian economy. -

Romanian Political Science Review Vol. XXI, No. 1 2021

Romanian Political Science Review vol. XXI, no. 1 2021 The end of the Cold War, and the extinction of communism both as an ideology and a practice of government, not only have made possible an unparalleled experiment in building a democratic order in Central and Eastern Europe, but have opened up a most extraordinary intellectual opportunity: to understand, compare and eventually appraise what had previously been neither understandable nor comparable. Studia Politica. Romanian Political Science Review was established in the realization that the problems and concerns of both new and old democracies are beginning to converge. The journal fosters the work of the first generations of Romanian political scientists permeated by a sense of critical engagement with European and American intellectual and political traditions that inspired and explained the modern notions of democracy, pluralism, political liberty, individual freedom, and civil rights. Believing that ideas do matter, the Editors share a common commitment as intellectuals and scholars to try to shed light on the major political problems facing Romania, a country that has recently undergone unprecedented political and social changes. They think of Studia Politica. Romanian Political Science Review as a challenge and a mandate to be involved in scholarly issues of fundamental importance, related not only to the democratization of Romanian polity and politics, to the “great transformation” that is taking place in Central and Eastern Europe, but also to the make-over of the assumptions and prospects of their discipline. They hope to be joined in by those scholars in other countries who feel that the demise of communism calls for a new political science able to reassess the very foundations of democratic ideals and procedures. -

Jihočeská Univerzita V Českých Budějovicích

Jihočeská univerzita v Českých Budějovicích (University of South Bohemia in České Budějovice), Faculty of Arts, Institute of Czech-German Area Studies and German Studies. Bachelor's thesis: The Czech Pirate Party and the Pirate Party Germany and the 2019 European Parliament Elections. Supervisor: PhDr. Miroslav Šepták, Ph.D. Author: Viktorie Tichánková. Field of study: Czech-German Area Studies. Year of study: 3. 2020. I declare that I wrote my bachelor's thesis independently, using only the sources and literature listed in the bibliography. I declare that, in accordance with Section 47b of Act No. 111/1998 Coll., as amended, I consent to the publication of my bachelor's thesis, in unabridged form, by electronic means in the publicly accessible part of the STAG database operated by the University of South Bohemia in České Budějovice on its website, with my copyright to the submitted text of this qualification thesis preserved. I further consent to the publication, by the same electronic means and in accordance with the cited provision of Act No. 111/1998 Coll., of the reports of the supervisor and the reviewers, as well as the record of the course and result of the thesis defence. I also consent to the comparison of the text of my qualification thesis with the Theses.cz database of qualification theses, operated by the National Registry of University Qualification Theses and the plagiarism detection system. České Budějovice, 9 May 2020 .…………………… Viktorie Tichánková Acknowledgements I would like to thank my parents and my boyfriend for supporting me throughout and for being there for me. I would also like to thank MEPs Markéta Gregorová, Mikuláš Peksa and Patrick Breyer for their willingness to answer my questions, which helped me delve deeper into the topic I write about in this bachelor's thesis. My greatest thanks go to PhDr. Miroslav Šepták, Ph.D. for the expert advice, time and helpfulness he gave me while I was writing, and for the support he constantly showed me. -

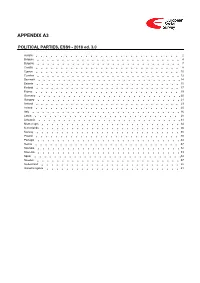

ESS9 Appendix A3 Political Parties Ed

APPENDIX A3 POLITICAL PARTIES, ESS9 - 2018 ed. 3.0 Austria 2 Belgium 4 Bulgaria 7 Croatia 8 Cyprus 10 Czechia 12 Denmark 14 Estonia 15 Finland 17 France 19 Germany 20 Hungary 21 Iceland 23 Ireland 25 Italy 26 Latvia 28 Lithuania 31 Montenegro 34 Netherlands 36 Norway 38 Poland 40 Portugal 44 Serbia 47 Slovakia 52 Slovenia 53 Spain 54 Sweden 57 Switzerland 58 United Kingdom 61 Version Notes, ESS9 Appendix A3 POLITICAL PARTIES ESS9 edition 3.0 (published 10.12.20): Changes from previous edition: Additional countries: Denmark, Iceland. ESS9 edition 2.0 (published 15.06.20): Changes from previous edition: Additional countries: Croatia, Latvia, Lithuania, Montenegro, Portugal, Slovakia, Spain, Sweden. Austria 1. Political parties Language used in data file: German Year of last election: 2017 Official party names, English 1. Sozialdemokratische Partei Österreichs (SPÖ) - Social Democratic Party of Austria - 26.9 % names/translation, and size in last 2. Österreichische Volkspartei (ÖVP) - Austrian People's Party - 31.5 % election: 3. Freiheitliche Partei Österreichs (FPÖ) - Freedom Party of Austria - 26.0 % 4. Liste Peter Pilz (PILZ) - PILZ - 4.4 % 5. Die Grünen – Die Grüne Alternative (Grüne) - The Greens – The Green Alternative - 3.8 % 6. Kommunistische Partei Österreichs (KPÖ) - Communist Party of Austria - 0.8 % 7. NEOS – Das Neue Österreich und Liberales Forum (NEOS) - NEOS – The New Austria and Liberal Forum - 5.3 % 8. G!LT - Verein zur Förderung der Offenen Demokratie (GILT) - My Vote Counts! - 1.0 % Description of political parties listed 1. The Social Democratic Party (Sozialdemokratische Partei Österreichs, or SPÖ) is a social above democratic/center-left political party that was founded in 1888 as the Social Democratic Worker's Party (Sozialdemokratische Arbeiterpartei, or SDAP), when Victor Adler managed to unite the various opposing factions. -

20150312-P+S-031-Article 19

ARTICLE 19, Free Word Centre, 60 Farringdon Road, London, EC1R 3GA. Intelligence and Security Committee of Parliament, 35 Great Smith Street, London, SW1P 3BQ. Re: Response to the Privacy and Security Inquiry Call for Evidence. ARTICLE 19, an international freedom of expression organisation, welcomes the opportunity to comment on the laws governing the intelligence agencies’ access to private communications. Before outlining our concerns, however, we must highlight that we are deeply concerned about the independence of the Committee in relation to the matter in hand. We believe the independence of the Committee is compromised on the grounds that: Secretaries of State can veto what information can be seen by the Committee; the Prime Minister's nomination is required for eligibility to sit on the Committee; the Prime Minister is responsible for determining which material the Committee can release publicly. We believe that this lack of independence reduces the Committee’s ability to adequately command the confidence of the public on complex questions of whether the intelligence agencies have breached the law. ARTICLE 19 is concerned about the following issues in the Call for Evidence. The Call includes misleading references to the “individual right to privacy” and the “collective right to security.” We observe that both rights entail an individual and collective element. The right to security necessarily includes an individual right to security of the person. Equally, privacy entails a significant collective element: the right to privacy of collective gatherings such as societies, groups, and organisations, as well as the crucial social function of privacy in encouraging free communication between members of society. -

ECPR General Conference

13th General Conference, University of Wrocław, 4 – 7 September 2019. Contents: Welcome from the local organisers; Mayor’s welcome; Welcome from the Academic Convenors; The European Consortium for Political Research; ECPR governance; Executive Committee; ECPR Council; University of Wrocław; Out and about in the city; European Political Science Prize; Hedley Bull Prize in International Relations; Plenary Lecture -

The European Parliament Elections of 2019

The European Parliament Elections of 2019. Edited by Lorenzo De Sio, Mark N. Franklin, Luana Russo. Luiss University Press. © 2019 Luiss University Press – Pola Srl. All rights reserved. ISBN (print) 978-88-6105-411-0, ISBN (ebook) 978-88-6105-424-0. Luiss University Press – Pola s.r.l., viale Romania, 32, 00197 Roma, tel. 06 85225481/431, www.luissuniversitypress.it, e-mail [email protected]. Graphic design: HaunagDesign Srl. Layout: Livia Pierini. First published in July 2019. Table of contents. Introduction: Understanding the European Parliament elections of 2019 (Luana Russo, Mark N. Franklin, Lorenzo De Sio). Part I: Comparative overview. Chapter One: Much ado about nothing? The EP elections in comparative perspective (Davide Angelucci, Luca Carrieri, Mark N. Franklin). Chapter Two: Party system change in EU countries: long-term instability and cleavage restructuring (Vincenzo Emanuele, Bruno Marino). Chapter Three: Spitzenkandidaten 2.0: From experiment to routine in European elections? (Thomas Christiansen, Michael Shackleton). Chapter Four: Explaining the outcome. Second-order factors still matter, but with an exceptional turnout increase (Lorenzo De Sio, Luana Russo, Mark N. Franklin). Chapter Five: Impact of issues on party performance -

The Hague, November 26, 2015 Public Libraries in Brussels: Grant

The Hague, November 26, 2015. Public Libraries in Brussels: grant us Public Lending Right for e-books. Next month, the European Commission will present its package of measures on the required copyright reform. This is the start of a lengthy process of debates in the lead-up to the establishment of a new European directive. For the Netherlands Association of Public Libraries (VOB), this presents the perfect opportunity to address Brussels with a plea for a statutory Public Lending Right (PLR) for e-books. Two attending Members of the European Parliament (MEPs) support the public libraries in their entreaty. The public library sector does not have a tradition of militant lobbying for its own interests. The vice-president of the VOB, Chris Wiersma, who is also the director of De Nieuwe Bibliotheek library in Almere in the Netherlands, acknowledges this without any shame. Naturally, though, libraries do have their interests and concerns, and in the digital age more than ever. No PLR exists for e-books. Therefore, libraries can only lend out e-books for which they have purchased a license from the publisher. They cannot purchase e-book titles and make them available to their members at their own discretion, as is permitted for printed books. This needs to change. Thus, it was with a bit of shy pride that Wiersma pleaded the case with a delegation from the public library sector last week during a breakfast session in the Brussels Stanhope Hotel. The director of the Dutch Bibliotheek Kennemerwaard library, Erna Winters, explained to the Members of the European Parliament that libraries carry the legal responsibility to provide access to information, so that citizens can form an opinion and fully participate in a democratic society. -

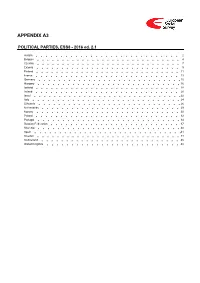

ESS8 Appendix A3 Political Parties Ed

APPENDIX A3 POLITICAL PARTIES, ESS8 - 2016 ed. 2.1 Austria 2 Belgium 4 Czechia 7 Estonia 9 Finland 11 France 13 Germany 15 Hungary 16 Iceland 18 Ireland 20 Israel 22 Italy 24 Lithuania 26 Netherlands 29 Norway 30 Poland 32 Portugal 34 Russian Federation 37 Slovenia 40 Spain 41 Sweden 44 Switzerland 45 United Kingdom 48 Version Notes, ESS8 Appendix A3 POLITICAL PARTIES ESS8 edition 2.1 (published 01.12.18): Czechia: Country name changed from Czech Republic to Czechia in accordance with change in ISO 3166 standard. ESS8 edition 2.0 (published 30.05.18): Changes from previous edition: Additional countries: Hungary, Italy, Lithuania, Portugal, Spain. Austria 1. Political parties Language used in data file: German Year of last election: 2013 Official party names, English 1. Sozialdemokratische Partei Österreichs (SPÖ), Social Democratic Party of Austria, 26,8% names/translation, and size in last 2. Österreichische Volkspartei (ÖVP), Austrian People's Party, 24.0% election: 3. Freiheitliche Partei Österreichs (FPÖ), Freedom Party of Austria, 20,5% 4. Die Grünen - Die Grüne Alternative (Grüne), The Greens - The Green Alternative, 12,4% 5. Kommunistische Partei Österreichs (KPÖ), Communist Party of Austria, 1,0% 6. NEOS - Das Neue Österreich und Liberales Forum, NEOS - The New Austria and Liberal Forum, 5,0% 7. Piratenpartei Österreich, Pirate Party of Austria, 0,8% 8. Team Stronach für Österreich, Team Stronach for Austria, 5,7% 9. Bündnis Zukunft Österreich (BZÖ), Alliance for the Future of Austria, 3,5% Description of political parties listed 1. The Social Democratic Party (Sozialdemokratische Partei Österreichs, or SPÖ) is a social above democratic/center-left political party that was founded in 1888 as the Social Democratic Worker's Party (Sozialdemokratische Arbeiterpartei, or SDAP), when Victor Adler managed to unite the various opposing factions. -

Anti-Establishment Radical Parties in 21st Century Europe

Anti-Establishment Radical Parties in 21st Century Europe by Harry Nedelcu A thesis submitted to the Faculty of Graduate and Postdoctoral Affairs in partial fulfillment of the requirements for the degree of PhD in Political Science Carleton University, Ottawa, Ontario © Harry Nedelcu i Abstract The current crisis in Europe is one that superimposed itself over an already existing political crisis - one which, due to the cartelization of mainstream parties, Peter Mair (1995) famously referred to as a problem of democratic legitimacy in European political systems between those political parties that govern but no longer represent and those that claim to represent but do not govern. As established cartel-political parties have become complacent about their increasing disconnect with societal demands, the group of parties claiming to represent without governing has intensified its anti-elitist, anti-integrationist and anti-mainstream party message. Indeed many such parties, regardless of ideology (radical right but also radical-left), have surged during the past decade, including in the European Parliament elections of 2009 and especially 2014. The common features of these parties are: a) a radical non-centrist ideological stance (be it on the left or right, authoritarian or libertarian dimensions); b) a populist anti-establishment discourse, c) a commitment to representing specific societal classes; d) an aggressive discourse and behaviour towards political enemies, e) a commitment towards ‘restoring true democracy’ and f) a tendency to offer simplistic solutions to intricate societal issues. The question this dissertation asks is - what accounts for the rise of left-libertarian as well as right-wing authoritarian tribune parties within such a short period of time during the mid-2000s and early 2010s? I investigate this question through a comparative study of six EU member- states. -

“Don't Worry, We Are from the Internet”

“Don’t Worry, We Are From the Internet”: The Diffusion of Protest against the Anti-Counterfeiting Trade Agreement in the Age of Austerity. Julia Rone. Thesis submitted for assessment with a view to obtaining the degree of Doctor of Political and Social Sciences of the European University Institute. Florence, 22 February 2018. European University Institute, Department of Political and Social Sciences. Examining Board: Prof. Donatella della Porta, Scuola Normale Superiore (EUI/External Supervisor); Prof. László Bruszt, Scuola Normale Superiore; Dr. Sebastian Haunss, BIGSSS, Universität Bremen; Dr. Paolo Gerbaudo, King’s College London. © Julia Rone, 2018. No part of this thesis may be copied, reproduced or transmitted without prior permission of the author. Table of Contents. INTRODUCTION: “Leave Our Internet alone”. On the Importance of studying ACTA and what to expect from this thesis. 0.1. What is ACTA and why it is important to study the diffusion of protest against it? 0.2. Main puzzles and questions with regard to the diffusion of protest against ACTA. 0.3. Chapter Plan. CHAPTER 1. Spreading Protest: Analysing the anti-ACTA mobilization as part of the post-financial crisis cycle of contention. 1.1. “Hard to be a God”: Moving beyond comparative analysis. 1.2. Literature Review and Theoretical Framework -

Digital Democracy: Opportunities and Dilemmas

Digital Democracy: Opportunities and Dilemmas What could digital citizen involvement mean for the Dutch parliament? Arthur Edwards and Dennis de Kool Rathenau Institute Board G.A. Verbeet (chairperson) Prof. E.H.L. Aarts Prof. W.E. Bijker Prof. R. Cools Dr J.H.M. Dröge E.J.F.B. van Huis Prof. H.W. Lintsen Prof. J.E.J. Prins Prof. M.C. van der Wende Dr M.M.C.G. Peters (secretary) Digital Democracy: Opportunities and Dilemmas What could digital citizen involvement mean for the Dutch parliament? Arthur Edwards and Dennis de Kool Rathenau Institute 5 Rathenau Instituut Anna van Saksenlaan 51 Correspondence address: Postbus 95366 NL-2509 CJ Den Haag T: +31 (0)70-342 15 42 E: [email protected] W: www.rathenau.nl Published by the Rathenau Institute Editing: Redactie Dynamiek, Utrecht Correction: Duidelijke Taal tekstproductes, Amsterdam Translation: Balance Amsterdam/Maastricht Preferred citation title: Edwards, A.R & D. de Kool, Kansen en dilemma’s van digitale democratie - Wat kan digitale burgerbetrokkenheid betekenen voor het Nederlandse parlement? Den Haag, Rathenau Instituut 2015 The Rathenau Institute has an Open Access policy. Its reports, background studies, research articles and software are all open access publications. Research data are made available pursuant to statutory provisions and ethical research standards concerning the rights of third parties, privacy and copyright. © Rathenau Instituut 2015 This work or parts of it may be reproduced and/or published for creative, personal or educational purposes, provided that no copies are made or used for commercial objectives, and subject to the condition that copies always give the full attribution above.