The Present and Future of Statistics: Challenges and Opportunities

Recommended publications

Interview of Albert Tucker

University of Tennessee, Knoxville. TRACE: Tennessee Research and Creative Exchange. About Harlan D. Mills, Science Alliance, 9-1975. Interview of Albert Tucker. Terry Speed, Evar Nering. Follow this and additional works at: https://trace.tennessee.edu/utk_harlanabout Part of the Mathematics Commons. Recommended Citation: Speed, Terry and Nering, Evar, "Interview of Albert Tucker" (1975). About Harlan D. Mills. https://trace.tennessee.edu/utk_harlanabout/13 This Report is brought to you for free and open access by the Science Alliance at TRACE: Tennessee Research and Creative Exchange. It has been accepted for inclusion in About Harlan D. Mills by an authorized administrator of TRACE: Tennessee Research and Creative Exchange. For more information, please contact [email protected]. The Princeton Mathematics Community in the 1930s, Transcript Number 39 (PMC39). © The Trustees of Princeton University, 1985. ALBERT TUCKER: CAREER, PART 2. This is a continuation of the account of the career of Albert Tucker that was begun in the interview conducted by Terry Speed in September 1975. This recording was made in March 1977 by Evar Nering at his apartment in Scottsdale, Arizona. Tucker: I have recently received the tapes that Speed made and find that these tapes carried my history up to approximately 1938. So the plan is to continue the history. In the late '30s I was working in combinatorial topology with not a great deal of results to show. I guess I was really more interested in my teaching. I had an opportunity to teach an undergraduate course in topology, combinatorial topology that is, classification of 2-dimensional surfaces and that sort of thing.

87048 Stats News

Statistical Society of Australia Incorporated Newsletter, November 2005, Number 113. Registered by Australia Post Publication No. NBH3503. ISSN 0314-6820. 75 years of statisticians in CSIRO. At a dinner in Canberra on 27 September 2005, CSIRO paid tribute to the seventy-fifth anniversary of the appointment of its first statistician, Frances Elizabeth “Betty” Allan. The dinner featured talks by John Field of BiometricsSA on the life of Betty Allan, and Terry Speed of WEHI and UC Berkeley on the expansive topic “Mathematics, Statistics, Biology: Past, Present and Future”. Born in 1905, Betty Allan studied pure and mixed mathematics at Melbourne University, completing both bachelors and masters degrees and winning scholarships and honours throughout. In 1928 she took up a CSIR studentship for “the study of statistical methods applied to agriculture”, studying at Newnham College, Cambridge and then working at Rothamsted Experimental Station with RA Fisher. Fisher described her as having “a rare gift for first-class mathematics”. [...] methods to agricultural research. Her projects included control of oriental peach moths and blowflies, work on plant diseases and noxious weeds, and studies of the effects of supplements on sheep. “Betty Allan’s statistical work pushed CSIR’s agricultural science to new heights – training scientists, designing reliable experiments and extracting important information,” said Dr Murray Cameron, chief of CSIRO Mathematical and Information Sciences. “After she retired in 1940 following her marriage, CSIRO formally established a Biometrics Section, forerunner to the present-day CSIRO Mathematical and Information Sciences.” Photo caption: Dinner speakers Terry Speed (left) and John Field (right) with Murray Cameron (centre), Chief of CSIRO Mathematical and Information Sciences.

2020 Annual Report

2020 Annual Report. Make this cover come alive with augmented reality. Details on inside back cover. Contents: About WEHI; President’s report; Director’s report; WEHI’s new brand launched; Our supporters; Exceptional science and people; 2020 graduates; Patents granted in 2020; A remarkable place; Operational overview; Expanding connections with our alumni; Diversity and inclusion; Working towards reconciliation; Organisation and governance; WEHI Board; WEHI organisation; Members of WEHI; WEHI supporters; 2020 Board Subcommittees; 2020 Financial Statements; Financial statements contents; Statistical summary; The year at a glance. The Walter and Eliza Hall Institute of Medical Research. Parkville campus: 1G Royal Parade, Parkville Victoria 3052, Australia. Telephone: +61 3 9345 2555. Bundoora campus: 4 Research Avenue, La Trobe R&D Park, Bundoora Victoria 3086, Australia. Telephone: +61 3 9345 2200. www.wehi.edu.au WEHIresearch, WEHI_research, WEHI_research, WEHImovies, Walter and Eliza Hall Institute. ABN 12 004 251 423. © The Walter and Eliza Hall Institute of Medical Research 2021. Produced by the WEHI’s Communications and Marketing department. Director: Douglas J Hilton AO BSc Mon BSc(Hons) PhD Melb FAA FTSE FAHMS. Deputy Director, Scientific Strategy: Alan Cowman AC BSc(Hons) Griffith PhD Melb FAA FRS FASM FASP. Chief Operating Officer: Carolyn MacDonald BArts (Journalism) RMIT. Chief Financial Officer: Joel Chibert BCom Melb GradDipCA FAICD. Company Secretary: Mark Licciardo BBus(Acc) GradDip CSP FGIA FCIS FAICD. Honorary

Newsletter, and of My Two Obituaries of Given at Second Year Undergraduate Level

Statistical Society of Australia Incorporated Newsletter, December 2007, Number 121. Counting Australia In. The Editors invited Professor Eugene Seneta to review “Counting Australia In”, the first and only book on the history of the mathematical sciences, including many aspects of statistics, in Australia. The result is a combination of personal history, reflection on the content of the book, and expansion on the book’s statistical aspects. Graeme Cohen. Counting Australia In. The People, Organisations and Institutions of Australian Mathematics. With a foreword by Lord Robert May of Oxford. Halstead Press in association with the Australian Mathematical Society. Sydney, 2006. 431pp. ISBN 1 920831 39 8. The author stresses in his Preface, dated August 2006, that Chapter 10 is the requested history of the Australian Mathematical Society, but the book should not be regarded as a history of the Australian mathematics generally. Its scope is indicated by its subtitle, and it covers the period from Australia’s colonial beginnings (1788) up to about 2005. The following is a table of contents and brief description of the chapters. Foreword, Preface, Main abbreviations. Chapter 1. Mathematics and the Beginnings of the Colonies. Chapter 2. [...] Introduction. There is a great deal about statistics, from official to mathematical, in the book, and I write this review primarily as a statistician for Australian statisticians. We are part of the mathematical community. Evgenii Slutsky, in the Preface to his first publication, a book in Russian of 1912 entitled Correlation Theory which to a large extent brought Pearsonian statistics to the Russian Empire, said “Every statistician should

Newsletter 145 – December 2013

December 2013, No. 145. News, The Statistical Society of Australia. TERRY SPEED AWARDED THE 2013 PRIME MINISTER’S PRIZE FOR SCIENCE. Statistician and SSAI member Professor Terry Speed, from Melbourne’s Walter and Eliza Hall Institute, received the 2013 Prime Minister’s Prize for Science for his influential work using mathematics and statistics to help biologists understand human health and disease. The Prime Minister’s Prize for Science is Australia’s highest accolade for excellence in science research. The Prime Minister presented this year’s award to Terry at a celebratory dinner in late October at Parliament House. This is the first time this prestigious award has honoured a statistician or mathematician and the award to Terry recognises how important our discipline is to science. Terry works in bioinformatics, a relatively new branch of science that combines maths, statistics and computer science to solve complex biological problems. During his 44-year career, he has developed mathematical and statistical tools that enable biologists to make sense of the vast amounts of information generated by rapidly advancing genetic technologies. Bioinformatics has made it possible to look at hundreds of genes in a DNA sequence at once to understand the genetic changes involved in complicated diseases such as cancers, and is integral to the genomics revolution that is driving the sequencing of whole genomes in ever decreasing times. Terry has developed tools to identify genes that are responsible for different traits, diseases or cancers by sifting through these enormous volumes of data. In this issue: Editorial; Events; President’s Column; Member news; Seeking Mathematicians; Spatio-Temporal Statistical Modelling Course; From the SSAI Office; NSW Branch; SA Branch; VIC Branch; WA Branch.

Acknowledgment of Reviewers, 2009

Proceedings of the National Academy of Sciences of the United States of America www.pnas.org Acknowledgment of Reviewers, 2009 The PNAS editors would like to thank all the individuals who dedicated their considerable time and expertise to the journal by serving as reviewers in 2009. Their generous contribution is deeply appreciated. A R. Alison Adcock Schahram Akbarian Paul Allen Lauren Ancel Meyers Duur Aanen Lia Addadi Brian Akerley Phillip Allen Robin Anders Lucien Aarden John Adelman Joshua Akey Fred Allendorf Jens Andersen Ruben Abagayan Zach Adelman Anna Akhmanova Robert Aller Olaf Andersen Alejandro Aballay Sarah Ades Eduard Akhunov Thorsten Allers Richard Andersen Cory Abate-Shen Stuart B. Adler Huda Akil Stefano Allesina Robert Andersen Abul Abbas Ralph Adolphs Shizuo Akira Richard Alley Adam Anderson Jonathan Abbatt Markus Aebi Gustav Akk Mark Alliegro Daniel Anderson Patrick Abbot Ueli Aebi Mikael Akke David Allison David Anderson Geoffrey Abbott Peter Aerts Armen Akopian Jeremy Allison Deborah Anderson L. Abbott Markus Affolter David Alais John Allman Gary Anderson Larry Abbott Pavel Afonine Eric Alani Laura Almasy James Anderson Akio Abe Jeffrey Agar Balbino Alarcon Osborne Almeida John Anderson Stephen Abedon Bharat Aggarwal McEwan Alastair Graça Almeida-Porada Kathryn Anderson Steffen Abel John Aggleton Mikko Alava Genevieve Almouzni Mark Anderson Eugene Agichtein Christopher Albanese Emad Alnemri Richard Anderson Ted Abel Xabier Agirrezabala Birgit Alber Costica Aloman Robert P. Anderson Asa Abeliovich Ariel Agmon Tom Alber José Alonso Timothy Anderson Birgit Abler Noël Agnès Mark Albers Carlos Alonso-Alvarez Inger Andersson Robert Abraham Vladimir Agranovich Matthew Albert Suzanne Alonzo Tommy Andersson Wickliffe Abraham Anurag Agrawal Kurt Albertine Carlos Alos-Ferrer Masami Ando Charles Abrams Arun Agrawal Susan Alberts Seth Alper Tadashi Andoh Peter Abrams Rajendra Agrawal Adriana Albini Margaret Altemus Jose Andrade, Jr.

NCARD Enewsletter June 2017 in This Issue

Issue 10, Newsletter Vol 7 | Jun 2017. Editor | Tracy Hayward. In this issue: ADSA PHD SCHOLARSHIP; ANNA NOWAK RECEIVES MARF AWARD; NCARD RAs IN PROFILE; IMMUNOLOGY BAKING! A SUCCESSFUL FELLOW. NCARD senior researcher Joost Lesterhuis had quite a start to 2017: he effectively was made a fellow three times over in the first few weeks of the year, with the awarding of an NHMRC Career Development Fellowship, the inaugural Bernie Banton Fellowship, and a Cancer Council of WA Fellowship. The NHMRC Career Development Fellowship, Exploring and exploiting novel therapeutic avenues in mesothelioma, was one of just three awarded to researchers based in [...] Banton Fellowship, which the NHMRC established “to commemorate the life of Bernie Banton through supporting health and medical research related to mesothelioma”. As the first Bernie [...] four year period. As Joost had received the NHMRC fellowship, he shares the Cancer Council fellowship with another researcher, Curtin University’s Associate Professor Georgia Halkett. In February Joost also learned that he is one of 22 applicants selected to present at the US-Australian Emerging Cancer Biomedical Technologies Workshop in Washington DC in mid-June. A collaboration between the US National Cancer Institute, Virginia Tech and the Australian Trade and Investment Commission, the workshop will include a reception hosted by the Australian Ambassador. It’s a tremendous success for Joost, who emigrated with his family from the Netherlands to Western Australia in 2014 to work at NCARD after a two year stint as a visiting scientist here, working especially with Richard Lake. Photo caption: Dr Joost Lesterhuis. Photo courtesy Cancer Council of WA.

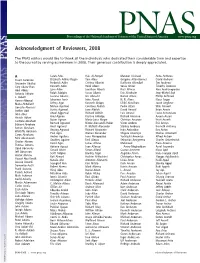

Acknowledgment of Reviewers, 2008

Proceedings of the National Academy of Sciences of the United States of America www.pnas.org Acknowledgment of Reviewers, 2008 The PNAS editors would like to thank all the individuals who dedicated their considerable time and expertise to the journal by serving as reviewers in 2008. Their generous contribution is deeply appreciated. A Sarah Ades Qais Al-Awqati Marwan Al-shawi Anne Andrews Stuart Aaronson Elizabeth Adkins-Regan Tom Alber Grégoire Altan-Bonnet David Andrews Alejandro Aballay Frederick Adler Cristina Alberini Karlheinz Altendorf Tim Andrews Cory Abate-Shen Kenneth Adler Heidi Albers Sonia Altizer Timothy Andrews Abul Abbas Lynn Adler Jonathan Alberts Russ Altman Alex Andrianopoulos Antonio Abbate Ralph Adolphs Susan Alberts Eric Altschuler Jean-Michel Ané L. Abbott Luciano Adorini Urs Albrecht Burton Altura Phillip Anfinrud Hanna Abboud Johannes Aerts John Alcock N. R. Aluru Klaus Anger Maha Abdellatif Jeffrey Agar Kenneth Aldape Lihini Aluwihare Jacob Anglister Goncalo Abecasis Munna Agarwal Courtney Aldrich Pedro Alzari Wim Annaert Steffen Abel Sunita Agarwal Jane Aldrich David Amaral Brian Annex John Aber Aneel Aggarwal Richard Aldrich Luis Amaral Lucio Annunciato Hinrich Abken Ariel Agmon Kristina Aldridge Richard Amasino Aseem Ansari Carmela Abraham Noam Agmon Maria-Luisa Alegre Christian Amatore Kristi Anseth Edward Abraham Bernard Agranoff Nicole Alessandri-Haber Victor Ambros Eric Anslyn Aneil Agrawal R. McNeill Alexander Stanley Ambrose Kenneth Anthony Soman Abraham Anurag Agrawal Richard Alexander Indu Ambudkar

July 2008 Article in the IMS Bulletin

Volume 37 • Issue 6, IMS Bulletin, July 2008. World Congress approaches. The Seventh World Congress in Probability and Statistics, jointly sponsored by IMS and the Bernoulli Society, will be held in Singapore from July 14 to 19, 2008. For those planning to attend, on pages 14–15 Sau Ling Wu outlines some of Singapore’s highlights, including those for culture vultures, shoppers and epicures! According to Raffles Hotel, any visit is incomplete without a Singapore Sling, an intoxicating cocktail much like the city itself, with its fascinating blend of cultural influences. Check the Congress website for further information as the date draws nearer: http://www2.ims.nus.edu.sg/Programs/wc2008/ Spot the similarities! Small wonder that Singapore’s top-notch performance centre, The Esplanade [below], is known affectionately by locals as “The Durian”. The fruit [above] has a distinctive aroma so strong that it is forbidden to eat it on public transport! We are pleased to announce the 2009 IMS Medallion Lecturers: Peter Bühlmann, Tony Cai, Claudia Klüppelberg, Sam Kou, Gabor Lugosi, David Madigan, Gareth Roberts and Gordon Slade. CONTENTS: IMS Annual Meeting; Members’ News: Richard A Johnson, George Roussas, Sastry Pantula, ASA fellows, Guenther Walther; Bayesian Analysis journal; Citation Statistics report; Meeting reports: Bayesian Methods, SSP, ENAR; Medallion Lecture: Mary Sara McPeek; Shooting ourselves in the foot?; Profile: Tom Liggett; Other news; Rick’s Ramblings; IMS Fellows 2008; Singapore in a Nutshell; Letters; Terence’s Stuff: 2x2; Obituaries: Chris Heyde, Shihong Cheng; IMS meetings; Other meetings; Employment Opportunities; International Calendar of Statistical Events; Information for Advertisers.

2019 WEHI Annual Report

ANNUAL REPORT 2019. Make this cover come alive with augmented reality. Click here for details. Contents: About the Institute; President’s report; Director’s report; Strategic Plan; Our supporters; Exceptional science and people; Cancer Research and Treatments; Infection, Inflammation and Immunity; Healthy Development and Ageing; New Medicines and Advanced Technologies; Computational Biology; 2019 Graduates; Patents granted in 2019; A remarkable place; Staying connected with our alumni community; Pairing researchers and consumers to drive research forward; Diversity and Inclusion; Reconciliation; Organisation and governance; Institute organisation; Institute Board; Members of the Institute. The Walter and Eliza Hall Institute of Medical Research. Parkville campus: 1G Royal Parade, Parkville Victoria 3052, Australia. Telephone: +61 3 9345 2555. Bundoora campus: 4 Research Avenue, La Trobe R&D Park, Bundoora Victoria 3086, Australia. Telephone: +61 3 9345 2200. www.wehi.edu.au WEHIresearch, WEHI_research, wehi_research, WEHImovies, Walter and Eliza Hall Institute. ABN 12 004 251 423. © The Walter and Eliza Hall Institute of Medical Research 2020. Produced by the Walter and Eliza Hall Institute’s Communications and Marketing department. Director: Douglas J Hilton AO BSc Mon BSc(Hons) PhD Melb FAA FTSE FAHMS. Deputy Director, Scientific Strategy: Alan Cowman AC BSc(Hons) Griffith PhD Melb FAA FRS FASM FASP. Chief Operating Officer: Carolyn MacDonald BArts (Journalism) RMIT. Chief Financial Officer: Joel Chibert BCom Melb GradDipCA

Vicbiostat Seminarflyer Terry Speed.Pdf

Victorian Centre for Biostatistics Seminar. Thursday 26th June, 9.30am to 10.30am. Seminar Room 2, Level 5, Alfred Centre, 99 Commercial Road, Prahran. Normalization. Professor Terry Speed, Division of Bioinformatics, Walter & Eliza Hall Institute. Normalization is a term that has come to describe a range of adjustments done to data prior to carrying out conventional statistical analyses. Usually it is not model-based. It first came to my attention with microarray data, but is now used for a wide range of "omic" data (genomic, proteomic, metabolomic,...) and beyond. In this talk I'll describe some of my experiences with this notion. Along the way, I'll explain why and how people normalize data, and how it affects their ultimate results. My main story will be from microarray gene expression data, but I'll also mention RNA-seq data. My view now is that it can be model-based. Professor Terry Speed completed a BSc (Hons) in mathematics and statistics at the University of Melbourne (1965), and a PhD in mathematics at Monash University (1969). He held appointments at the University of Sheffield, U.K. (1969-73) and the University of Western Australia in Perth (1974-82), and he was with Australia’s CSIRO between 1983 and 1987. In 1987 he moved to the Department of Statistics at the University of California at Berkeley (UCB), and has remained with them ever since. www.vicbiostat.org.au ViCBiostat is a Centre of Research Excellence in biostatistics funded by Australia’s National Health & Medical Research Council (NHMRC). The Centre is a collaboration between biostatistical researchers at the Murdoch Childrens Research Institute, the Department of Epidemiology & Preventive Medicine at Monash University, and the Centre for Molecular, Environmental, Genetic & Analytical Epidemiology (MEGA) at The University of Melbourne.

IMS Bulletin 32(5)

Volume 32, Issue 5, IMS Bulletin, September/October 2003. Reports Galore! In this issue we have meeting reports from: the IMS sponsored New Researchers Conference (Davis, CA) on page 5; IMS sponsored mini-meetings on Functional Data Analysis (Florida) on page 7, Statistics for Mathematical and Computational Finance (Connecticut) on page 7 and Non/semi-parametric Models and Sequential Analysis (Kentucky) on page 6; and the First Joint IMS-ISBA meeting (Puerto Rico) on page 8. We also have the Executive, Committee and Editors’ reports, presented to Council at the 66th IMS Annual Meeting, which took place at the Joint Statistical Meetings in San Francisco, in August. Terry Speed introduces the “Extrapolate Yourself!” membership drive on page 4 (see below). And if you’re looking for a new position, turn to page 37: we have 38 job adverts from around the world. Included with this issue: Extrapolate Yourself! Please put this poster up on your departmental bulletin board, in your office, on the number bus, wherever you think it will attract attention. Photo caption: San Francisco’s Museum of Modern Art (foreground). CONTENTS: Members’ News; Contacting the IMS; Extrapolate Yourself!; Meeting Reports: NRC, Non/semi-parametric Models and Sequential Analysis, Functional Data Analysis, Statistics for Mathematical and Computational Finance, IMS-ISBA; Laha Award and others: calls for entries; COPSS Awards at JSM; Obituary: Howard Levene; Dues for 2004; IMS Fellows Nomination; IMS Annual Reports; IMS Meetings; Other Meetings and Announcements; Employment Opportunities; International Calendar of Statistical Events; Information for Advertisers.