Download Your FREE Copy of the LT2013

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Uila Supported Apps

Uila Supported Applications and Protocols, updated Oct 2020

Application/Protocol Name: Full Description
01net.com: 01net website, a French high-tech news site.
050 plus: 050 plus is a Japanese embedded smartphone application dedicated to audio-conferencing.
0zz0.com: 0zz0 is an online solution to store, send and share files.
10050.net: China Railcom group web portal.
10086.cn: This protocol plug-in classifies the http traffic to the host 10086.cn. It also classifies the ssl traffic to the Common Name 10086.cn.
104.com: Web site dedicated to job research.
1111.com.tw: Website dedicated to job research in Taiwan.
114la.com: Chinese web portal operated by YLMF Computer Technology Co.
115.com: Chinese cloud storing system of the 115 website. It is operated by YLMF Computer Technology Co.
118114.cn: Chinese booking and reservation portal.
11st.co.kr: Korean shopping website 11st. It is operated by SK Planet Co.
1337x.org: Bittorrent tracker search engine.
139mail: 139mail is a Chinese webmail powered by China Mobile.
15min.lt: Lithuanian news portal.
163.com: Chinese web portal 163. It is operated by NetEase, a company which pioneered the development of Internet in China.
17173.com: Website distributing Chinese games.
17u.com: Chinese online travel booking website.
20minutes: 20 minutes is a free, daily newspaper available in France, Spain and Switzerland. This plugin classifies websites.
24h.com.vn: Vietnamese news portal.
24ora.com: Aruban news portal.
24sata.hr: Croatian news portal.
24SevenOffice: 24SevenOffice is a web-based Enterprise resource planning (ERP) system.
24ur.com: Slovenian news portal.
2ch.net: Japanese adult videos web site.
2Shared: 2shared is an online space for sharing and storage.

Neuro Informatics 2020

Neuro Informatics 2019, September 1-2, Warsaw, Poland. PROGRAM BOOK

What is INCF? About INCF: INCF is an international organization launched in 2005, following a proposal from the Global Science Forum of the OECD to establish international coordination and collaborative informatics infrastructure for neuroscience. INCF is hosted by Karolinska Institutet and the Royal Institute of Technology in Stockholm, Sweden. INCF currently has Governing and Associate Nodes spanning 4 continents, with an extended network comprising organizations, individual researchers, industry, and publishers. INCF promotes the implementation of neuroinformatics and aims to advance data reuse and reproducibility in global brain research by:
• developing and endorsing community standards and best practices
• leading the development and provision of training and educational resources in neuroinformatics
• promoting open science and the sharing of data and other resources
• partnering with international stakeholders to promote neuroinformatics at global, national and local levels
• engaging scientific, clinical, technical, industry, and funding partners in collaborative, community-driven projects
INCF supports the FAIR (Findable Accessible Interoperable Reusable) principles, and strives to implement them across all deliverables and activities. Learn more: incf.org neuroinformatics2019.org

Welcome. Welcome to the 12th INCF Congress in Warsaw! It makes me very happy that a decade after the 2nd INCF Congress in Plzen, Czech Republic took place, for the second time in Central Europe, the meeting comes to Warsaw. The global neuroinformatics scenery has changed dramatically over these years. With the European Human Brain Project, the US BRAIN Initiative, Japanese Brain/MINDS initiative, and many others world-wide, neuroinformatics is alive more than ever, responding to the demands set forth by the modern brain studies.

Final Study Report on CEF Automated Translation Value Proposition in the Context of the European LT Market/Ecosystem

Final study report on CEF Automated Translation value proposition in the context of the European LT market/ecosystem. FINAL REPORT. A study prepared for the European Commission DG Communications Networks, Content & Technology. Digital Single Market.

This study was carried out for the European Commission by Luc MEERTENS, Khalid CHOUKRI, Stefania AGUZZI and Andrejs VASILJEVS. Internal identification: Contract number: 2017/S 108-216374. SMART number: 2016/0103.

DISCLAIMER: By the European Commission, Directorate-General of Communications Networks, Content & Technology. The information and views set out in this publication are those of the author(s) and do not necessarily reflect the official opinion of the Commission. The Commission does not guarantee the accuracy of the data included in this study. Neither the Commission nor any person acting on the Commission’s behalf may be held responsible for the use which may be made of the information contained therein. ISBN 978-92-76-00783-8, doi: 10.2759/142151. © European Union, 2019. All rights reserved. Certain parts are licensed under conditions to the EU. Reproduction is authorised provided the source is acknowledged.

CONTENTS: Table of figures; List of tables

Three Directions for the Design of Human-Centered Machine Translation

Three Directions for the Design of Human-Centered Machine Translation. Samantha Robertson (University of California, Berkeley), Wesley Hanwen Deng (University of California, Berkeley), Timnit Gebru (Black in AI), Margaret Mitchell, Daniel J. Liebling (Google), Michal Lahav (Google), Katherine Heller (Google), Mark Díaz (Google), Samy Bengio (Google), Niloufar Salehi (University of California, Berkeley).

Abstract: As people all over the world adopt machine translation (MT) to communicate across languages, there is increased need for affordances that aid users in understanding when to rely on automated translations. Identifying the information and interactions that will most help users meet their translation needs is an open area of research at the intersection of Human-Computer Interaction (HCI) and Natural Language Processing (NLP). This paper advances work in this area by drawing on a survey of users’ strategies in assessing translations. We identify three directions for the design of translation systems that support more reliable and effective use of machine translation: helping users craft good inputs, helping users understand translations, and expanding interactivity and adaptivity. We describe how these can be introduced in current MT systems and highlight open questions for HCI and NLP research.

Figure 1: TranslatorBot mediates interlingual dialog using machine translation. The system provides extra support for users, for example, by suggesting simpler input text.

what kinds of information or interactions would best support user needs (Doshi-Velez and Kim, 2017; Miller, 2019). For instance, users may be more invested in knowing when they can rely on an AI system and when it may be making a mistake,

The MT Developer/Provider and the Global Corporation

The MT developer/provider and the global corporation. Terence Lewis (Hook & Hatton Ltd) and Rudolf M. Meier (Siemens Nederland N.V.). [email protected], [email protected]

Abstract. This paper describes the collaboration between MT developer/service provider, Terence Lewis (Hook & Hatton Ltd) and the Translation Office of Siemens Nederland N.V. (Siemens Netherlands). It involves the use of the developer’s Dutch-English MT software to translate technical documentation for divisions of the Siemens Group and for external customers. The use of this language technology has resulted in significant cost savings for Siemens Netherlands. The authors note the evolution of an ‘MT culture’ among their regular users.

1. Introduction. In 2002 Siemens Netherlands and Hook & Hatton Ltd signed an exclusive agreement on the marketing of Dutch-English MT services in the Netherlands. This agreement set the seal on an informal partnership that had evolved over the preceding six-seven years between Siemens and the Developer (Terence Lewis, trading through the private company Hook & Hatton Ltd). Under the contract, Siemens Netherlands undertakes to market the MT Services provided by

2. The Developer’s perspective. Under the current arrangement, the Developer (working from home in the United Kingdom) currently acts as the sole operator of an Automatic Translation Environment (Trasy), processing documents forwarded by e-mail by the Translation Office of Siemens Netherlands in The Hague. Ideally, these documents are in MS Word or rtf format, but the Translation Office also receives pdf files or OCR output files for automatic translation.

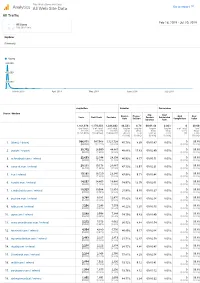

Thiswaifudoesnotexist.Net: Google Analytics: All Traffic 20190218-20190720

This Waifu Does Not Exist. Google Analytics, All Web Site Data. All Traffic, Feb 18, 2019 - Jul 20, 2019. All Users: 100.00% Users.

[Chart: Users over time, March 2019 to July 2019]

| Source / Medium | Users | New Users | Sessions | Bounce Rate | Pages / Session | Avg. Session Duration | Goal Conversion Rate | Goal Completions | Goal Value |
|---|---|---|---|---|---|---|---|---|---|
| All | 1,161,978 | 1,170,333 | 1,384,602 | 46.58% | 8.74 | 00:01:48 | 0.00% | 0 | $0.00 |
| % of Total / Avg for View | 100.00% (1,161,978) | 100.07% (1,169,566) | 100.00% (1,384,602) | 46.58% (0.00%) | 8.74 (0.00%) | 00:01:48 (0.00%) | 0.00% (0.00%) | 0.00% (0) | 0.00% ($0.00) |
| 1. (direct) / (none) | 966,071 (82.06%) | 967,968 (82.71%) | 1,121,738 (81.02%) | 45.78% | 8.39 | 00:01:47 | 0.00% | 0 (0.00%) | $0.00 (0.00%) |
| 2. google / organic | 30,702 (2.61%) | 28,990 (2.48%) | 44,887 (3.24%) | 48.86% | 15.63 | 00:02:49 | 0.00% | 0 (0.00%) | $0.00 (0.00%) |
| 3. m.facebook.com / referral | 22,693 (1.93%) | 22,544 (1.93%) | 24,304 (1.76%) | 46.93% | 4.77 | 00:00:51 | 0.00% | 0 (0.00%) | $0.00 (0.00%) |
| 4. away.vk.com / referral | 20,111 (1.71%) | 19,178 (1.64%) | 26,667 (1.93%) | 47.13% | 13.97 | 00:02:31 | 0.00% | 0 (0.00%) | $0.00 (0.00%) |
| 5. | | | | | | | | | |

List of Search Engines

A blog network is a group of blogs that are connected to each other in a network. A blog network can either be a group of loosely connected blogs, or a group of blogs that are owned by the same company. The purpose of such a network is usually to promote the other blogs in the same network and therefore increase the advertising revenue generated from online advertising on the blogs.[1]

List of search engines. From Wikipedia, the free encyclopedia. For popular web search engines, see Most popular Internet search engines. This is a list of search engines, including web search engines, selection-based search engines, metasearch engines, desktop search tools, and web portals and vertical market websites that have a search facility for online databases.

Contents
1 By content/topic: 1.1 General; 1.2 P2P search engines; 1.3 Metasearch engines; 1.4 Geographically limited scope; 1.5 Semantic; 1.6 Accountancy; 1.7 Business; 1.8 Computers; 1.9 Enterprise; 1.10 Fashion; 1.11 Food/Recipes; 1.12 Genealogy; 1.13 Mobile/Handheld; 1.14 Job; 1.15 Legal; 1.16 Medical; 1.17 News; 1.18 People; 1.19 Real estate / property; 1.20 Television; 1.21 Video Games
2 By information type: 2.1 Forum; 2.2 Blog; 2.3 Multimedia; 2.4 Source code; 2.5 BitTorrent; 2.6 Email; 2.7 Maps; 2.8 Price; 2.9 Question and answer

Information Retrieval, Part 1

Information Retrieval. Deepak Kumar, 11/4/2019.

Information Retrieval: Searching within a document collection for a particular needed information.

Query Search Engines: Altavista, Entireweb, Leapfish, Spezify, Ask, Excite, Lycos, Stinky Teddy, Baidu, Faroo, Maktoob, Stumpdedia, Bing, Info.com, Miner.hu, Swisscows, Blekko, Fireball, Monster Crawler, Teoma, ChaCha, Gigablast, Naver, Walla, Dogpile, Google, Omgili, WebCrawler, Daum, Go, Rediff, Yahoo!, Dmoz, Goo, Scrub The Web, Yandex, DuckDuckGo, Hakia, Seznam, Yippy, Egerin, HotBot, Sogou, Youdao, Soso.

[Figures: Search Engine Marketshare 2019; Search Engine Marketshare 2017]

Matching & Ranking: a query (for example, "muddy waters") goes through matching, which produces the matched pages (“hits”), and then ranking, which produces the ranked pages (1., 2., 3.).

Index: Inverted Index
• A mapping from content (words) to location.
• Example, with three documents:
1: the cat sat on the mat
2: the dog stood on the mat
3: the cat stood while a dog sat

Inverted index for these documents:
a: 3
cat: 1, 3
dog: 2, 3
mat: 1, 2
on: 1, 2
sat: 1, 3
stood: 2, 3
the: 1, 2, 3
while: 3

Every word in every web page is indexed!

Searching: the query "cat" is looked up in the inverted index above.
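The three-document example from these slides can be sketched in a few lines of Python. This is a minimal illustration, not the method of any particular search engine: the function names `build_inverted_index` and `search` are hypothetical, tokenization is a plain whitespace split, and treating a multi-word query as "every word must appear" is an assumption that the slides do not state.

```python
from collections import defaultdict


def build_inverted_index(docs):
    """Map each word to the sorted list of ids of documents containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        # Assumption: lowercase + whitespace split is enough for this toy corpus.
        for word in text.lower().split():
            index[word].add(doc_id)
    return {word: sorted(ids) for word, ids in index.items()}


def search(index, query):
    """Return ids of documents that contain every word of the query."""
    words = query.lower().split()
    if not words:
        return []
    # Assumption: multi-word queries are treated as AND over the posting lists.
    postings = [set(index.get(word, [])) for word in words]
    return sorted(set.intersection(*postings))


# The three documents from the slide example.
docs = {
    1: "the cat sat on the mat",
    2: "the dog stood on the mat",
    3: "the cat stood while a dog sat",
}

index = build_inverted_index(docs)
print(index["cat"])          # posting list for "cat"
print(search(index, "cat"))  # documents matching the query "cat"
```

Looking up a word is a single dictionary access, which is why the index is built once up front rather than scanning every document for each query. Ranking the matched documents is a separate step that this sketch leaves out.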

TAUS Speech-To-Speech Translation Technology Report March 2017

TAUS Speech-to-Speech Translation Technology Report, March 2017. Authors: Mark Seligman, Alex Waibel, and Andrew Joscelyne. Contributor: Anne Stoker.

COPYRIGHT © TAUS 2017. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system of any nature, or transmitted or made available in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, without the prior written permission of TAUS. TAUS will pursue copyright infringements. In spite of careful preparation and editing, this publication may contain errors and imperfections. Authors, editors, and TAUS do not accept responsibility for the consequences that may result thereof. Design: Anne-Maj van der Meer. Published by TAUS BV, De Rijp, The Netherlands. For further information, please email [email protected]

Table of Contents: Introduction; A Note on Terminology; Past; Orientation: Speech Translation Issues; Present; Interviews; Future; Development of Current Trends; New Technology: The Neural Paradigm; References; Appendix: Survey Results; About the Authors

Introduction. The dream of automatic speech-to-speech translation (S2ST), like that of automated translation in general, goes back to the origins of computing in the 1950s. Portable speech translation devices have been variously imagined as Star Trek’s “universal translator” to negotiate extraterrestrial tongues, Douglas Adams’

devices enabling on-the-spot exchanges using voice or text via smartphones – has helped promote the vision of natural communication on a globally connected planet: the ability to speak to someone (or to a robot/chatbot) in your language and be immediately understood in a foreign language.

Translation Technology Landscape Report

Translation Technology Landscape Report, April 2013. Funded by LT-Innovate.

COPYRIGHT © TAUS 2013. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system of any nature, or transmitted or made available in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, without the prior written permission of TAUS. TAUS will pursue copyright infringements. In spite of careful preparation and editing, this publication may contain errors and imperfections. Authors, editors, and TAUS do not accept responsibility for the consequences that may result thereof. Published by TAUS BV, De Rijp, The Netherlands. For further information, please email [email protected]

Target Audience: Any individual interested in the business of translation will gain from this report. The report will help beginners to understand the main uses for different types of translation technology, differentiate offerings and make informed decisions. For more experienced users and business decision-makers, the report shares insights on key trends, future prospects and areas of uncertainty. Investors and policy makers will benefit from analyses of underlying value propositions.

Report Structure: This report has been structured in discrete chapters and sections so that the reader can consume the information needed. The report does not need to be read from start to finish. The convenience afforded from such a structure also means there is some repetition.

Authors: Rahzeb Choudhury and Brian McConnell. Reviewers: Jaap van der Meer and Rose Lockwood. Our thanks to LT-Innovate for funding this report.

CONTENTS: 1. Translation Technology Landscape in a Snapshot 2.

Evaluating the Suitability of Web Search Engines As Proxies for Knowledge Discovery from the Web

Available online at www.sciencedirect.com. ScienceDirect. Procedia Computer Science 96 (2016) 169–178. 20th International Conference on Knowledge Based and Intelligent Information and Engineering Systems, KES2016, 5-7 September 2016, York, United Kingdom.

Evaluating the suitability of Web search engines as proxies for knowledge discovery from the Web. Laura Martínez-Sanahuja*, David Sánchez. UNESCO Chair in Data Privacy, Department of Computer Engineering and Mathematics, Universitat Rovira i Virgili, Av. Països Catalans, 26, 43007 Tarragona, Catalonia, Spain.

Abstract: Many researchers use the Web search engines’ hit count as an estimator of the Web information distribution in a variety of knowledge-based (linguistic) tasks. Even though many studies have been conducted on the retrieval effectiveness of Web search engines for Web users, few of them have evaluated them as research tools. In this study we analyse the currently available search engines and evaluate the suitability and accuracy of the hit counts they provide as estimators of the frequency/probability of textual entities. From the results of this study, we identify the search engines best suited to be used in linguistic research.

© 2016 The Authors. Published by Elsevier B.V. This is an open access article under the CC BY-NC-ND license (http://creativecommons.org/licenses/by-nc-nd/4.0/). Peer-review under responsibility of KES International.

Keywords: Web search engines; hit count; information distribution; knowledge discovery; semantic similarity; expert systems.

1. Introduction. Expert and knowledge-based systems rely on data to build the knowledge models required to perform inferences or answer questions.

Bing 2017: Bing Annual 2017 Free

FREE BING 2017: BING ANNUAL 2017 PDF none | 64 pages | 25 Aug 2016 | HarperCollins Publishers | 9780008122386 | English | London, United Kingdom The Bing Times | Bing Nursery School Are you interested in testing our corporate solutions? Please do not hesitate to contact me. Additional Information. Bing 2017: Bing Annual 2017 source. Show sources information Show publisher information. Market share of search engines in the United States Google vs. Microsoft Advertising: U. This feature is limited to our corporate solutions. Please contact us to get started with full access to dossiers, forecasts, studies and international data. Try our corporate solution for free! Single Accounts Corporate Solutions Universities. Popular Statistics Topics Markets. This statistic shows the worldwide search market share of Bing as of August in leading online markets. During the measured period, Bing accounted for 17 percent of search traffic in Canada. The Microsoft-owned platform accounted for nine percent of search traffic worldwide. Worldwide search market share of Bing as of Augustby country. Loading Bing 2017: Bing Annual 2017 Download for free You need to log in to download this statistic Register for free Already a member? Log in. Show detailed source information? Register for free Already a member? Show sources information. Show publisher information. More information. Supplementary notes. Other statistics on the topic. Research expert covering internet and e-commerce. Profit from additional features with an Employee Account. Please create an employee account to Bing 2017: Bing Annual 2017 able to Bing 2017: Bing Annual 2017 statistics as favorites. Then you can access your favorite statistics via the star in the header.