D. Baxter, F. Cao, H. Eliasson, J. Phillips, Development of the I3A CPIQ Spatial Metrics, Image Quality and System Performance IX, Electronic Imaging 2012

Recommended publications

Denver CMC Photography Section Newsletter

MARCH 2018 DENVER CMC PHOTOGRAPHY SECTION NEWSLETTER Wednesday, March 14: CONNIE RUDD, Photography with a Purpose. 2018 Monthly Meetings: 2nd Wednesday of the month, 7:00 p.m., AMC, 710 10th St. #200, Golden, CO. $20 Annual Dues. Steering Committee: Frank Burzynski, CMC Liaison, [email protected]; Jao van de Lagemaat, Education Coordinator, [email protected]; Janice Bennett, Newsletter and Communication Coordinator, [email protected]; Ron Hileman, Hike and Event Coordinator, [email protected]; Selma Kristel, Presentation Coordinator, [email protected]; Alex Clymer, Social Media Coordinator, [email protected]; Mark Haugen, Facilities Coordinator, [email protected]; CMC Photo Section Email, [email protected]. March Meeting: Join us Wednesday, March 14, from 7:00 to 9:00 p.m. for our meeting. CONNIE RUDD will present Photography with a Purpose: Conservation Photography that not only inspires, but can also tip the balance in favor of the protection of public lands. For our meeting on March 14, each member may submit two images from National Parks anywhere in the country. Please submit images to Janice Bennett, [email protected], by Tuesday, March 13. Please see the next page for more information about Connie Rudd. -

Digital Holography Using a Laser Pointer and Consumer Digital Camera Report Date: June 22nd, 2004

Digital Holography using a Laser Pointer and Consumer Digital Camera Report date: June 22nd, 2004 by David Garber, M.E. undergraduate, Johns Hopkins University, [email protected] Available online at http://pegasus.me.jhu.edu/~lefd/shc/LPholo/lphindex.htm Acknowledgements Faculty sponsor: Prof. Joseph Katz, [email protected] Project idea and direction: Dr. Edwin Malkiel, [email protected] Digital holography reconstruction: Jian Sheng, [email protected] Abstract The point of this project was to examine the feasibility of low-cost holography. Can viable holograms be recorded using an ordinary diode laser pointer and a consumer digital camera? How much does it cost? What are the major difficulties? I set up an in-line holographic system and recorded both still images and movies using a $600 Fujifilm Finepix S602Z digital camera and a $10 laser pointer by Lazerpro. Reconstruction of the stills shows clearly that successful holograms can be created with a low-cost optical setup. The movies did not reconstruct, due to compression and very low image resolution. Theoretical Background What is a hologram? The Merriam-Webster dictionary defines a hologram as, “a three-dimensional image reproduced from a pattern of interference produced by a split coherent beam of radiation (as a laser).” Holograms can produce a three-dimensional image, but it is more helpful for our purposes to think of a hologram as a photograph that can be refocused at any depth. So while a photograph taken of two people standing far apart would have one in focus and one blurry, a hologram taken of the same scene could be reconstructed to bring either person into focus. -

No Slide Title

December 2007 Updated 12/17/07 AT&T Mobility December ~ Washington Government WSCA – AT&T Mobility Device and Rate Plan Update for 2007 All Offers for Government Use ONLY On the attached pages you will find updates to the December WSCA pricing for Equipment and Rate Plans. Table of Contents Page 2: Voice Rate Plans Page 3: FREE Cellular Phones Page 4: Cellular Phones Page 5: BlackBerry Devices Page 6: SmartPhones (Microsoft, Nokia, Palm) Page 7: Push-to-Talk Devices Page 8: Aircards Page 9: Accessories Page 10: Nationwide Coverage Map December Highlights: • New Blackberry 8310 Curve! ~ Onboard GPS ~ Available in Titanium & Red! • New Samsung Blackjack 2 – GPS ~ Available in Black & Red! • Air Card Promotion: Sierra AC881 & Option GT Max FREE! *Certain devices may not be shown due to policy or otherwise WSCA Pricing. For more Information Contact: Rob Holden 425-580-7741 [email protected] Master Price Agreement: T07-MST-069 Prices and Promotions subject to change without notice All plans receive WSCA 20% discount on monthly recurring service charges December 2007 Updated 12/17/07 AT&T Mobility Oregon Government WSCA All plans receive 20% additional discount off of monthly recurring charges! AT&T Mobility Calling Plans REGIONAL Plan NATION Plans (Free Roaming and Long Distance Nationwide) Monthly Fee $9.99 (Rate Code ODNBRDS11) $39.99 $59.99 $79.99 $99.99 $149.99 Included mins 0 450 900 1,350 2,000 4,000 5000 N & W, Unlimited Nights & Weekends, Unlimited Mobile to 1000 Mobile to Mobile Unlim -

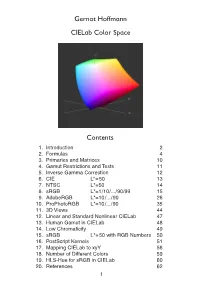

CIELab Color Space

Gernot Hoffmann CIELab Color Space Contents 1. Introduction 2. Formulas 3. Primaries and Matrices 4. Gamut Restrictions and Tests 5. Inverse Gamma Correction 6. CIE L*=50 7. NTSC L*=50 8. sRGB L*=1/10/.../90/99 9. AdobeRGB L*=10/.../90 10. ProPhotoRGB L*=10/.../90 11. 3D Views 12. Linear and Standard Nonlinear CIELab 13. Human Gamut in CIELab 14. Low Chromaticity 15. sRGB L*=50 with RGB Numbers 16. PostScript Kernels 17. Mapping CIELab to xyY 18. Number of Different Colors 19. HLS-Hue for sRGB in CIELab 20. References 1.1 Introduction CIE XYZ is an absolute color space (not device dependent). Each visible color has non-negative coordinates X,Y,Z. CIE xyY, the horseshoe diagram as shown below, is a perspective projection of XYZ coordinates onto a plane xy. The luminance is missing. CIELab is a nonlinear transformation of XYZ into coordinates L*,a*,b*. The gamut for any RGB color system is a triangle in the CIE xyY chromaticity diagram, here shown for the CIE primaries, the NTSC primaries, the Rec.709 primaries (which are also valid for sRGB and therefore for many PC monitors) and the non-physical working space ProPhotoRGB. The white points are individually defined for the color spaces. The CIELab color space was intended for equal perceptual differences for equal changes in the coordinates L*,a* and b*. Color differences deltaE are defined as Euclidean distances in CIELab. This document shows color charts in CIELab for several RGB color spaces. -
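
The excerpt's definition of deltaE as a Euclidean distance in CIELab can be illustrated with a minimal sketch. The function name `delta_e` and the sample coordinates are assumptions made for illustration; they do not come from Hoffmann's document.

```python
import math

def delta_e(lab1, lab2):
    """Euclidean distance between two (L*, a*, b*) triples."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(lab1, lab2)))

# Two hypothetical colors: a mid gray and a slightly lighter, redder one.
gray = (50.0, 0.0, 0.0)
tinted = (53.0, 4.0, 0.0)
print(delta_e(gray, tinted))  # sqrt(3^2 + 4^2 + 0^2) = 5.0
```

Because the distance weights L*, a* and b* equally, it is only as perceptually uniform as CIELab itself, which is the intent the excerpt describes.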

Sample Manuscript Showing Specifications and Style

Information capacity: a measure of potential image quality of a digital camera Frédéric Cao, Frédéric Guichard, Hervé Hornung DxO Labs, 3 rue Nationale, 92100 Boulogne Billancourt, FRANCE ABSTRACT The aim of the paper is to define an objective measurement for evaluating the performance of a digital camera. The challenge is to combine different flaws involving geometry (such as distortion or lateral chromatic aberrations), light (such as luminance and color shading), or statistical phenomena (such as noise). We introduce the concept of information capacity that accounts for all the main defects that can be observed in digital images, and that can be due either to the optics or to the sensor. The information capacity describes the potential of the camera to produce good images. In particular, digital processing can correct some flaws (like distortion). Our definition of information takes possible correction into account, as well as the fact that processing can neither retrieve lost information nor create new information. This paper extends some of our previous work where the information capacity was only defined for RAW sensors. The concept is extended to cameras with optical defects such as distortion, lateral and longitudinal chromatic aberration or lens shading. Keywords: digital photography, image quality evaluation, optical aberration, information capacity, camera performance database 1. INTRODUCTION The evaluation of a digital camera is a key factor for customers, whether they are vendors or final customers. It relies on many different factors, such as the presence or absence of certain features, ergonomics, price, or image quality. Each separate criterion is itself quite complex to evaluate, and depends on many different factors. The case of image quality is a good illustration of this topic. -

Seeing Like Your Camera

Seeing Like Your Camera PART 2 - FALL 2016 Stan Schretter [email protected] Accessing Lynda.com ● Free to Mason community ● Set your browser to lynda.gmu.edu ○ Log-in using your Mason ID and Password ● Playlists ○ My list of specific videos I recommend for homework, i.e. pre- and post-session viewing ○ Clicking on the name of the video segment will bring you immediately to Lynda.com (or the login window) ○ I recommend that you eventually watch the entire video class, since we will only use small segments of each video class Ways To Take This Course ● Each class will cover one or two topics in detail ○ Lynda.com videos cover a lot more material ○ I will email the video playlist and my charts before each class ● My Scale of Value ○ Maximum Benefit: Review Videos Before Class & Attend Lectures & Practice after Each Class ○ Less Benefit: Do not look at the Videos; Attend Lectures and Practice after Each Class ○ Some Benefit: Look at Videos; Don’t attend Lectures What Creates a Photograph ● Light ● Camera ● Composition ● Camera Setup ● Post Processing This Course - “The Shot” ● Light ● Camera ● Composition ● Camera Setup ● Post Processing This Course - “The Shot” ● Camera Setup ○ Exposure ■ “Proper” Light on the Sensor ■ Depth of Field ■ Stop or Show the Action ○ Focus ○ Getting the Color Right ■ White Balance ● Composition ○ Key Photographic Element(s) ○ Moving The Eye Through The Frame ■ Negative Space ○ Perspective ○ Story Outline of This Class Class Topics PART 1 - Summer 2016 PART 2 - Fall 2016 ● Topic 1 ○ Review of Part 1 ● Increasing Your Vision ● Brief Review of Part 1 ○ Shutter Speed, Aperture, ISO ○ Shutter Speed ● Seeing The Light ○ Composition ○ Aperture ○ Color, dynamic range, ● Topic 2 ○ ISO and White Balance histograms, backlighting, etc. -

Down and Dirty Camera Tricks Even for Your CELL PHONE

Down and Dirty Camera Tricks Even for Your CELL PHONE Educational Seminar GCSAA Presenter - John R. Johnson Creating Images That Count The often quoted Yogi said, “When you come to a Fork in the Road – Take it!” What he meant was . “When you have a Decision in Life – Make it.” Let’s Make Good Decisions with Our Cameras I Communicate With Images You’ve known me for Years. Good Photography Is How You Can Communicate This one is from the Media Moses Pointe – WA Which Is Better? Same Course, 100 yards away – Shot by a Pro Moses Pointe – WA Ten Tricks That Work . On Big Cameras Too. Note image to left is cell phone shot of me shooting in NM See, Even Pros Use Cell Phones So Let’s Get Started. I Have My EYE On YOU Photography Must-Haves Light Exposure Composition This is a Cell Phone Photograph #1 - Spectacular Light = Spectacular Photography Colbert Hills - KS Light from the side or slightly behind. Cell phones require tons of light, so be sure it is BRIGHT. Sunsets can’t hurt either. #2 – Make it Interesting Change your angle, go higher, go lower, look for the unusual. Resist the temptation to just stand and shoot. This is a Cell Phone Photograph Mt. Rainier Coming Home #2 – Make it Interesting Same trip, but I shot it from Space just before the Shuttle re-entry . OK, just kidding, but this is a real shot, on a flight so experiment and expand your vision. This is a Cell Phone Photograph #3 – Get Closer In This Case Lower Too Typically, the lens is wide angle, so things are too small, when you try to enlarge, they get blurry, so get closer to start. -

Passport Photograph Instructions by the Police

Passport photograph instructions by the police These instructions describe the technical and quality requirements on passport photographs. The same instructions apply to all facial photographs used on the passports, identity cards and permits granted by the police. The instructions are based on EU legislation and international treaties. The photographer usually sends the passport photograph directly to the police electronically, and it is linked to the application with a photograph retrieval code assigned to the photograph. You can also use a paper photograph, if you submit your application at a police service point. Contents • Photograph format • Technical requirements on the photograph • Dimensions and positioning • Posture • Lighting • Expressions, glasses, head-wear and make-up • Background Photograph format • The photograph can be a black-and-white or a colour photograph. • The dimensions of photographs submitted electronically must be exactly 500 x 653 pixels. Deviations as small as one pixel are not accepted. • Electronically submitted photographs must be saved in the JPEG format (not JPEG2000). The file extension can be either .jpg or .jpeg. • The maximum size of an electronically submitted photograph is 250 kilobytes. Technical requirements on the photograph • The photograph must be no more than six months old. • The photograph must not be manipulated in any way that would change even the smallest details in the subject’s appearance or in a way that could raise suspicions about the photograph's authenticity. Use of digital make-up is not allowed. • The photograph must be sharp and in focus over the entire face. -
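
The format rules for electronic submission (exactly 500 x 653 pixels, a .jpg or .jpeg extension, at most 250 kilobytes) lend themselves to a simple pre-submission check. The sketch below is hypothetical: the function name `check_photo` is invented here, a kilobyte is assumed to mean 1000 bytes, the extension comparison is assumed to be case-insensitive, and the check inspects only the file name rather than verifying that the data is really JPEG and not JPEG2000.

```python
from pathlib import Path

REQUIRED_SIZE = (500, 653)   # width x height in pixels, from the instructions
MAX_BYTES = 250 * 1000       # assumption: 1 kilobyte = 1000 bytes
ALLOWED_SUFFIXES = {".jpg", ".jpeg"}

def check_photo(width, height, size_bytes, filename):
    """Return a list of format problems; an empty list means none were found."""
    problems = []
    if (width, height) != REQUIRED_SIZE:
        problems.append(f"dimensions are {width} x {height}, expected 500 x 653")
    # Assumption: the extension is compared case-insensitively.
    if Path(filename).suffix.lower() not in ALLOWED_SUFFIXES:
        problems.append("file extension must be .jpg or .jpeg")
    if size_bytes > MAX_BYTES:
        problems.append("file is larger than 250 kilobytes")
    return problems

print(check_photo(500, 653, 180_000, "portrait.jpg"))
print(check_photo(501, 653, 300_000, "portrait.png"))
```

The first call reports no problems; the second reports all three, since a deviation of a single pixel is already a rejection under the stated rules.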

Preparing Images for Delivery

TECHNICAL PAPER Preparing Images for Delivery TABLE OF CONTENTS How to prepare RGB files for CMYK; Soft proofing and gamut warning; Final image sizing; Image sharpening; Converting to CMYK; What about providing RGB files?; The proof; Marking your territory; File formats for delivery; Check list for file delivery; Additional resources. So, you’ve done a great job for your client. You’ve created a nice image that you both agree meets the requirements of the layout. Now what do you do? You deliver it (so you can bill it!). But, in this digital age, how you prepare an image for delivery can make or break the final reproduction. Guess who will get the blame if the image’s reproduction is less than satisfactory? Do you even need to guess? What should photographers do to ensure that their images reproduce well in print? Take some precautions and learn the lingo so you can communicate, because a lack of crystal-clear communication is at the root of most every problem on press. It should be no surprise that knowing what the client needs is a requirement of professional photographers. But does that mean a photographer in the digital age must become a prepress expert? Kind of, if only to know exactly what to supply your clients. There are two perfectly legitimate approaches to the problem of supplying digital files for reproduction. One approach is to supply RGB files, and the other is to take responsibility for supplying CMYK files. Either approach is valid, each with positives and negatives. -

Photographic Films

PHOTOGRAPHIC FILMS A camera has been called a “magic box.” Why? Because the box captures an image that can be made permanent. Photographic films capture the image formed by light reflecting from the surface being photographed. This instruction sheet describes the nature of photographic films, especially those used in the graphic communications industries. THE STRUCTURE OF FILM Photographic films are composed of several layers. These layers include the base, the emulsion, the anti-halation backing and the protective coating. THE BASE The base, the thickest of the layers, supports the other layers. Originally, the base was made of glass. However, today the base can be made from any number of materials ranging from paper to aluminum. Photographers primarily use films with either a plastic (polyester) or paper base. Plastic-based films are commonly called “films” while paper-based films are called “photographic papers.” Polyester is a particularly suitable base for film because it is dimensionally stable. Dimensionally stable materials do not appreciably change size when the temperature or moisture level of the film changes. Films are subjected to heated liquids during processing (developing) and to heat during use in graphic processes. Therefore, dimensional stability is very important for graphic communications photographers because their final images must always match the given size. Conversely, paper is not dimensionally stable and is only appropriate as a film base when the “photographic print” is the final product (as contrasted to an intermediate step in a multi-step process). THE EMULSION The emulsion is the true “heart” of film. -

Dalmatian's Definitions

THE BLACK & WHITE PAPERS: DALMATIAN’S DEFINITIONS 8 BIT Bit depth determines the possible number of colors or tones that can be assigned to each pixel. In 8 bit files, 1 of 256 tones is assigned to each pixel. 8-bit JPEG is the default setting for most cameras; whenever possible set the camera to RAW capture for the best image capture possible. 16 BIT In 16 bit files, 1 of 65,536 tones is assigned to each pixel. 16 bit files have the greatest range of tonalities and a more realistic interpretation of continuous tone. Note the increase in tonalities from 8 bit to 16 bit. If your aim is the quality of the image, then 16 bit is a must. ADOBE 1998 COLOR SPACE Adobe ‘98 is the preferred RGB color space that places the captured and therefore printable colors within larger parameters, rendering approximately 50% of the visible color space of the human eye. Whenever possible, change the camera’s default sRGB setting to Adobe 1998 in order to increase available color capture. When an image is captured in sRGB, it is best to leave the color space unchanged so color rendering is unaffected. ARCHIVAL Archival materials are those with a neutral pH balance that will not degrade over time and are resistant to UV fading. -
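
The tone counts quoted for 8 bit and 16 bit files follow from the number of distinct values an n-bit number can hold, which is 2 raised to the power n. A minimal sketch of that arithmetic (the function name `tones_per_channel` is an illustrative assumption):

```python
def tones_per_channel(bits):
    """Number of distinct tonal values an n-bit sample can represent."""
    return 2 ** bits

for bits in (8, 16):
    print(f"{bits} bit: {tones_per_channel(bits):,} tones")
# 8 bit: 256 tones
# 16 bit: 65,536 tones
```

Going from 8 to 16 bits therefore multiplies the available tones by 256, which is the "increase in tonalities" the glossary refers to.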

Visual Mobile Communication: Camera Phone Photo Messages as Ritual Communication and Mediated Presence

Mikko Villi Visual mobile communication: Camera phone photo messages as ritual communication and mediated presence. Mikko Villi’s background is in communication studies. He has worked as a researcher at the University of Art and Design Helsinki, University of Tampere and University of Helsinki, where he has also held the position of university lecturer. Currently, he works as coordinator of educational operations at Aalto University. Villi has both researched and taught subjects related to mobile communication, visual communication, social media, multi-channel publishing and media convergence. Aalto University School of Art and Design Publication series A 103 www.taik.fi/bookshop © Mikko Villi Graphic design: Laura Villi Original cover photo: Ville Karppanen ISBN 978-952-60-0006-0 ISSN 0782-1832 Cover board: Ensocoat 250 g/m2 Paper: Munken Lynx 1.13, 120 g/m2 Typography: Chronicle Text G1 and Chronicle Text G2 Printed at WS Bookwell Ltd. Finland, Jyväskylä, 2010 Contents Acknowledgements 1 Introduction 2 Frame of research 2.1 Theoretical framework 2.1.1 Ritual communication 2.1.2 Mediated presence 2.2 Motivation and contribution of the study 2.3 Mobile communication as a field of research 2.4 Literature on photo messaging 2.5 The Arcada study 2.6 Outline of the study 3 Camera phone photography 3.1 The real camera and the spare camera 3.2 Networked photography