Learning Neural Audio Embeddings for Grounding Semantics in Auditory Perception

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

The Role of Bell Patterns in West African and Afro-Caribbean Music

Braiding Rhythms: The Role of Bell Patterns in West African and Afro-Caribbean Music A Smithsonian Folkways Lesson Designed by: Jonathan Saxon* Antelope Valley College Summary: These lessons aim to demonstrate polyrhythmic elements found throughout West African and Afro-Caribbean music. Students will listen to music from Ghana, Nigeria, Cuba, and Puerto Rico to learn how this polyrhythmic tradition followed Africans to the Caribbean as a result of the transatlantic slave trade. Students will learn the rumba clave pattern, cascara pattern, and a 6/8 bell pattern. All rhythms will be accompanied by a two-step dance pattern. Suggested Grade Levels: 9–12, college/university courses Countries: Cuba, Puerto Rico, Ghana, Nigeria Regions: West Africa, the Caribbean Culture Groups: Yoruba of Nigeria, Ga of Ghana, Afro-Caribbean Genre: West African, Afro-Caribbean Instruments: Designed for classes with no access to instruments, but sticks, mambo bells, and shakers can be added Language: English Co-Curricular Areas: U.S. history, African-American history, history of Latin American and the Caribbean (also suited for non-music majors) Prerequisites: None. Objectives: Clap and sing clave rhythm Clap and sing cascara rhythm Clap and sing 6/8 bell pattern Dance two-bar phrase stepping on quarter note of each beat in 4/4 time Listen to music from Cuba, Puerto Rico, Ghana, and Nigeria Learn where Cuba, Puerto Rico, Ghana, and Nigeria are located on a map Understand that rhythmic ideas and phrases followed Africans from West Africa to the Caribbean as a result of the transatlantic slave trade * Special thanks to Dr. Marisol Berríos-Miranda and Dr. -

TD-30 Data List

Data List Preset Drum Kit List No. Name Pad pattern No. Name Pad pattern 1 Studio 41 RockGig 2 LA Metal 42 Hard BeBop 3 Swingin’ 43 Rock Solid 4 Burnin’ 44 2nd Line 5 Birch 45 ROBO TAP 6 Nashville 46 SATURATED 7 LoudRock 47 piccolo 8 JJ’s DnB 48 FAT 9 Djembe 49 BigHall 10 Stage 50 CoolGig LOOP 11 RockMaster 51 JazzSes LOOP 12 LoudJazz 52 7/4 Beat LOOP 13 Overhead 53 :neotype: 1SHOT, TAP 14 Looooose 54 FLA>n<GER 1SHOT, TAP 15 Fusion 55 CustomWood 16 Room 56 50s King 17 [RadioMIX] 57 BluesRock 18 R&B 58 2HH House 19 Brushes 59 TechFusion 20 Vision LOOP, TAP 60 BeBop 21 AstroNote 1SHOT 61 Crossover 22 acidfunk 62 Skanky 23 PunkRock 63 RoundBdge 24 OpenMaple 64 Metal\Core 25 70s Rock 65 JazzCombo 26 DrySound 66 Spark! 27 Flat&Shallow 67 80sMachine 28 Rvs!Trashy 68 =cosmic= 29 melodious TAP 69 1985 30 HARD n’BASS TAP 70 TR-808 31 BazzKicker 71 TR-909 32 FatPressed 72 LatinDrums 33 DrumnDubStep 73 Latin 34 ReMix-ulator 74 Brazil 35 Acoutronic 75 Cajon 36 HipHop 76 African 37 90sHouse 77 Ka-Rimba 38 D-N-B LOOP 78 Tabla TAP 39 SuperLoop TAP 79 Asian 40 >>process>>> 80 Orchestra TAP Copyright © 2012 ROLAND CORPORATION All rights reserved. No part of this publication may be reproduced in any form without the written permission of ROLAND CORPORATION. Roland and V-Drums are either registered trademarks or trademarks of Roland Corporation in the United States and/or other countries. -

FA-06 and FA-08 Sound List

Contents Studio Sets . 3 Preset/User Tones . 4 SuperNATURAL Acoustic Tone . 4 SuperNATURAL Synth Tone . 5 SuperNATURAL Drum Kit . .15 PCM Synth Tone . .15 PCM Drum Kit . .23 GM2 Tone (PCM Synth Tone) . .24 GM2 Drum Kit (PCM Drum Kit) . .26 Drum Kit Key Assign List . .27 Waveforms . .40 Super NATURAL Synth PCM Waveform . .40 PCM Synth Waveform . .42 Copyright © 2014 ROLAND CORPORATION All rights reserved . No part of this publication may be reproduced in any form without the written permission of ROLAND CORPORATION . © 2014 ローランド株式会社 本書の一部、もしくは全部を無断で複写・転載することを禁じます。 2 Studio Sets (Preset) No Studio Set Name MSB LSB PC 56 Dear My Friends 85 64 56 No Studio Set Name MSB LSB PC 57 Nice Brass Sect 85 64 57 1 FA Preview 85 64 1 58 SynStr /SoloLead 85 64 58 2 Jazz Duo 85 64 2 59 DistBs /TranceChd 85 64 59 3 C .Bass/73Tine 85 64 3 60 SN FingBs/Ac .Gtr 85 64 60 4 F .Bass/P .Reed 85 64 4 61 The Begin of A 85 64 61 5 Piano + Strings 85 64 5 62 Emotionally Pad 85 64 62 6 Dynamic Str 85 64 6 63 Seq:Templete 85 64 63 7 Phase Time 85 64 7 64 GM2 Templete 85 64 64 8 Slow Spinner 85 64 8 9 Golden Layer+Pno 85 64 9 10 Try Oct Piano 85 64 10 (User) 11 BIG Stack Lead 85 64 11 12 In Trance 85 64 12 No Studio Set Name MSB LSB PC 13 TB Clone 85 64 13 1–128 INIT STUDIO 85 0 1–128 129– 14 Club Stack 85 64 14 INIT STUDIO 85 1 1–128 256 15 Master Control 85 64 15 257– INIT STUDIO 85 2 1–128 16 XYZ Files 85 64 16 384 17 Fairies 85 64 17 385– INIT STUDIO 85 3 1–128 18 Pacer 85 64 18 512 19 Voyager 85 64 19 * When shipped from the factory, all USER locations were set to INIT STUDIO . -

PERCUSSION INSTRUMENTS of the ORCHESTRA Cabasa

PERCUSSION INSTRUMENTS OF THE ORCHESTRA Cabasa - A rattle consisting of a small gourd covered with a loose network of strung beads. It is held by a handle and shaken with a rotating motion. Claves - A pair of hardwood cylinders, each approximately 20 cm. long. One cylinder rests against the fingernails of a loosely formed fist (cupped to act as a resonator) and is struck with the other cylinder. Cowbell - A metal bell, usually with straight sides and a slightly expanding, nearly rectangular cross section. A type without a clapper and played with a drumstick is most often used in orchestras. Castanets - Consists of two shell-shaped pieces of wood, the hollow sides of which are clapped together. They produce an indefinite pitch and are widely used in Spanish music especially to accompany dance. When used in an orchestra, they are often mounted on either side of a piece of wood that is held by the player and shaken. Sleigh bells - Small pellet bells mounted in rows on a piece of wood with a protruding handle. Tambourine - A shallow, single-headed frame drum with a wooden frame in which metal disks or jingles are set. It is most often held in one hand and struck with the other. Bongos - A permanently attached pair of small, single-headed, cylindrical or conical drums. One drum is of slightly larger diameter and is tuned about a fifth below the smaller. The pair is held between the knees and struck with the hands. Maracas - A Latin American rattle consisting of a round or oval-shaped vessel filled with seeds or similar material and held by a handle. -

TC 1-19.30 Percussion Techniques

TC 1-19.30 Percussion Techniques JULY 2018 DISTRIBUTION RESTRICTION: Approved for public release: distribution is unlimited. Headquarters, Department of the Army This publication is available at the Army Publishing Directorate site (https://armypubs.army.mil), and the Central Army Registry site (https://atiam.train.army.mil/catalog/dashboard) *TC 1-19.30 (TC 12-43) Training Circular Headquarters No. 1-19.30 Department of the Army Washington, DC, 25 July 2018 Percussion Techniques Contents Page PREFACE................................................................................................................... vii INTRODUCTION ......................................................................................................... xi Chapter 1 BASIC PRINCIPLES OF PERCUSSION PLAYING ................................................. 1-1 History ........................................................................................................................ 1-1 Definitions .................................................................................................................. 1-1 Total Percussionist .................................................................................................... 1-1 General Rules for Percussion Performance .............................................................. 1-2 Chapter 2 SNARE DRUM .......................................................................................................... 2-1 Snare Drum: Physical Composition and Construction ............................................. -

Strike Multipad Kit & Instrument List

Kit & Instrument List Version 1.0 Kits 001-Power Duel 009-Good Ol' Rock 017-Xonga Set 025-Mult Sidesnare 002-Cash Money Kit 010-Latin Loops 018-Drum Circle 026-Marimba 003-Knife Jogger 011-Jazz Kit 019-Orchestra 027-Bowed Celeste 004-Piper Shuffle 012-Jeff's Solo 020- Kit of India 2 028-Xylophone 005-Ham & Beans 013-Drum Corp 021-Toy Chest 029-Timpani 006-Pink Tom Phils 014-Kit of India 1 022-Mi Casa 030-Bells 007-Pluck This! 015-Synth Loops 023-Perc Table 008-Randojon 016-Hat Grease Kit 024-Jo's Drums Instruments Chinas Acoustic Sab08ChKang a SabBwellBow a Wu17Stick a Zil18OrientalEdge a Sab08ChKang b SabBwellBow b Wu17Stick b Zil18OrientalEdge b Sab18Mallet a SabBwellEdge a Wu18Stick a Zil18TrashBow a Sab18Mallet b SabBwellEdge b Wu18Stick b Zil18TrashBow b Sab18Stick a SabBwellMallet a Wu20Stick 4a Zil18TrashEdge a Sab18Stick b SabBwellMallet b Wu20Stick 4b Zil18TrashEdge b Sab20XStreme a Wu17Brush a Zil18OrientalBow a ZilK17 a Sab20XStreme b Wu17Brush b Zil18OrientalBow b Crashes Acoustic 17KDBrush 4a Trad18Bow b Z16MedBrush a Z17MedStick b 17KDBrush 4b Trad18Edge a Z16MedBrush b Z18Bow a FlangeStacker a Trad18Edge b Z16MedMallet a Z18Bow b FlangeStacker b Z16ACBrush a Z16MedMallet b Z18DarkBow a MVint18Bow a Z16ACBrush b Z16MedStick a Z18DarkBow b MVint18Bow b Z16ACMallet a Z16MedStick b Z18DarkEdge a MVint18Edge a Z16ACMallet b Z17KDMallet a Z18DarkEdge b Kit & Instrument List Page 1 Crashes Acoustic (continued) PSSab Stacker a Z16ACStick b Z17KDStick a Z18Edge b PSSab Stacker b Z16DarkBrush a Z17KDStick b Z18MedBrush a Smashed17in -

Afro-Brazilian Songs and Rhythms for General Music K-8

Dr. Christopher H. Fashun Assistant Professor of Music, Hope College ([email protected]) Afro-Brazilian Songs and Rhythms for General Music K-8 Candomblé: The Afro-Brazilian Religion of Bahia Candomblé is the Afro-Brazilian religion of Brazil that originated in the state of Bahia in northeast Brazil. It is part of the religion and culture of the Africa diaspora, which include the religions of Cuban Santeria and Vodun of Haiti. Although the spellings and pronunciations of the deities (called orixás in Candomblé) are different between the countries due to syncretism and miscegenation, they have a shared tradition of rituals, songs, rhythms, mythology, worship, and social and cultural context. Of the approximate 10 million Africans that were enslaved and brought to the Americas, 4 million of those landed in Brazil. During the years of the Trans-Atlantic slave trade (1500-1850), Africans were taken from 3 different regions. Candomblé originated in the state of Bahia and its capital city of Salvador as a result of the concentration of enslaved Africans. The religious traditions of the enslaved Africans were founded on practices from Central and West Africa, who believed in a single god that was accompanied by a pantheon of deities called Orixás. Due to the mixing of the different tribal and cultural groups, the slaves were allowed to form irmandades, which were social organizations of Catholic lay brotherhoods that allowed the slaves to gather together from their points of origin in Africa. As a result of oppression, the enslaved Africans disguised their worship by syncretizing the orixás and religious rituals with the saints of Catholicism. -

Sean Shepherd

Sean Shepherd Sean Shepherd photo © Jennifer Taylor FULL ORCHESTRA 1 FULL ORCHESTRA 1 FULL ORCHESTRA Express Abstractionism 2017 13 min Blue Blazes for orchestra 2012 8 min 3(3=picc).2.corA.2(2=EbCl).bcl.3(3=contra) - 4.3.2.btbn.1 - timp - perc(4) - hp - pno/cel - Concert opener for orchestra strings picc.2.2.corA.2.bcl.2.dbn-4.3.2.btrbn.1-timp.perc(3): 3 sm egg shaker/sm maracas/lg 9790051098385 Orchestra (full score) maracas/vibraslap/5 tgl (2sm, med, 2lg)/ant.cym/tpl.bl/ratchet/3 sus.cym/slapstick/crash cym/cast/cabasa/tamb/tam-t/claves/cowbell/anvil/SD/slide whistle/2 bongos/wdbl/brake dr/BD/sleighbells/metal bowl-harp-pft(cel)-strings World Premiere: 08 Feb 2018 Symphony Hall, Boston, MA, United States Boston Symphony Orchestra World Premiere: 31 May 2012 Conductor: Andris Nelsons Kennedy Center Concert Hall, Washington, DC, United States National Symphony Orchestra, Washington Availability: This work is available from Boosey & Hawkes for the world Conductor: Christoph Eschenbach Magiya Availability: This work is available from Boosey & Hawkes for the world 2013 7 min Concerto for Ensemble for youth orchestra 2014-15 28 min maximum instrumentation: 3.3.3.3-4.3.3.1-timp-perc(4)-strings Concerto for ensemble 2(II=picc).2(II=corA).2(II=Ebcl).bcl.2-2.2.2.1-3perc:vib/anvil/2brake.drum/2susp.cym/ta World Premiere: 11 Jul 2013 mb/tpl.bl/sandpaper/3tgl/3wdbl/marimba/t.bells/3metal pipe/BD/egg Performing Arts Center - SUNY Purchase College, Purchase, NY, United States shaker/ratchet/2glass jars/xyl/glsp/sleigh bells/bell plate/splash National -

The Legacy of S Ta N K E N to N

THE UNITED STATES ARMY FIELD BAND JAZZ AMBASSADORS The Legacy of S TA N K ENTON Washington, D.C. “The Musical Ambassadors of the Army” he Jazz Ambassadors is the Concerts, school assemblies, clinics, T United States Army’s premier music festivals, and radio and televi- touring jazz orchestra. As a component sion appearances are all part of the Jazz of The United States Army Field Band Ambassadors’ yearly schedule. of Washington, D.C., this internation- Many of the members are also com- ally acclaimed organization travels thou- posers and arrangers whose writing helps sands of miles each year to present jazz, create the band’s unique sound. Concert America’s national treasure, to enthusi- repertoire includes big band swing, be- astic audiences throughout the world. bop, contemporary jazz, popular tunes, The band has performed in all fifty and dixieland. states, Canada, Mexico, Europe, Ja- Whether performing in the United pan, and India. Notable performances States or representing our country over- include appearances at the Montreux, seas, the band entertains audiences of all Brussels, North Sea, Toronto, and New- ages and backgrounds by presenting the port jazz festivals. American art form, jazz. The Legacy of Stan Kenton About this recording The Jazz Ambassadors of The United States Army Field Band presents the first in a series of recordings honoring the lives and music of individuals who have made significant contri- butions to big band jazz. Designed primarily as educational resources, these record- ings are a means for young musicians to know and appreciate the best of the music and musicians of previous generations, and to understand the stylistic developments leading to today’s litera- ture in ensemble music. -

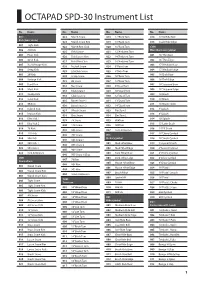

OCTAPAD SPD-30 Instrument List

OCTAPAD SPD-30 Instrument List No. Name No. Name No. Name No. Name KIK: 022 March Snare 018 12”Rock Tom 014 Sizzle Ride Bell Kick (bass drum) 023 March Snare Rim 019 13”Rock Tom 015 Sizzle Ride Edge 001 Tight Kick 024 March Rim Click 020 16”Rock Tom CYM: 002 Alt Kick Miscellaneous cymbal 025 Field Snare 021 12”Ambient Tom 003 Fiber Kick 001 16”Thin Bow 026 Field Snare Rim 022 14”Ambient Tom 004 Birch Kick 002 16”Thin Edge 027 Field Rim Click 023 16”Ambient Tom 005 RockVintage Kick 003 17”Medium Bow 028 NuJack Snare 024 6”Roto Tom 006 Deep Kick 004 17”Medium Edge 029 LiteClub Snare 025 8”Roto Tom 007 20”Kick 005 18”Dark Bow 030 2step Snare 026 10”Roto Tom 008 Vintage Kick 006 18”Dark Edge 031 BB Snare 027 12”Roto Tom 009 Hard Kick 007 18”Suspend Bow 032 Box Snare 028 6”Quad Tom 010 Meat Kick 008 18”Suspend Edge 033 Club Snare 1 029 10”Quad Tom 011 NuHip Kick 009 16”Brush 034 Club Snare 2 030 12”Quad Tom 012 Solid Kick 010 18”Brush 035 Barrel Snare 1 031 13”Quad Tom 013 BB Kick 011 18”Brush Sizzle 036 Barrel Snare 2 032 14”Quad Tom 014 Hybrid Kick 012 6”Splash 037 Whack Snare 033 ElecTom 1 015 Impact Kick 013 8”Splash 038 Elec Snare 034 ElecTom 2 016 Elec Kick 1 014 10”Splash 039 78 Snare 035 808Tom 017 Elec Kick 2 015 13”Latin Crash 040 110 Snare 036 909Tom 018 78 Kick 016 14”FX Crash 041 606 Snare 037 Tom Ambience 019 110 Kick 017 16”China Cymbal 042 707 Snare HH: 020 808 Kick Hi-hat cymbal 018 18”Swish Cymbal 043 808 Snare 1 021 909 Kick 1 001 Med Hihat Bow 019 3 Layered Crash 044 808 Snare 2 022 909 Kick 2 002 Med Hihat Edge 020 4”Accent -

Medium of Performance Thesaurus for Music

A clarinet (soprano) albogue tubes in a frame. USE clarinet BT double reed instrument UF kechruk a-jaeng alghōzā BT xylophone USE ajaeng USE algōjā anklung (rattle) accordeon alg̲hozah USE angklung (rattle) USE accordion USE algōjā antara accordion algōjā USE panpipes UF accordeon A pair of end-blown flutes played simultaneously, anzad garmon widespread in the Indian subcontinent. USE imzad piano accordion UF alghōzā anzhad BT free reed instrument alg̲hozah USE imzad NT button-key accordion algōzā Appalachian dulcimer lõõtspill bīnõn UF American dulcimer accordion band do nally Appalachian mountain dulcimer An ensemble consisting of two or more accordions, jorhi dulcimer, American with or without percussion and other instruments. jorī dulcimer, Appalachian UF accordion orchestra ngoze dulcimer, Kentucky BT instrumental ensemble pāvā dulcimer, lap accordion orchestra pāwā dulcimer, mountain USE accordion band satāra dulcimer, plucked acoustic bass guitar BT duct flute Kentucky dulcimer UF bass guitar, acoustic algōzā mountain dulcimer folk bass guitar USE algōjā lap dulcimer BT guitar Almglocke plucked dulcimer acoustic guitar USE cowbell BT plucked string instrument USE guitar alpenhorn zither acoustic guitar, electric USE alphorn Appalachian mountain dulcimer USE electric guitar alphorn USE Appalachian dulcimer actor UF alpenhorn arame, viola da An actor in a non-singing role who is explicitly alpine horn USE viola d'arame required for the performance of a musical BT natural horn composition that is not in a traditionally dramatic arará form. alpine horn A drum constructed by the Arará people of Cuba. BT performer USE alphorn BT drum adufo alto (singer) arched-top guitar USE tambourine USE alto voice USE guitar aenas alto clarinet archicembalo An alto member of the clarinet family that is USE arcicembalo USE launeddas associated with Western art music and is normally aeolian harp pitched in E♭. -

Billie Holiday: a Biography

Billie Holiday: A Biography Meg Greene Greenwood Press BILLIE HOLIDAY Recent Titles in Greenwood Biographies Jane Goodall: A Biography Meg Greene The Leakeys: A Biography Mary Bowman-Kruhm Arthur Ashe: A Biography Richard Steins Cesar Chavez: A Biography Roger Bruns F. Scott Fitzgerald: A Biography Edward J. Rielly Saddam Hussein: A Biography Shiva Balaghi Tiger Woods: A Biography Lawrence J. Londino Mohandas K. Gandhi: A Biography Patricia Cronin Marcello Muhammad Ali: A Biography Anthony O. Edmonds Martin Luther King, Jr.: A Biography Roger Bruns Wilma Rudolph: A Biography Maureen M. Smith Condoleezza Rice: A Biography Jacqueline Edmondson Arnold Schwarzenegger: A Biography Louise Krasniewicz and Michael Blitz BILLIE HOLIDAY A Biography By Meg Greene GREENWOOD BIOGRAPHIES GREENWOOD PRESS WESTPORT, CONNECTICUT • LONDON Library of Congress Cataloging-in-Publication Data Greene, Meg. Billie Holiday: a biography / By Meg Greene. p. cm. —(Greenwood biographies) Includes bibliographical references and index. ISBN 0–313–33629–6 (alk. paper) 1. Holiday, Billie, 1915–1959. 2. Singers—United States—Biography. I. Title. ML420.H58G68 2007 782.42165092—dc22 [B] 2006025382 British Library Cataloguing in Publication Data is available. Copyright © 2007 by Meg Greene All rights reserved. No portion of this book may be reproduced, by any process or technique, without the express written consent of the publisher. Library of Congress Catalog Card Number: 2006025382 ISBN 13: 978–0–313–33629–4 ISBN 10: 0–313–33629–6 ISSN: 1540–1900 First published in 2007 Greenwood Press, 88 Post Road West, Westport, CT 06881 An imprint of Greenwood Publishing Group, Inc. www.greenwood.com Printed in the United States of America The paper used in this book complies with the Permanent Paper Standard issued by the National Information Standards Organization (Z39.48–1984).