AMFS 2020 Perc Diagram

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

C:\Documents and Settings\Jeff Snyder.WITOLD\My Documents

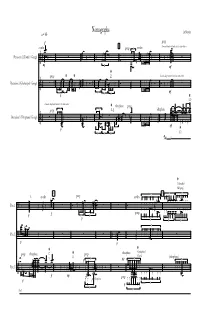

Nomographs q = 66 Jeff Snyder gongs 5 crotalesf gongs crotales diamond-shaped noteheads indicate rattan beaters 5 Percussion 1 (Crotales / Gongs) 5 P F D diamond-shaped noteheads indicate rattan beaters gongs _ Percussion 2 (Glockenspiel / Gongs) 3 3 5 5 5 F p G diamond-shaped noteheads indicate rattan beaters 5 vibraphone gongs gongs 3 D, A_ vibraphone Percussion 3 (Vibraphone / Gongs) 5 F p 5 5 5 E A (crotales) A# (gong) 10 7 15 7 7 l.v. crotales gongs crotales Perc.1 gongs 5 5 f p p 7 7 7 7 Perc.2 p p A (vibraphone) gongs vibraphone C gongs vibraphone G E (gong) (vibraphone) 5 7 7 p pp Perc.3 gongs f P vibraphone p 7 p 5 (Ped.) p 7 2 (rattan on both side (damp all) A (crotales) 20 5 5 3 and center of gong) gongs 3 Perc.1 3 3 f (rattan on both side (l.v.) C# and center of gong) 3 3 3 3 3 3 Perc.2 vibraphone D (vibraphone) F vibraphone (damp all) 3 D# (gong) gong vibraphone C# gongs (vibraphone) Perc.3 3 3 5 5 gongs 3 5 25 crotales 5 30 B (rattan on side) (all) gongs 3 Perc.1 5 (rattan on both side 5 5 gongs 5 p 5 5 5 f (rattan on side) and center of gong) (all) -

University of Cincinnati

UNIVERSITY OF CINCINNATI Date: 29-Apr-2010 I, Tyler B Walker , hereby submit this original work as part of the requirements for the degree of: Doctor of Musical Arts in Composition It is entitled: An Exhibition on Cheerful Privacies Student Signature: Tyler B Walker This work and its defense approved by: Committee Chair: Mara Helmuth, DMA Mara Helmuth, DMA Joel Hoffman, DMA Joel Hoffman, DMA Mike Fiday, PhD Mike Fiday, PhD 5/26/2010 587 An Exhibition On Cheerful Privacies Three Landscapes for Tape and Live Performers Including Mezzo-Soprano, Bb Clarinet, and Percussion A dissertation submitted to the Division of Research and Advanced Studies of the University of Cincinnati in partial fulfillment of the requirements for the degree of Doctor of Musical Arts in the Division of Composition, Musicology, and Theory of the College-Conservatory of Music 2010 by Tyler Bradley Walker M.M. Georgia State University January 2004 Committee Chair: Mara Helmuth, DMA Abstract This document consists of three-musical landscapes totaling seventeen minutes in length. The music is written for a combination of mezzo-soprano, Bb clarinet, percussion and tape. One distinguishing characteristic of this music, apart from an improvisational quality, involves habituating the listener through consistent dynamics; the result is a timbrally-diverse droning. Overall, pops, clicks, drones, and resonances converge into a direct channel of aural noise. One of the most unique characteristics of visual art is the strong link between process and esthetic outcome. The variety of ways to implement a result is astounding. The musical landscapes in this document are the result of an interest in varying my processes; particularly, moving mostly away from pen and manuscript to the sequencer. -

The Percussion Family 1 Table of Contents

THE CLEVELAND ORCHESTRA WHAT IS AN ORCHESTRA? Student Learning Lab for The Percussion Family 1 Table of Contents PART 1: Let’s Meet the Percussion Family ...................... 3 PART 2: Let’s Listen to Nagoya Marimbas ...................... 6 PART 3: Music Learning Lab ................................................ 8 2 PART 1: Let’s Meet the Percussion Family An orchestra consists of musicians organized by instrument “family” groups. The four instrument families are: strings, woodwinds, brass and percussion. Today we are going to explore the percussion family. Get your tapping fingers and toes ready! The percussion family includes all of the instruments that are “struck” in some way. We have no official records of when humans first used percussion instruments, but from ancient times, drums have been used for tribal dances and for communications of all kinds. Today, there are more instruments in the percussion family than in any other. They can be grouped into two types: 1. Percussion instruments that make just one pitch. These include: Snare drum, bass drum, cymbals, tambourine, triangle, wood block, gong, maracas and castanets Triangle Castanets Tambourine Snare Drum Wood Block Gong Maracas Bass Drum Cymbals 3 2. Percussion instruments that play different pitches, even a melody. These include: Kettle drums (also called timpani), the xylophone (and marimba), orchestra bells, the celesta and the piano Piano Celesta Orchestra Bells Xylophone Kettle Drum How percussion instruments work There are several ways to get a percussion instrument to make a sound. You can strike some percussion instruments with a stick or mallet (snare drum, bass drum, kettle drum, triangle, xylophone); or with your hand (tambourine). -

Quickstart Guide EN

Quickstart Guide EN Hello! Contents IMPORTANT SAFETY INSTRUCTIONS . 4 Important Information . 5. Assembling . 6 Packing List . .6 . Assembly . 7 Attaching the Beater Patch . 9 Tuning the Head Tension . 9 Connection . 10 Basic Operation . 12 Panel Description . 12 Power On/Off . .12 . Home Screen . .13 . Volume . 13 Inst Info . 13 . Click (Metronome) . 13 . Recorder . 13 Menu . 14 Power Menu . 14 Changing the Instrument . 15 . Adjusting the Pad Levels . .15 . Connecting Your Smart Device with Bluetooth . 16. Adjusting the Display (LCD) Settings . 17 Changing the Power Save Setting . 17. Adjusting the Hi-Hat . 17 Specifications . .18 . Support . 19 . For the EFNOTE 5 Reference Guide or other latest information, please refer to the EFNOTE 5 website . ef-note.com/products/drums/EFNOTE5/efnote5.html IMPORTANT SAFETY INSTRUCTIONS IMPORTANT SAFETY INSTRUCTIONS FAILURE TO OBSERVE THE FOLLOWING SAFETY INSTRUCTIONS MAY RESULT IN FIRE, ELECTRIC SHOCK, INJURY TO PERSONS, OR DAMAGE TO OTHER ITEMS OR PROPERTY . You must read and follow these instructions, heed all warnings, before using this product . Please keep this guide for future reference . About warnings and cautions About the symbols WARNING Indicates a hazard that could result "Caution": Calls your attention to a point of in death or serious injury caution CAUTION Indicates a hazard that could result "Do not . ":. Indicates a prohibited action in injury or property damage "You must . ":. Indicates a required action WARNING CAUTION To reduce the risk of fire or electric shock, do Do not place the product in an unstable not expose this product to rain or moisture location Do not use or store in the following locations Doing so may cause the product to overturn, causing personal injury . -

The Role of Bell Patterns in West African and Afro-Caribbean Music

Braiding Rhythms: The Role of Bell Patterns in West African and Afro-Caribbean Music A Smithsonian Folkways Lesson Designed by: Jonathan Saxon* Antelope Valley College Summary: These lessons aim to demonstrate polyrhythmic elements found throughout West African and Afro-Caribbean music. Students will listen to music from Ghana, Nigeria, Cuba, and Puerto Rico to learn how this polyrhythmic tradition followed Africans to the Caribbean as a result of the transatlantic slave trade. Students will learn the rumba clave pattern, cascara pattern, and a 6/8 bell pattern. All rhythms will be accompanied by a two-step dance pattern. Suggested Grade Levels: 9–12, college/university courses Countries: Cuba, Puerto Rico, Ghana, Nigeria Regions: West Africa, the Caribbean Culture Groups: Yoruba of Nigeria, Ga of Ghana, Afro-Caribbean Genre: West African, Afro-Caribbean Instruments: Designed for classes with no access to instruments, but sticks, mambo bells, and shakers can be added Language: English Co-Curricular Areas: U.S. history, African-American history, history of Latin American and the Caribbean (also suited for non-music majors) Prerequisites: None. Objectives: Clap and sing clave rhythm Clap and sing cascara rhythm Clap and sing 6/8 bell pattern Dance two-bar phrase stepping on quarter note of each beat in 4/4 time Listen to music from Cuba, Puerto Rico, Ghana, and Nigeria Learn where Cuba, Puerto Rico, Ghana, and Nigeria are located on a map Understand that rhythmic ideas and phrases followed Africans from West Africa to the Caribbean as a result of the transatlantic slave trade * Special thanks to Dr. Marisol Berríos-Miranda and Dr. -

Shadow of the Sun for Large Orchestra

Shadow of the Sun for Large Orchestra A dissertation submitted to the Graduate School of the University of Cincinnati In partial fulfillment of the requirements for the degree of Doctor of Musical Arts in the Department of Composition, Musicology, and Theory of the College-Conservatory of Music by Hojin Lee M.F.A. University of California, Irvine November 2019 Committee Chair: Douglas Knehans, D.M.A. Abstract It was a quite astonishing moment of watching total solar eclipse in 2017. Two opposite universal images have been merged and passed by each other. It was exciting to watch and inspired me to write a piece about that image ii All rights are reserved by Hojin Lee, 2018 iii Table of Contents Abstract ii Table of Contents iv Cover page 1 Program note 2 Instrumentation 3 Shadow of the Sun 4 Bibliography 86 iv The Shadow of the Sun (2018) Hojin Lee Program Note It was a quite astonishing moment of watching total solar eclipse in 2017. Two opposite universal images have been merged and passed by each other. It was very exciting to watch and inspired me to write a piece about that image. Instrumentation Flute 1 Flute 2 (Doubling Piccolo 2) Flute 3 (Doubling Piccolo 1) Oboe 1 Oboe2 English Horn Eb Clarinet Bb Clarinet 1 Bb Clarinet 2 Bass Clarinet Bassoon 1 Bassoon 2 Contra Bassoon 6 French Horns 4 Trumpets 2 Trombones 1 Bass Trombone 1 Tuba Timpani : 32” 28” 25” 21” 4 Percussions: Percussion 1: Glockenspiel, Vibraphone, Crotales (Two Octaves) Percussion 2: Xylophone, Triangle, Marimba, Suspended Cymbals Percussion 3: Triangle, Vibraphone, -

Learning Neural Audio Embeddings for Grounding Semantics in Auditory Perception

Journal of Artificial Intelligence Research 60 (2017) 1003-1030 Submitted 8/17; published 12/17 Learning Neural Audio Embeddings for Grounding Semantics in Auditory Perception Douwe Kiela [email protected] Facebook Artificial Intelligence Research 770 Broadway, New York, NY 10003, USA Stephen Clark [email protected] Computer Laboratory, University of Cambridge 15 JJ Thomson Avenue, Cambridge CB3 0FD, UK Abstract Multi-modal semantics, which aims to ground semantic representations in perception, has relied on feature norms or raw image data for perceptual input. In this paper we examine grounding semantic representations in raw auditory data, using standard evaluations for multi-modal semantics. After having shown the quality of such auditorily grounded repre- sentations, we show how they can be applied to tasks where auditory perception is relevant, including two unsupervised categorization experiments, and provide further analysis. We find that features transfered from deep neural networks outperform bag of audio words approaches. To our knowledge, this is the first work to construct multi-modal models from a combination of textual information and auditory information extracted from deep neural networks, and the first work to evaluate the performance of tri-modal (textual, visual and auditory) semantic models. 1. Introduction Distributional models (Turney & Pantel, 2010; Clark, 2015) have proved useful for a variety of core artificial intelligence tasks that revolve around natural language understanding. The fact that such models represent the meaning of a word as a distribution over other words, however, implies that they suffer from the grounding problem (Harnad, 1990); i.e. they do not account for the fact that human semantic knowledge is grounded in the perceptual system (Louwerse, 2008). -

TD-30 Data List

Data List Preset Drum Kit List No. Name Pad pattern No. Name Pad pattern 1 Studio 41 RockGig 2 LA Metal 42 Hard BeBop 3 Swingin’ 43 Rock Solid 4 Burnin’ 44 2nd Line 5 Birch 45 ROBO TAP 6 Nashville 46 SATURATED 7 LoudRock 47 piccolo 8 JJ’s DnB 48 FAT 9 Djembe 49 BigHall 10 Stage 50 CoolGig LOOP 11 RockMaster 51 JazzSes LOOP 12 LoudJazz 52 7/4 Beat LOOP 13 Overhead 53 :neotype: 1SHOT, TAP 14 Looooose 54 FLA>n<GER 1SHOT, TAP 15 Fusion 55 CustomWood 16 Room 56 50s King 17 [RadioMIX] 57 BluesRock 18 R&B 58 2HH House 19 Brushes 59 TechFusion 20 Vision LOOP, TAP 60 BeBop 21 AstroNote 1SHOT 61 Crossover 22 acidfunk 62 Skanky 23 PunkRock 63 RoundBdge 24 OpenMaple 64 Metal\Core 25 70s Rock 65 JazzCombo 26 DrySound 66 Spark! 27 Flat&Shallow 67 80sMachine 28 Rvs!Trashy 68 =cosmic= 29 melodious TAP 69 1985 30 HARD n’BASS TAP 70 TR-808 31 BazzKicker 71 TR-909 32 FatPressed 72 LatinDrums 33 DrumnDubStep 73 Latin 34 ReMix-ulator 74 Brazil 35 Acoutronic 75 Cajon 36 HipHop 76 African 37 90sHouse 77 Ka-Rimba 38 D-N-B LOOP 78 Tabla TAP 39 SuperLoop TAP 79 Asian 40 >>process>>> 80 Orchestra TAP Copyright © 2012 ROLAND CORPORATION All rights reserved. No part of this publication may be reproduced in any form without the written permission of ROLAND CORPORATION. Roland and V-Drums are either registered trademarks or trademarks of Roland Corporation in the United States and/or other countries. -

Product Guide 2020

Product Guide 2020 ZILDJIAN 2020 PRODUCT GUIDE CYMBAL FAMILIES 3 K FAMILY 5 A FAMILY 13 FX FAMILY 17 S FAMILY 19 I FAMILY 21 PLANET Z 23 L80 LOW VOLUME 25 CYMBAL PACKS 27 GEN16 29 BAND & ORCHESTRAL CYMBALS 31 GEAR & ACCESSORIES 57 DRUMSTICKS 41 PRODUCT LISTINGS 59 1 Product Guide 2 THE CYMBAL FAMILY 3 Product Guide 4 THE FAMILY K ZILDJIAN CYMBALS K Zildjian cymbals are known for their dark, warm sounds that harkens back to the original K cymbals developed by Zildjian in 19th Century Turkey. Instantly recognizable by their ˝vented K˝ logo, K cymbals capture the aura of original Ks but with far greater consistency, making them the choice of drummers from genres as diverse as Jazz, Country and Rock. RIDES SIZES CRASHES SIZES HIHATS SIZES EFFECTS SIZES Crash Ride 18˝ 20˝ 21˝ Splash 8˝ 10˝ 12˝ HiHats 13˝ 14˝ Mini China 14˝ Ride 20˝ 22˝ Dark Crash Thin 15˝ 16˝ 17˝ 18˝ 19˝ 20˝ K/Z Special HiHats 13˝ 14˝ EFX 16˝ 18˝ Heavy Ride 20˝ Dark Crash Medium Thin 16˝ 17˝ 18˝ Mastersound HiHats 14˝ China 17˝ 19˝ Light Ride 22˝ 24˝ Cluster Crash 16˝ 18˝ 20˝ Light HiHats 14˝ 15˝ 16˝ Dark Medium Ride 22˝ Sweet Crash 16˝ 17˝ 18˝ 19˝ 20˝ Sweet HiHats 14˝ 15˝ 16˝ Light Flat Ride 20˝ Sweet Ride 21˝ 23˝ DETAILS: Exclusive K Zildjian random hammering, traditional wide groove lathing, all Traditional except 21” Crash Ride 6 SPECIAL DRY K CUSTOM CYMBALS K Custom cymbals are based on the darker, dryer sounds of the legendary K line but have been customized with unique finishes, K CUSTOM SPECIAL DRY CYMBALS tonal modifications, and manufacturing techniques. -

KASHCHEI for Nine Instruments and Electronics

KASHCHEI For Nine Instruments and Electronics Nina C. Young November 2010 KASHCHEI for nine instruments and electronics November 2010 Approximate duration: 18:00 Premiered February 8, 2011, Live@CIRMMT – Montreal, Canada Instrumentation: Program Notes: flute (+ piccolo) Kashchei, for nine instruments and electronics, was written by Nina C. Young in 2010 in partial fulfillment of the Master’s of Music degree at McGill University under the supervision of Prof. Sean clarinet in B (+ bass clarinet) b Ferguson. The piece is a representation of Kashchei – a character from Russian folklore who makes an trumpet in C (+ piccolo trumpet; straight and harmon mutes) appearance in many popular fairytales or skazki. H is a dark, evil person of ugly, senile appearance who principally menaces young women. Kashchei cannot be killed by conventional means targeting his body. 2 percussion: Rather, the essence of his life is hidden outside of his flesh in a needle within an egg. Only by finding this I – vibraphone egg and breaking the needle can one overcome Kashchei’s powers. In one skazka, the princess Tsarevna’s I – almglocken (F4, G#4, A4, C5, D#5, E5, F5, G5, A5, B5, C#6) Darissa asks Kashchei where his death lies. Infatuated by her beauty, he let’s down his guard and I – crotales (E5, F5) eventually explains, “My death is far from hence, and hard to find, on the ocean wide: in that sea is the island of I – triangle I – wind chime Buyan, and upon this island there grows a green oak, and beneath this oak is an iron chest, and in this chest is a small I – splash cymbal basket, and in this basket is a hare, and in this hare is a duck, and in this duck is an egg; and he who finds this egg and I – suspended cymbal (medium) breaks it, at the same instant causes my death.” My own piece explores the seven layers and death of Kashchei. -

Percussion Primer / Triangle by Neil Grover

Percussion Primer / Triangle by Neil Grover SELECTION The triangle should be the highest, non-pitched member of the percussion family. Sizes range from 4” to 10”, however, the best size for concert playing is between 6” and 9”. A larger triangle provides a bigger internal working area for easier execution, however, it is heavier and more difficult to control. Triangles are made from steel, brass or bronze, each producing a different sonority. SUSPENSION A triangle needs to be suspended so that it vibrates unencumbered and freely, al- lowing maximum overtone resonance to be produced. It is very important that the instrument be suspended using a very thin, yet strong, mono-filament line. Fishing line works great and is also inexpensive. The use of string, cable, shoelaces, etc. will effec- tively dampen the resonance of any triangle. Using a second “catch line” will pre- vent the triangle from falling to the floor, should the primary line break. A light “triangle clip” will allow the triangle to be mounted on a music stand when not in use. STROKE The triangle should never be played when mounted on a music stand. It should al- ways be held at eye level and struck on the bottom leg with a motion that “pushes away” the bottom leg. This method will produce the maximum overtone sonority. A triangle sound full of overtones will blend with other instruments. Remember, a tri- angle is a non-pitched instrument and should have a very lush array of overtones, it should not sound like a bell! BEATERS Beaters are available in a large variety of sizes (diameters), materials and shapes. -

Ludwig Musser Concert Percussion 2013 Catalog

Welcome to the world of Ludwig/Musser Concert Percussion. The instruments in this catalog represent the finest quality and sound in percussion instruments today from a company that has been making instruments and accessories in the USA for decades. Ludwig is “The Most famous Name in Drums” since 1909 and Musser is “First in Class” for mallet percussion since 1948. Ludwig & Musser aren’t just brand names, they are men’s names. William F. Ludwig Sr. & William F. Ludwig II were gifted percussionists and astute businessmen who were innovators in the world of percussion. Clair Omar Musser was also a visionary mallet percussionist, composer, designer, engineer and leader who founded the Musser Company to be the American leader in mallet instruments. Both companies originated in the Chicago area. They joined forces in the 1960’s and originated the concept of “Total Percussion." With our experience as a manufacturer, we have a dedicated staff of craftsmen and marketing professionals that are sensitive to the needs of the percussionist. Several on our staff are active percussionists today and have that same passion for excellence in design, quality and performance as did our founders. We are proud to be an American company competing in a global economy. This Ludwig Musser Concert Percussion Catalog is dedicated to the late William F. Ludwig II Musser Marimbas, Xylophones, Chimes, Bells, & Vibraphones are available in “The Chief.” His vision for a “Total Percussion” a wide range of sizes and models to completely satisfy the needs of beginners, company was something he created at Ludwig schools, universities and professionals.