Semantic Web Based Named Entity Linking for Digital Humanities and Heritage Texts

Francesca Frontini, Carmen Brando, Jean-Gabriel Ganascia

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

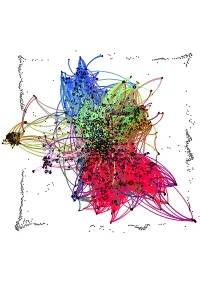

Network Map of Knowledge And

Humphry Davy George Grosz Patrick Galvin August Wilhelm von Hofmann Mervyn Gotsman Peter Blake Willa Cather Norman Vincent Peale Hans Holbein the Elder David Bomberg Hans Lewy Mark Ryden Juan Gris Ian Stevenson Charles Coleman (English painter) Mauritz de Haas David Drake Donald E. Westlake John Morton Blum Yehuda Amichai Stephen Smale Bernd and Hilla Becher Vitsentzos Kornaros Maxfield Parrish L. Sprague de Camp Derek Jarman Baron Carl von Rokitansky John LaFarge Richard Francis Burton Jamie Hewlett George Sterling Sergei Winogradsky Federico Halbherr Jean-Léon Gérôme William M. Bass Roy Lichtenstein Jacob Isaakszoon van Ruisdael Tony Cliff Julia Margaret Cameron Arnold Sommerfeld Adrian Willaert Olga Arsenievna Oleinik LeMoine Fitzgerald Christian Krohg Wilfred Thesiger Jean-Joseph Benjamin-Constant Eva Hesse `Abd Allah ibn `Abbas Him Mark Lai Clark Ashton Smith Clint Eastwood Therkel Mathiassen Bettie Page Frank DuMond Peter Whittle Salvador Espriu Gaetano Fichera William Cubley Jean Tinguely Amado Nervo Sarat Chandra Chattopadhyay Ferdinand Hodler Françoise Sagan Dave Meltzer Anton Julius Carlson Bela Cikoš Sesija John Cleese Kan Nyunt Charlotte Lamb Benjamin Silliman Howard Hendricks Jim Russell (cartoonist) Kate Chopin Gary Becker Harvey Kurtzman Michel Tapié John C. Maxwell Stan Pitt Henry Lawson Gustave Boulanger Wayne Shorter Irshad Kamil Joseph Greenberg Dungeons & Dragons Serbian epic poetry Adrian Ludwig Richter Eliseu Visconti Albert Maignan Syed Nazeer Husain Hakushu Kitahara Lim Cheng Hoe David Brin Bernard Ogilvie Dodge Star Wars Karel Capek Hudson River School Alfred Hitchcock Vladimir Colin Robert Kroetsch Shah Abdul Latif Bhittai Stephen Sondheim Robert Ludlum Frank Frazetta Walter Tevis Sax Rohmer Rafael Sabatini Ralph Nader Manon Gropius Aristide Maillol Ed Roth Jonathan Dordick Abdur Razzaq (Professor) John W. -

PDF the Migration of Literary Ideas: the Problem of Romanian Symbolism

The Migration of Literary Ideas: The Problem of Romanian Symbolism Cosmina Andreea Roșu ABSTRACT: The migration of Symbolist ideas into the Romanian literary field during the 1900s occurred mostly through poets. One of the Symbolist poets influenced by French literature (the core of Symbolism) and its representatives is Dimitrie Anghel. He handles symbols masterfully throughout his writings, both in poetry and in prose. His vivid imagination and fantasy reinterpret symbols from a specifically Romanian point of view. His approach to Symbolist ideas emerges from his translations of French authors but also from his original writings, since he makes a new attempt to penetrate another sequence of the consciousness. Dimitrie Anghel learned the new poetics during his years-long stay in France. KEY WORDS: writing, ideas, prose poem, symbol, fantasy. At the beginning of the twentieth century, Romanian literature was dominated by Eminescu and his epigones, and the effects of Al. Macedonski’s efforts to impose a new poetry were visible when Dimitrie Anghel left for Paris. Nicolae Iorga was trying to initiate a new nationalist movement, and D. Anghel was blamed for leaving and detaching himself from what was happening in the country. But he fought this idea in his texts, making ironical remarks about those who were eagerly going away from their native land (“Youth” – „Tinereță“, “Looking at a Terrestrial Sphere” – „Privind o sferă terestră“, “The Land” – „Pământul“). He settled down for several years in Paris, which he called “a literary Babel tower”. His work offers important facts about this period. -

World Literature According to Wikipedia: Introduction to a DBpedia-Based Framework

World Literature According to Wikipedia: Introduction to a DBpedia-Based Framework Christoph Hube,1 Frank Fischer,2 Robert Jäschke,1,3 Gerhard Lauer,4 Mads Rosendahl Thomsen5 1 L3S Research Center, Hannover, Germany 2 National Research University Higher School of Economics, Moscow, Russia 3 University of Sheffield, United Kingdom 4 Göttingen Centre for Digital Humanities, Germany 5 Aarhus University, Denmark (arXiv:1701.00991v1 [cs.IR], 4 Jan 2017) Among the manifold takes on world literature, it is our goal to contribute to the discussion from a digital point of view by analyzing the representation of world literature in Wikipedia with its millions of articles in hundreds of languages. As a preliminary, we introduce and compare three different approaches to identify writers on Wikipedia using data from DBpedia, a community project with the goal of extracting and providing structured information from Wikipedia. Equipped with our basic set of writers, we analyze how they are represented throughout the 15 biggest Wikipedia language versions. We combine intrinsic measures (mostly examining the connectedness of articles) with extrinsic ones (analyzing how often articles are frequented by readers) and develop methods to evaluate our results. The better part of our findings seems to convey a rather conservative, old-fashioned version of world literature, but a version derived from reproducible facts revealing an implicit literary canon based on the editing and reading behavior of millions of people. While still having to solve some known issues, the introduced methods will help us build an observatory of world literature to further investigate its representativeness and biases. -

Purcaru, Cristina, Elena, the Contribution of The

“Alexandru Ioan Cuza” University of Iaşi Faculty of Letters Doctoral School of Philological Studies The contribution of the Psalms to the process of structuring the elegiac poetry in the Romanian literature − SUMMARY OF THE PhD THESIS − Scientific coordinator, Viorica S. Constantinescu, PhD Professor PhD Candidate, Cristina Elena Purcaru Iaşi, 2013 Introduction CHAPTER I The Psalms in the Biblical exegesis I.1. Preliminaries I.1.1. The Biblical exegesis in the patristic period – short history I.1.2. Ways of interpreting the Biblical text I.2. Exemplary interpreters of the Psalms I.2.1. The typological diversity of the Psalms I.2.1.1. Hymns I.2.1.2. The regal Psalms I.2.1.3. Lamentations I.2.1.4. Gratification Psalms CHAPTER II The psalmic moment. Dosoftei II.1. The dusk of the Romanian translations II.2. The Psalter in lyrics II.2.1. The problem of sources – hypostases of the translation II.2.2. Terminological implications of the translation of Dosoftei’s Psalms II.2.2.1. Aesthetic aspects of Dosoftei’s psalmic language II.2.2.2. Glossaries and reading possibilities CHAPTER III The Psalms from a theological and literary perspective. A comparative study III.1. Introduction III.2. The Biblical Psalms / the literary Psalms III.2.1. The Discovery of the Psalm as a poetry III.2.2. The egocentric orientation in Macedonski’s Psalms III.2.3. The inner search for divinity III.2.4. Finding authentic feelings III.3. Arghezi and Doinaş “in search for God” III.3.1. Hypostases of divinity III.3.2. -

The Unique Cultural & Innnovative Twelfty 1820

Chekhov reading The Seagull to the Moscow Art Theatre Group, Stanislavski, Olga Knipper THE UNIQUE CULTURAL & INNNOVATIVE TWELFTY 1820-1939, by JACQUES CORY TABLE OF CONTENTS: INSPIRATION; INTRODUCTION; THE METHODOLOGY OF THE BOOK; CULTURE IN EUROPEAN LANGUAGES IN THE “CENTURY”/TWELFTY 1820-1939; LITERATURE; NOBEL PRIZES IN LITERATURE; CORY'S LIST OF BEST AUTHORS IN 1820-1939, WITH COMMENTS AND LISTS OF BOOKS; CORY'S LIST OF BEST AUTHORS IN TWELFTY 1820-1939; THE 3 MOST SIGNIFICANT LITERATURES – FRENCH, ENGLISH, GERMAN; THE 3 MORE SIGNIFICANT LITERATURES – SPANISH, RUSSIAN, ITALIAN; THE 10 SIGNIFICANT LITERATURES – PORTUGUESE, BRAZILIAN, DUTCH, CZECH, GREEK, POLISH, SWEDISH, NORWEGIAN, DANISH, FINNISH; 12 OTHER EUROPEAN LITERATURES – ROMANIAN, TURKISH, HUNGARIAN, SERBIAN, CROATIAN, UKRAINIAN (20 EACH), AND IRISH GAELIC, BULGARIAN, ALBANIAN, ARMENIAN, GEORGIAN, LITHUANIAN (10 EACH); TOTAL OF NOS. OF AUTHORS IN EUROPEAN LANGUAGES BY CLUSTERS; JEWISH LANGUAGES LITERATURES; LITERATURES IN NON-EUROPEAN LANGUAGES; CORY'S LIST OF THE BEST BOOKS IN LITERATURE IN 1860-1899; SURVEY ON THE MOST/MORE/SIGNIFICANT LITERATURE/ART/MUSIC IN THE ROMANTICISM/REALISM/MODERNISM ERAS; ROMANTICISM IN LITERATURE, ART AND MUSIC; Analysis of the Results of the Romantic Era; REALISM IN LITERATURE, ART AND MUSIC; Analysis of the Results of the Realism/Naturalism Era; MODERNISM IN LITERATURE, ART AND MUSIC; Analysis of the Results of the Modernism Era; Analysis of the Results of the Total Period of 1820-1939 -

Swedish Journal of Romanian Studies

SWEDISH JOURNAL OF ROMANIAN STUDIES Vol. 2 No 1 (2019) ISSN 2003-0924 Table of Contents: Editorial; Introduction for contributors to Swedish Journal of Romanian Studies. Literature: Maricica Munteanu, The bodily community. The gesture and the rhythm as manners of the living-together in the memoirs of Viața Românească cenacle; Roxana Patraș, Hajduk novels in the nineteenth-century Romanian fiction: notes on a sub-genre; Simina Pîrvu, Nostalgia originii la Andreï Makine, Testamentul francez și Sorin Titel, Țara îndepărtată / The nostalgia of the place of birth in Andrei Makine’s French Will and in Sorin Titel’s The Aloof Country. Translation studies: Andra-Iulia Ursa, Mircea Ivănescu – a Romanian poet rendering the style of James Joyce’s Ulysses. The concept of fidelity in translating the overture from “Sirens”. Theatre: Carmen Dominte, DramAcum – the New Wave of Romanian contemporary dramaturgy; Adriana Carolina Bulz, A challenge to American pragmatism: staging O’Neill’s Hughie by Alexa Visarion. Cultural studies: Alexandru Ofrim, Attitudes towards prehistoric objects in Romanian folk culture (19th-20th century). Linguistics: Iosif Camară, «Blachii ac pastores romanorum»: de nouveau sur le destin du latin à l’est / «Blachii ac pastores romanorum»: again, on the destiny of Latin in the East; Constantin-Ioan Mladin, Considérations sur la modernisation et la redéfinition de la physionomie néolatine du roumain. Deux siècles d’influence française / Considerations on modernizing and redefining the neolatinic physiognomy of the Romanian language. Two centuries of French influence -

Kfv) International Ballad Commission Commission Internationale Pour L’Étude De La Chanson Populaire

Kommission für Volksdichtung (KfV) International Ballad Commission Commission internationale pour l’étude de la chanson populaire (Société Internationale d’Ethnologie et de Folklore, S.I.E.F.) www.KfVweb.org Electronic Newsletter no. 3 (August 2002) Minutes of the Business Meeting of the KfV, Leuven, 27 July 2002 1. Greetings from absent friends Greetings were read out from members unable to attend this year. These included Larry and Ardis Syndergaard, Anne Caufriez, Vaira Vike-Freiberga, Sandy Ives, Ildikó Kríza, Gerald Porter, and Jean-Pierre Pichette. 2. Treasurer’s report Prior to the conference the bank balance stood at €704. After deduction of bank charges and expenses, and the payment of membership fees in 2002, the current balance is €802. 3. Administrative report It was suggested and agreed that in future it would be worthwhile providing a telephone number(s) for use in emergency by members travelling to conferences, as well as email addresses. The Vice-President reiterated the usual request that members inform her immediately – [email protected] – of any changes to email or postal addresses, to ensure that members do not lose contact with the KfV. 4. Newsletter Members are encouraged to send news, especially news of publications, both books and articles, to the editor, David Atkinson, at [email protected]. It was agreed that the newsletter should be sent to the entire membership list three times, once each in English, French, and German, in the body of three separate emails. This should avoid the potential problems posed by lengthy emails or the use of attachments. The newsletter will continue to appear probably twice each year, but in the interim short announcements can be circulated to the membership, in the manner of a listserve or discussion list. -

Poetic Prophetism in Romanian Romanticism

MINISTRY OF NATIONAL EDUCATION AND SCIENTIFIC RESEARCH UNIVERSITY "LUCIAN BLAGA" OF SIBIU THE INSTITUTE OF DOCTORAL STUDIES FACULTY OF LETTERS AND ARTS Poetic Prophetism in Romanian Romanticism Ph.D. Thesis - Summary - Scientific co-ordinators: Prof. univ. dr. habil. Andrei TERIAN, Prof. univ. dr. Gheorghe MANOLACHE Ph.D. Student: Crina POENARIU Sibiu 2017 Contents: Argument; Chapter One: Morphotypology of prophetism; 1.1. Etymology and semantic dynamics; 1.2. Paradigms of prophetism; 1.2.1. Protoprophetism or primary prophetism; 1.2.1.1. Hebrew prophetism; 1.2.1.2. Greek-Latin prophetism; 1.2.2. Neoprophetism or recovered prophetism; 1.3. Inter- and intraparadigmatic delimitations; 1.3.1. The Visionary; 1.3.2. Symbolic -

World Literature According to Wikipedia : Introduction to a DBpedia-Based Framework

This is a repository copy of World literature according to Wikipedia : introduction to a DBpedia-based framework. White Rose Research Online URL for this paper: http://eprints.whiterose.ac.uk/108765/ Version: Submitted Version Article: Hube, C., Fischer, F., Jaschke, R. orcid.org/0000-0003-3271-9653 et al. (2 more authors) (Submitted: 2017) World literature according to Wikipedia : introduction to a DBpedia-based framework. arXiv. (Submitted) © 2017 The Authors. For reuse permissions, please contact the Author(s). Reuse Items deposited in White Rose Research Online are protected by copyright, with all rights reserved unless indicated otherwise. They may be downloaded and/or printed for private study, or other acts as permitted by national copyright laws. The publisher or other rights holders may allow further reproduction and re-use of the full text version. This is indicated by the licence information on the White Rose Research Online record for the item. Takedown If you consider content in White Rose Research Online to be in breach of UK law, please notify us by emailing [email protected] including the URL of the record and the reason for the withdrawal request. [email protected] https://eprints.whiterose.ac.uk/ World Literature According to Wikipedia: Introduction to a DBpedia-Based Framework Christoph Hube,1 Frank Fischer,2 Robert Jäschke,1,3 Gerhard Lauer,4 Mads Rosendahl Thomsen5 1 L3S Research Center, Hannover, Germany 2 National Research University Higher School of Economics, Moscow, Russia 3 University of Sheffield, United Kingdom 4 Göttingen Centre for Digital Humanities, Germany 5 Aarhus University, Denmark Among the manifold takes on world literature, it is our goal to contribute to the discussion from a digital point of view by analyzing the representation of world literature in Wikipedia with its millions of articles in hundreds of languages. -

PhD Thesis Title: Medieval Tradition in Geoffrey Chaucer's

Information from the CV ( http://www.artes-iasi.ro/colocvii/cv/Olesia%20Mihai%20-%20CV%20.doc ) PhD thesis Title: Medieval Tradition in Geoffrey Chaucer’s Writings Author: Olesia Lupu (now Olesia Mihai) Institution: “Al. I. Cuza” University, Iasi, Romania Date: April 2005 The author also published a book in 2009 with the same title (but in Romanian), see CV: Lupu, O., Tradiţia medievală în scrierile lui Geoffrey Chaucer, Editura Sedcom Libris, Iaşi, Romania, 2009 Contents: INTRODUCTION; CHAPTER 1 PRELIMINARY ASPECTS; CHAPTER 2 UNIVERSAL LOVE – CLASSICAL AND CHRISTIAN TRADITION; CHAPTER 3 THE NOMINALIST-REALIST CONTROVERSY AND THE PHILOSOPHICAL TRADITION; CHAPTER 4 COURTLY LOVE – TRADITION AND INNOVATION IN CHAUCER’S APPROACH; CHAPTER 5 INDIVIDUAL VOICE – A REALISTIC APPROACH TO CHARACTERS; CHAPTER 6 THE LYRIC WITH NARRATIVE -

Petite Histoire Des Relations Entre La France Et La Roumanie

PETITE HISTOIRE DES RELATIONS ENTRE LA FRANCE ET LA ROUMANIE, ENTRE LA LITTERATURE ROUMAINE ET CELLE FRANÇAISE (A Short History of Relations between France and Romania, and between Romanian and French Literature) Rodica BOGATU, doctoral candidate, “Alecu Russo” State University of Bălţi, Republic of Moldova Abstract (from the French) In the nineteenth century, many Romanian travellers, notably the sons of boyars, went to France, mainly to study, and brought back elements of culture and politics that they introduced in Romania on their return. (After the contribution of the Phanariots and of the French consulates, this was the third channel through which French ideas spread in the Romanian world.) Several of these students played a major role in politics and letters, among them Vasile Alecsandri, Alexandru Macedonski, Nicolae Iorga and Ion Ghica. Solid relations developed between certain French writers and Romania, and between Romanian and French literature: the meetings of the writer and politician Ion-Heliade Rădulescu with Victor Hugo and Alphonse de Lamartine (elected Honorary President of the Association of Romanian Students in France in 1847), and the lasting relations of Ion Ghica with Jules Michelet and Edgard Quinet, whose lectures were attended by Romanian students and who supported the Union of the Romanian Principalities in 1859. In short, French literature was well received there, because France often appeared as a model to Romanians struggling for their independence and unity, which explains the very close ties woven between intellectual and political life, between literature and political commitment. Abstract (from the Romanian) In the nineteenth century, many Romanians, especially sons of boyars (Vasile Alecsandri, Alexandru Macedonski, Nicolae Iorga, Ion Ghica, etc.), studied in France and then, on returning to Romania, introduced elements of French culture and politics. -

Alexandru Macedonski Și Concepția Sa Despre Versificație

THEORY, HISTORY AND LITERARY CRITICISM Alexandru Macedonski și concepția sa despre versificație (Alexandru Macedonski and His Conception of Versification) Florina-Diana Cordoș Abstract: This article presents Alexandru Macedonski’s contribution to Romanian literature in promoting literary tendencies that were new at the time, Symbolism and Parnassianism. The study discusses the vocation of “Maecenas poet” that Macedonski had in the literary space of Bucharest after the foundation of the society Literatorul. It also presents Macedonski’s conception of versification. His ideas were published in the pages of the magazine “Literatorul”, in the collection entitled Arta versurilor. The article highlights his activity as a promoter and guide in the Romanian literary space, because this quality makes him radically different from his contemporaries. Keywords: prosodic elements, free verse, lines, rhyme, rhythm, metre The literary circle and the magazine “Literatorul”. The reception and assimilation of a new poetic direction In January 1880 the literary society and the magazine “Literatorul” came into being in Bucharest, both founded by Alexandru Macedonski. The appearance of this publication marked the beginnings of modern Romanian poetry, in the form of Symbolism and Parnassianism. In its day the circle was an important literary ferment, devoted entirely to poetry, the first of its kind in the history of Romanian literature. It played a major role in the biography of our poet, since it constitutes at the same time a significant aspect of both his aesthetic side and his ethical one. Tudor Vianu states that “Macedonski’s poems are full of the cries of the pain of knowing oneself alone in a world where enemies lie in wait for us and where a man’s virtues are not only unrecognized and unrewarded, but are rather the cause of the unhappiness of the one who practises them” (Vianu, 1974: 203).