Bulk Edit Issues Ken Zook October 20, 2008 Contents 1 Introduction

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

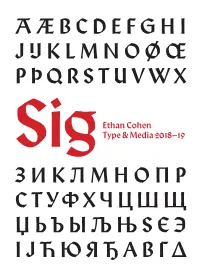

Sig Process Book

SIG: A Revival of Rudolf Koch’s Wallau. Ethan Cohen, Type & Media 2018–19. Submitted as part of Paul van der Laan’s Revival class for the Master of Arts in Type & Media course at Koninklijke Academie van Beeldende Kunsten (Royal Academy of Art, The Hague). INTRODUCTION “I feel such a closeness to William Morris that I always have the feeling that he cannot be an Englishman, he must be a German.” (Rudolf Koch) Project Overview: Sig is a revival of Rudolf Koch’s Wallau Halbfette. My primary source material was the Klingspor Kalender für das Jahr 1933 (Klingspor Calendar for the Year 1933), a 17.5 × 9.6 cm book set in various cuts of Wallau. The Klingspor Kalender was an annual promotional keepsake printed by the Klingspor Type Foundry in Offenbach am Main that featured different Klingspor typefaces every year. This edition has a daily calendar set in Magere Wallau (Wallau Light) and an 18-page collection of fables set in 9 pt Wallau Halbfette (Wallau Semibold) with woodcut illustrations by Willi Harwerth, who worked as a draftsman at the Klingspor Type Foundry. -

Package Mathfont V. 1.6 User Guide Conrad Kosowsky December 2019 [email protected]

Package mathfont v. 1.6 User Guide Conrad Kosowsky December 2019 [email protected] For easy, off-the-shelf use, type the following in your document preamble and compile using XeLaTeX or LuaLaTeX: \usepackage[⟨font name⟩]{mathfont} Abstract The mathfont package provides a flexible interface for changing the font of math-mode characters. The package allows the user to specify a default unicode font for each of six basic classes of Latin and Greek characters, and it provides additional support for unicode math and alphanumeric symbols, including punctuation. Crucially, mathfont is compatible with both XeLaTeX and LuaLaTeX, and it provides several font-loading commands that allow the user to change fonts locally or for individual characters within math mode. Handling fonts in TeX and LaTeX is a notoriously difficult task. Donald Knuth originally designed TeX to support fonts created with Metafont, and while subsequent versions of TeX extended this functionality to postscript fonts, Plain TeX's font-loading capabilities remain limited. Many, if not most, LaTeX users are unfamiliar with the fd files that must be used in font declaration, and the minutiae of TeX's \font primitive can be esoteric and confusing. LaTeX2ε's New Font Selection System (nfss) implemented a straightforward syntax for loading and managing fonts, but LaTeX macros overlaying a TeX core face the same versatility issues as Plain TeX itself. Fonts in math mode present a double challenge: after loading a font either in Plain TeX or through the nfss, defining math symbols can be unintuitive for users who are unfamiliar with TeX's \mathcode primitive. -

The Unicode Cookbook for Linguists: Managing Writing Systems Using Orthography Profiles

Zurich Open Repository and Archive University of Zurich Main Library Strickhofstrasse 39 CH-8057 Zurich www.zora.uzh.ch Year: 2017 The Unicode Cookbook for Linguists: Managing writing systems using orthography profiles Moran, Steven ; Cysouw, Michael DOI: https://doi.org/10.5281/zenodo.290662 Posted at the Zurich Open Repository and Archive, University of Zurich ZORA URL: https://doi.org/10.5167/uzh-135400 Monograph The following work is licensed under a Creative Commons: Attribution 4.0 International (CC BY 4.0) License. Originally published at: Moran, Steven; Cysouw, Michael (2017). The Unicode Cookbook for Linguists: Managing writing systems using orthography profiles. CERN Data Centre: Zenodo. DOI: https://doi.org/10.5281/zenodo.290662 The Unicode Cookbook for Linguists Managing writing systems using orthography profiles Steven Moran & Michael Cysouw Change dedication in localmetadata.tex Preface This text is meant as a practical guide for linguists, and programmers, who work with data in multilingual computational environments. We introduce the basic concepts needed to understand how writing systems and character encodings function, and how they work together. The intersection of the Unicode Standard and the International Phonetic Alphabet is often not met without frustration by users. Nevertheless, the two standards have provided language researchers with a consistent computational architecture needed to process, publish and analyze data from many different languages. We bring to light common, but not always transparent, pitfalls that researchers face when working with Unicode and IPA. Our research uses quantitative methods to compare languages and uncover and clarify their phylogenetic relations. However, the majority of lexical data available from the world’s languages is in author- or document-specific orthographies. -

Invisible-Punctuation.Pdf

In/visible Punctuation John Lennard UNIVERSITY OF THE WEST INDIES - LENNARD, 121-138 - VISIBLE LANGUAGE 45.1/2 © VISIBLE LANGUAGE, 2011 - RHODE ISLAND SCHOOL OF DESIGN - PROVIDENCE, RHODE ISLAND 02903 ABSTRACT The article offers two approaches to the question of 'invisible punctuation,' theoretical and critical. The first is a taxonomy of modes of punctuational invisibility, identifying denial, repression, habituation, error and absence. Each is briefly discussed and some relations with technologies of reading are considered. The second considers the paragraphing, or lack of it, in Sir Philip Sidney's Apology for Poetry: one of the two early printed editions and at least one of the two MSS are monoparagraphic, a feature always silently eliminated by editors as a supposed carelessness. It is argued that this is improbable -

Iso/Iec Jtc1/Sc2/Wg2 N4907 L2/17-359

ISO/IEC JTC1/SC2/WG2 N4907 L2/17-359 2017-10-20 Universal Multiple-Octet Coded Character Set International Organization for Standardization Organisation Internationale de Normalisation Международная организация по стандартизации Doc Type: Working Group Document Title: Proposal to add six Latin Tironian letters to the UCS Source: Michael Everson and Andrew West Status: Individual Contribution Date: 2017-10-20 Replaces: N4841 (L2/17-300) 0. Summary. This proposal requests the encoding of three new casing letters used in medieval European texts. If this proposal is accepted, the following characters will exist: A7F0 LATIN CAPITAL LETTER TIRONIAN ET A7F1 LATIN SMALL LETTER TIRONIAN ET • Old and Middle English, … → 204A tironian sign et ꟲ A7F2 LATIN CAPITAL LETTER TIRONIAN ET WITH HOOK ꟳ A7F3 LATIN SMALL LETTER TIRONIAN ET WITH HOOK • Middle English, Latin, … ꟴ A7F4 LATIN CAPITAL LETTER TIRONIAN ET WITH HOOK AND STROKE Ꟶ A7F5 LATIN SMALL LETTER TIRONIAN ET WITH HOOK AND STROKE • Middle English, Latin, French, … 1. Background. A punctuation character chiefly for Irish use was added in Unicode 3.0 in 1999. Its current entry in the standard reads: ⁊ 204A TIRONIAN SIGN ET • Irish Gaelic, Old English, … → 0026 & ampersand → 1F670 script ligature et ornament A variety of medieval English manuscripts across a number of centuries treat the Tironian sign as an actual letter of the alphabet, and case it when in sentence-initial position. Modern transcribers of documents containing these letters have distinguished them as casing, sometimes using the digit 7 as a font workaround. The original simple two-stroke shape of the character ⁊ as used in the Insular tradition (for Irish and Old English) was replaced by Carolingian character with a hooked base ꟳ, which had a range of glyph variants including a long extension of the top-bar descending to the left and sometimes even encircling the glyph. -

Detection of Suspicious IDN Homograph Domains Using Active DNS Measurements

A Case of Identity: Detection of Suspicious IDN Homograph Domains Using Active DNS Measurements Ramin Yazdani, Olivier van der Toorn, Anna Sperotto University of Twente, Enschede, The Netherlands [email protected] [email protected] [email protected] Abstract: The possibility to include Unicode characters in domain names allows users to deal with domains in their regional languages. This is done by introducing Internationalized Domain Names (IDN). However, the visual similarity between different Unicode characters - called homoglyphs - is a potential security threat, as visually similar domain names are often used in phishing attacks. Timely detection of suspicious homograph domain names is an important step towards preventing sophisticated attacks, since this can prevent unaware users to access those homograph domains that actually carry malicious content. We therefore propose a structured approach to identify suspicious homograph domain names based not on use, but on characteristics of the domain name itself and its associated DNS records. [From the introduction:] … problem in the case of Unicode – ASCII homograph domains. We propose a low-cost method for proactively detecting suspicious IDNs. Since our proactive approach is based not on use, but on characteristics of the domain name itself and its associated DNS records, we are able to provide an early alert for both domain owners as well as security researchers to further investigate these domains before they are involved in malicious activities. The main contributions of this paper are that we: • propose an improved Unicode Confusion table able to detect 2.97 times homograph domains compared to the state-of-the-art confusion tables; • combine active DNS measurements and Unicode -

Unicode Regular Expressions Technical Reports

UTS #18: Unicode Regular Expressions Technical Reports Working Draft for Proposed Update Unicode® Technical Standard #18 UNICODE REGULAR EXPRESSIONS Version 20 Editors Mark Davis, Andy Heninger Date 2019-07-01 This Version http://www.unicode.org/reports/tr18/tr18-20.html Previous Version http://www.unicode.org/reports/tr18/tr18-19.html Latest Version http://www.unicode.org/reports/tr18/ Latest Proposed Update http://www.unicode.org/reports/tr18/proposed.html Revision 20 Summary This document describes guidelines for how to adapt regular expression engines to use Unicode. Status This is a draft document which may be updated, replaced, or superseded by other documents at any time. Publication does not imply endorsement by the Unicode Consortium. This is not a stable document; it is inappropriate to cite this document as other than a work in progress. A Unicode Technical Standard (UTS) is an independent specification. Conformance to the Unicode Standard does not imply conformance to any UTS. Please submit corrigenda and other comments with the online reporting form [Feedback]. Related information that is useful in understanding this document is found in the References. For the latest version of the Unicode Standard, see [Unicode]. For a list of current Unicode Technical Reports, see [Reports]. For more information about versions of the Unicode Standard, see [Versions]. Contents 0 Introduction 0.1 Notation 0.2 Conformance 1 Basic Unicode Support: Level 1 1.1 Hex Notation 1.1.1 Hex Notation and Normalization 1.2 Properties 1.2.1 General -

Supplemental Punctuation Range: 2E00–2E7F

Supplemental Punctuation Range: 2E00–2E7F This file contains an excerpt from the character code tables and list of character names for The Unicode Standard, Version 14.0 This file may be changed at any time without notice to reflect errata or other updates to the Unicode Standard. See https://www.unicode.org/errata/ for an up-to-date list of errata. See https://www.unicode.org/charts/ for access to a complete list of the latest character code charts. See https://www.unicode.org/charts/PDF/Unicode-14.0/ for charts showing only the characters added in Unicode 14.0. See https://www.unicode.org/Public/14.0.0/charts/ for a complete archived file of character code charts for Unicode 14.0. Disclaimer These charts are provided as the online reference to the character contents of the Unicode Standard, Version 14.0 but do not provide all the information needed to fully support individual scripts using the Unicode Standard. For a complete understanding of the use of the characters contained in this file, please consult the appropriate sections of The Unicode Standard, Version 14.0, online at https://www.unicode.org/versions/Unicode14.0.0/, as well as Unicode Standard Annexes #9, #11, #14, #15, #24, #29, #31, #34, #38, #41, #42, #44, #45, and #50, the other Unicode Technical Reports and Standards, and the Unicode Character Database, which are available online. See https://www.unicode.org/ucd/ and https://www.unicode.org/reports/ A thorough understanding of the information contained in these additional sources is required for a successful implementation. -

Double Hyphen" Source: Karl Pentzlin Status: Individual Contribution Action: for Consideration by JTC1/SC2/WG2 and UTC Date: 2010-09-28

JTC1/SC2/WG2 N3917 Universal Multiple-Octet Coded Character Set International Organization for Standardization Organisation Internationale de Normalisation Международная организация по стандартизации Doc Type: Working Group Document Title: Revised Proposal to encode a punctuation mark "Double Hyphen" Source: Karl Pentzlin Status: Individual Contribution Action: For consideration by JTC1/SC2/WG2 and UTC Date: 2010-09-28 Dashes and Hyphens A U+2E4E DOUBLE HYPHEN → 2010 hyphen → 2E17 double oblique hyphen → 003D equals sign → A78A modifier letter short equals sign · used in transcription of old German prints and handwritings · used in some non-standard punctuation · not intended for standard hyphens where the duplication is only a font variant Properties: 2E4E;DOUBLE HYPHEN;Pd;0;ON;;;;;N;;;;; Entry in LineBreak.TXT: 2E4E;BA # DOUBLE HYPHEN 1. Introduction The "ordinary" hyphen, which is representable by U+002D HYPHEN-MINUS or U+2010 HYPHEN, usually is displayed by a single short horizontal dash, but has a considerable glyph variation: it can be slanted to oblique or doubled (stacked) according to the used font. For instance, in Fraktur (Blackletter) fonts, it commonly is represented by two stacked short oblique dashes. However, in certain applications, double hyphens (consisting of two stacked short dashes) are used as characters with semantics deviating from the "ordinary" hyphen, e.g. to represent a definite unit in transliteration. For such a special application, in this case for transliteration of Coptic, U+2E17 DOUBLE OBLIQUE HYPHEN was encoded ([1], example on p. 9). However, there are other applications where the double hyphen is usually not oblique. For such applications, here a "DOUBLE HYPHEN" is proposed, which consists of two stacked short dashes which usually are horizontal. -

Revised Proposal to Encode a Punctuation Mark "Double Hyphen"

Universal Multiple-Octet Coded Character Set International Organization for Standardization Organisation Internationale de Normalisation Международная организация по стандартизации Doc Type: Working Group Document Title: Revised Proposal to encode a punctuation mark "Double Hyphen" Source: German NB Status: National Body Contribution Action: For consideration by JTC1/SC2/WG2 and UTC Date: 2011-01-17 Replaces: N3917 = L2/10-361, L2/10-162 Dashes and Hyphens A U+2E4E DOUBLE HYPHEN → 2010 hyphen → 2E17 double oblique hyphen → 003D equals sign → A78A modifier letter short equals sign · used in transcription of old German prints and handwritings · used in some non-standard punctuation · not intended for standard hyphens where the duplication is only a font variant Properties: 2E4E;DOUBLE HYPHEN;Pd;0;ON;;;;;N;;;;; Entry in LineBreak.TXT: 2E4E;BA # DOUBLE HYPHEN 1. Introduction The "ordinary" hyphen, which is representable by U+002D HYPHEN-MINUS or U+2010 HYPHEN, usually is displayed by a single short horizontal dash, but has a considerable glyph variation: it can be slanted to oblique or doubled (stacked) according to the used font. For instance, in Fraktur (Blackletter) fonts, it commonly is represented by two stacked short oblique dashes. However, in certain applications, double hyphens (consisting of two stacked short dashes) are used as characters with semantics deviating from the "ordinary" hyphen, e.g. to represent a definite unit in transliteration. For such a special application, in this case for transliteration of Coptic, U+2E17 DOUBLE OBLIQUE HYPHEN was encoded ([1], example on p. 9). However, there are other applications where the double hyphen is usually not oblique. For such applications, a "DOUBLE HYPHEN" is proposed here, which consists of two stacked short dashes which usually are horizontal. -
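
The `Properties:` line quoted in the excerpt above uses the semicolon-separated, 15-field layout of UnicodeData.txt. As a minimal sketch only (the field labels and the helper name `parse_unicode_data_line` are illustrative choices made here, not part of the proposal), the line could be split into named fields like this:

```python
# Illustrative labels for the 15 semicolon-separated fields of a
# UnicodeData.txt-style record (names chosen for this sketch).
FIELDS = (
    "code_point", "name", "general_category", "combining_class",
    "bidi_class", "decomposition", "decimal_value", "digit_value",
    "numeric_value", "bidi_mirrored", "unicode_1_name", "iso_comment",
    "uppercase", "lowercase", "titlecase",
)


def parse_unicode_data_line(line: str) -> dict[str, str]:
    """Split one record into a dict; empty fields stay as empty strings."""
    parts = line.strip().split(";")
    if len(parts) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} fields, got {len(parts)}")
    return dict(zip(FIELDS, parts))


# The properties line as quoted in the proposal excerpt.
record = parse_unicode_data_line("2E4E;DOUBLE HYPHEN;Pd;0;ON;;;;;N;;;;;")
print(record["name"], record["general_category"], record["bidi_class"])
```

Read this way, the record gives the proposed character the general category `Pd` (dash punctuation) and the bidi class `ON`, with no decomposition or case mappings.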

CJK Symbols and Punctuation Range: 3000–303F

CJK Symbols and Punctuation Range: 3000–303F This file contains an excerpt from the character code tables and list of character names for The Unicode Standard, Version 14.0 This file may be changed at any time without notice to reflect errata or other updates to the Unicode Standard. See https://www.unicode.org/errata/ for an up-to-date list of errata. See https://www.unicode.org/charts/ for access to a complete list of the latest character code charts. See https://www.unicode.org/charts/PDF/Unicode-14.0/ for charts showing only the characters added in Unicode 14.0. See https://www.unicode.org/Public/14.0.0/charts/ for a complete archived file of character code charts for Unicode 14.0. Disclaimer These charts are provided as the online reference to the character contents of the Unicode Standard, Version 14.0 but do not provide all the information needed to fully support individual scripts using the Unicode Standard. For a complete understanding of the use of the characters contained in this file, please consult the appropriate sections of The Unicode Standard, Version 14.0, online at https://www.unicode.org/versions/Unicode14.0.0/, as well as Unicode Standard Annexes #9, #11, #14, #15, #24, #29, #31, #34, #38, #41, #42, #44, #45, and #50, the other Unicode Technical Reports and Standards, and the Unicode Character Database, which are available online. See https://www.unicode.org/ucd/ and https://www.unicode.org/reports/ A thorough understanding of the information contained in these additional sources is required for a successful implementation. -

A Simplified Blackletter in Two Distinct Styles

Fleisch™ A simplified blackletter in two distinct styles. Designed by Joachim Müller-Lancé Supplemental Release · Version 1.5 · April 7, 2021 FLEISCH ÉPÎTRE CAMPAGNE e-Parchment Documents Hof zum Gutenberg Mainz, anno 1450 Erſat Deſigns for Affluent Tricy Bailiffſ Fleischerfachgeschäft Lettere di Soſtanza Il processo di rimozione macchia di inchiostro invisibile Dungeon Master 6 player party + NPCs Form & Feature Comparison Field Study FLEISCH WOLF: More traditional calligraphic forms FLEISCH WURST: Rectilinear terminals and counters Features STYLISTIC ALTERNATES a k x z, B L A C K STANDARD LIGATURES ch ffi tf fz REGALIA (ORNAMENTS) 1 2 3 4 Additional Features Include: Kerning, Fractions, Localized Forms, Historical Forms, Case-Sensitive Forms, and Ordinals Story Fleisch is a blackletter pair inspired by lettering that the designer, Joachim Müller-Lancé recalls from his childhood growing up in Freiburg im Breisgau, in southwest Germany. Fleisch also harks back to German 1920’s typefaces, droll picture books, newspaper advertising, and cigar box labels. A bit of a remix, Fleisch is not based on any particular era, region or style of blackletter, … In Müller-Lancé’s opinion, the capital letters of many blackletter variants are too ornate to be set in all-caps therefore, Fleisch’s uppercase is simplified and ‘romanized’ to maintain legibility. In addition to the lining and oldstyle figures, Fleisch offers shapes more native to blackletter for the alternates of a, k, x, z, and Z, while the basic alphabet contains the more romanized shapes.