Unicode Regular Expressions Technical Reports

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

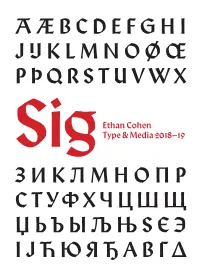

Sig Process Book

SIG: A Revival of Rudolf Koch’s Wallau. Ethan Cohen, Type & Media 2018–19. Submitted as part of Paul van der Laan’s Revival class for the Master of Arts in Type & Media course at Koninklijke Academie van Beeldende Kunsten (Royal Academy of Art, The Hague). INTRODUCTION “I feel such a closeness to William Morris that I always have the feeling that he cannot be an Englishman, he must be a German.” (Rudolf Koch) Project Overview: Sig is a revival of Rudolf Koch’s Wallau Halbfette. My primary source material was the Klingspor Kalender für das Jahr 1933 (Klingspor Calendar for the Year 1933), a 17.5 × 9.6 cm book set in various cuts of Wallau. The Klingspor Kalender was an annual promotional keepsake printed by the Klingspor Type Foundry in Offenbach am Main that featured different Klingspor typefaces every year. This edition has a daily calendar set in Magere Wallau (Wallau Light) and an 18-page collection of fables set in 9 pt Wallau Halbfette (Wallau Semibold) with woodcut illustrations by Willi Harwerth, who worked as a draftsman at the Klingspor Type Foundry.

Package Mathfont V. 1.6 User Guide Conrad Kosowsky December 2019 [email protected]

Package mathfont v. 1.6 User Guide. Conrad Kosowsky, December 2019, [email protected]. For easy, off-the-shelf use, type the following in your document preamble and compile using XeLaTeX or LuaLaTeX: \usepackage[⟨font name⟩]{mathfont} Abstract: The mathfont package provides a flexible interface for changing the font of math-mode characters. The package allows the user to specify a default unicode font for each of six basic classes of Latin and Greek characters, and it provides additional support for unicode math and alphanumeric symbols, including punctuation. Crucially, mathfont is compatible with both XeLaTeX and LuaLaTeX, and it provides several font-loading commands that allow the user to change fonts locally or for individual characters within math mode. Handling fonts in TeX and LaTeX is a notoriously difficult task. Donald Knuth originally designed TeX to support fonts created with Metafont, and while subsequent versions of TeX extended this functionality to postscript fonts, Plain TeX's font-loading capabilities remain limited. Many, if not most, LaTeX users are unfamiliar with the fd files that must be used in font declaration, and the minutiae of TeX's \font primitive can be esoteric and confusing. LaTeX2ε's New Font Selection System (NFSS) implemented a straightforward syntax for loading and managing fonts, but LaTeX macros overlaying a TeX core face the same versatility issues as Plain TeX itself. Fonts in math mode present a double challenge: after loading a font either in Plain TeX or through the NFSS, defining math symbols can be unintuitive for users who are unfamiliar with TeX's \mathcode primitive.

The Unicode Cookbook for Linguists: Managing Writing Systems Using Orthography Profiles

Zurich Open Repository and Archive, University of Zurich, Main Library, Strickhofstrasse 39, CH-8057 Zurich, www.zora.uzh.ch. Year: 2017. The Unicode Cookbook for Linguists: Managing writing systems using orthography profiles. Moran, Steven; Cysouw, Michael. DOI: https://doi.org/10.5281/zenodo.290662. Posted at the Zurich Open Repository and Archive, University of Zurich. ZORA URL: https://doi.org/10.5167/uzh-135400. Monograph. The following work is licensed under a Creative Commons: Attribution 4.0 International (CC BY 4.0) License. Originally published at: Moran, Steven; Cysouw, Michael (2017). The Unicode Cookbook for Linguists: Managing writing systems using orthography profiles. CERN Data Centre: Zenodo. DOI: https://doi.org/10.5281/zenodo.290662. Preface: This text is meant as a practical guide for linguists, and programmers, who work with data in multilingual computational environments. We introduce the basic concepts needed to understand how writing systems and character encodings function, and how they work together. The intersection of the Unicode Standard and the International Phonetic Alphabet is often not met without frustration by users. Nevertheless, the two standards have provided language researchers with a consistent computational architecture needed to process, publish and analyze data from many different languages. We bring to light common, but not always transparent, pitfalls that researchers face when working with Unicode and IPA. Our research uses quantitative methods to compare languages and uncover and clarify their phylogenetic relations. However, the majority of lexical data available from the world’s languages is in author- or document-specific orthographies.

Use Perl Regular Expressions in SAS® Shuguang Zhang, WRDS, Philadelphia, PA

NESUG 2007, Programming Beyond the Basics. Use Perl Regular Expressions in SAS®. Shuguang Zhang, WRDS, Philadelphia, PA. ABSTRACT Regular expressions (Regexp) enhance search and replace operations on text. In SAS®, the INDEX, SCAN and SUBSTR functions along with concatenation (||) can be used for simple search and replace operations on static text. These functions lack flexibility, make searching dynamic text difficult, and involve more function calls. Regexp combines most, if not all, of these steps into one expression. This makes code less error prone, easier to maintain, and clearer, and can improve performance. This paper will discuss three ways to use Perl regular expressions in SAS: 1. Use SAS PRX functions; 2. Use Perl regular expressions with a FILENAME statement through a PIPE, such as ‘Filename fileref PIPE 'Perl program';’ 3. Use an X command such as ‘X Perl_program’. Three typical uses of regular expressions will also be discussed, and example(s) will be presented for each: 1. Test for a pattern of characters within a string; 2. Replace text; 3. Extract a substring. INTRODUCTION Perl is short for “Practical Extraction and Report Language”. Larry Wall created Perl in the mid-1980s when he was trying to produce some reports from a Usenet-news-like hierarchy of files. Perl tries to fill the gap between low-level programming and high-level programming and it is easy, nearly unlimited, and fast. A regular expression, often called a pattern in Perl, is a template that either matches or does not match a given string. That is, there are an infinite number of possible text strings.
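
The three typical uses named in this abstract (test, replace, extract) are not specific to SAS. The following is a minimal sketch in Python's standard `re` module rather than the SAS PRX functions the paper covers; the sample record and the patterns are hypothetical and chosen only for illustration.

```python
import re

# Hypothetical sample record; not taken from the paper.
record = "Order 1234 shipped to Philadelphia, PA on 2007-11-11"

# 1. Test for a pattern of characters within a string (an ISO-style date).
has_date = re.search(r"\d{4}-\d{2}-\d{2}", record) is not None

# 2. Replace text: mask every run of digits with '#'.
masked = re.sub(r"\d+", "#", record)

# 3. Extract a substring: capture the two-letter code after the comma.
match = re.search(r",\s*([A-Z]{2})\b", record)
state = match.group(1) if match else None

print(has_date, masked, state)
```

Each task is a single call here, which is the point the abstract makes about replacing chains of INDEX, SCAN and SUBSTR calls with one expression.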

Lecture 18: Theory of Computation Regular Expressions and DFAs

Introduction to Theoretical CS. Lecture 18: Theory of Computation. COS126: General Computer Science • http://www.cs.Princeton.EDU/~cos126

Two fundamental questions. What can a computer do? What can a computer do with limited resources?

General approach. Don't talk about specific machines or problems. Consider minimal abstract machines. Consider general classes of problems. [Figure: Pentium IV running Linux kernel 2.4.22]

Why Learn Theory. In theory: deeper understanding of what is a computer and computing; foundation of all modern computers; pure science; philosophical implications. In practice: web search (theory of pattern matching); sequential circuits (theory of finite state automata); compilers (theory of context free grammars); cryptography (theory of computational complexity); data compression (theory of information). "In theory there is no difference between theory and practice. In practice there is." -Yogi Berra

Regular Expressions and DFAs. a* | (a*ba*ba*ba*)* [Figure: DFA with states 0, 1, 2 and transitions labeled a and b]

Pattern Matching Applications. Test if a string matches some pattern: process natural language; scan for virus signatures; search for information using Google; access information in digital libraries; retrieve information from Lexis/Nexis; search-and-replace in a word processor; filter text (spam, NetNanny, Carnivore, malware); validate data-entry fields (dates, email, URL, credit card); search for markers in human genome using PROSITE patterns. Parse text files.

Regular Expressions: Basic Operations. Regular expression: notation to specify a set of strings.
Concatenation: aabaab. Yes: aabaab. No: every other string.
Wildcard: .u.u.u. Yes: cumulus, jugulum. No: succubus, tumultuous.
Union: aa | baab. Yes: aa, baab. No: every other string.
Closure: ab*a. Yes: aa, abbba. No: ab, ababa.
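
The yes/no examples listed for the basic operations on this slide can be checked mechanically. The sketch below uses Python's `re.fullmatch`, which requires the whole string to match; the patterns and most example strings come from the slide, while the "no" strings for the concatenation and union rows are assumptions standing in for "every other string".

```python
import re

# pattern -> (strings that should match, strings that should not)
examples = {
    "aabaab":  (["aabaab"], ["aabaa", "baab"]),            # "no" strings assumed
    ".u.u.u.": (["cumulus", "jugulum"], ["succubus", "tumultuous"]),
    "aa|baab": (["aa", "baab"], ["ab", "aabaab"]),         # "no" strings assumed
    "ab*a":    (["aa", "abbba"], ["ab", "ababa"]),
}

def check(pattern, yes, no):
    """True if every 'yes' string fully matches and no 'no' string does."""
    return (all(re.fullmatch(pattern, s) for s in yes)
            and not any(re.fullmatch(pattern, s) for s in no))

results = {p: check(p, yes, no) for p, (yes, no) in examples.items()}
print(results)
```

Using `fullmatch` rather than `search` matters here: the slide treats a regular expression as a description of a set of whole strings, not of substrings.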

Invisible-Punctuation.Pdf

In/visible Punctuation. John Lennard, University of the West Indies. Visible Language 45.1/2, Lennard, 121-138. © Visible Language, 2011, Rhode Island School of Design, Providence, Rhode Island 02903. ABSTRACT The article offers two approaches to the question of 'invisible punctuation,' theoretical and critical. The first is a taxonomy of modes of punctuational invisibility, identifying denial, repression, habituation, error and absence. Each is briefly discussed and some relations with technologies of reading are considered. The second considers the paragraphing, or lack of it, in Sir Philip Sidney's Apology for Poetry: one of the two early printed editions and at least one of the two MSS are monoparagraphic, a feature always silently eliminated by editors as a supposed carelessness. It is argued that this is improbable

ISO/IEC JTC1/SC2/WG2 N4907 L2/17-359

ISO/IEC JTC1/SC2/WG2 N4907 L2/17-359 2017-10-20. Universal Multiple-Octet Coded Character Set. International Organization for Standardization / Organisation Internationale de Normalisation / Международная организация по стандартизации. Doc Type: Working Group Document. Title: Proposal to add six Latin Tironian letters to the UCS. Source: Michael Everson and Andrew West. Status: Individual Contribution. Date: 2017-10-20. Replaces: N4841 (L2/17-300).

0. Summary. This proposal requests the encoding of three new casing letters used in medieval European texts. If this proposal is accepted, the following characters will exist:
A7F0 LATIN CAPITAL LETTER TIRONIAN ET
A7F1 LATIN SMALL LETTER TIRONIAN ET • Old and Middle English, … → 204A tironian sign et
ꟲ A7F2 LATIN CAPITAL LETTER TIRONIAN ET WITH HOOK
ꟳ A7F3 LATIN SMALL LETTER TIRONIAN ET WITH HOOK • Middle English, Latin, …
ꟴ A7F4 LATIN CAPITAL LETTER TIRONIAN ET WITH HOOK AND STROKE
Ꟶ A7F5 LATIN SMALL LETTER TIRONIAN ET WITH HOOK AND STROKE • Middle English, Latin, French, …

1. Background. A punctuation character chiefly for Irish use was added in Unicode 3.0 in 1999. Its current entry in the standard reads: ⁊ 204A TIRONIAN SIGN ET • Irish Gaelic, Old English, … → 0026 & ampersand → 1F670 script ligature et ornament. A variety of medieval English manuscripts across a number of centuries treat the Tironian sign as an actual letter of the alphabet, and case it when in sentence-initial position. Modern transcribers of documents containing these letters have distinguished them as casing, sometimes using the digit 7 as a font workaround. The original simple two-stroke shape of the character ⁊ as used in the Insular tradition (for Irish and Old English) was replaced by a Carolingian character with a hooked base ꟳ, which had a range of glyph variants including a long extension of the top-bar descending to the left and sometimes even encircling the glyph.

Perl Regular Expressions Tip Sheet Functions and Call Routines

Perl Regular Expressions Tip Sheet

Functions and Call Routines
regex-id = prxparse(perl-regex): Compile Perl regular expression perl-regex and return regex-id to be used by other PRX functions.
pos = prxmatch(regex-id | perl-regex, source): Search in source and return position of match or zero if no match is found.
new-string = prxchange(regex-id | perl-regex, times, old-string): Search and replace times number of times in old-string and return modified string in new-string.
call prxchange(regex-id, times, old-string, new-string, res-length, trunc-value, num-of-changes): Same as prior example and place length of result in res-length; if result is too long to fit into new-string, trunc-value is set to 1, and the number of changes is placed in num-of-changes.

Basic Syntax (Character: Behavior)
/…/ : Starting and ending regex delimiters
| : Alternation
() : Grouping

Wildcards/Character Class Shorthands (Character: Behavior)
. : Match any one character
\w : Match a word character (alphanumeric plus "_")
\W : Match a non-word character
\s : Match a whitespace character
\S : Match a non-whitespace character
\d : Match a digit character
\D : Match a non-digit character

Advanced Syntax (Character: Behavior)
non-meta character : Match character
{}[]()^$.|*+?\ : Metacharacters; to match these characters, override (escape) with \
\ : Override (escape) next metacharacter
\n : Match capture buffer n
(?:…) : Non-capturing group

Lazy Repetition Factors (match minimum number of times possible) (Character: Behavior)
*? : Match 0 or more times
+? : Match 1 or more times
?? : Match 0 or 1 time
{n}? : Match exactly n times
{n,}? : Match at least n times
Match at least n but not more than m

Character Classes
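
Three entries on this tip sheet are easy to confuse in practice: lazy repetition factors, non-capturing groups, and capture-buffer references. The sketch below illustrates them with Python's `re` module, whose syntax for these particular constructs follows Perl's; it is not SAS code, and the sample strings are hypothetical.

```python
import re

text = "<b>bold</b> and <i>italic</i>"

# Greedy '+' matches as much as possible; lazy '+?' as little as possible.
greedy = re.search(r"<.+>", text).group(0)   # runs to the last '>'
lazy = re.search(r"<.+?>", text).group(0)    # stops at the first '>'

# (?:...) groups without capturing, so group 1 is the word after the title.
m = re.search(r"(?:Mr|Ms)\. (\w+)", "Dear Ms. Lovelace")
surname = m.group(1)

# \1 matches the same text that capture buffer 1 matched (a doubled word).
doubled = re.search(r"\b(\w+) \1\b", "this is is a test").group(1)

print(greedy, lazy, surname, doubled)
```

The greedy and lazy patterns differ by a single character, which is why the lazy forms get their own table on the sheet.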

Detection of Suspicious IDN Homograph Domains Using Active DNS Measurements

A Case of Identity: Detection of Suspicious IDN Homograph Domains Using Active DNS Measurements. Ramin Yazdani, Olivier van der Toorn, Anna Sperotto. University of Twente, Enschede, The Netherlands. [email protected], [email protected], [email protected]

Abstract—The possibility to include Unicode characters in domain names allows users to deal with domains in their regional languages. This is done by introducing Internationalized Domain Names (IDN). However, the visual similarity between different Unicode characters - called homoglyphs - is a potential security threat, as visually similar domain names are often used in phishing attacks. Timely detection of suspicious homograph domain names is an important step towards preventing sophisticated attacks, since this can prevent unaware users to access those homograph domains that actually carry malicious content. We therefore propose a structured approach to identify suspicious homograph domain names based not on use, but on characteristics of the domain name itself and its associated DNS records.

[From the introduction] problem in the case of Unicode – ASCII homograph domains. We propose a low-cost method for proactively detecting suspicious IDNs. Since our proactive approach is based not on use, but on characteristics of the domain name itself and its associated DNS records, we are able to provide an early alert for both domain owners as well as security researchers to further investigate these domains before they are involved in malicious activities. The main contributions of this paper are that we: • propose an improved Unicode Confusion table able to detect 2.97 times homograph domains compared to the state-of-the-art confusion tables; • combine active DNS measurements and Unicode
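
To make the idea of a confusion table concrete, here is a minimal sketch of flagging a domain whose characters map onto a protected ASCII name. It is not the authors' method: the table below is a tiny hypothetical sample, the function names are invented for illustration, and no DNS measurements are involved.

```python
# Tiny illustrative confusion table (hypothetical; real tables are far larger).
CONFUSABLES = {
    "\u0430": "a",  # CYRILLIC SMALL LETTER A
    "\u0435": "e",  # CYRILLIC SMALL LETTER IE
    "\u043e": "o",  # CYRILLIC SMALL LETTER O
    "\u0440": "p",  # CYRILLIC SMALL LETTER ER
    "\u0441": "c",  # CYRILLIC SMALL LETTER ES
}

def skeleton(domain: str) -> str:
    """Replace each confusable character with its ASCII look-alike."""
    return "".join(CONFUSABLES.get(ch, ch) for ch in domain.lower())

def is_suspicious(domain: str, protected: set) -> bool:
    """Flag a domain that is not itself protected but looks like one that is."""
    name = domain.lower()
    return name not in protected and skeleton(name) in protected

protected = {"paypal.com"}
lookalike = "p\u0430yp\u0430l.com"  # Cyrillic 'а' in place of Latin 'a'
print(is_suspicious(lookalike, protected))     # True
print(is_suspicious("paypal.com", protected))  # False
```

A check like this works on the name alone, which matches the abstract's emphasis on characteristics of the domain name rather than observed use.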

Regular Expressions with a Brief Intro to FSM

Regular Expressions with a brief intro to FSM. 15-123 Systems Skills in C and Unix.

Case for regular expressions • Many web applications require pattern matching – look for <a href> tag for links – Token search • A regular expression – A pattern that defines a class of strings – Special syntax used to represent the class • E.g., *.c - any pattern that ends with .c

Formal Languages • A formal language consists of – An alphabet – A formal grammar • A formal grammar defines – Strings that belong to the language • Formal languages with formal semantics generate rules for semantic specifications of programming languages

Automaton • An automaton (plural: automata) is a machine that can recognize valid strings generated by a formal language. • A finite automaton is a mathematical model of a finite state machine (FSM), an abstract model under which all modern computers are built. • An FSM is a machine that consists of a set of finite states and a transition table. • The FSM can be in any one of the states and can transit from one state to another based on a series of rules given by a transition function.

Example: What does this machine represent? Describe the kind of strings it will accept. Exercise • Draw an FSM that accepts any string with an even number of A’s. Assume the alphabet is {A,B}. Build an FSM • Stream: “I love cats and more cats and big cats ” • Pattern: “cat”

Regex versus FSM • Regular expressions and FSMs are equivalent concepts. • A regular expression is a pattern that can be recognized by an FSM. • Regex is an example of how good theory leads to good programs

Regular Expression • A regex defines a class of patterns – Patterns that end with a “*” • Regex utilities in Unix – grep, awk, sed • Applications – Pattern matching (DNA) – Web searches

Regex Engine • Software that can process a string to find regex matches.
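
One possible answer to the exercise on these slides (an FSM over {A,B} that accepts strings with an even number of A's) can be written as a transition table and compared against a regular expression for the same language. This is a sketch under assumptions: the state names and the particular regex are choices made here, not taken from the slides.

```python
import re
from itertools import product

# Transition table: (current state, input symbol) -> next state.
TRANSITIONS = {
    ("even", "A"): "odd",
    ("even", "B"): "even",
    ("odd", "A"): "even",
    ("odd", "B"): "odd",
}

def accepts(s: str) -> bool:
    """Run the FSM from the start state 'even'; accept if it ends there."""
    state = "even"
    for ch in s:
        state = TRANSITIONS[(state, ch)]
    return state == "even"

# An equivalent regex: any B's, then zero or more blocks holding exactly two A's.
EVEN_AS = re.compile(r"B*(?:AB*AB*)*")

def regex_accepts(s: str) -> bool:
    return EVEN_AS.fullmatch(s) is not None

# Compare both recognizers on every string over {A,B} up to length 6.
agree = all(
    accepts("".join(w)) == regex_accepts("".join(w))
    for n in range(7)
    for w in product("AB", repeat=n)
)
print(agree)
```

The exhaustive comparison is a small-scale illustration of the slides' claim that regular expressions and FSMs are equivalent; it is a check on short strings, not a proof.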

Supplemental Punctuation Range: 2E00–2E7F

Supplemental Punctuation. Range: 2E00–2E7F. This file contains an excerpt from the character code tables and list of character names for The Unicode Standard, Version 14.0. This file may be changed at any time without notice to reflect errata or other updates to the Unicode Standard. See https://www.unicode.org/errata/ for an up-to-date list of errata. See https://www.unicode.org/charts/ for access to a complete list of the latest character code charts. See https://www.unicode.org/charts/PDF/Unicode-14.0/ for charts showing only the characters added in Unicode 14.0. See https://www.unicode.org/Public/14.0.0/charts/ for a complete archived file of character code charts for Unicode 14.0. Disclaimer: These charts are provided as the online reference to the character contents of the Unicode Standard, Version 14.0 but do not provide all the information needed to fully support individual scripts using the Unicode Standard. For a complete understanding of the use of the characters contained in this file, please consult the appropriate sections of The Unicode Standard, Version 14.0, online at https://www.unicode.org/versions/Unicode14.0.0/, as well as Unicode Standard Annexes #9, #11, #14, #15, #24, #29, #31, #34, #38, #41, #42, #44, #45, and #50, the other Unicode Technical Reports and Standards, and the Unicode Character Database, which are available online. See https://www.unicode.org/ucd/ and https://www.unicode.org/reports/. A thorough understanding of the information contained in these additional sources is required for a successful implementation.
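
A block range such as 2E00–2E7F translates directly into a regular-expression character class. The sketch below spells the range out with `\u` escapes because Python's standard `re` module has no `\p{...}` property syntax; the sample string is hypothetical, and the class covers every code point in the range, assigned or not.

```python
import re

# Character class covering the Supplemental Punctuation block range.
SUPPLEMENTAL_PUNCTUATION = re.compile(r"[\u2E00-\u2E7F]")

# Hypothetical sample containing U+2E2E and U+2E18 from that block.
sample = "Really\u2E2E Yes\u2E18 fine."

found = SUPPLEMENTAL_PUNCTUATION.findall(sample)
code_points = [f"U+{ord(ch):04X}" for ch in found]
print(code_points)  # ['U+2E2E', 'U+2E18']
```

Ordinary ASCII punctuation such as the final full stop falls outside the range and is not reported.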

Regular Expressions

CS 172: Computability and Complexity. Regular Expressions. Sanjit A. Seshia, EECS, UC Berkeley. Acknowledgments: L. von Ahn, L. Blum, M. Blum.

The Picture So Far: DFA, NFA, regular language. Today’s Lecture: DFA, NFA, regular language, regular expression.

Regular Expressions • Q. What is a regular expression? • A. It’s a “textual”/“algebraic” representation of a regular language – A DFA can be viewed as a “pictorial”/“explicit” representation • We will prove that regular expressions (regexps) indeed represent regular languages

Regular Expressions: Definition. σ is a regular expression representing {σ} (σ ∈ Σ). ε is a regular expression representing {ε}. ∅ is a regular expression representing ∅. If R1 and R2 are regular expressions representing L1 and L2, then: (R1R2) represents L1·L2; (R1 ∪ R2) represents L1 ∪ L2; (R1)* represents L1*.

Operator Precedence: 1. * 2. · (often left out; a·b is written ab) 3. ∪

Example of Precedence: R1*R2 ∪ R3 = ((R1*)R2) ∪ R3

What’s the regexp? { w | w has exactly a single 1 }: 0*10*

What language does ∅* represent? {ε}

What’s the regexp? { w | w has length ≥ 3 and its 3rd symbol is 0 }: Σ²0Σ*, where Σ = (0 ∪ 1)

Some Identities. Let R, S, T be regular expressions • R ∪ ∅ = ? • R·∅ = ? • Prove: R(S ∪ T) = RS ∪ RT (what’s the proof idea?)
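
The two "What's the regexp?" answers on these slides can be sanity-checked by brute force. The sketch below translates them into Python `re` syntax (writing Σ as the class `[01]`) and compares each against a direct statement of the language on all binary strings up to length 8; the helper names are assumptions made for this illustration.

```python
import re
from itertools import product

# 0*10* : strings with exactly a single 1.
single_one = re.compile(r"0*10*")
# Σ²0Σ* with Σ = (0 ∪ 1): length at least 3 and third symbol 0.
third_is_zero = re.compile(r"[01]{2}0[01]*")

def all_binary(max_len):
    """Yield every binary string of length 0..max_len."""
    for n in range(max_len + 1):
        for bits in product("01", repeat=n):
            yield "".join(bits)

ok_single = all(
    (single_one.fullmatch(w) is not None) == (w.count("1") == 1)
    for w in all_binary(8)
)
ok_third = all(
    (third_is_zero.fullmatch(w) is not None) == (len(w) >= 3 and w[2] == "0")
    for w in all_binary(8)
)
print(ok_single, ok_third)
```

Agreement on short strings does not replace the proofs the lecture asks for, but it catches the common mistakes, such as forgetting that `0*10*` must match the whole string.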