Mcdc) 2013-2014

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

HONDA: The Power of Dreams

Honda Australia started officially on February 4th, 1969 in a rented flat. Honda grew enormously during the following decades and in October 1991 Honda MPE was established. Dedicated to Honda Motorcycles, Power Equipment and Marine, and separate from Honda cars, the company was formed to focus attention on the many opportunities in the Australian market. THE PRODUCT: The strength of the Honda brand derives from the simple fact that each Honda product is designed, engineered and manufactured to be the leader in its field, delivering optimum performance, reliability, economy and world leading emission levels. Honda's advanced engineering has created the ... share the initiative in developing new products for tomorrow, with a focus on increased durability, reliability and performance. Honda was the first company in the world to develop a humanoid robot capable of autonomous, bipedal motion. Honda's advanced robot, ASIMO, incorporates artificial intelligence enabling the robot to understand and independently respond to body language and gestures. Honda's goal is to create a robot that will be a true partner to humankind. Honda is also taking aim at the sky. Two of Honda's recent challenges include the development of a compact business jet and a piston aircraft engine. Honda's US R&D operation has already created a prototype of the jet, which is entering the test flight phase. THE MARKET: ... meets local needs, the company has gone beyond establishing local sales networks. Many products are not only manufactured but also developed in the regions where they are used. Honda has more than 120 manufacturing facilities in 29 countries outside Japan, producing motorcycles, automobiles, -

Escape from Cubeville

Volume 26, Number 9, June 2004, $3.50. Celebrating over 25 years of vintage motorcycling. VINTAGE JAPANESE MOTORCYCLE CLUB MAGAZINE, JUNE 2004. CONTENTS: President's Column; Editor's Column; Calendar of Events; Sunshine Meet Kicks Off AMCA 2004 Season; A Day at Laconia; Riding With the Chain Gang; Product Review: Silver's CDs Are Golden; Field of Dreams: The White Rose Meet; A Honda Adventure in Time; Passbook is Pass Back to 1970; Kettle to Water Buffalo Conversion; Secrets of Speedometer Repair; Honda History: At Home in Japan; Escape from Cubeville. This issue's web password is: midohio (effective June 25th, use lower case). Mission Statement: The Purpose of this organization is to promote the preservation, restoration and enjoyment of Vintage Japanese motorcycles (defined as those greater than 15 years old) and to promote the sport of motorcycling and camaraderie of motorcyclists everywhere. President: Pete Boody, (865) 435-2112. Magazine Editor: Karen McElhaney, (865) 671-2628. Classified Advertising: Gary Gadd, (817) 284-8195. Commercial Advertising Region A: Norman Smith, (941) 792-0003. Commercial Advertising Region B: Brad Powell, (678) 576-4258. Membership: Bill Granade, (813) 961-3737. Webmaster: Jason Bell, (972) 245-0634. Cover Layout: Andre Okazaki. Magazine Layout: Darin Watson. 2004 Vintage -

Honda Insight Wikipedia in Its Third Generation, It Became a Four-Door Sedan - Present

Honda insight wikipedia In its third generation, it became a four-door sedan —present. It was Honda's first model with Integrated Motor Assist system and the most fuel efficient gasoline-powered car available in the U. The Insight was launched April in the UK as the lowest priced hybrid on the market and became the best selling hybrid for the month. The Insight ranked as the top-selling vehicle in Japan for the month of April , a first for a hybrid model. In the following month, December , Insight became the first hybrid available in North America, followed seven months later by the Toyota Prius. The Insight featured optimized aerodynamics and a lightweight aluminum structure to maximize fuel efficiency and minimize emissions. As of , the first generation Insight still ranks as the most fuel-efficient United States Environmental Protection Agency EPA certified gasoline-fueled vehicle, with a highway rating of 61 miles per US gallon 3. The first-generation Insight was manufactured as a two-seater, launching in a single trim level with a manual transmission and optional air conditioning. In the second year of production two trim levels were available: manual transmission with air conditioning , and continuously variable transmission CVT with air conditioning. The only major change during its life span was the introduction of a trunk-mounted, front-controlled, multiple-disc CD changer. In addition to its hybrid drive system, the Insight was small, light and streamlined — with a drag-coefficient of 0. At the time of production, it was the most aerodynamic production car to be built. -

Gait-Behavior Optimization Considering Arm Swing and Toe Mechanisms for Biped Robot on Rough Road

Gait-Behavior Optimization Considering Arm Swing and Toe Mechanisms for Biped Robot on Rough Road. Author: NGUYEN VAN TINH (Student ID: NB16508). Supervisor: Professor Dr. Eng. HIROSHI HASEGAWA. A DISSERTATION SUBMITTED IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY, September, 2019. Declaration of Authorship • This work was done wholly or mainly while in candidature for a research degree at this University. • Where any part of this thesis has previously been submitted for a degree or any other qualification at this University or any other institution, this has been clearly stated. • Where I have consulted the published work of others, this is always clearly attributed. • Where I have quoted from the work of others, the source is always given. With the exception of such quotations, this thesis is entirely my own work. • I have acknowledged all main sources of help. • Where the thesis is based on work done by myself jointly with others, I have made clear exactly what was done by others and what I have contributed myself. Signed: Date: Abstract: This research addresses a gait generation approach for a biped robot that treats gait pattern generation as a constrained optimization problem. To build the problem, a Response Surface Model (RSM) is used to approximate the objective and constraint functions; afterwards, the Improved Self-Adaptive Differential Evolution Algorithm (ISADE) is applied to find the optimal gait pattern for the robot. In addition, to enhance the stability of walking behavior, I apply a foot structure with a toe mechanism. -

Honda at Home in Japan

Volume 26, Number 8, April 2004, $3.50. Hodaka Revival; Buying Vintage Tires; Biking in Hawaii; Honda at Home in Japan. Celebrating over 25 years of vintage motorcycling. VINTAGE JAPANESE MOTORCYCLE CLUB MAGAZINE, APRIL 2004. CONTENTS: President's Column; Editor's Column; VJMC Chapter Membership Benefits; Calendar of Events; A Ride to Remember: Coast to Coast on a CB77; Tire and Size and Rating Info Decoded; Tech Tip: Balancing Carbs with TWINMAX; Barber Museum Keeps Bike History Alive; Honda History: At Home In Japan; Big Horn Reborn; Vintage Japanese Bikes in Hawaii; The Hodaka Motorcycle Revival; International Motorcycle SuperShow Report; Classifieds. This issue's web password is: showers (effective April 25th, use lower case). Mission Statement: The Purpose of this organization is to promote the preservation, restoration and enjoyment of Vintage Japanese motorcycles (defined as those greater than 15 years old) and to promote the sport of motorcycling and camaraderie of motorcyclists everywhere. President: Pete Boody, (865) 435-2112. Magazine Editor: Karen McElhaney, (865) 671-2628. Classified Advertising: Gary Gadd, (817) 284-8195. Commercial Advertising Region A: Norman Smith, (941) 792-0003. Commercial Advertising Region B: Brad Powell, (678) 576-4258. Membership: Bill Granade, (813) 961-3737. Webmaster: Jason Bell, (972) 245-0634. Cover Layout: Andre Okazaki. Magazine Layout: Darin Watson. © 2004 Vintage Japanese Motorcycle Club. All rights reserved. No part of this document may be reproduced or transmitted in any form without permission. -

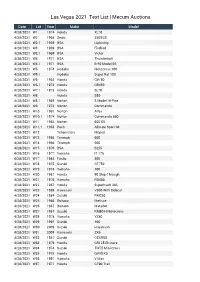

Las Vegas 2021 Text List | Mecum Auctions

Date | Lot | Year | Make | Model
4/28/2021 | W1 | 1974 | Honda | XL70
4/28/2021 | W2 | 1968 | Sears | 250SGS
4/28/2021 | W2.1 | 1969 | BSA | Lightning
4/28/2021 | W3 | 1969 | BSA | Firebird
4/28/2021 | W3.1 | 1969 | BSA | Victor
4/28/2021 | W4 | 1971 | BSA | Thunderbolt
4/28/2021 | W4.1 | 1971 | BSA | B-50 Model SS
4/28/2021 | W5 | 1974 | Hodaka | Motocross 100
4/28/2021 | W5.1 | | Hodaka | Super Rat 100
4/28/2021 | W6 | 1964 | Honda | CB750
4/28/2021 | W6.1 | 1973 | Honda | CB450
4/28/2021 | W7.1 | 1973 | Honda | SL70
4/28/2021 | W8 | | Honda | S90
4/28/2021 | W8.1 | 1969 | Norton | S Model Hi-Pipe
4/28/2021 | W9 | 1973 | Norton | Commando
4/28/2021 | W10 | 1967 | Norton | Atlas
4/28/2021 | W10.1 | 1974 | Norton | Commando 850
4/28/2021 | W11 | 1962 | Norton | 650 SS
4/28/2021 | W11.1 | 1963 | Puch | Allstate Sport 60
4/28/2021 | W12 | | Teliamotors | Moped
4/28/2021 | W13 | 1956 | Triumph | 650
4/28/2021 | W14 | 1966 | Triumph | 500
4/28/2021 | W15 | 1970 | BSA | B255
4/28/2021 | W16 | 1977 | Yamaha | IT 175
4/28/2021 | W17 | 1984 | Fantic | 300
4/28/2021 | W18 | 1975 | Suzuki | GT750
4/28/2021 | W19 | 1974 | Yamaha | 100
4/28/2021 | W20 | 1967 | Honda | 90 Step-Through
4/28/2021 | W21 | 1976 | Yamaha | RD400
4/28/2021 | W22 | 1967 | Honda | Superhawk 305
4/28/2021 | W23 | 1999 | Kawasaki | V800 With Sidecar
4/28/2021 | W24 | 1984 | Suzuki | RM250
4/28/2021 | W25 | 1966 | Bultaco | Metisse
4/28/2021 | W26 | 1967 | Bultaco | Matador
4/28/2021 | W27 | 1987 | Suzuki | RM80 H Motocross
4/28/2021 | W28 | 1978 | Yamaha | YZ80
4/28/2021 | W29 | 1994 | Suzuki | 400
4/28/2021 | W30 | 2009 | Suzuki | Hayabusa
4/28/2021 | W31 | 2009 | Kawasaki | ZX6
4/28/2021 | W32 | 1987 | Suzuki | GSXR50
4/28/2021 | W33 | 1979 | Honda | CR125 Elsinore
4/28/2021 | W34 | 1974 | Suzuki | TM75 Mini-Cross
4/28/2021 | W35 | 1975 | Honda | QA50 K3
4/28/2021 | W36 | 1997 | Yamaha | -

Master Thesis Robot's Appearance

VILNIUS ACADEMY OF ARTS, FACULTY OF POSTGRADUATE STUDIES, PRODUCT DESIGN DEPARTMENT, II YEAR MASTER DEGREE. Inna Popa. MASTER THESIS: ROBOT'S APPEARANCE. Theoretical part of work supervisor: Mantas Lesauskas. Practical part of work supervisor: Šarūnas Šlektavičius. Vilnius, 2016. CONTENTS: Preface; Problematics and actuality; Motives of research and innovation of the work; The hypothesis; Goal of the research; Object of research; Aims of Research; Sources of research (books, images, robots, companies); Robots design -

Master's Thesis

MASTER'S THESIS: Developing a ROS Enabled Full Autonomous Quadrotor. Iván Monzón, 2013. Master of Science in Engineering Technology, Engineering Physics and Electrical Engineering. Luleå University of Technology, Department of Computer Science, Electrical and Space Engineering, Control Engineering Group. 27th August 2013. ABSTRACT: The aim of this Master Thesis focuses on: a) the design and development of a quadrotor and b) on the design and development of a full ROS enabled software environment for controlling the quadrotor. ROS (Robotic Operating System) is a novel operating system, which has been fully oriented to the specific needs of the robotic platforms. The work that has been done covers various software developing aspects, such as: operating system management in different robotic platforms, the study of the various forms of programming in the ROS environment, evaluating building alternatives, the development of the interface with ROS or the practical tests with the developed aerial platform. In more detail, initially in this thesis, a study of the ROS possibilities applied to flying robots, the development alternatives and the feasibility of integration has been done. These applications have included the aerial SLAM implementations (Simultaneous Location and Mapping) and aerial position control. After the evaluation of the alternatives and considering the related functionality, autonomy and price, it has been considered to base the development platform on the ArduCopter platform. Although there are some examples of unmanned aerial vehicles in ROS, there is no support for this system, thus proper design and development work was necessary to make the two platforms compatible as it will be presented. -

(Single Occupancy) TWIN RING MOTEGI

We will provide a dramatic race-experience tour in Japan. You will visit Twin Ring Motegi to enjoy "AUTOBACS SUPER GT Round 8 MOTEGI GT GRAND FINAL" with a VIP seat and also experience a Honda CR-Z test drive on the same course. We also offer a premium party on the night of the race day with a special guest. Please check below and the following pages for more details. On the last day, we can arrange some optional tours depending on your flights. Please feel free to contact us. TOUR PRICE: JPY 1,030,000/pp (*Reference price: USD 9,800). Package Includes: Hotel Accommodation (3 nights); Transportation between Narita and Motegi and from Motegi to Tokyo; English speaking assistant; Ticket and VIP seat for Super GT Round Final; All meals including 2 parties; Honda CR-Z test drive fee at Motegi; Airport bus tickets to NRT/HND. Package Excludes: International Flight to/from Japan; Optional Tour fees; Incidental Charges. *After your application, we will send you a confirmation. **Minimum participants required: 8 persons. TOUR ITINERARY. DAY 1, Nov. 12 (Sat.), NARITA ARRIVAL/NARITA: Arrive at the Narita International Airport (NRT) (*If you prefer Haneda Airport, please contact us). Move to Hilton Narita on your own. Dinner/Briefing at Hilton Narita. Overnight: Hilton Narita (Single Occupancy). DAY 2, Nov. 13 (Sun.), NARITA/MOTEGI: a.m. Breakfast at the Hotel. Transfer to Twin Ring Motegi. p.m. Spectating SuperGT Grand Final (VIP seat). Lunch at Twin Ring Motegi. Visit Honda Collection Hall. Premium Party at Hotel Twin Ring (with a special guest). Overnight: Hotel Twin Ring (Single Occupancy). Nov. 14 (Mon.), MOTEGI/TOKYO: a.m. -

Chapter 6. Evolution of Legged Machines and Robots

Excerpt from the book: Marko B. Popovic, Biomechanics and Robotics, Pan Stanford Publishing Pte. Ltd., 2013 (No of pages 351) Print ISBN: 9789814411370, eBook ISBN: 9789814411387, DOI: 10.4032/9789814411387 Copyright © 2014 by Pan Stanford Publishing Pte. Ltd. Chapter 6. Evolution of Legged Machines and Robots. Chapter outline: Early Legged Machines in the 19th Century; Legged Machines in the First Half of the 20th Century; Legged Machines during 1950–1970; Legged Robots during 1970–1980; Legged Robots during 1980–1990; Legged Robots during 1990–2000; Legged Robots since 2000; References and Suggested Reading. [chapter content intentionally omitted] References: About AIBO, http://www.sonyaibo.net/aboutaibo.htm. Retrieved July 2012. Asimo by Honda, history, http://asimo.honda.com/asimo-history/. Retrieved July 2012. ASIMO—Wikipedia, the free encyclopedia, http://en.wikipedia.org/wiki/ASIMO. Retrieved July 2012. BBC News—Robotic cheetah breaks speed record for legged robots, (BBC News Technology 6 March 2012) http://www.bbc.com/news/technology-17269535. Retrieved July 2012. Big Muskie’s Bucket, McConnelsville, Ohio, http://www.roadsideamerica.com/story/2184. Retrieved July 2012. Boston Dynamics: Dedicated to the Science and Art of How Things Move; http://www.bostondynamics.com/robot_bigdog.html. Retrieved July 2012. Chavez-Clemente, D., “Gait Optimization for Multi-legged Walking Robots, with Application to a Lunar Hexapod”, PhD Dissertation, the Stanford University Department of Aeronautics and Astronautics, January 2011. Cox, W., “Big muskie”, The Ohio State Engineer, p. 25–52, 1970. Cyberneticzoo.com. Early Humanoid Robots, http://cyberneticzoo.com/?page_id=45. Retrieved July 2012. Cyberneticzoo.com. -

Humanoid Robot: ASIMO

Special Issue - 2017, International Journal of Engineering Research & Technology (IJERT), ISSN: 2278-0181, ICADEMS - 2017 Conference Proceedings. Humanoid Robot: ASIMO. Vipul Dalal (1) and Upasna Setia (2), Department of Computer Science & Engineering, GITAM Kablana (Jhajjar), India. Abstract: Today we find most robots working for people in industries, factories, warehouses, and laboratories. Robots are useful in many ways. For instance, they boost the economy because businesses need to be efficient to keep up with the industry competition. Therefore, having robots helps business owners to be competitive, because robots can do jobs better and faster than humans can, e.g. a robot can build and assemble a car. Yet robots cannot perform every job; today robots' roles include assisting research and industry. Finally, as the technology improves, there will be new ways to use robots which will bring new hopes and new potentials. Index Terms: Humanoid robots, ASIMO, Honda. I. INTRODUCTION: A HUMANOID ROBOT is a robot with its body shape built ... It has been suggested that very advanced robotics will facilitate the enhancement of ordinary humans. Although the initial aim of humanoid research was to build better orthotics and prosthesis for human beings, knowledge has been transferred between both disciplines. A few examples are: powered leg prosthesis for neuromuscular impaired, ankle-foot orthotics, biological realistic leg prosthesis and forearm prosthesis. Besides the research, humanoid robots are being developed to perform human tasks like personal assistance, where they should be able to assist the sick and elderly, and dirty or dangerous jobs. Regular jobs like being a receptionist or a worker of an automotive manufacturing line are also suitable for humanoids. -

Honda Motor Company

ŠKODA AUTO a.s., Secondary Vocational School of Engineering (Střední odborné učiliště strojírenské), branch plant. Honda motor company. Jakub Kareš. Field of study: 39-41-L/51 Autotronik. Class: X2. School year: 2016/2017. Graduation thesis assignment for: Jakub Kareš. Subject: Vocational subjects. Assignment: Honda motor co. Thesis supervisor: Ing. Jan Heidler. Thesis submission deadline: 31.03.2017. Declaration: I declare that I prepared the graduation thesis on the topic "Comfort equipment of automobiles" independently, using the cited sources and literature. Mladá Boleslav, 27.03.2017. Signature: ... Contents: 1. Introduction; 2. History; 3. VTEC; 4. Product types; 5. VIN; 6. Conclusion; 7. Acknowledgements, sources