Implicit Linking of Food Entities in Social Media

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Ancient Mysteries Revealed on Secrets of the Dead A

NEW FROM AUGUST 2019 2019 NOVA ••• The in-depth story of Apollo 8 premieres Wednesday, December 26. DID YOU KNOW THIS ABOUT WOODSTOCK? ••• Page 6 Turn On (Your TV), Tune In Premieres Saturday, August 17, at 8pm on KQED 9 Ancient Mysteries A Heartwarming Shetland Revealed on New Season of Season Premiere!Secrets of the Dead Call the Midwife AUGUST 1 ON KQEDPAGE 9 XX PAGE X CONTENTS 2 3 4 5 6 22 PERKS + EVENTS NEWS + NOTES RADIO SCHEDULE RADIO SPECIALS TV LISTINGS PASSPORT AND PODCASTS Special events Highlights of What’s airing Your monthly guide What’s new and and member what’s happening when New and what’s going away benefits recommended PERKS + EVENTS Inside PBS and KQED: The Role and Future of Public Media Tuesday, August 13, 6:30 – 7:30pm The Commonwealth Club, San Francisco With much of the traditional local news space shrinking and trust in news at an all-time low, how are PBS and public media affiliates such as KQED adapting to the new media industry and political landscapes they face? PBS CEO and President Paula Kerger joins KQED President and CEO Michael Isip and President Emeritus John Boland to discuss the future of public media PERK amidst great technological, political and environmental upheaval. Use member code SpecialPBS for $10 off at kqed.org/events. What Makes a Classic Country Song? Wednesday, August 21, 7pm SFJAZZ Center, San Francisco Join KQED for a live concert and storytelling event that explores the essence and evolution of country music. KQED Senior Arts Editor Gabe Meline and the band Red Meat break down the musical elements, tall tales and true histories behind some iconic country songs and singers. -

Town Wide Newsletter Fall- Winter 2013

Issue No. 84 Fall/Winter 2013 Town of Malta Founded 1802 A MESSAGE FROM THE SUPERVISOR Dear Resident: The construction of GlobalFoundries has been transformational for Saratoga County and Malta. In the past 8 years we have seen GlobalFoundries not only build the first semiconductor manufacturing plant, but add to their 229 acre complex a large administrative building and then a Technology Development Center, bringing the total workforce to 2,000. On August 19, the Town Board amended zoning for the Campus to clear the way for even further expansion. See companion article on page 2. This driving force in our economy has brought a “second tier” of businesses - INSIDE THIS ISSUE: high-tech suppliers such as; Tokyo Electron, KLA Tencor, DNSE, Novellus, Applied Malta’s New Businesses Materials, Ovivo Water, Ebara Technologies, Lansing Engineering and Variant Technologies. Round Lake Road Improvement Plans And, there are a number of “third tier” businesses that have emerged. Page 2 contains a list of these businesses. Senior Corner As we jokingly say, Malta “The Center of the Universe” has become the job center for Saratoga County. During this growth period, the Town Board has provided Residents In The News steady leadership to not only support economic development, but to protect our quality of life. We welcome all our new businesses to The Malta Town Family. We welcome your EVENTS: culture of excellence and public service. We encourage you and your employees Malta Community Day to become part of our community in ways best for you, whether as a volunteer for our fire departments, donor at our blood drives or members of our theatre group. -

Maltese Immigrants in Detroit and Toronto, 1919-1960

Graduate Theses, Dissertations, and Problem Reports 2018 Britishers in Two Worlds: Maltese Immigrants in Detroit and Toronto, 1919-1960 Marc Anthony Sanko Follow this and additional works at: https://researchrepository.wvu.edu/etd Recommended Citation Sanko, Marc Anthony, "Britishers in Two Worlds: Maltese Immigrants in Detroit and Toronto, 1919-1960" (2018). Graduate Theses, Dissertations, and Problem Reports. 6565. https://researchrepository.wvu.edu/etd/6565 This Dissertation is protected by copyright and/or related rights. It has been brought to you by the The Research Repository @ WVU with permission from the rights-holder(s). You are free to use this Dissertation in any way that is permitted by the copyright and related rights legislation that applies to your use. For other uses you must obtain permission from the rights-holder(s) directly, unless additional rights are indicated by a Creative Commons license in the record and/ or on the work itself. This Dissertation has been accepted for inclusion in WVU Graduate Theses, Dissertations, and Problem Reports collection by an authorized administrator of The Research Repository @ WVU. For more information, please contact [email protected]. Britishers in Two Worlds: Maltese Immigrants in Detroit and Toronto, 1919-1960 Marc Anthony Sanko Dissertation submitted to the Eberly College of Arts and Sciences at West Virginia University in partial fulfillment of the requirements for the degree of Doctor of Philosophy in History Kenneth Fones-Wolf, Ph.D., Chair James Siekmeier, Ph.D. Joseph Hodge, Ph.D. Melissa Bingmann, Ph.D. Mary Durfee, Ph.D. Department of History Morgantown, West Virginia 2018 Keywords: Immigration History, U.S. -

4849 Nurse Goes on Queen

,'>V •.r •- 7 , t ► -r A <5 ‘ i W •v-v* ■- . *V NBT PRESS RUR AVERAGE DAILY CIRCULATION THE WBATinnt. OF THE EVBNINO HERALD for die month of September, 1988. Partly clondy end colder tonlidit. Tuesday fair and continiied cold. 4 , 8 4 9 ■'^1 -.n _ - .-ij y -tt. VOL. XLVn NO. 21. Otasslfled Adyertlstng on Page • MANCHBS CONNW:MONOAY, OCTOBl^ 25, 19^^K; PRICE THREE CENtS .•»-:Vv\VvV- WWW • .. NURSE GOES ON U. S. TO FINISH A 400,000 GATHER of a,New York Mojdd' WASHINGTON JOB ■i y PLAN PREMICAN To Complete Dismal Swamp Among QUEEN MARIE’S Canal Surveyed by the First AT WEDDING OF ’ -50 . Who ’P^ Sr’Day REMOVEOFFICE President. Injurious. W E S ™ T R IP Washington, Oct. 25.—The SULTMS SONS VNewrjTorK, Oct. 26.-r-Five HOLDERATWILL United States government is dcf)^ a week in the, nation’s in- about to complete a Job which .dusitrles as adyoeated by Henry George Washington started in . Ford'meets with strenuons ob- Attends Rumanian Qneen 1785. Double Nuptials of-Moroc jjsctipp on ..fi^ty. leading manu- Snpreme Conrt, Backmg The Department of Justice ' '• lopturera. .according- to' a state announced today that legal ex can Princes Becomes ment made publte today by the as Tonr to Pacific Begins, perts have virtually finished National-Association of mann-4 WOson’s Act, Settles Dis- the preparation of titles to the . ' ' -1 ' ■ lactu^'ra^! ■' Thongii Throat Is Called Dismal Swamp property, con , Triple Affair and Vast W hTe^di^tQr oL H # : Summ.a.rised, the specific ob- pate M Senate Half necting the Elizabeth river Iq. -

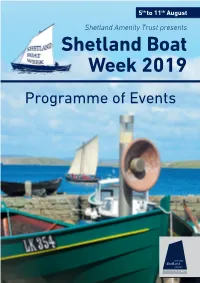

Shetland Boat Week 2019 Programme of Events

5th to 11th August Shetland Amenity Trust presents Shetland Boat Week 2019 Programme of Events Shetland Amenity Trust Proud to support Shetland Boat Week Discover more at www.northlinkferries.co.uk Operated by Welcome to Shetland Boat Week 2019 Shetland Amenity Trust Shetland Amenity Trust is very proud to present the fourth year of maritime heritage activity with Shetland Boat Week 2019. The programme is back to full strength this year with many new events, activities and features. The organising committee are particularly proud of, and grateful for, the collaboration and co-operation between the sponsors, clubs, organisations and individuals, without which, this festival would not be possible. The ambition of Shetland Boat Week is to encourage and enable the use of boats of all kinds, by all ages. No less important is getting people on the water and reviving the passion for Shetland’s maritime heritage, by encouraging the maintenance of traditional boats and retention of traditional skills. This programme of events facilitates access to a range of maritime themed activities for all ages. Many events take place in and around Hay’s Dock in Lerwick, with active encouragement to visit the nautical offerings from Unst to Sandsayre and everywhere in between. Thank you for taking part in this year’s festival and a very special thanks goes to our 2019 Shetland Boat Week Sponsors: Ruth Mackenzie, Chair, SAT www.oceankinetics.co.uk www.lerwick-harbour.co.uk www.somuchtosea.co.uk www.northlinkferries.co.uk Support has also been received from: Promote Shetland and Hay & Co. Buildbase 3 Contents Page 6 .. -

In Other Words: Maltese Primary School Teachers' Perceptions Of

In Other Words: Maltese Primary School Teachers’ Perceptions of Cross-linguistic Practices and Flexible Language Pedagogies in Bilingual and Multilingual English Language Classes. Michelle Panzavecchia A thesis submitted in partial fulfilment of the requirements for the degree of Doctor of Philosophy The University of Sheffield School of Education September 2020 Abstract As a result of globalisation, bilingualism and multilingualism are becoming more of a norm rather than an exception and speaking two or more languages is associated with multiple benefits. Bilingual social identities are shaped by language acquisition and socialisation, and educators construct their own teacher identities and pedagogies through their past personal, educational, and professional experiences. This study provides a basis for critical reflection and discussion amongst English language primary school Maltese teachers, to explore how their bilingual identities affect their pedagogical practices. The study probes into teacher’s perceptions on whether, why and how cross-linguistic pedagogies are beneficial within bilingual and multilingual language classroom settings. Data was collected through in-depth semi-structured interviews with nine purposely selected primary school teachers, each with over ten years teaching experience, to explore their bilingual identities and beliefs, how being bilingual may affect their pedagogical practices, and to investigate whether they believe they are using cross-linguistic practices during English lessons. The process of data collection and analysis highlighted the fact that educators’ perceived pedagogies, beliefs, and language preferences stem from their own personal, educational, and teaching experiences, and are embedded in Malta’s socio-cultural context. Maltese teachers believe that they use fluid language practices in their classrooms as a natural part of their daily communicative practices, and as a means of reaching out to all their students. -

Our New E-Commerce Enabled Shop Website Is Still Under Construction

Our new e-commerce enabled shop website is still under construction. In the meantime, to order any title listed in this booklist please email requirements to [email protected] or tel. +44(0)1595 695531 The Shetland Times Bookshop 2009, Page 2 CONTENTS About us! 2; Shetland – General 3; Shetland – Knitting 14; Shetland – Music 15; Shetland – Nature 16; Shetland – Nautical 17; Children – Shetland/Scotland 18; Orkney – Mackay Brown 20; Orkney 20; Scottish A-Z 21; Shetland – Viking & Picts 22; Shetland Maps ... -

The Amazing Race 1 Leg 1: Livingstone, Zambia Leg 2: Paris

The Amazing Race 1 The Amazing Race 6 Leg 1: Livingstone, Zambia Leg 1: Grindavik, Iceland Leg 2: Paris, France Leg 2: Voss, Norway Leg 3: Les Baux-de-Provence, France Leg 3: Stockholm, Sweden Leg 4: El Jem, Tunisia Leg 4: Goree, Senegal Leg 5: Guermessa, Tunisia Leg 5: Berlin, Germany Leg 6: Sant'Agata Bolognese, Italy Leg 6: Eger, Hungary Leg 7: Agra, India Leg 7: Budapest, Hungary Leg 8: Bikaner, India Leg 8: L'Ile Rousse, France Leg 9: Krabi, Thailand Leg 9: Lalibela, Ethiopia Leg 10: Ao Nang, Thailand Leg 10: Sigiriya, Sri Lanka Leg 11: Beijing, China Leg 11: Shanghai, China Leg 12: Denali, Alaska Leg 12: Xi'an, China Leg 13: New York, New York Leg 13: Chicago, Illinois (via Hawaii) The Amazing Race 2 The Amazing Race 7 Leg 1: Rio de Janeiro, Brazil Leg 1: Cusco, Peru Leg 2: Foz do Iguaçu, Brazil Leg 2: Santiago, Chile Leg 3: Stellenbosch, South Africa Leg 3: Mendoza, Argentina Leg 4: Windhoek, Namibia Leg 4: Vicente Cesares, Argentina Leg 5: Amphawa, Thailand Leg 5: Soweto, South Africa Leg 6: Chiang Mai, Thailand Leg 6: Makgadikgadi Pans, Botswana Leg 7: Hong Kong, China Leg 7: Khwai, Botswana Leg 8: Sydney, Australia Leg 8: Lucknow, India Leg 9: Coober Pedy, Australia Leg 9: Jodhpur, India Leg 10: Mt. Somers, New Zealand Leg 10: Istanbul, Turkey Leg 11: Auckland, New Zealand Leg 11: London, England Leg 12: Maui, Hawaii Leg 12: Montego Bay, Jamaica Leg 13: San Francisco, California (via Alaska) Leg 13: Miami, Florida (via Puerto Rico) The Amazing Race 3 The Amazing Race 8 Leg 1: Puente D'Ixtla, Mexico Leg 1: Lancaster, Pennsylvania -

The Northern Isles Tom Smith & Chris Jex

Back Cover - South Mainland, Clift Sound, Shetland | Tom Smith Back Cover - South Mainland, Clift Sound, Shetland | Tom Orkney | Chris Jex Front Cover - Sandstone cliffs, Hoy, The NorthernThe Isles PAPA STOUR The Northern Isles orkney & shetland sea kayaking The Northern Isles FOULA LERWICK orkney & shetland sea kayaking Their relative isolation, stunning scenery and Norse S h history make Orkney and Shetland a very special e t l a n d place. For the sea kayaker island archipelagos are particularly rewarding ... none more so than these. Smith Tom Illustrated with superb colour photographs and useful FAIR ISLE maps throughout, this book is a practical guide to help you select and plan trips. It will provide inspiration for future voyages and a souvenir of & journeys undertaken. Chris Jex WESTRAY As well as providing essential information on where to start and finish, distances, times and tidal information, this book does much to ISBN 978-1-906095-00-0 stimulate interest in the r k n e y environment. It is full of OKIRKWALL facts and anecdotes about HOY local history, geology, scenery, seabirds and sea 9781906 095000 mammals. Tom Smith & Chris Jex PENTLAND SKERRIES SHETLAND 40 Unst SHETLAND Yell ORKNEY 38 36 39 North Roe Fetlar 35 37 34 33 Out Skerries 41 Papa 32 Stour Whalsay 31 30 43 42 ORKNEY 44 Foula Lerwick 29 28 Bressay 27 North 45 Ronaldsay Burra 26 25 46 Mousa 50 Westray 24 Eday 23 48 21 47 Rousay Sanday 16 22 20 19 Stronsay 49 18 Fair Isle 14 Shapinsay 12 11 17 15 Kirkwall 13 Hoy 09 07 05 10 08 06 04 03 South Ronaldsay 02 01 Pentland Skerries The Northern Isles orkney & shetland sea kayaking Tom Smith & Chris Jex Pesda Press www.pesdapress.com First published in Great Britain 2007 by Pesda Press Galeri 22, Doc Victoria Caernarfon, Gwynedd LL55 1SQ Wales Copyright © 2007 Tom Smith & Chris Jex ISBN 978-1-906095-00-0 The Authors assert the moral right to be identified as the authors of this work. -

October 2014

-DINING-GUIDE CONCERTS SPORTS-SCHEDULES MOVIES TV- FREE OCTOBER 2014 READ IT! new vetted bar & restaurant @ MrKupTweets LISTINGS Bartender Tressa of Spaghetti Benders THIS MONTH PARADE 10 A.M. ON 11TH Updated Daily on Twitter 21, 22 & 23 @ 157 GUIDE week-long costume celebrations 157LIVE.com TUES-SAT / KITCHEN OPEN WE DELIVER LATE ‘TIL 3 AM ‘TIL 3 AM AFTER MID. PIZZA • FRIES $1 DOLLAR DEALS! DOUGH ROLLS $ 2 BUD LIGHT Pitchers 10-12 Tuesdays & Thursdays OPEN 11 AM EVERYDAY Full Menu & Specials Online 566 Phila. St. • 724-801-8628 GrubsSportsBar.com BUD LIGHT SPECIALS: TUESDAY $4 BL PITCHERS 10-12 WEDNESDAY $1.25 D. DRAFTS 10-12 THURSDAY $4 BL PITCHERS 10-12 FRIDAY $2.25 BL DRAFTS ALL DAY $1 BL DRAFTS 10-12 SATURDAY $4 BL PITCHERS 10-12 $2.25 BUD LIGHT Drafts All Day Friday 642 Philadelphia St. r 724.465.8082 r TheConey.com Available as a Free Download at: downtownindiana.mobapp.at $ 2 BUD LIGHT Pitchers Tuesdays & Thursdays Also, Don’t Miss the Annual Oktoberfest October 4th at the Old Borough Building Parking Lot — Food, Entertainment & Brews THE QUINTESSENTIAL INDIANA EATING & DRINKING GUIDE A-Z There are dozens of places to wine and dine in the THE CONEY 642 Phila. St. 724-465-8082 greater Indiana area. So, to assist you in selecting an Established in 1933, full-service restaurant, establishment with the ambiance and cuisine you three bars, catering on or off premises, dance desire, we’ve added brief descriptions to give you an club, game room, outdoor café; Irish specialties idea of what you can expect. -

HAPPY HOLIDAYS! the ORW RANKINGS for 1984 The Ohio Racewalker is proud to announce its Annual World and U.S

VOLUME XXI NUMBER 10 COLUMBUS, OHIO DECEMBER 1984 HAPPY HOLIDAYS! THE ORW RANKINGS FOR 1984 The Ohio Racewalker is proud to announce its annual world and U.S. rankings for both men and women at the primary distances. As usual, the whole world has been impatiently awaiting the momentous announcement of these prestigious rankings. Of course, those honored receive absolutely no reward and may never even hear of their selection--only a few hep individuals read the ORW, but how much glory is there in race walking anyway? This marks the 14th consecutive year the ORW has ranked the world's top male walkers at 20 and 50 kilometers, the 12th time we have ranked U.S. men at the same two distances, and the seventh year for ranking women, both world and U.S., at 5 and 10 kilometers. (Actually, we have not ranked U.S. women at 5 Km this year, because there was just too little action, at least reported to us, to justify rankings.) The rankings are based on the athletes' record over the season and represent a consensus of opinion between your editor and absolutely nobody else -- they are subjective and arbitrary. Criteria used are times, record in major competition, head-to-head competition, and personal bias. The rankings at each distance are followed by lists of the top times for the year. 1984 WORLD 20 KM RANKINGS 1. Ernesto Canto, Mexico 5. Ralf Kowalsky, Ger. Dem. Rep. 1:26:13 ~2) Guadalajara 4/1 1:22 1)6 !1) Naumburg 5/1 1:18:4ot 1) Bergen 5/5 l :25fOJ J~ Copenhagen 5/12 1:23:13 1) Olympics 8/J 1125 _56 2 Stockholm 5/26 2. -

The AMAZING LIBRARY RACE!

The AMAZING LIBRARY RACE! A library family program based on the television reality show “The Amazing Race” This program requires teams to complete ten challenges at ten different Dewey Decimal stations within the library. Each team must choose between two activities at each station and earn points for each challenge completed. Then the teams race to check in at the Finish Line where additional points are awarded according to the check-in order. Choosing the challenges with higher point values may get you ahead, but only if you finish sooner than everyone else! Created by Rebecca E. Smiley, Children’s Services Manager ~ [email protected] Children’s Dept. ~ Citizens Library 55 S. College St. ~ Washington, PA 15301 724-222-2400, ext. 235 ~ www.washlibs.org/citizens “Citizens Library’s mission is to provide the resources to enrich, inform, and educate the public.” Welcome to THE AMAZING LIBRARY RACE! This “AMAZING LIBRARY RACE” is loosely based on the television show “The Amazing Race,” but in this game teams will complete challenges based on the subject areas of the Dewey Decimal System. Here’s how it works: 1. First, you must divide into teams. Each team must have at least 2 people, but no more than 4. Your team must work together, and stay together for the entire race. 2. Each team will receive a passport and a sealed clue that will direct you to your starting point. 3. Each team starts at a different point, but must collect passport stamps and points from all 10 stations. 4. At each station there is a “Challenge” choice; you must choose one of two activities to complete at that station.