Warmsley Cornellgrad 0058F 1

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


New Hate and Old: The Changing Face of American White Supremacy

A report from the Center on Extremism, 09 18. New Hate and Old: The Changing Face of American White Supremacy. Our Mission: To stop the defamation of the Jewish people and to secure justice and fair treatment for all. ABOUT THE CENTER ON EXTREMISM: The ADL Center on Extremism (COE) is one of the world’s foremost authorities on extremism, terrorism, anti-Semitism and all forms of hate. For decades, COE’s staff of seasoned investigators, analysts and researchers have tracked extremist activity and hate in the U.S. and abroad, online and on the ground. The staff, which represent a combined total of substantially more than 100 years of experience in this arena, routinely assist law enforcement with extremist-related investigations, provide tech companies with critical data and expertise, and respond to wide-ranging media requests. As ADL’s research and investigative arm, COE is a clearinghouse of real-time information about extremism and hate of all types. COE staff regularly serve as expert witnesses, provide congressional testimony and speak to national and international conference audiences about the threats posed by extremism and anti-Semitism. You can find the full complement of COE’s research and publications at ADL.org. ADL (Anti-Defamation League) fights anti-Semitism and promotes justice for all. Join ADL to give a voice to those without one and to protect our civil rights. Learn more: adl.org. Cover: White supremacists exchange insults with counter-protesters as they attempt to guard the entrance to Emancipation Park during the ‘Unite the Right’ rally August 12, 2017 in Charlottesville, Virginia.

How Informal Justice Systems Can Contribute

United Nations Development Programme, Oslo Governance Centre. The Democratic Governance Fellowship Programme. Doing Justice: How informal justice systems can contribute. Ewa Wojkowska, December 2006. Contents: Acknowledgements; List of Acronyms and Abbreviations; Research Methods; Executive Summary; Chapter 1: Introduction; Key Definitions; Chapter 2: Why are informal justice systems important?; UNDP’s Support to the Justice Sector 2000-2005; Chapter 3: Characteristics of Informal Justice Systems; Strengths; Weaknesses; Chapter 4: Linkages between informal and formal justice systems; Chapter 5: Recommendations for how to engage with informal justice systems; Examples of Indicators; Key features of selected informal justice systems. Acknowledgements: I am grateful for the opportunity provided by UNDP and the Oslo Governance Centre (OGC) to undertake this fellowship and thank all OGC colleagues for their kindness and support throughout my stay in Oslo. I would especially like to thank the following individuals for their contributions and support throughout the fellowship period: Toshihiro Nakamura, Nina Berg, Siphosami Malunga, Noha El-Mikawy, Noelle Rancourt, Noel Matthews from UNDP, and Christian Ranheim from the Norwegian Centre for Human Rights. Special thanks also go to all the individuals who took their time to provide information on their experiences of working with informal justice systems and UNDP Indonesia for releasing me for the fellowship period. Any errors or omissions that remain are my responsibility alone.

An Evaluation of Theonomic Neopostmillennialism Thomas D

Liberty University, DigitalCommons@Liberty University, Faculty Publications and Presentations, School of Religion, 1988. An Evaluation of Theonomic Neopostmillennialism, Thomas D. Ice, Liberty University, [email protected]. Follow this and additional works at: http://digitalcommons.liberty.edu/sor_fac_pubs Recommended Citation: Ice, Thomas D., "An Evaluation of Theonomic Neopostmillennialism" (1988). Faculty Publications and Presentations. Paper 103. http://digitalcommons.liberty.edu/sor_fac_pubs/103 This Article is brought to you for free and open access by the School of Religion at DigitalCommons@Liberty University. It has been accepted for inclusion in Faculty Publications and Presentations by an authorized administrator of DigitalCommons@Liberty University. For more information, please contact [email protected]. An Evaluation of Theonomic Neopostmillennialism. Thomas D. Ice, Pastor, Oak Hill Bible Church, Austin, Texas. Today Christians are witnessing "the most rapid cultural realignment in history."1 One Christian writer describes the last 25 years as "The Great Rebellion," which has resulted in a whole new culture replacing the more traditional Christian-influenced American culture.2 Is the light flickering and about to go out? Is this a part of the further development of the apostasy that many premillennialists say is taught in the Bible? Or is this "post-Christian" culture3 one of the periodic visitations of a judgment/salvation4 which is furthering the coming of a postmillennial kingdom? Leaders of the 1 Marilyn Ferguson,

FUNDING HATE How White Supremacists Raise Their Money

RESPONDING TO HATE. FUNDING HATE: How White Supremacists Raise Their Money. Contents: Introduction; Self-Funding; Organizational Funding; Criminal Activity; The New Kid on the Block: Crowdfunding; Bitcoin and Cryptocurrencies; The Future of White Supremacist Funding. It’s one of the most frequent questions the Anti-Defamation League gets asked: WHERE DO WHITE SUPREMACISTS GET THEIR MONEY? Implicit in this question is the assumption that white supremacists raise a substantial amount of money, an assumption fueled by rumors and speculation about white supremacist groups being funded by sources such as the Russian government, conservative foundations, or secretive wealthy backers. The reality is less sensational but still important. As American political and social movements go, the white supremacist movement is particularly poorly funded. Small in numbers and containing many adherents of little means, the white supremacist movement has a weak base for raising money compared to many other causes. Moreover, ostracized because of its extreme and hateful ideology, not to mention its connections to violence, the white supremacist movement does not have easy access to many common methods of raising and transmitting money. This lack of access to funds and funds transfers limits what white supremacists can do and achieve. However, the means by which the white supremacist movement does raise money are important to understand. Moreover, recent developments, particularly in crowdfunding, may have provided the white supremacist movement with more fundraising opportunities than it has seen in some time. This raises the disturbing possibility that some white supremacists may become better funded in the future than they have been in the past.

Psychological and Personality Profiles of Political Extremists

Psychological and Personality Profiles of Political Extremists Meysam Alizadeh1,2, Ingmar Weber3, Claudio Cioffi-Revilla2, Santo Fortunato1, Michael Macy4 1 Center for Complex Networks and Systems Research, School of Informatics and Computing, Indiana University, Bloomington, IN 47405, USA 2 Computational Social Science Program, Department of Computational and Data Sciences, George Mason University, Fairfax, VA 22030, USA 3 Qatar Computing Research Institute, Doha, Qatar 4 Social Dynamics Laboratory, Cornell University, Ithaca, NY 14853, USA Abstract Global recruitment into radical Islamic movements has spurred renewed interest in the appeal of political extremism. Is the appeal a rational response to material conditions or is it the expression of psychological and personality disorders associated with aggressive behavior, intolerance, conspiratorial imagination, and paranoia? Empirical answers using surveys have been limited by lack of access to extremist groups, while field studies have lacked psychological measures and failed to compare extremists with contrast groups. We revisit the debate over the appeal of extremism in the U.S. context by comparing publicly available Twitter messages written by over 355,000 political extremist followers with messages written by non-extremist U.S. users. Analysis of text-based psychological indicators supports the moral foundation theory which identifies emotion as a critical factor in determining political orientation of individuals. Extremist followers also differ from others in four of the Big -

Your Guide to Virginia Laws and Law Enforcement

Your guide to VIRGINIA LAWS and LAW ENFORCEMENT. Teamwork approach to building relationships with police; Removing barriers to communication with youth; Unified approach to mutual understanding; Separating out individual and group prejudice; Teaching the benefits of recognizing and reporting crime. TABLE OF CONTENTS: Message from Chesterfield Police; Our Mission and Goals; Police Encounters; Laws; Alcohol-Related Crimes; Group Offenses; Crimes Against Others; Crimes Against Property; Drug Crimes; Weapons Crimes; Cyber Crimes; Crimes Against Peace; Traffic Infractions; Social Media; Crime and the Community; Law Enforcement Vocabulary. Message from us: The Chesterfield County Police Department’s most important goal is the constant safety of you, your families, friends, and neighbors. You are the future leaders of our county and the United States as a whole; therefore, we place special emphasis on providing this service to young people such as yourself. The officers of Chesterfield County are people just like you; they are your neighbors, friends, and family. Therefore, we believe that by creating a partnership with our neighborhoods, community policing will become everybody’s job and a responsibility that we all share. This guide is designed to help you understand how police work, explain how officers enforce the law, and help you make good decisions when meeting police officers at any time. Inside you will find definitions of the law, information about the most common types of crimes, how to safely report criminal activity, and several different ways to keep you and your family safe.

In Quest for a Culture of Peace in the IGAD Region: The Role of Intellectuals and Scholars

The Intergovernmental Authority on Development (IGAD) region comprising Kenya, Uganda, Sudan, Somalia, Ethiopia, Eritrea and Djibouti faces a distinct set of problems stemming from diverse historical, social, economic, political and cultural factors. Despite the socio-cultural affinities and economic interdependence between the peoples of this region, the IGAD nations have not developed coherent policies for regional integration, economic cooperation and lasting peace and stability. Intermittent conflicts between and within nations, poor governance, low economic performance, and prolonged drought which affects food security, are the key problems that bedevil the region. But in recent years the IGAD countries have made great strides towards finding lasting solutions to these problems. The African Union Peace and Security Council and IGAD have gained significant mileage in conflict resolution. Major milestones include the signing of the Comprehensive Peace Agreement between the Government of Sudan and the Sudan People’s Liberation Movement (SPLA/SPLM) in January 2005. Ethiopia held democratic multi-party elections in May 2005 and Kenya carried out a referendum on the draft constitution in November 2005. Somalia’s newly established Transitional Federal Government was able to relocate to Somalia in 2005 as a first step towards establishing lasting peace. The East African sub-region countries of Kenya, Uganda and Tanzania have made progress towards economic and eventual political integration through the East African Community and Customs Union treaties.

Classification and Its Consequences for Online Harassment: Design Insights from Heartmob

Classification and Its Consequences for Online Harassment: Design Insights from HeartMob LINDSAY BLACKWELL, University of Michigan School of Information JILL DIMOND, Sassafras Tech Collective SARITA SCHOENEBECK, University of Michigan School of Information CLIFF LAMPE, University of Michigan School of Information Online harassment is a pervasive and pernicious problem. Techniques like natural language processing and machine learning are promising approaches for identifying abusive language, but they fail to address structural power imbalances perpetuated by automated labeling and classification. Similarly, platform policies and reporting tools are designed for a seemingly homogenous user base and do not account for individual experiences and systems of social oppression. This paper describes the design and evaluation of HeartMob, a platform built by and for people who are disproportionately affected by the most severe forms of online harassment. We conducted interviews with 18 HeartMob users, both targets and supporters, about their harassment experiences and their use of the site. We examine systems of classification enacted by technical systems, platform policies, and users to demonstrate how 1) labeling serves to validate (or invalidate) harassment experiences; 2) labeling motivates bystanders to provide support; and 3) labeling content as harassment is critical for surfacing community norms around appropriate user behavior. We discuss these results through the lens of Bowker and Star’s classification theories and describe implications -

In the United States District Court for the Western District of Virginia Charlottesville Division

IN THE UNITED STATES DISTRICT COURT FOR THE WESTERN DISTRICT OF VIRGINIA, CHARLOTTESVILLE DIVISION. DEANDRE HARRIS, Plaintiff, v. JASON KESSLER, RICHARD SPENCER, VANGUARD AMERICA, ANDREW ANGLIN, MOONBASE HOLDINGS, LLC, ROBERT “AZZMADOR” RAY, NATHAN DAMIGO, ELLIOT KLINE AKA ELI MOSLEY, IDENTITY EVROPA, MATTHEW HEIMBACH, MATTHEW PARROT AKA DAVID MATTHEW PARROTT, TRADITIONALIST WORKERS PARTY, MICHAEL HILL, MICHAEL TUBBS, LEAGUE OF THE SOUTH, JEFF SCHOEP, NATIONAL SOCIALIST MOVEMENT, NATIONALIST FRONT, AUGUSTUS SOL INVICTUS, FRATERNAL ORDER OF THE ALT-KNIGHTS, MICHAEL “ENOCH” PEINOVICH, LOYAL WHITE KNIGHTS OF THE KU KLUX KLAN, AND EAST COAST KNIGHTS OF THE KU KLUX KLAN AKA EAST COAST KNIGHTS OF THE TRUE INVISIBLE EMPIRE, NATIONAL POLICY INSTITUTE, THE PROUD BOYS, COUNCIL OF CONSERVATIVE CITIZENS, AMERICAN RENAISSANCE, THE RED ELEPHANTS, AMERICAN FREEDOM KEEPERS, ALTRIGHT.COM, DANIEL P. BORDEN, ALEX MICHAEL RAMOS, JACOB SCOTT GOODWIN, TYLER WATKINS DAVIS, JOHN DOE 1, JOHN DOE 2, Defendants. CIVIL ACTION NO. 3:19CV00046. JURY TRIAL. Case 3:19-cv-00046-NKM, Document 1, Filed 08/12/19. PLAINTIFF’S ORIGINAL COMPLAINT. TO THE HONORABLE UNITED STATES DISTRICT JUDGE: Plaintiff, DeAndre Harris, by his undersigned attorney alleges upon knowledge as to himself and his own actions and upon information and belief as to all the other matters, as follows: I. NATURE OF THE ACTION. 1. This is an action brought by the Plaintiff against the Defendants for violation of Plaintiff’s individual rights to be free from assault and battery, and, consequently, in violation of his civil rights pursuant to 42 U.S.C.

Aryan Nations Deflates

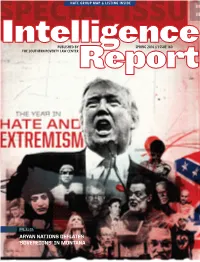

Published by the Southern Poverty Law Center. Spring 2016 // Issue 160. Hate group map & listing inside. Plus: Aryan Nations deflates; ‘Sovereigns’ in Montana. EDITORIAL: A Year of Living Dangerously, by Mark Potok. Anyone who read the newspapers last year knows that 2015 saw some horrific political violence. A white supremacist murdered nine black churchgoers in Charleston, S.C. Islamist radicals killed four U.S. Marines in Chattanooga, Tenn., and 14 people in San Bernardino, Calif. An anti-abortion extremist shot three people to death at a Planned Parenthood clinic in Colorado Springs, Colo. But not many understand just how bad it really was. Here are some of the lesser-known political cases that cropped up: A West Virginia man was arrested for allegedly plotting to attack a courthouse and murder first responders; a Missourian was accused of planning to murder police officers; a former Congressional candidate in Tennessee allegedly conspired ... suicide and drug overdose deaths are way up, less educated workers increasingly are finding it difficult to earn a living, and income inequality is at near historic levels. Of course, all that and more is true for most racial minorities, but the pressures on whites who have historically been more privileged is fueling real fury. It was in this milieu that the number of groups on the radical right grew last year, according to the latest count by the Southern Poverty Law Center. The numbers of hate and of antigovernment “Patriot” groups were both up by about 14% over 2014, for a new total of 1,890 groups. While most categories of hate groups declined, there were significant increases among Klan groups, which were energized by the battle over the Confederate battle flag, and racist black separatist groups, which grew largely because of highly publicized incidents of police shootings of black men.

People for the American Way Collection of Conservative Political Ephemera, 1980-2004

http://oac.cdlib.org/findaid/ark:/13030/hb596nb6hz No online items. Finding Aid to People for the American Way Collection of Conservative Political Ephemera, 1980-2004. Sarah Lopez. The processing of this collection was made possible by the Center for the Comparative Study of Right-Wing Movements, Institute for the Study of Social Change, U.C. Berkeley, and by the generosity of the People for the American Way. The Bancroft Library, University of California, Berkeley, Berkeley, CA 94720-6000. Phone: (510) 642-6481. Fax: (510) 642-7589. Email: [email protected] URL: http://bancroft.berkeley.edu/ © 2011 The Regents of the University of California. All rights reserved. Collection number: BANC MSS 2010/152. Finding Aid Author(s): Sarah Lopez. Finding Aid Encoded By: GenX.
Collection Summary. Collection Title: People for the American Way collection of conservative political ephemera. Date (inclusive): 1980-2004. Collection Number: BANC MSS 2010/152. Creator: People for the American Way. Extent: 118 cartons; 1 box; 1 oversize box. Circa 148 linear feet. Repository: The Bancroft Library. Abstract: This collection is comprised of ephemera assembled by the People For the American Way and pertaining to right-wing movements in the United States.

16-40. Status in Police Department

POLICE DEPARTMENT PLL § 16-40 PARK POLICE § 16-40. Status in Police Department. Any person who became a member of the Baltimore City Police Department as a result of the merger of the Park Police, a Division of the Department of Recreation and Parks, of the City of Baltimore, with the Police Department shall be deemed to have been a member of the Baltimore City Police Department for the period such person was employed as a member of the said Park Police Division; and the period of each person’s employment time spent with the Park Police Division prior to the effective date of the merger on January 1, 1961, shall be held to have been spent in the service of the Baltimore City Police Department for purposes of probationary period, seniority rating, length of service for compensation, or additional compensation, eligibility for promotion and all other purposes except eligibility for membership in the Special Fund for Widows; and each person shall continue in the rank attained in the Park Police Division during his tenure in the Baltimore City Police Department, until promoted, reduced, retired, dropped, dismissed, or otherwise altered, according to law, and in the same manner as other members of the Baltimore City Police Department. Any person who is a member of the Baltimore City Police Department shall be given credit for all the purposes aforesaid for all time spent as a member of the said Park Police Division. (P.L.L., 1969, §16-40.) (1961, ch. 290.) CIVILIAN REVIEW BOARD § 16-41. Definitions. (a) In general. In this subheading the following words have the meanings indicated.