Illustrated Book Study: Digital Conversion Requirements of Printed Illustrations Anne R

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Catalogue 61

Grosvenor Prints, 19 Shelton Street, Covent Garden, London WC2H 9JN. Tel: 020 7836 1979. Fax: 020 7379 6695. E-mail: [email protected] www.grosvenorprints.com Dealers in Antique Prints & Books. Catalogue 61. [The Alternative of Williams Burg.] Item 288. A New Method of Macarony Making, as practiced at Boston. Item 289. All items listed are illustrated on our web site: www.grosvenorprints.com Registered in England No. 1305630. Registered Office: 2, Castle Business Village, Station Road, Hampton, Middlesex. TW12 2BX. Rainbrook Ltd. Directors: N.C. Talbot. T.D.M. Rayment. C.E. Ellis. E&OE. VAT No. 217 6907 49.

1. Drawings from Original Pictures of Philip Reinagle, Esq. R.A. No. 2. Mrs Wrightson [subscriber's name in contemporary ink mss]. By an Amateur. [n.d., c.1826.] Imperial folio, original printed wrappers, four lithographic plates on india, stitched. Wear to edges. Four untitled British landscape scenes presented in their original wrapper, apparently the complete second installment of what became a series of 16 plates after Reinagle. We have seen another wrapper with the subscriber's name in the same handwriting, suggesting it was written by the anonymous 'Amateur'.

Boswell and Dr. Johnson. While Cox's name is on these pieces he would have had to depend on skilled craftsmen to produce the elaborate exhibition pieces that can now be seen in the Metropolitan Museum of Art. A spectacular survivor of Cox's Museum is a Peacock that was taken to St. Petersburg in 1781 and is now in the Hermitage. British Library: 000807157. Stock: 40010. £280.

4. The Duke of Bedford's Stables, with the New Tennis-Court & Riding-House at Woburn Abbey. [Anon, c.1750]

Typography, Illustration and Narration in Three Novels by Alasdair Gray

Title Page. Typography, Illustration and Narration in Three Novels by Alasdair Gray: Lanark, 1982, Janine and Poor Things. Craig Linwood, Bachelor of Arts (Honours). School of Humanities, Arts, Education and Law, Griffith University. Submitted in fulfilment of the requirements of the degree of Doctor of Philosophy, February 2017.

Abstract. The impetus of the thesis emerged through an academic interest in how experimental uses of typography and illustration functioned as a method of narration within literature. Subsequent investigations into the use of typography and illustration revealed that, while there is a growing field of literary study examining non-linguistic elements within narratives, there are few studies into typography and illustration and how an author utilises and develops them as a method of narration. In light of this, this thesis examines attempts to expand upon the act of narration through the use of typography and illustration in both experimental and common forms. This is focused through Scottish artist Alasdair Gray and three of his novels: Lanark: A Life in Four Books, 1982, Janine and Poor Things. While Gray’s novels are contemporary, his use of typography and illustration engages in wider print cultures that facilitated experiment into literature involving the manipulation of typography, illustration and the traditions of narrative. Experimentation in literature from 1650 to 1990, be it through illustration, typography or the composition of narrative, often emerged when printing practice and its product were no longer seen as efficient at communicating to modernising audiences. This act often coincided with larger changes within print cultures that affected laws, politics, the means of distribution, views of design and methods of distribution.

"General Print Mint" For

Title page of an early pamphlet in the collection of the Library of Congress proposing a "General Print Mint" for the United States. The author shipped a quantity of his treatise to the Postmaster General at Washington requesting that official to distribute the copies to the Members of Congress.

HISTORY of the BUREAU of ENGRAVING and PRINTING, 1862-1962. TREASURY DEPARTMENT, Washington, D.C. For sale by the Superintendent of Documents, U.S. Government Printing Office, Washington, D.C. 20402. Price $7.00.

CENTENNIAL HISTORY STAFF: MICHAEL L. PLANT, Office of the Controller; ARTHUR BARON, Office of Plant Facilities and Industrial Procurement; LOUISE S. BROWN, Office of Currency and Stamp Manufacturing; JOHN J. DRISCOLL, Internal Audit Staff; MICHAEL J. EVANS, Internal Audit Staff. The Introduction, giving a brief history of the art of engraving and its application in American colonial and early Federal days, was prepared by Robert L. Miller of the Bureau's Designing Staff, Office of Engraving and Plate Manufacturing.

Foreword. THE IDEA of publishing a history of the Bureau of Engraving and Printing to commemorate the centennial anniversary of its establishment was nurtured in the knowledge that a recital of its accomplishments was a story that well deserved the telling. It is not a subject that has been dealt with widely. Much of what has already appeared in print concerning the Bureau is in the nature of guidebook material or relates to its products, especially currency notes and stamps, rather than to the agency itself.

A Brief History of Children's Storybooks

THE PENNSYLVANIA STATE UNIVERSITY, SCHREYER HONORS COLLEGE, SCHOOL OF VISUAL ARTS. AN ORIGINAL STORY WITH RELIEF PRINT ILLUSTRATIONS. MARILYN TURNER MCPHERON. Fall 2010. A thesis submitted in partial fulfillment of the requirements for a baccalaureate degree in Art with honors in Art. Reviewed and approved* by the following: Robin Gibson, Associate Professor of Art, Thesis Supervisor; Jerrold Maddox, Professor of Art, Honors Adviser. *Signatures are on file in the Schreyer Honors College.

ABSTRACT. Children’s literature, in the form of picture and storybooks, introduces a child to one of the most important tools needed to succeed in life: the ability to read. With the availability of affordable books in the 18th century, due to the introduction of new mechanization, individuals had the ability to improve their lives and widen their worlds. In the 19th century, writers of fiction began to specialize in literature for children. In the 20th century, books for children, with beautiful, colorful illustrations, became a common gift for children. The relatively rapid progression from moralistic small pamphlets on cheap paper with crude woodcuts to the world of Berenstain Bears, colorful Golden Books, and the tongue-twisters of Dr. Seuss is an intriguing social change. The story of how a storybook moves from an idea to the bookstore shelf is equally fascinating. Combining the history of children’s literature with how a storybook is created inspired me to write and illustrate my own children’s book, “OH NO, MORE SNOW!”

ACKNOWLEDGEMENTS. The Schreyer Honors College,

PDF Download the Art of the Woodcut: Masterworks from The

THE ART OF THE WOODCUT: MASTERWORKS FROM THE 1920S PDF, EPUB, EBOOK. Malcolm C. Salaman, David A. Berona | 192 pages | 21 Apr 2010 | Dover Publications Inc. | 9780486473598 | English | New York, United States.

The Art of the Woodcut: Masterworks from the 1920s PDF Book. In both cases, the doors to future research were opened, offering me the anticipation of moments of inquiry and enjoyment. Organized by country, the book reviews the work of artists from several European nations, as well as Japan and the United States. Cutting, although so much older than engraving, is a more complicated process. He studied mechanical engineering at the University of Manchester; however, he decided to pursue literary interests and published a book of poems in As the demand for quality illustrations grew, Thomas Bewick, who was trained as an engraver, created the technique of etching in wood, and as a result wood engraving replaced steel engraving. He was the general editor for the annual Fine Prints of the Year from to , which was one of the few directories, although with noted inconsistencies, in which artist, title, and edition size of every etching plate created each year was documented. This invention allowed various engravers to work on individual sections of an illustration, which were pulled together after completion and finished so that the joints of the individual blocks did not appear on the page. Yet there was little contemporary encouragement for those choice productions, and the regular publishers, profiting not at all by the executive example of Ricketts and Shannon, Sturge Moore and Pissarro, or by Morris's co-operative enthusiasm, continued to regard wood-engraving as only a reproductive method which had been entirely replaced by the photographic zinco-processes of modern usage.

The Illustrated Book Cover Illustrations

THE ILLUSTRATED BOOK. COVER ILLUSTRATIONS: A collection of 18 pronouncements by Buddhist sages accompanied by their pictures. n.p., n.d. Manuscript scroll folded into 42 pages, written on leaves of the bodhi tree. Chinese text, beginning with the date wu-shu of Tao kuang [i.e. 1838]. Wooden covers. Picture of Buddhist sage Hsu tung on front cover, accompanied by text of his pronouncement on separate leaf on back cover: "A Buddhist priest asked Buddha, 'How did the Buddha attain the most superior way?' Buddha replied, 'Protect the heart from sins; as one shines a mirror by keeping off dust, one can attain enlightenment.'" Hsu tung.

THE ILLUSTRATED BOOK. An Exhibit: March-May 1991. Compiled by Alice N. Loranth. Cleveland Public Library, Fine Arts and Special Collections Department.

PREFACE. The Illustrated Book exhibit was assembled to present an overview of the history of book illustration for a general audience. The plan and scope of the exhibit were developed within the confines of available exhibit space on the third floor of Main Library. Materials were selected from the holdings of Special Collections, supplemented by a few titles chosen from the collections of Fine Arts. Selection of materials was further restrained by concern for the physical well-being of very brittle or valuable items. Many rare items were omitted from the exhibit in order to safeguard them from the detrimental effects of an extended exhibit period. Book illustration is a cooperation of word and picture. At the beginning, writing itself was pictorial, as words were expressed through pictorial representation.

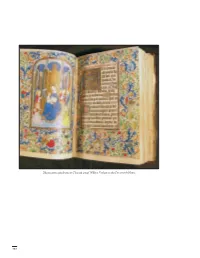

Illumination Attributed to Flemish Artist Willem Vrelant in the Farnsworth Hours

Illumination attributed to Flemish artist Willem Vrelant in the Farnsworth Hours.

Book Arts. Medieval Manuscripts. Georgetown’s largest collection of late medieval and early renaissance documents, the Scheuch Collection, is described in the European History chapter. In addition to that collection, the library possesses nearly a score of early liturgical and theological manuscripts, including some with interesting and sometimes significant miniatures and illumination. Those held prior to 1970 are for the most part listed in Seymour de Ricci’s Census or its supplement, but special note should be made of the volume of spiritual opuscules in Old French (gift of John Gooch) and the altus part of the second set of the musical anthology known as the “Scots Psalter” (1586) by Thomas Wode (or Wood) of St. Andrews, possibly from the library of John Gilmary Shea. Also of note are two quite remarkable fifteenth-century manuscripts: one with texts of Bede, Hugh of St. Victor, and others (gift of Ralph A. Hamilton); the other containing works by Henry of Hesse, St. John Chrysostom, and others (gift of John H. Drury). In recent years the collection has grown with two important additions: a truly first-rate manuscript, the Farnsworth Hours, probably illuminated in Bruges about 1465 by Willem Vrelant (gift of Mrs. Thomas M. Evans), and a previously unrecorded fifteenth-century Flemish manuscript of the Imitatio Christi in a very nearly contemporary binding (gift of the estate of Louise A. Emling). [Illustration caption: Euclid, Elementa geometriae (1482).] The relatively small number of complete manuscripts is supplemented, especially for teaching purposes, by a variety of leaves from individual manuscripts dating from the twelfth to the sixteenth century (in part the gifts of Bishop Michael Portier, Frederick Schneider, Mrs.

Chats on Old Prints

CHATS ON OLD PRINTS. THE LIBRARY OF THE UNIVERSITY OF CALIFORNIA, LOS ANGELES.

BOOKS FOR COLLECTORS. With Frontispieces and many Illustrations. CHATS ON ENGLISH CHINA. By Arthur Hayden. CHATS ON OLD FURNITURE. By Arthur Hayden. CHATS ON OLD PRINTS. (How to collect and value Old Engravings.) By Arthur Hayden. CHATS ON COSTUME. By G. Woolliscroft Rhead. CHATS ON OLD LACE AND NEEDLEWORK. By E. L. Lowes. CHATS ON ORIENTAL CHINA. By J. F. Blacker. CHATS ON OLD MINIATURES. By J. J. Foster, F.S.A. CHATS ON ENGLISH EARTHENWARE. (Companion volume to "Chats on English China.") By Arthur Hayden. CHATS ON AUTOGRAPHS. By A. M. Broadley. CHATS ON PEWTER. By H. J. L. J. Massé, M.A. CHATS ON POSTAGE STAMPS. By Fred. J. Melville. CHATS ON OLD JEWELLERY AND TRINKETS. By MacIver Percival. CHATS ON COTTAGE AND FARMHOUSE FURNITURE. (Companion volume to "Chats on Old Furniture.") By Arthur Hayden. CHATS ON OLD COINS. By Fred. W. Burgess. CHATS ON OLD COPPER AND BRASS. By Fred. W. Burgess. CHATS ON HOUSEHOLD CURIOS. By Fred. W. Burgess. CHATS ON OLD SILVER. By Arthur Hayden. CHATS ON JAPANESE PRINTS. By Arthur Davison Ficke. CHATS ON MILITARY CURIOS. By Stanley C. Johnson. CHATS ON OLD CLOCKS AND WATCHES. By Arthur Hayden. CHATS ON ROYAL COPENHAGEN PORCELAIN. By Arthur Hayden. CHATS ON OLD SHEFFIELD PLATE. (Companion volume to "Chats on Old Silver.") By Arthur Hayden. CHATS ON OLD ENGLISH DRAWINGS. By Randall Davies. CHATS ON WEDGWOOD WARE. By Harry Barnard. BYE PATHS OF CURIO COLLECTING. By Arthur Hayden. With Frontispiece and 72 Full page Illustrations.

Seawright-Dissertation-2017

Bodies of Books: Literary Illustration in Twentieth Century Brazil. The Harvard community has made this article openly available. Citable link: http://nrs.harvard.edu/urn-3:HUL.InstRepos:37945017 Terms of Use: This article was downloaded from Harvard University’s DASH repository, and is made available under the terms and conditions applicable to Other Posted Material, as set forth at http://nrs.harvard.edu/urn-3:HUL.InstRepos:dash.current.terms-of-use#LAA

A dissertation presented by Max Ashton Seawright to The Department of Romance Languages and Literatures in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the subject of Romance Languages and Literatures. Harvard University, Cambridge, Massachusetts. January, 2017. © 2017 Max Ashton Seawright. All rights reserved. Professor Josiah Blackmore.

ABSTRACT. This dissertation explores the nature and role of literary illustrations in twentieth century Brazil, not just in relation to their companion texts, but also in what ways they reflect defining characteristics of Brazilian literature beyond the chronological or theoretical limits of modernism, regionalism, magic realism, or postmodernism. Illustrations in new fiction (that is, writer and artist and editor collaborating on a book to be illustrated in its first or otherwise definitive edition) gained popularity in Brazil just as the form waned from existence in North America and Europe, where the “Golden Age” of book illustration was a nineteenth century phenomenon. Understanding illustrated books is key to approaching Brazil’s artistic production beyond the strictly textual or visual.

“The Art of the Picture Book,” by Mary Erbach (2008)

The Art of the Picture Book. MARY M. ERBACH

PICTURE BOOKS AT AN ART MUSEUM. Once upon a time back in 1964 the Art Institute of Chicago opened an education space called the Junior Museum, with galleries, studios, and a little library of picture books for visitors to enjoy. What foresight the planners had to include a room full of books for children at an art museum. Thus began an era that still prevails almost a half century later. Speed ahead to 2007: a recent study with parents showed that the family library is still a favorite destination. This cozy space is a comfortable gathering spot where families can hang out and read, or where teachers can have some down time with their classes and share a story. It’s a great place for storytelling programs and for hosting book signings by guest illustrators.

PROGRAMS WITH PICTURE BOOKS. Families visit the museum to learn, have fun, and spend time together. Many children visiting the museum have been read to their entire lives and have a book collection at home. They may even be familiar with visits to the public library and have their own card. For our youngest audience (3–5-year-olds), a museum visit might not be a common experience, but it can be an exciting one. Museum educators understand that the workshops and gallery walks need to be engaging and interactive. Beginning with an activity that children are familiar with, such as reading a picture book, establishes a comfort zone for young children who are in a strange big

Profile of Illustration in Children's Literature Books Based on The

Beata Mazepa-Domagała, Poland. Profile of Illustration in Children’s Literature Books Based on the Image Preferences of the Youngest Readers. DOI: 10.15804/tner.2017.49.3.18

Abstract. The presented paper is a result of research conducted on the issue of visual communication as an art supplement and explanation of printed content. The publication begins with a reflection on visual culture, illustrating its polysemic character and referring to the iconic nature of images. Further, the paper describes the art of illustration and the value of visual communication in the form of book illustration. The last part of the paper attempts to present the structure of a well-designed illustration in the form of profiles, constructed on the basis of the picture tendencies that appear in a group of pre-readers.

Keywords: book illustration, visual communication, pre-school child

Introduction. Recent years have brought significant changes in broadly understood culture, which today is undoubtedly of an audiovisual nature. The emerging phenomenon in which visuality dominates over the word confirms that fact in particular. The expansion of visual communication, visual external advertising, picture books, comics, the spread of audiovisual media (electronic sources of experience) and the invasion of homogeneous, often trashy, mass culture, as well as the dominance of adult culture over the culture addressed to children in both the offer and reception, are only some examples where this phenomenon may be observed. This state of affairs makes a person live in a world in which his or her daily existence depends on certain impulses and pressures derived from visual stimuli; being accustomed to the surrounding images, he or she is unaware of their influence.

Graphic Versus Photographic in the Nineteenth-Century Reproduction Trevor Fawcett

GRAPHIC VERSUS PHOTOGRAPHIC IN THE NINETEENTH-CENTURY REPRODUCTION. TREVOR FAWCETT

I. THE REPRODUCTIVE PRINT UP TO 1840

Good prints are no doubt better than bad pictures; or prints, generally speaking, are better than pictures; for we have more prints of good pictures than of bad ones; yet they are for the most part but hints, loose memorandums, outlines in little of what the painter has done. How often, in turning over a number of choice engravings, do we tantalize ourselves by thinking 'what a head that must be', in wondering what colour a piece of drapery is, green or black, in wishing, in vain, to know the exact tone of the sky in a particular corner of the picture! Throw open the folding-doors of a fine Collection and you see all you have desired realised at a blow: the bright originals starting up in their own proper shape, clad with flesh and blood, and teeming with the first conceptions of the painter's mind!

So William Hazlitt, musing on reproductions and going on to console himself with the thought that at least their inadequacy made 'the sight of a fine original picture an event so much the more memorable, and the impression so much the deeper. A visit to a genuine Collection is like going a pilgrimage.' Yet reproductive prints were indispensable. People had to rely on them. The masterpieces of Western painting were scattered through Europe or hidden away in private galleries. Even the assiduous grand tourist saw only a fraction and that relatively briefly. A heavy responsibility was placed on their printed simulacra to represent them justly.