Prototype Eclipse OMR Port Performance Evaluation on Aarch64



Recommended publications

-

Model-Based System Engineering for Powertrain Systems Optimization Sandra Hamze

Model-based System Engineering for Powertrain Systems Optimization Sandra Hamze To cite this version: Sandra Hamze. Model-based System Engineering for Powertrain Systems Optimization. Automatic Control Engineering. Université Grenoble Alpes, 2019. English. NNT : 2019GREAT055. tel- 02524432 HAL Id: tel-02524432 https://tel.archives-ouvertes.fr/tel-02524432 Submitted on 30 Mar 2020 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. THÈSE pour obtenir le grade de DOCTEUR DE L’UNIVERSITÉ DE GRENOBLE ALPES Spécialité : Automatique-Productique Arrêté ministériel : 7 août 2006 Présentée par Sandra HAMZE Thèse dirigée par Emmanuel WITRANT et codirigée par Delphine BRESCH-PIETRI et Vincent TALON préparée au sein du laboratoire Grenoble Images Parole Signal Automatique (GIPSA-lab) dans l’école doctorale Electronique, Electrotechnique, Automatique, Traitement du Signal (EEATS) en collaboration avec Renault s.a.s Optimisation Multi-objectifs Inter-systèmes des Groupes Motopropulseurs Model-based System Engineering for Powertrain Systems Optimization 2 Thèse soutenue -

Construction of an Active Rectifier for a Transverse-Flux Wave Power Generator

EXAMENSARBETE INOM ELEKTROTEKNIK, AVANCERAD NIVÅ, 30 HP STOCKHOLM, SVERIGE 2017 Construction of an Active Rectifier for a Transverse-Flux Wave Power Generator OLOF BRANDT LUNDQVIST KTH SKOLAN FÖR ELEKTRO- OCH SYSTEMTEKNIK 1 Sammanfattning Vågkraft är en energikälla som skulle kunna göra en avgörande skillnad i om- ställningen mot en hållbar energisektor. Tillväxten för vågkraft har dock inte varit lika snabb som tillväxten för andra förnybara energislag, såsom vindkraft och solkraft. Vissa tekniska hinder kvarstår innan ett stort genombrott för våg- kraft kan bli möjligt. Ett hinder fram tills nu har varit de låga spänningarna och de resulterande höga effektförlusterna i många vågkraftverk. En ny typ av våg- kraftsgenerator, som har tagits fram av Anders Hagnestål vid KTH i Stockholm, avser att lösa dessa problem. I det här examensarbetet behandlas det effekte- lektroniska omvandlingssystemet för Anders Hagneståls generator. Det beskriver planerings- och konstruktionsprocessen för en enfasig AC/DC-omvandlare, som så småningom skall bli en del av det större omvandlingssystemet för generatorn. Ett kontrollsystem för omvandlaren, baserat på hystereskontroll för strömmen, planeras och sätts ihop. Den färdiga enfasomvandlaren visar goda resultat under drift som växelriktare. Dock kvarstår visst konstruktionsarbete och viss kalibre- ring av det digitala kontrollsystemet innan omvandlaren kan användas för sin uppgift i effektomvandlingen hos vågkraftverket. 2 2 Abstract Wave power is an energy source which could make a decisive difference in the transition towards a more sustainable energy sector. The growth of wave power production has however not been as rapid as the growth in other renewable energy fields, such as wind power and solar power. Some technical obstacles remain before a major breakthrough for wave power can be expected. -

The Governance of Galileo

The Governance of Galileo Report 62 January 2017 Amiel Sitruk Serge Plattard Short title: ESPI Report 62 ISSN: 2218-0931 (print), 2076-6688 (online) Published in January 2017 Editor and publisher: European Space Policy Institute, ESPI Schwarzenbergplatz 6 • 1030 Vienna • Austria http://www.espi.or.at Tel. +43 1 7181118-0; Fax -99 Rights reserved – No part of this report may be reproduced or transmitted in any form or for any purpose without permission from ESPI. Citations and extracts to be published by other means are subject to mentioning “Source: ESPI Report 62; January 2017. All rights reserved” and sample transmission to ESPI before publishing. ESPI is not responsible for any losses, injury or damage caused to any person or property (including under contract, by negligence, product liability or otherwise) whether they may be direct or indirect, special, incidental or consequential, resulting from the information contained in this publication. Design: Panthera.cc ESPI Report 62 2 January 2017 The Governance of Galileo Table of Contents Executive Summary 5 1. Introduction 7 1.1 Purposes, Principle and Current State of Global Navigation Satellite Systems (GNSS) 7 1.2 Description of Galileo 7 1.3 A Brief History of Galileo and Its Governance 9 1.4 Current State and Next Steps 10 2. 
The Challenges of Galileo Governance 12 2.1 Political Challenges 12 2.1.1 Giving to the EU and Its Member States an Effective Instrument of Sovereignty 12 2.1.2 Providing Effective Interaction between the European Stakeholders 12 2.1.3 Dealing with Security Issues Related to Galileo 13 2.1.4 Ensuring a Strong Presence on the International Scene 13 2.2 Economic Challenges 14 2.2.1 Setting up a Cost-Effective Organization 14 2.2.2 Fostering the Development of a Downstream Market Associated with Galileo 14 2.2.3 Fostering Indirect Benefits 15 2.3 Technical Challenges 15 2.3.1 Successfully Exploiting the System 15 2.3.2 Ensuring the Evolution of the System 16 2.3.3 Technically Enabling “GNSS Diplomacy” 16 3. -

A Viga T Ing R T Ificia L N Te Ll Igence

July 24, 2018 Semiconductor Get real with artificial intelligence (AI) "Seriously, do you think you could actually purchase one of my kind in Walmart, say in the next 10 years?" NTELLIGENCE I "You do?! You'd better read this report from RTIFICIAL RTIFICIAL cover to cover, and I assure you Peter is not being funny at all this time." A ■ Fantasies remain in Star Trek. Let’s talk about practical AI technologies. ■ There are practical limitations in using today’s technology to realise AI elegantly. ■ AI is to be enabled by a collaborative ecosystem, likely dominated by “gorillas”. ■ An explosion of innovations in AI is happening to enhance user experience. ■ Rewards will go to the problem solvers that have invested in R&D ahead of others. Analyst(s) AVIGATING AVIGATING Peter CHAN T (82) 2 6730 6128 E [email protected] N IMPORTANT DISCLOSURES, INCLUDING ANY REQUIRED RESEARCH CERTIFICATIONS, ARE PROVIDED AT THE Powered by END OF THIS REPORT. IF THIS REPORT IS DISTRIBUTED IN THE UNITED STATES IT IS DISTRIBUTED BY CIMB the EFA SECURITIES (USA), INC. AND IS CONSIDERED THIRD-PARTY AFFILIATED RESEARCH. Platform Navigating Artificial Intelligence Technology - Semiconductor│July 24, 2018 TABLE OF CONTENTS KEY CHARTS .......................................................................................................................... 4 Executive Summary .................................................................................................................. 5 I. From human to machine .......................................................................................................10 -

[email protected] University of Pittsburgh Web : Pittsburgh, PA 15260 Office : 412.624.8924, Fax : 412.624.8854

PANOS K. CHRYSANTHIS Department of Computer Science E-Mail : [email protected] University of Pittsburgh Web : http://panos.cs.pitt.edu Pittsburgh, PA 15260 Office : 412.624.8924, Fax : 412.624.8854 RESEARCH INTERESTS Management of Data (Big Data, Databases, Web Databases, Data Streams & Sensor networks), Cloud, Distributed & Mobile Computing, Workflow Management, Operating & Real-time Systems EDUCATION Ph.D. in Computer and Information Science, University of Massachusetts, Amherst, August 1991 M.S. in Computer and Information Science, University of Massachusetts, Amherst, May 1986 B.S. in Physics (Computer Science concentration), University of Athens, Greece, December 1982 APPOINTMENTS Professor, Dept. of Computer Science (Joint-Secondary appointment in Electrical Engineering and the Telcomm Program), University of Pittsburgh (Jan. 2004 to present). Adjunct Professor, Dept. of Computer Science, Carnegie-Mellon University (Aug. 2000 to present). Faculty member, Computational Biology PhD Program, University of Pittsburgh and Carnegie-Mellon University (Sept. 2004 to present). Adjunct Professor, Dept. of Computer Science, University of Cyprus, Cyprus (Jan. 2008 to Dec. 2016, Jan. 2018 to present). Visiting Professor, Laboratory of Information, Networking and Communication Sciences (LINCS), Paris, France (Feb. 2019 to Mar. 2019) Visiting Professor, Dept. of Computer Science, University of Cyprus, Cyprus (Aug. 2006 to June 2007, May 2016, June 2018 June 2019). Visiting Professor, Dept. of Computer Science, Carnegie Mellon University, Pittsburgh (Aug. 1999 to Aug. 2000; Dec. 2014 to Aug. 2015) Faculty Member, RODS Laboratory, Center for Biomedical Informatics, University of Pittsburgh (May 2002 to Aug. 2006). Associate Professor, Dept. of Computer Science (Joint-Secondary appointment in Electrical Engineering), University of Pittsburgh (Sept. 1997 to Dec. -

Agent: STRAUSS, Ryan N. Et Al.; 121 1 SW 5Th Avenue, H04W 72/04 (2009.01) H04W4/40 (2018.01) Suite 1500-1900, Portland, Oregon 97204 (US)

( (51) International Patent Classification: (74) Agent: STRAUSS, Ryan N. et al.; 121 1 SW 5th Avenue, H04W 72/04 (2009.01) H04W4/40 (2018.01) Suite 1500-1900, Portland, Oregon 97204 (US). (21) International Application Number: (81) Designated States (unless otherwise indicated, for every PCT/US20 19/035597 kind of national protection av ailable) . AE, AG, AL, AM, AO, AT, AU, AZ, BA, BB, BG, BH, BN, BR, BW, BY, BZ, (22) International Filing Date: CA, CH, CL, CN, CO, CR, CU, CZ, DE, DJ, DK, DM, DO, 05 June 2019 (05.06.2019) DZ, EC, EE, EG, ES, FI, GB, GD, GE, GH, GM, GT, HN, (25) Filing Language: English HR, HU, ID, IL, IN, IR, IS, JO, JP, KE, KG, KH, KN, KP, KR, KW, KZ, LA, LC, LK, LR, LS, LU, LY, MA, MD, ME, (26) Publication Language: English MG, MK, MN, MW, MX, MY, MZ, NA, NG, NI, NO, NZ, (30) Priority Data: OM, PA, PE, PG, PH, PL, PT, QA, RO, RS, RU, RW, SA, 62/682,732 08 June 2018 (08.06.2018) US SC, SD, SE, SG, SK, SL, SM, ST, SV, SY, TH, TJ, TM, TN, TR, TT, TZ, UA, UG, US, UZ, VC, VN, ZA, ZM, ZW. (71) Applicant: INTEL CORPORATION [US/US]; 2200 Mission College Boulevard, Santa Clara, California 95054 (84) Designated States (unless otherwise indicated, for every (US). kind of regional protection available) . ARIPO (BW, GH, GM, KE, LR, LS, MW, MZ, NA, RW, SD, SL, ST, SZ, TZ, (72) Inventors: MUECK, Markus Dominik; Jaegerstrasse 4b, UG, ZM, ZW), Eurasian (AM, AZ, BY, KG, KZ, RU, TJ, 82008 Unterhaching (DE). -

610456 Confidentiality

D7.9 Review of Industry trends – Competitive analysis Project No: 610456 D7.9 Review of Industry trends – Competitive analysis February 28th, 2017 Abstract: This deliverable provides an update on the competitive analysis performed by the EUROSERVER consortium. As of the time of writing, it is clear that it has not been easy to commercialise ARM-based micro-servers, and many large companies have been unable to bring a viable solution to market. The remaining providers that already have or intend to launch server-ready products are Qualcomm and Cavium. The decision by Fujitsu and RIKEN to base the Post-K supercomputer on the ARM architecture is a positive development. Document Manager: John Thomson (Editor) Authors AFFILIATION John Thomson OnApp Ltd. (ONAPP) Paul Carpenter Barcelona Supercomputing Center (BSC) Manolis Katevenis, Iakovos Mavroidis FORTH Denis Dutoit CEA Internal Reviewers Per Stenstrom CHALMERS Isabelle Dor CEA Document Id N°: Version: 0.7 Date: 28/2/2017 Filename: Euroserver_D7.9_v0.7.docx Confidentiality This document is public and was produced by EUROSERVER contractors. Some of the graphics and information belongs to third-parties and this information is highlighted where appropriate. The commercial use of any information contained in this document may require a license from the proprietor of that information. Page 1 of 23 This document is Public, and was produced under the EUROSERVER project (EC contract 610456). D7.9 Review of Industry trends – Competitive analysis The EUROSERVER Consortium consists of the following -

An Overview of the Netware Operating System

An Overview of the NetWare Operating System Drew Major Greg Minshall Kyle Powell Novell, Inc. Abstract The NetWare operating system is designed specifically to provide service to clients over a computer network. This design has resulted in a system that differs in several respects from more general-purpose operating systems. In addition to highlighting the design decisions that have led to these differences, this paper provides an overview of the NetWare operating system, with a detailed description of its kernel and its software-based approach to fault tolerance. 1. Introduction The NetWare operating system (NetWare OS) was originally designed in 1982-83 and has had a number of major changes over the intervening ten years, including converting the system from a Motorola 68000-based system to one based on the Intel 80x86 architecture. The most recent re-write of the NetWare OS, which occurred four years ago, resulted in an “open” system, in the sense of one in which independently developed programs could run. Major enhancements have occurred over the past two years, including the addition of an X.500-like directory system for the identification, location, and authentication of users and services. The philosophy has been to start as with as simple a design as possible and try to make it simpler as we gain experience and understand the problems better. The NetWare OS provides a reasonably complete runtime environment for programs ranging from multiprotocol routers to file servers to database servers to utility programs, and so forth. Because of the design tradeoffs made in the NetWare OS and the constraints those tradeoffs impose on the structure of programs developed to run on top of it, the NetWare OS is not suited to all applications. -

D2.3 Design Guidelines for the Rapid-Api Industrial Design of Multimodal Interactive Expressive Technology

REALTIME ADAPTIVE PROTOTYPING FOR D2.3 DESIGN GUIDELINES FOR THE RAPID-API INDUSTRIAL DESIGN OF MULTIMODAL INTERACTIVE EXPRESSIVE TECHNOLOGY D2.3 DESIGN GUIDELINES FOR THE RAPID-API Grant Agreement nr 644862 Project title Realtime Adaptive Prototyping for Industrial Design of Multimodal Interactive eXpressive technology Project acronym RAPID-MIX Start date of project (dur.) Feb 1st, 2015 (3 years) Document reference RAPIDMIX-WD-WP2-UPF-D2.3.docx Report availability PU - Public Document due Date June 30th, 2016 Actual date of delivery July 31st, 2016 Leader UPF Reply to Sebastian Mealla C. ([email protected]) Additional main contributors Panos Papiots (UPF) (author’s name / partner acr.) Carles F. Julia (UPF) Frederic Bevilacqua (IRCAM) Joseph Larralde (IRCAM) Norbert Schnell (IRCAM) Mick Grierson (GS) Rebecca Fiebrink (GS) Francisco Bernardo (GS) Michael Zbyszyński (GS) xavier boissarie (ORBE) Hugo Silva (PLUX) Document status Final version (reviewed by GS) This project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement N° 644862 D2.3 DESIGN GUIDELINES FOR THE RAPID-API Page 1 of 32- MIX_WD_WP1_DeliverableTemplate_20120314_MTG-UPF Page 1 of 32 EXECUTIVE SUMMARY This deliverable updates the user-centred design specifications defined in D2.2 to include guidelines for the RAPID-API development, according to the requirements of research and SME partners, and end-users beyond the consortium. D2.3 DESIGN GUIDELINES FOR THE RAPID-API Page 2 of 32 TABLE OF CONTENTS 1 INTRODUCTION -

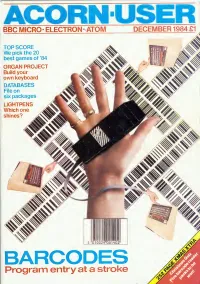

Acorn User Welcomes Submissions Irom Readers

ACORN BBC MICRO- ELECTRON- ATOM DECEMBER 1984 £1 TOP SCORE We pick the 20 best games of '84 ORGAN PROJECT Build your own keyboard DATABASES File on six packages LIGHTPENS Which one shines? Program entry at a stroke ' MUSIC MICRO PLEASE!! Jj V L S ECHO I is a high quality 3 octave keyboard of 37 full sized keys operating electroni- cally through gold plated contacts. The keyboard which is directly connected to the user port of the computer does not require an independent power supply unit. The ECHOSOFT Programme "Organ Master" written for either the BBC Model B' or the Commodore 64 supplied with the keyboard allows these computers to be used as real time synth- esizers with full control of the sound envelopes. The pitch and duration of the sound envelope can be changed whilst playing, and the programme allows the user to create and allocate his own sounds to four pre-defined keys. Additional programmes in the ECHOSOFT Series are in the course of preparation and will be released shortly. Other products in the range available from your LVL Dealer are our: ECHOKIT (£4.95)" External Speaker Adaptor Kit, allows your Commodore or BBC Micro- computer to have an external sound output socket allowing the ECHOSOUND Speaker amplifier to be connected. (£49.95)' - ECHOSOUND A high quality speaker amplifier with a 6 dual cone speaker and a full 6 watt output will fill your room with sound. The sound frequency control allows the tone of the sound output to be changed. Both of the above have been specifically designed to operate with the ECHO Series keyboard. -

Acorn ABC 210/Cambridge Workstation

ACORN COMPUTERS LTD. ACW 443 SERVICE MANUAL 0420,001 Issue 1 January 1987 ACW SERVICE MANUAL Title: ACW SERVICE MANUAL Reference: 0420,001 Issue: 1 Replaces: 0.56 Applicability: Product Support Distribution: Authorised Service Agents Status: for publication Author: C.Watters, J.Wilkins and Others Date: 7 January 1987 Published by: Acorn Computers Ltd, Fulbourn Road, Cherry Hinton, Cambridge, CB1 4JN, England Within this publication the term 'BBC' is used as an abbreviation for 'British Broadcasting Corporation'. Copyright ACORN Computers Limited 1985 Neither the whole or any part of the information contained in, or the product described in, this manual may be adapted or reproduced in any material form except with the prior written approval of ACORN Computers Limited ( ACORN Computers). The product described in this manual and products for use with it, are subject to continuous development and improvement. All information of a technical nature and particulars of the product and its use (including the information and particulars in this manual) are given by ACORN Computers in good faith. However, it is acknowledged that there may be errors or omissions in this manual. A list of details of any amendments or revisions to this manual can be obtained upon request from ACORN Computers Technical Enquiries. ACORN Computers welcome comments and suggestions relating to the product and this manual. All correspondence should be addressed to:- Technical Enquiries ACORN Computers Limited Newmarket Road Cambridge CB5 8PD All maintenance and service on the product must be carried out by ACORN Computers' authorised service agents. ACORN Computers can accept no liability whatsoever for any loss or damage caused by service or maintenance by unauthorised personnel. -

United States District Court Eastern District of Texas Marshall Division

Case 2:19-cv-00056 Document 1 Filed 02/14/19 Page 1 of 19 PageID #: 1 UNITED STATES DISTRICT COURT EASTERN DISTRICT OF TEXAS MARSHALL DIVISION KIPB LLC, Plaintiff, v. Case No. 2:19-cv-00056 SAMSUNG ELECTRONICS CO., LTD.; SAMSUNG ELECTRONICS AMERICA, INC.; JURY TRIAL DEMANDED SAMSUNG SEMICONDUCTOR, INC.; SAMSUNG AUSTIN SEMICONDUCTOR, LLC; AND QUALCOMM GLOBAL TRADING PTE. LTD., Defendants. COMPLAINT FOR PATENT INFRINGEMENT Plaintiff KIPB LLC, formerly known as KAIST IP US LLC (“KAIST IP US”), hereby alleges infringement of United States Patent No. 6,885,055 (the “ʼ055 Patent”) against Defendants Samsung Electronics Co., Ltd. (“SEC”), Samsung Electronics America, Inc. (“SEA”), Samsung Semiconductor, Inc. (“SSI”), and Samsung Austin Semiconductor LLC (“SAS”) (collectively, “Samsung”), and Qualcomm Global Trading Pte. Ltd. (“Qualcomm”), as follows: THE PARTIES 1. Plaintiff KAIST IP US is a corporation organized and existing under the laws of the State of Texas, having a principal place of business at 2591 Dallas Parkway, Frisco, Texas 75034. 2. Defendant SEC is a corporation organized and existing under the laws of the Republic of Korea, and located at 129 Samsung-ro, Yeongtong-gu, Suwon-si, Gyeonggi-do, 1 30379890 Case 2:19-cv-00056 Document 1 Filed 02/14/19 Page 2 of 19 PageID #: 2 Republic of Korea. 3. Defendant SEA is a corporation organized and existing under the laws of the state of New York, with corporate offices in the Eastern District of Texas at 1301 E. Lookout Drive, Richardson, Texas 75082, and 2800 Technology Drive, Suite 200, Plano, Texas 75074. Defendant SEA may be served with process through its registered agent CT Corporation System, 1999 Bryan St., Ste.