Representing Numeric Data in 32 Bits While Preserving 64-Bit Precision

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

A Polymorphic Type System for Extensible Records and Variants

Benedict R. Gaster and Mark P. Jones. Technical report NOTTCS-TR-96-3, November 1996. Department of Computer Science, University of Nottingham, University Park, Nottingham NG7 2RD, England.

Abstract: Records and variants provide flexible ways to construct datatypes, but the restrictions imposed by practical type systems can prevent them from being used in flexible ways. These limitations are often the result of concerns about efficiency or type inference, or of the difficulty in providing accurate types for key operations. This paper describes a new type system that remedies these problems: it supports extensible records and variants, with a full complement of polymorphic operations on each; and it offers an effective type inference algorithm and a simple compilation method.

From the introduction: … board, and another indicating a mouse click at a particular point on the screen: Event = Char + Point. These definitions are adequate, but they are not particularly easy to work with in practice. For example, it is easy to confuse datatype components when they are accessed by their position within a product or sum, and programs written in this way can be hard to maintain. To avoid these problems, many programming languages allow the components of products, and the alternatives of sums, to be identified using names drawn

Virtual Memory

Chapter 4: Virtual Memory. Linux processes execute in a virtual environment that makes it appear as if each process had the entire address space of the CPU available to itself. This virtual address space extends from address 0 all the way to the maximum address. On a 32-bit platform, such as IA-32, the maximum address is 2^32 − 1 or 0xffffffff. On a 64-bit platform, such as IA-64, this is 2^64 − 1 or 0xffffffffffffffff. While it is obviously convenient for a process to be able to access such a huge address space, there are really three distinct, but equally important, reasons for using virtual memory.

1. Resource virtualization. On a system with virtual memory, a process does not have to concern itself with the details of how much physical memory is available or which physical memory locations are already in use by some other process. In other words, virtual memory takes a limited physical resource (physical memory) and turns it into an infinite, or at least an abundant, resource (virtual memory).
2. Information isolation. Because each process runs in its own address space, it is not possible for one process to read data that belongs to another process. This improves security because it reduces the risk of one process being able to spy on another process and, e.g., steal a password.
3. Fault isolation. Processes with their own virtual address spaces cannot overwrite each other’s memory. This greatly reduces the risk of a failure in one process triggering a failure in another process. That is, when a process crashes, the problem is generally limited to that process alone and does not cause the entire machine to go down.
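The maximum addresses quoted in the excerpt above follow directly from the address width. A minimal sketch of that arithmetic is shown below; the function name `max_address` is hypothetical and introduced only for illustration.

```python
def max_address(bits: int) -> int:
    """Largest address in a flat address space that is `bits` wide (2**bits - 1)."""
    return (1 << bits) - 1


# Print the maximum address for the two widths named in the excerpt.
for bits in (32, 64):
    print(f"{bits}-bit: {max_address(bits):#x}")
```

For 32 bits this gives 0xffffffff, and for 64 bits 0xffffffffffffffff, matching the values in the text.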

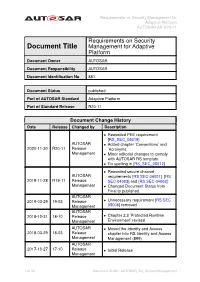

Requirements on Security Management for Adaptive Platform AUTOSAR AP R20-11

| Field | Value |
| --- | --- |
| Document Title | Requirements on Security Management for Adaptive Platform |
| Document Owner | AUTOSAR |
| Document Responsibility | AUTOSAR |
| Document Identification No | 881 |
| Document Status | published |
| Part of AUTOSAR Standard | Adaptive Platform |
| Part of Standard Release | R20-11 |

Document Change History

| Date | Release | Changed by | Description |
| --- | --- | --- | --- |
| 2020-11-30 | R20-11 | AUTOSAR Release Management | Reworded PEE requirement [RS_SEC_05019]; added chapter ’Conventions’ and ’Acronyms’; minor editorial changes to comply with AUTOSAR RS template |
| 2019-11-28 | R19-11 | AUTOSAR Release Management | Fix spelling in [RS_SEC_05012]; reworded secure channel requirements [RS_SEC_04001], [RS_SEC_04003] and [RS_SEC_04003]; changed Document Status from Final to published |
| 2019-03-29 | 19-03 | AUTOSAR Release Management | Unnecessary requirement [RS_SEC_05006] removed |
| 2018-10-31 | 18-10 | AUTOSAR Release Management | Chapter 2.3 ’Protected Runtime Environment’ revised |
| 2018-03-29 | 18-03 | AUTOSAR Release Management | Moved the Identity and Access chapter into RS Identity and Access Management (899) |
| 2017-10-27 | 17-10 | AUTOSAR Release Management | Initial Release |

Document ID 881: AUTOSAR_RS_SecurityManagement

Disclaimer: This work (specification and/or software implementation) and the material contained in it, as released by AUTOSAR, is for the purpose of information only. AUTOSAR and the companies that have contributed to it shall not be liable for any use of the work. The material contained in this work is protected by copyright and other types of intellectual property rights. The commercial exploitation of the material contained in this work requires a license to such intellectual property rights.

Generic Programming

July 21, 1998. A Dagstuhl Seminar on the topic of Generic Programming was held April 27–May 1, 1998, with forty-seven participants from ten countries. During the meeting there were thirty-seven lectures, a panel session, and several problem sessions. The outcomes of the meeting include:

• A collection of abstracts of the lectures, made publicly available via this booklet and a web site at http://www-ca.informatik.uni-tuebingen.de/dagstuhl/gpdag.html.
• Plans for a proceedings volume of papers submitted after the seminar that present (possibly extended) discussions of the topics covered in the lectures, problem sessions, and the panel session.
• A list of generic programming projects and open problems, which will be maintained publicly on the World Wide Web at http://www-ca.informatik.uni-tuebingen.de/people/musser/gp/pop/index.html http://www.cs.rpi.edu/~musser/gp/pop/index.html.

Contents

1 Motivation
2 Standards Panel
3 Lectures
3.1 Foundations and Methodology Comparisons
• Fundamentals of Generic Programming (Jim Dehnert and Alex Stepanov)
• Automatic Program Specialization by Partial Evaluation (Robert Glück)
• Evaluating Generic Programming in Practice (Mehdi Jazayeri)
• Polytypic Programming (Johan Jeuring)
• Recasting Algorithms As Objects: An Alternative to Iterators (Murali Sitaraman)
• Using Genericity to Improve OO Designs (Karsten Weihe)
• Inheritance, Genericity, and Class Hierarchies (Wolf Zimmermann)
3.2 Programming Methodology
• Hierarchical Iterators and Algorithms (Matt Austern)
• Generic Programming in C++: Matrix Case Study (Krzysztof Czarnecki)
• Generative Programming: Beyond Generic Programming (Ulrich Eisenecker)
• Generic Programming Using Adaptive and Aspect-Oriented Programming

Virtual Memory - Paging

Johan Montelius, KTH, 2020.

The process: [Figure: memory layout for a 32-bit Linux process, showing code (.text), data, heap, stack, and kernel, with the addresses 0x00000000, 0xC0000000, and 0xffffffff marked.]

Segments, a possible solution: [Figure: processes in virtual space; address translation by MMU (base and bounds); physical memory.]

One problem: External fragmentation: free areas of free space that are hard to utilize. Solution: allocate larger segments ... internal fragmentation.

Another problem: We’re reserving physical memory that is not used. [Figure: virtual space with used code region; physical memory not used?]

Let’s try again: It’s easier to handle fixed-size memory blocks. Can we map a process’s virtual space to a set of equal-size blocks? An address is interpreted as a virtual page number (VPN) and an offset.

Remember the segmented MMU: [Figure: the MMU takes a virtual address as index and offset, looks up the segment table, checks that the offset is within bounds (exception if not), and adds to form the physical address.]

The paging MMU: [Figure: the MMU takes a virtual address as VPN and offset, looks up the page table, checks that the page is available (exception if not), and forms the physical address.]

The MMU: [Figure: virtual address, then Segmentation (exception unless within bounds), linear address, then Paging (exception unless page available), physical address.]

A note on the x86 architecture: The x86-32 architecture supports both segmentation and paging. A virtual address is translated to a linear address using a segmentation table. The linear address is then translated to a physical address by paging. Linux and Windows do not use segmentation to separate code, data, or stack. The x86-64 (the 64-bit version of the x86 architecture) has dropped many features for segmentation.
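The idea in the slides above, that an address is interpreted as a virtual page number (VPN) and an offset, can be sketched as follows. This is a toy model under stated assumptions: the 4 KiB page size, the dictionary used as a page table, and the names `split` and `translate` are all hypothetical and do not come from the slides.

```python
PAGE_SIZE = 4096                           # assumed page size; the slides do not state one
OFFSET_BITS = PAGE_SIZE.bit_length() - 1   # 12 offset bits for 4 KiB pages


def split(vaddr: int) -> tuple[int, int]:
    """Interpret a virtual address as (virtual page number, offset)."""
    return vaddr >> OFFSET_BITS, vaddr & (PAGE_SIZE - 1)


def translate(vaddr: int, page_table: dict[int, int]) -> int:
    """Map a virtual address to a physical one using a VPN -> frame number table."""
    vpn, offset = split(vaddr)
    if vpn not in page_table:
        # Stands in for the "exception" path when the page is not available.
        raise LookupError(f"page fault: VPN {vpn:#x} is not mapped")
    return page_table[vpn] * PAGE_SIZE + offset


# Hypothetical mapping: virtual page 0x12 lives in physical frame 0x7.
print(hex(translate(0x12345, {0x12: 0x7})))
```

Because every page has the same size, the table only needs one entry per page and no bounds check on the offset, which is the contrast with the segmented MMU drawn in the slides.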

Data Types and Variables

Visual Basic 2005: The Complete Reference / Petrusha / 226033-5 / Chapter 2

Chapter 2: Data Types and Variables. This chapter will begin by examining the intrinsic data types supported by Visual Basic and relating them to their corresponding types available in the .NET Framework’s Common Type System. It will then examine the ways in which variables are declared in Visual Basic and discuss variable scope, visibility, and lifetime. The chapter will conclude with a discussion of boxing and unboxing (that is, of converting between value types and reference types).

Visual Basic Data Types. At the center of any development or runtime environment, including Visual Basic and the .NET Common Language Runtime (CLR), is a type system. An individual type consists of the following two items:

• A set of values.
• A set of rules to convert every value not in the type into a value in the type. (For these rules, see Appendix F.) Of course, every value of another type cannot always be converted to a value of the type; one of the more common rules in this case is to throw an InvalidCastException, indicating that conversion is not possible.

Scalar or primitive types are types that contain a single value. Visual Basic 2005 supports two basic kinds of scalar or primitive data types: structured data types and reference data types. All data types are inherited from either of two basic types in the .NET Framework Class Library. Reference types are derived from System.Object. Structured data types are derived from the System.ValueType class, which in turn is derived from the System.Object class.

Virtual Memory in X86

Fall 2017 :: CSE 306. Virtual Memory in x86. Nima Honarmand.

x86 Processor Modes
• Real mode – walks and talks like a really old x86 chip
  • State at boot
  • 20-bit address space, direct physical memory access
  • 1 MB of usable memory
  • No paging
  • No user mode; processor has only one protection level
• Protected mode – Standard 32-bit x86 mode
  • Combination of segmentation and paging
  • Privilege levels (separate user and kernel)
  • 32-bit virtual address
  • 32-bit physical address
  • 36-bit if Physical Address Extension (PAE) feature enabled
• Long mode – 64-bit mode (aka amd64, x86_64, etc.)
  • Very similar to 32-bit mode (protected mode), but bigger address space
  • 48-bit virtual address space
  • 52-bit physical address space
  • Restricted segmentation use
• Even more obscure modes we won’t discuss today

xv6 uses protected mode w/o PAE (i.e., 32-bit virtual and physical addresses).

Virt. & Phys. Addr. Spaces in x86
• Both RAM and hardware devices (disk, NIC, etc.) connected to system bus
• Mapped to different parts of the physical address space by the BIOS
• You can talk to a device by performing read/write operations on its physical addresses
• Devices are free to interpret reads/writes in any way they want (driver knows)

[Figure: the processor core sends a virtual address to the MMU, which sends a physical address to the cache and on to the system interconnect (bus), which connects DRAM (memory), network card, …, disk. Legend: all addrs virtual / all addrs physical.]

Virt-to-Phys Translation in x86
[Figure: 0xdeadbeef (virtual address), then Segmentation, 0x0eadbeef (linear address), then Paging, 0x6eadbeef (physical address). Protected/Long mode only.]
• Segmentation cannot be disabled!
• But can be made a no-op (a.k.a.
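The address widths listed for each x86 mode in the slides above translate into address-space sizes by simple powers of two. The sketch below works through that arithmetic; the helper names and the `WIDTHS` table are hypothetical, and only the bit widths themselves are taken from the slides.

```python
def addressable_bytes(bits: int) -> int:
    """Number of distinct byte addresses in an address space `bits` wide."""
    return 1 << bits


def human(n: int) -> str:
    """Format a power-of-two byte count using binary units."""
    for unit in ("B", "KiB", "MiB", "GiB", "TiB", "PiB"):
        if n < 1024:
            return f"{n} {unit}"
        n //= 1024
    return f"{n} EiB"


# Bit widths as given in the slides; the labels are informal.
WIDTHS = {
    "real mode": 20,
    "protected mode": 32,
    "protected mode, physical with PAE": 36,
    "long mode, virtual": 48,
    "long mode, physical": 52,
}

for label, bits in WIDTHS.items():
    print(f"{label}: {bits} bits -> {human(addressable_bytes(bits))}")
```

The 20-bit case reproduces the 1 MB figure quoted for real mode; the other sizes follow the same way.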

Static Typing Where Possible, Dynamic Typing When Needed: the End of the Cold War Between Programming Languages

Intended for submission to the Revival of Dynamic Languages. Erik Meijer and Peter Drayton, Microsoft Corporation.

Abstract: This paper argues that we should seek the golden middle way between dynamically and statically typed languages.

1 Introduction

<NOTE to="reader"> Please note that this paper is still very much work in progress and as such the presentation is unpolished and possibly incoherent. Obviously many citations to related and relevant work are missing. We did however do our best to make it provocative. </NOTE>

Advocates of static typing argue that the advantages of static typing include earlier detection of programming mistakes (e.g. preventing adding an integer to a boolean), better documentation in the form of type signatures (e.g. …

Advocates of dynamically typed languages argue that static typing is too rigid, and that the softness of dynamically typed languages makes them ideally suited for prototyping systems with changing or unknown requirements, or that interact with other systems that change unpredictably (data and application integration). Of course, dynamically typed languages are indispensable for dealing with truly dynamic program behavior such as method interception, dynamic loading, mobile code, runtime reflection, etc.

In the mother of all papers on scripting [16], John Ousterhout argues that statically typed systems programming languages make code less reusable, more verbose, not more safe, and less expressive than dynamically typed scripting languages. This argument is parroted literally by many proponents of dynamically typed scripting languages. We argue that this is a fallacy and falls into the same category as arguing that the essence of

Virtual Memory

CSE 410: Systems Programming. Virtual Memory. Ethan Blanton, Department of Computer Science and Engineering, University at Buffalo. © 2018 Ethan Blanton / CSE 410: Systems Programming.

Outline: Introduction, Address Spaces, Paging, Summary, References.

Virtual Memory: Virtual memory is a mechanism by which a system divorces the address space in programs from the physical layout of memory. Virtual addresses are locations in program address space. Physical addresses are locations in actual hardware RAM. With virtual memory, the two need not be equal.

Process Layout: As previously discussed:
• Every process has unmapped memory near NULL
• Processes may have access to the entire address space
• Each process is denied access to the memory used by other processes
Some of these statements seem contradictory. Virtual memory is the mechanism by which this is accomplished. Every address in a process’s address space is a virtual address.

Physical Layout: The physical layout of hardware RAM may vary significantly from machine to machine or platform to platform.
• Sometimes certain locations are restricted
• Devices may appear in the memory address space
• Different amounts of RAM may be present
Historically, programs were aware of these restrictions. Today, virtual memory hides these details. The kernel must still be aware of physical layout.

The Memory Management Unit: The Memory Management Unit (MMU) translates addresses. It uses a per-process mapping structure to transform virtual addresses into physical addresses. The MMU is physical hardware between the CPU and the memory bus.

Software II: Principles of Programming Languages

Lecture 6 – Data Types

Some Basic Definitions
• A data type defines a collection of data objects and a set of predefined operations on those objects
• A descriptor is the collection of the attributes of a variable
• An object represents an instance of a user-defined (abstract data) type
• One design issue for all data types: What operations are defined and how are they specified?

Primitive Data Types
• Almost all programming languages provide a set of primitive data types
• Primitive data types: Those not defined in terms of other data types
• Some primitive data types are merely reflections of the hardware
• Others require only a little non-hardware support for their implementation

The Integer Data Type
• Almost always an exact reflection of the hardware so the mapping is trivial
• There may be as many as eight different integer types in a language
• Java’s signed integer sizes: byte, short, int, long

The Floating Point Data Type
• Model real numbers, but only as approximations
• Languages for scientific use support at least two floating-point types (e.g., float and double; sometimes more)
• Usually exactly like the hardware, but not always
• IEEE Floating-Point Standard 754

Complex Data Type
• Some languages support a complex type, e.g., C99, Fortran, and Python
• Each value consists of two floats, the real part and the imaginary part
• Literal form, with 7 the real component and 3 the imaginary component: (7, 3) in Fortran; (7 + 3j) in Python

The Decimal Data Type
• For business applications (money)
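Two of the points in the lecture excerpt above are easy to try out: floating-point types model real numbers only approximately, and Python has a built-in complex type with the literal form shown. The sketch below assumes Python floats are IEEE 754 doubles (true on mainstream platforms) and uses the standard `struct` module to round a value to single precision; `to_float32` is a hypothetical helper name.

```python
import struct


def to_float32(x: float) -> float:
    """Round a Python float (a 64-bit double) to the nearest 32-bit float value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


# 0.5 is exactly representable in binary, so narrowing to 32 bits loses nothing.
print(to_float32(0.5) == 0.5)

# 0.1 is not exactly representable; the 32-bit and 64-bit approximations differ.
print(to_float32(0.1) == 0.1)

# Complex literal as in the slide: real part 7, imaginary part 3.
z = 7 + 3j
print(z.real, z.imag)
```

The comparison for 0.1 shows why a language that offers both a single- and a double-precision type cannot treat them as interchangeable.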

Should Your Specification Language Be Typed?

LESLIE LAMPORT, Compaq, and LAWRENCE C. PAULSON, University of Cambridge.

Most specification languages have a type system. Type systems are hard to get right, and getting them wrong can lead to inconsistencies. Set theory can serve as the basis for a specification language without types. This possibility, which has been widely overlooked, offers many advantages. Untyped set theory is simple and is more flexible than any simple typed formalism. Polymorphism, overloading, and subtyping can make a type system more powerful, but at the cost of increased complexity, and such refinements can never attain the flexibility of having no types at all. Typed formalisms have advantages too, stemming from the power of mechanical type checking. While types serve little purpose in hand proofs, they do help with mechanized proofs. In the absence of verification, type checking can catch errors in specifications. It may be possible to have the best of both worlds by adding typing annotations to an untyped specification language. We consider only specification languages, not programming languages.

Categories and Subject Descriptors: D.2.1 [Software Engineering]: Requirements/Specifications; D.2.4 [Software Engineering]: Software/Program Verification - formal methods; F.3.1 [Logics and Meanings of Programs]: Specifying and Verifying and Reasoning about Programs - specification techniques

General Terms: Verification

Additional Key Words and Phrases: Set theory, specification, types

Editors’ introduction. We have invited the following

Virtual Memory

Saint Louis University. Virtual Memory. CSCI 224 / ECE 317: Computer Architecture. Instructor: Prof. Jason Fritts. Slides adapted from Bryant & O’Hallaron’s slides.

Data Representation in Memory
• Memory organization within a process
• Virtual vs. Physical memory
  • Fundamental Idea and Purpose
  • Page Mapping
  • Address Translation
  • Per-Process Mapping and Protection

Recall: Basic Memory Organization
• Byte-Addressable Memory
  • Conceptually a very large array, with a unique address for each byte
  • Processor width determines address range:
    • 32-bit processor has 2^32 unique addresses
    • 64-bit processor has 2^64 unique addresses
• Where does a given process reside in memory?
  • Depends upon the perspective…
    – virtual memory: process can use most any virtual address
    – physical memory: location controlled by OS

Virtual Address Space for IA32 (x86) Linux
All processes have the same uniform view of memory.
• Stack
  • Runtime stack (8MB limit)
  • E.g., local variables
• Heap
  • Dynamically allocated storage
  • When calling malloc(), calloc(), new()
• Data
  • Statically allocated data
  • E.g., global variables, arrays, structures, etc.
• Text
  • Executable machine instructions
  • Read-only data

[Figure, not drawn to scale: from 0xFFFFFFFF down to 0x00000000, the regions Stack (8MB), Heap, Data, and Text, with 0x08000000 marked near the bottom.]

Memory Allocation Example
[Figure, not drawn to scale: address space with Stack at the top, 0xFF…F.]

    #include <stdlib.h>      /* malloc */

    char big_array[1<<24];   /* 16 MB */
    char huge_array[1<<28];  /* 256 MB */
    int beyond;
    char *p1, *p2, *p3, *p4;
    int useless() { return 0; }
    int main() {
        p1 = malloc(1 << 28);  /* 256 MB */
        p2 = malloc(1 << 8);   /* 256 B */
        p3 = malloc(1 << 28);  /* 256 MB */
        p4 = malloc(1 << 8);   /* 256 B */
        /* Some print statements ..