CS 351: Systems Programming

Recommended publications

Virtual Memory

Chapter 4: Virtual Memory

Linux processes execute in a virtual environment that makes it appear as if each process had the entire address space of the CPU available to itself. This virtual address space extends from address 0 all the way to the maximum address. On a 32-bit platform, such as IA-32, the maximum address is 2^32 − 1 or 0xffffffff. On a 64-bit platform, such as IA-64, this is 2^64 − 1 or 0xffffffffffffffff.

While it is obviously convenient for a process to be able to access such a huge address space, there are really three distinct, but equally important, reasons for using virtual memory.

1. Resource virtualization. On a system with virtual memory, a process does not have to concern itself with the details of how much physical memory is available or which physical memory locations are already in use by some other process. In other words, virtual memory takes a limited physical resource (physical memory) and turns it into an infinite, or at least an abundant, resource (virtual memory).

2. Information isolation. Because each process runs in its own address space, it is not possible for one process to read data that belongs to another process. This improves security because it reduces the risk of one process being able to spy on another process and, e.g., steal a password.

3. Fault isolation. Processes with their own virtual address spaces cannot overwrite each other’s memory. This greatly reduces the risk of a failure in one process triggering a failure in another process. That is, when a process crashes, the problem is generally limited to that process alone and does not cause the entire machine to go down.

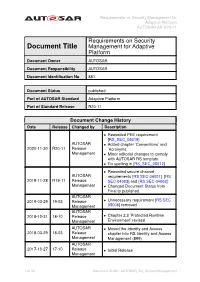

Requirements on Security Management for Adaptive Platform AUTOSAR AP R20-11

Requirements on Security Management for Adaptive Platform, AUTOSAR AP R20-11

Document Title: Requirements on Security Management for Adaptive Platform
Document Owner: AUTOSAR
Document Responsibility: AUTOSAR
Document Identification No: 881
Document Status: published
Part of AUTOSAR Standard: Adaptive Platform
Part of Standard Release: R20-11
Document ID 881: AUTOSAR_RS_SecurityManagement

Document Change History (Date, Release, Changed by: Description)

• 2020-11-30, R20-11, AUTOSAR Release Management: Reworded PEE requirement [RS_SEC_05019]. Added chapter ’Conventions’ and ’Acronyms’. Minor editorial changes to comply with AUTOSAR RS template.
• 2019-11-28, R19-11, AUTOSAR Release Management: Fix spelling in [RS_SEC_05012]. Reworded secure channel requirements [RS_SEC_04001], [RS_SEC_04003] and [RS_SEC_04003]. Changed Document Status from Final to published.
• 2019-03-29, 19-03, AUTOSAR Release Management: Unnecessary requirement [RS_SEC_05006] removed.
• 2018-10-31, 18-10, AUTOSAR Release Management: Chapter 2.3 ’Protected Runtime Environment’ revised.
• 2018-03-29, 18-03, AUTOSAR Release Management: Moved the Identity and Access chapter into RS Identity and Access Management (899).
• 2017-10-27, 17-10, AUTOSAR Release Management: Initial Release.

Disclaimer

This work (specification and/or software implementation) and the material contained in it, as released by AUTOSAR, is for the purpose of information only. AUTOSAR and the companies that have contributed to it shall not be liable for any use of the work. The material contained in this work is protected by copyright and other types of intellectual property rights. The commercial exploitation of the material contained in this work requires a license to such intellectual property rights.

Common Object File Format (COFF)

Application Report SPRAAO8, April 2009

Common Object File Format

ABSTRACT

The assembler and link step create object files in common object file format (COFF). COFF is an implementation of an object file format of the same name that was developed by AT&T for use on UNIX-based systems. This format encourages modular programming and provides powerful and flexible methods for managing code segments and target system memory. This appendix contains technical details about the Texas Instruments COFF object file structure. Much of this information pertains to the symbolic debugging information that is produced by the C compiler. The purpose of this application note is to provide supplementary information on the internal format of COFF object files.

Topics

1 COFF File Structure
2 File Header Structure
3 Optional File Header Format
4 Section Header Structure
5 Structuring Relocation Information
6 Symbol Table Structure and Content

Virtual Memory - Paging

Virtual memory - Paging
Johan Montelius, KTH, 2020

The process
[Figure: memory layout for a 32-bit Linux process. Regions: code (.text), data, heap, stack, kernel. Address labels: 0x00000000, 0xC0000000, 0xffffffff.]

Segments - a possible solution
[Figure: processes in virtual space, address translation by the MMU (base and bounds), physical memory.]

One problem
External fragmentation: free areas of physical memory that are hard to utilize. Solution: allocate larger segments ... internal fragmentation.

Another problem
We’re reserving physical memory that is not used.
[Figure: the used part of a virtual space (code) against physical memory that is reserved but not used.]

Let’s try again
It’s easier to handle fixed-size memory blocks. Can we map a process's virtual space to a set of equal-size blocks? An address is interpreted as a virtual page number (VPN) and an offset.

Remember the segmented MMU
[Figure: the virtual address is split into an index and an offset; the index selects an entry in the segment table; if the offset is within bounds it is added to form the physical address, otherwise the MMU raises an exception.]

The paging MMU
[Figure: the virtual address is split into a VPN and an offset; the VPN is looked up in the page table; if the page is available the physical address is formed, otherwise the MMU raises an exception.]

The MMU
[Figure: virtual address, then Segmentation (exception if not within bounds), giving a linear address, then Paging (exception if the page is not available), giving a physical address.]

A note on the x86 architecture
The x86-32 architecture supports both segmentation and paging. A virtual address is translated to a linear address using a segmentation table. The linear address is then translated to a physical address by paging. Linux and Windows do not use segmentation to separate code, data or stack. The x86-64 (the 64-bit version of the x86 architecture) has dropped many features for segmentation.
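The interpretation of an address as a VPN plus an offset can be sketched in C. This is only an illustration under stated assumptions: 32-bit addresses and 4 KiB pages (a 12-bit offset), neither of which the slides specify. The names `PAGE_SHIFT`, `vpn_of`, `offset_of` and `make_addr` are made up for the example.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption for illustration: 4 KiB pages, so the offset is 12 bits wide. */
#define PAGE_SHIFT 12u
#define PAGE_SIZE  (1u << PAGE_SHIFT)
#define PAGE_MASK  (PAGE_SIZE - 1u)

/* The upper bits of the address select the virtual page. */
static inline uint32_t vpn_of(uint32_t vaddr)
{
    return vaddr >> PAGE_SHIFT;
}

/* The lower bits give the byte position inside that page. */
static inline uint32_t offset_of(uint32_t vaddr)
{
    return vaddr & PAGE_MASK;
}

/* Rebuild an address from a page (or frame) number and an offset. */
static inline uint32_t make_addr(uint32_t page, uint32_t offset)
{
    assert(offset < PAGE_SIZE);   /* an offset never crosses a page boundary */
    return (page << PAGE_SHIFT) | offset;
}
```

With these assumptions, the address 0xC0001234 splits into VPN 0xC0001 and offset 0x234. Because every page has the same size, the split is a shift and a mask rather than a bounds comparison, which is the simplification the slides are pointing at.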

Virtual Memory in X86

Virtual Memory in x86
Nima Honarmand
Fall 2017 :: CSE 306

x86 Processor Modes
• Real mode – walks and talks like a really old x86 chip
  • State at boot
  • 20-bit address space, direct physical memory access
  • 1 MB of usable memory
  • No paging
  • No user mode; processor has only one protection level
• Protected mode – Standard 32-bit x86 mode
  • Combination of segmentation and paging
  • Privilege levels (separate user and kernel)
  • 32-bit virtual address
  • 32-bit physical address
  • 36-bit if Physical Address Extension (PAE) feature enabled
• Long mode – 64-bit mode (aka amd64, x86_64, etc.)
  • Very similar to 32-bit mode (protected mode), but bigger address space
  • 48-bit virtual address space
  • 52-bit physical address space
  • Restricted segmentation use
• Even more obscure modes we won’t discuss today

xv6 uses protected mode w/o PAE (i.e., 32-bit virtual and physical addresses)

Virt. & Phys. Addr. Spaces in x86
• Both RAM and hardware devices (disk, NIC, etc.) connected to system bus
• Mapped to different parts of the physical address space by the BIOS
• You can talk to a device by performing read/write operations on its physical addresses
• Devices are free to interpret reads/writes in any way they want (driver knows)
[Figure: Processor (Core, MMU, Cache) with Virtual Addr, Physical Addr and Data paths, attached through the System Interconnect (Bus) to DRAM (Memory), Network Card, ..., Disk. Legend: all addrs virtual; all addrs physical.]

Virt-to-Phys Translation in x86
Virtual Address 0xdeadbeef, then Segmentation, gives Linear Address 0x0eadbeef, then Paging, gives Physical Address 0x6eadbeef (Protected/Long mode only)
• Segmentation cannot be disabled!
• But can be made a no-op (a.k.a.

Address Translation

CS 152 Computer Architecture and Engineering
Lecture 8 - Address Translation
John Wawrzynek, Electrical Engineering and Computer Sciences, University of California at Berkeley
http://www.eecs.berkeley.edu/~johnw
http://inst.eecs.berkeley.edu/~cs152
9/27/2016, CS152, Fall 2016

CS152 Administrivia
• Lab 2 due Friday
• PS 2 due Tuesday
• Quiz 2 next Thursday!

Last time in Lecture 7
• 3 C’s of cache misses
  – Compulsory, Capacity, Conflict
• Write policies
  – Write back, write-through, write-allocate, no write allocate
• Multi-level cache hierarchies reduce miss penalty
  – 3 levels common in modern systems (some have 4!)
  – Can change design tradeoffs of L1 cache if known to have L2
• Prefetching: retrieve memory data before CPU request
  – Prefetching can waste bandwidth and cause cache pollution
  – Software vs hardware prefetching
• Software memory hierarchy optimizations
  – Loop interchange, loop fusion, cache tiling

Bare Machine
[Figure: processor pipeline (PC, Inst. Cache, Decode, Data Cache) connected through a Memory Controller to Main Memory (DRAM); every address path is labelled Physical Address.]
• In a bare machine, the only kind of address is a physical address

Absolute Addresses
EDSAC, early 50’s
• Only one program ran at a time, with unrestricted access to entire machine (RAM + I/O devices)
• Addresses in a program depended upon where the program was to be loaded in memory
• But it was more convenient for programmers to write location-independent subroutines

How

Lecture 12: Virtual Memory Finale and Interrupts/Exceptions 1 Review And

EE360N: Computer Architecture, Lecture #12
Department of Electrical and Computer Engineering, The University of Texas at Austin
October 9, 2008

Disclaimer: The contents of this document are scribe notes for The University of Texas at Austin EE360N Fall 2008, Computer Architecture. The notes capture the class discussion and may contain erroneous and unverified information and comments.

Lecture 12: Virtual Memory Finale and Interrupts/Exceptions
Lecture #12: October 8, 2008
Lecturer: Derek Chiou
Scribe: Michael Sullivan and Peter Tran

Lecture 12 of EE360N: Computer Architecture summarizes the significance of virtual memory and introduces the concept of interrupts and exceptions. As such, this document is divided into two sections. Section 1 details the key points from the discussion in class on virtual memory. Section 2 goes on to introduce interrupts and exceptions, and shows why they are necessary.

1 Review and Summation of Virtual Memory

The beginning of this lecture wraps up the review of virtual memory. A broad summary of virtual memory systems is given first, in Subsection 1.1. Next, the individual components of the hardware and OS that are required for virtual memory support are covered in 1.2. Finally, the addressing of caches and the interface between the cache and virtual memory are described in 1.3.

1.1 Summary of Virtual Memory Systems

Virtual memory automatically provides the illusion of a large, private, uniform storage space for each process, even on a limited amount of physically addressed memory. The virtual memory space available to every process looks the same every time the process is run, irrespective of the amount of memory available, or the program’s actual placement in main memory.

Virtual Memory

CSE 410: Systems Programming
Virtual Memory
Ethan Blanton, Department of Computer Science and Engineering, University at Buffalo
© 2018 Ethan Blanton / CSE 410: Systems Programming

Outline: Introduction, Address Spaces, Paging, Summary, References

Virtual Memory
Virtual memory is a mechanism by which a system divorces the address space in programs from the physical layout of memory. Virtual addresses are locations in program address space. Physical addresses are locations in actual hardware RAM. With virtual memory, the two need not be equal.

Process Layout
As previously discussed:
• Every process has unmapped memory near NULL
• Processes may have access to the entire address space
• Each process is denied access to the memory used by other processes
Some of these statements seem contradictory. Virtual memory is the mechanism by which this is accomplished. Every address in a process’s address space is a virtual address.

Physical Layout
The physical layout of hardware RAM may vary significantly from machine to machine or platform to platform.
• Sometimes certain locations are restricted
• Devices may appear in the memory address space
• Different amounts of RAM may be present
Historically, programs were aware of these restrictions. Today, virtual memory hides these details. The kernel must still be aware of physical layout.

The Memory Management Unit
The Memory Management Unit (MMU) translates addresses. It uses a per-process mapping structure to transform virtual addresses into physical addresses. The MMU is physical hardware between the CPU and the memory bus.
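A per-process mapping structure of the kind described above might look roughly like the following C sketch. It is not how any particular MMU or kernel is implemented: it assumes a single-level table, 4 KiB pages and a toy address space of 16 pages, and `struct pte`, `struct process` and `translate` are hypothetical names chosen for the example.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define PAGE_SHIFT 12u                        /* assumed 4 KiB pages */
#define PAGE_MASK  ((1u << PAGE_SHIFT) - 1u)
#define NUM_PAGES  16u                        /* toy address space: 16 virtual pages */

struct pte {             /* hypothetical page table entry */
    bool     present;    /* is this virtual page mapped at all? */
    uint32_t frame;      /* physical frame number it maps to */
};

struct process {         /* every process carries its own mapping */
    struct pte page_table[NUM_PAGES];
};

/* Stores the physical address and returns true on success. Returns false
 * where real hardware would raise a fault instead. */
bool translate(const struct process *p, uint32_t vaddr, uint32_t *paddr)
{
    assert(p != NULL && paddr != NULL);

    uint32_t vpn = vaddr >> PAGE_SHIFT;
    if (vpn >= NUM_PAGES || !p->page_table[vpn].present)
        return false;

    *paddr = (p->page_table[vpn].frame << PAGE_SHIFT) | (vaddr & PAGE_MASK);
    return true;
}
```

If two processes both map virtual page 1, one to frame 7 and the other to frame 9, the same virtual address 0x1abc resolves to 0x7abc in the first and 0x9abc in the second. Leaving page 0 unmapped in every table is one way to get the "unmapped memory near NULL" behaviour from the Process Layout slide.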

Virtual Memory

Saint Louis University
Virtual Memory
CSCI 224 / ECE 317: Computer Architecture
Instructor: Prof. Jason Fritts
Slides adapted from Bryant & O’Hallaron’s slides

Data Representation in Memory
• Memory organization within a process
• Virtual vs. Physical memory
  ° Fundamental Idea and Purpose
  ° Page Mapping
  ° Address Translation
  ° Per-Process Mapping and Protection

Recall: Basic Memory Organization
• Byte-Addressable Memory
  ° Conceptually a very large array, with a unique address for each byte
  ° Processor width determines address range:
  ° 32-bit processor has 2^32 unique addresses
  ° 64-bit processor has 2^64 unique addresses
• Where does a given process reside in memory?
  ° depends upon the perspective…
    – virtual memory: process can use most any virtual address
    – physical memory: location controlled by OS

Virtual Address Space
for IA32 (x86) Linux
All processes have the same uniform view of memory
• Stack
  ° Runtime stack (8MB limit)
  ° E.g., local variables
• Heap
  ° Dynamically allocated storage
  ° When you call malloc(), calloc(), new()
• Data
  ° Statically allocated data
  ° E.g., global variables, arrays, structures, etc.
• Text
  ° Executable machine instructions
  ° Read-only data
[Figure, not drawn to scale: address space from 0x00000000 to 0xFFFFFFFF showing Text, Data, Heap and Stack (8MB); the address 0x08000000 is also labelled.]

Memory Allocation Example
[Figure, not drawn to scale: address space with the Stack at the top, 0xFF…F.]

    char big_array[1<<24];  /* 16 MB */
    char huge_array[1<<28]; /* 256 MB */
    int beyond;
    char *p1, *p2, *p3, *p4;

    int useless() { return 0; }

    int main()
    {
        p1 = malloc(1 << 28); /* 256 MB */
        p2 = malloc(1 << 8);  /* 256 B */
        p3 = malloc(1 << 28); /* 256 MB */
        p4 = malloc(1 << 8);  /* 256 B */
        /* Some print statements ..

Chapter 9: Memory Management

Chapter 9: Memory Management
Operating System Concepts, Silberschatz, Galvin and Gagne 2002

• Background
• Swapping
• Contiguous Allocation
• Paging
• Segmentation
• Segmentation with Paging

Background
• Program must be brought into memory and placed within a process for it to be run.
• Input queue – collection of processes on the disk that are waiting to be brought into memory to run the program.
• User programs go through several steps before being run.

Binding of Instructions and Data to Memory
Address binding of instructions and data to memory addresses can happen at three different stages.
• Compile time: If memory location known a priori, absolute code can be generated; must recompile code if starting location changes.
• Load time: Must generate relocatable code if memory location is not known at compile time.
• Execution time: Binding delayed until run time if the process can be moved during its execution from one memory segment to another. Need hardware support for address maps (e.g., base and limit registers).

Multistep Processing of a User Program
[Figure: Multistep Processing of a User Program]

Logical vs. Physical Address Space
• The concept of a logical address space that is bound to a separate physical address space is central to proper memory management.
  ✦ Logical address – generated by the CPU; also referred to as virtual address.
  ✦ Physical address – address seen by the memory unit.
• Logical and physical addresses are the same in compile-time and load-time address-binding schemes; logical (virtual) and physical addresses differ in execution-time address-binding scheme.
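Execution-time binding with base and limit registers can be illustrated with a short C sketch. It is a simplified model, assuming one contiguous region per process and 32-bit addresses; `struct relocation_regs` and `bind_address` are hypothetical names, not taken from the textbook.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

struct relocation_regs {   /* hypothetical register pair */
    uint32_t base;         /* physical address where the process starts */
    uint32_t limit;        /* size of its logical address space, in bytes */
};

/* Binds one logical address at execution time. Returns false where the
 * hardware would trap to the operating system. */
bool bind_address(const struct relocation_regs *r, uint32_t logical,
                  uint32_t *physical)
{
    assert(r && physical);

    if (logical >= r->limit)
        return false;                 /* outside the process's memory */

    *physical = r->base + logical;    /* binding happens on every access */
    return true;
}
```

Because the addition is repeated on each access, the operating system can move the process and only has to update `base`: logical address 0x10 maps to 0x40010 with a base of 0x40000 and to 0x80010 once the base becomes 0x80000. The program's own addresses never change, which is why logical and physical addresses differ under this scheme.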

Design of the RISC-V Instruction Set Architecture

Design of the RISC-V Instruction Set Architecture
Andrew Waterman
Electrical Engineering and Computer Sciences, University of California at Berkeley
Technical Report No. UCB/EECS-2016-1
http://www.eecs.berkeley.edu/Pubs/TechRpts/2016/EECS-2016-1.html
January 3, 2016

Copyright © 2016, by the author(s). All rights reserved. Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission.

Design of the RISC-V Instruction Set Architecture, by Andrew Shell Waterman. A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Computer Science in the Graduate Division of the University of California, Berkeley.

Committee in charge: Professor David Patterson, Chair; Professor Krste Asanović; Associate Professor Per-Olof Persson

Spring 2016

Abstract

The hardware-software interface, embodied in the instruction set architecture (ISA), is arguably the most important interface in a computer system. Yet, in contrast to nearly all other interfaces in a modern computer system, all commercially popular ISAs are proprietary.

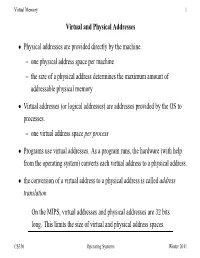

One Physical Address Space Per Machine – the Size of a Physical Address Determines the Maximum Amount of Addressable Physical Memory

Virtual Memory
CS350 Operating Systems, Winter 2011

Virtual and Physical Addresses
• Physical addresses are provided directly by the machine.
  – one physical address space per machine
  – the size of a physical address determines the maximum amount of addressable physical memory
• Virtual addresses (or logical addresses) are addresses provided by the OS to processes.
  – one virtual address space per process
• Programs use virtual addresses. As a program runs, the hardware (with help from the operating system) converts each virtual address to a physical address.
• The conversion of a virtual address to a physical address is called address translation.

On the MIPS, virtual addresses and physical addresses are 32 bits long. This limits the size of virtual and physical address spaces.

What is in a Virtual Address Space?
[Figure: virtual address space from 0x00000000 to 0xffffffff. Text (program code) and read-only data: 0x00400000 − 0x00401b30. Data: 0x10000000 − 0x101200b0. Stack: high end at 0x7fffffff, with the direction of growth marked.]
This diagram illustrates the layout of the virtual address space for the OS/161 test application testbin/sort

Simple Address Translation: Dynamic Relocation
• hardware provides a memory management unit which includes a relocation register
• at run-time, the contents of the relocation register are added to each virtual address to determine the corresponding physical address
• the OS maintains a separate relocation register value for each process, and ensures that relocation register is reset on each context
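The division of labour in dynamic relocation, where the hardware adds and the OS reloads, can be sketched in C as follows. This is a toy model, not OS/161 code: `struct mmu`, `struct proc`, `context_switch` and `translate` are hypothetical names, and the sketch leaves out any bounds check because the excerpt above does not describe one.

```c
#include <assert.h>
#include <stdint.h>

struct mmu  { uint32_t relocation; };   /* the single hardware register */
struct proc { uint32_t relocation; };   /* value the OS keeps per process */

/* OS side: when switching to another process, load that process's
 * saved value into the relocation register. */
void context_switch(struct mmu *mmu, const struct proc *next)
{
    assert(mmu && next);
    mmu->relocation = next->relocation;
}

/* Hardware side: the register is added to every virtual address. */
uint32_t translate(const struct mmu *mmu, uint32_t vaddr)
{
    assert(mmu);
    return mmu->relocation + vaddr;
}
```

With two processes whose saved values are 0x100000 and 0x500000, the virtual address 0x00400000 translates to 0x00500000 while the first is running and to 0x00900000 after switching to the second. Both programs can therefore be linked at the same virtual addresses while occupying different physical memory.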