Virtual Memory

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Better Performance Through a Disk/Persistent-RAM Hybrid Design

The Conquest File System: Better Performance Through a Disk/Persistent-RAM Hybrid Design
An-I Andy Wang, Florida State University
Geoff Kuenning, Harvey Mudd College
Peter Reiher and Gerald Popek, University of California, Los Angeles

Modern file systems assume the use of disk, a system-wide performance bottleneck for over a decade. Current disk caching and RAM file systems either impose high overhead to access memory content or fail to provide mechanisms to achieve data persistence across reboots. The Conquest file system is based on the observation that memory is becoming inexpensive, which enables all file system services to be delivered from memory, except providing large storage capacity. Unlike caching, Conquest uses memory with battery backup as persistent storage, and provides specialized and separate data paths to memory and disk. Therefore, the memory data path contains no disk-related complexity. The disk data path consists of only optimizations for the specialized disk usage pattern.

Compared to a memory-based file system, Conquest incurs little performance overhead. Compared to several disk-based file systems, Conquest achieves 1.3x to 19x faster memory performance, and 1.4x to 2.0x faster performance when exercising both memory and disk. Conquest realizes most of the benefits of persistent RAM at a fraction of the cost of a RAM-only solution. Conquest also demonstrates that disk-related optimizations impose high overheads for accessing memory content in a memory-rich environment.

Categories and Subject Descriptors: D.4.2 [Operating Systems]: Storage Management - Storage Hierarchies; D.4.3 [Operating Systems]: File System Management - Access Methods and Directory Structures; D.4.8 [Operating Systems]: Performance - Measurements
General Terms: Design, Experimentation, Measurement, and Performance
Additional Key Words and Phrases: Persistent RAM, File Systems, Storage Management, and Performance Measurement

Virtual Memory

Chapter 4 Virtual Memory

Linux processes execute in a virtual environment that makes it appear as if each process had the entire address space of the CPU available to itself. This virtual address space extends from address 0 all the way to the maximum address. On a 32-bit platform, such as IA-32, the maximum address is 2^32 − 1 or 0xffffffff. On a 64-bit platform, such as IA-64, this is 2^64 − 1 or 0xffffffffffffffff. While it is obviously convenient for a process to be able to access such a huge address space, there are really three distinct, but equally important, reasons for using virtual memory.

1. Resource virtualization. On a system with virtual memory, a process does not have to concern itself with the details of how much physical memory is available or which physical memory locations are already in use by some other process. In other words, virtual memory takes a limited physical resource (physical memory) and turns it into an infinite, or at least an abundant, resource (virtual memory).
2. Information isolation. Because each process runs in its own address space, it is not possible for one process to read data that belongs to another process. This improves security because it reduces the risk of one process being able to spy on another process and, e.g., steal a password.
3. Fault isolation. Processes with their own virtual address spaces cannot overwrite each other’s memory. This greatly reduces the risk of a failure in one process triggering a failure in another process. That is, when a process crashes, the problem is generally limited to that process alone and does not cause the entire machine to go down.
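The address-space limits quoted in this excerpt follow directly from the address width. A minimal Python sketch of that arithmetic (the function name `max_virtual_address` is an illustrative choice, not something from the chapter):

```python
def max_virtual_address(address_bits: int) -> int:
    """Return the highest address of a flat address space of the given width."""
    if address_bits <= 0:
        raise ValueError("address_bits must be positive")
    # An n-bit address can name 2**n bytes, numbered 0 .. 2**n - 1.
    return (1 << address_bits) - 1


for bits in (32, 64):
    top = max_virtual_address(bits)
    print(f"{bits}-bit: 0 .. {top:#x} ({top + 1} addressable bytes)")
```

This covers only the size of the address space the hardware can name; how much of it a given operating system actually hands to a process is a separate question that the excerpt does not address.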

The Case for Compressed Caching in Virtual Memory Systems

THE ADVANCED COMPUTING SYSTEMS ASSOCIATION

The following paper was originally published in the Proceedings of the USENIX Annual Technical Conference, Monterey, California, USA, June 6-11, 1999.

The Case for Compressed Caching in Virtual Memory Systems
Paul R. Wilson, Scott F. Kaplan, and Yannis Smaragdakis
Dept. of Computer Sciences, University of Texas at Austin, Austin, Texas 78751-1182
{wilson|sfkaplan|smaragd}@cs.utexas.edu
http://www.cs.utexas.edu/users/oops/

© 1999 by The USENIX Association. All Rights Reserved. Rights to individual papers remain with the author or the author's employer. Permission is granted for noncommercial reproduction of the work for educational or research purposes. This copyright notice must be included in the reproduced paper. USENIX acknowledges all trademarks herein. For more information about the USENIX Association: Phone: 1 510 528 8649, FAX: 1 510 548 5738, Email: [email protected], WWW: http://www.usenix.org

Abstract

Compressed caching uses part of the available RAM to hold pages in compressed form, effectively adding a new level to the virtual memory hierarchy. This level attempts to bridge the huge performance gap between normal (uncompressed) RAM and disk.

Introduction (excerpt)

In [Wil90, Wil91b] we proposed compressed caching for virtual memory: storing pages in compressed form in a main memory compression cache to reduce disk paging. Appel also promoted this idea [AL91], and it was evaluated empirically by Douglis [Dou93] and by Russinovich and Cogswell [RC96]. Unfortunately Douglis’s
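The idea of holding evicted pages in compressed form in RAM can be sketched in a few lines of Python. This is a toy model under stated assumptions: a 4096-byte page, `zlib` as a stand-in compressor (not the algorithm used in the paper), and a hypothetical `CompressedCache` class whose names are ours.

```python
import zlib

PAGE_SIZE = 4096  # assumed page size in bytes


class CompressedCache:
    """Toy compression cache: evicted pages stay in RAM, but compressed."""

    def __init__(self):
        self._store = {}  # page number -> compressed bytes

    def evict(self, page_no: int, data: bytes) -> int:
        """Compress a page on eviction and return its compressed size."""
        if len(data) != PAGE_SIZE:
            raise ValueError("expected exactly one page of data")
        blob = zlib.compress(data)
        self._store[page_no] = blob
        return len(blob)

    def fault(self, page_no: int) -> bytes:
        """Serve a fault by decompressing from RAM instead of reading disk."""
        return zlib.decompress(self._store.pop(page_no))

    def bytes_used(self) -> int:
        """RAM currently consumed by compressed pages."""
        return sum(len(blob) for blob in self._store.values())


cache = CompressedCache()
page = (b"virtual memory " * 300)[:PAGE_SIZE]  # highly repetitive sample page
stored = cache.evict(7, page)
print(f"page 7 stored in {stored} of {PAGE_SIZE} bytes")
restored = cache.fault(7)
print("round trip ok:", restored == page)
```

The sample page is deliberately repetitive, so it compresses well; real pages vary, and whether the scheme pays off depends on the compression ratio and on compression time relative to disk latency.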

Memory Protection at Option

Memory Protection at Option: Application-Tailored Memory Safety in Safety-Critical Embedded Systems
(German title: Speicherschutz nach Wahl: Auf die Anwendung zugeschnittene Speichersicherheit in sicherheitskritischen eingebetteten Systemen)

Submitted to the Faculty of Engineering (Technische Fakultät) of the Universität Erlangen-Nürnberg for the degree of Doktor-Ingenieur by Michael Stilkerich. Erlangen, 2012.

Approved as a dissertation by the Faculty of Engineering, Universität Erlangen-Nürnberg.
Date of submission: 09.07.2012
Date of doctoral examination: 30.11.2012
Dean: Prof. Dr.-Ing. Marion Merklein
Reviewers: Prof. Dr.-Ing. Wolfgang Schröder-Preikschat, Prof. Dr. Michael Philippsen

Abstract

With the increasing capabilities and resources available on microcontrollers, there is a trend in the embedded industry to integrate multiple software functions on a single system to save cost, size, weight, and power. The integration raises new requirements, among them the need for spatial isolation, which is commonly established by using a memory protection unit (MPU) that can constrain access to the physical address space to a fixed set of address regions. MPU-based protection is limited in terms of available hardware, flexibility, granularity and ease of use. Software-based memory protection can provide an alternative to, or complement, MPU-based protection, but has received little attention in the embedded domain.

In this thesis, I evaluate qualitative and quantitative advantages and limitations of MPU-based memory protection and software-based protection based on a multi-JVM. I developed a framework composed of the AUTOSAR OS-like operating system CiAO and KESO, a Java implementation for deeply embedded systems. The framework allows choosing from no memory protection, MPU-based protection, software-based protection, and a combination of the two.

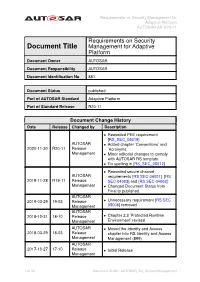

Requirements on Security Management for Adaptive Platform AUTOSAR AP R20-11

Requirements on Security Management for Adaptive Platform
AUTOSAR AP R20-11

Document Title: Requirements on Security Management for Adaptive Platform
Document Owner: AUTOSAR
Document Responsibility: AUTOSAR
Document Identification No: 881
Document Status: published
Part of AUTOSAR Standard: Adaptive Platform
Part of Standard Release: R20-11

Document Change History (Date, Release, Changed by, Description)

2020-11-30, R20-11, AUTOSAR Release Management
• Reworded PEE requirement [RS_SEC_05019]
• Added chapter ’Conventions’ and ’Acronyms’
• Minor editorial changes to comply with AUTOSAR RS template

2019-11-28, R19-11, AUTOSAR Release Management
• Fix spelling in [RS_SEC_05012]
• Reworded secure channel requirements [RS_SEC_04001], [RS_SEC_04003] and [RS_SEC_04003]
• Changed Document Status from Final to published

2019-03-29, 19-03, AUTOSAR Release Management
• Unnecessary requirement [RS_SEC_05006] removed

2018-10-31, 18-10, AUTOSAR Release Management
• Chapter 2.3 ’Protected Runtime Environment’ revised

2018-03-29, 18-03, AUTOSAR Release Management
• Moved the Identity and Access chapter into RS Identity and Access Management (899)

2017-10-27, 17-10, AUTOSAR Release Management
• Initial Release

Disclaimer

This work (specification and/or software implementation) and the material contained in it, as released by AUTOSAR, is for the purpose of information only. AUTOSAR and the companies that have contributed to it shall not be liable for any use of the work. The material contained in this work is protected by copyright and other types of intellectual property rights. The commercial exploitation of the material contained in this work requires a license to such intellectual property rights.

Chapter 3. Booting Operating Systems

Chapter 3. Booting Operating Systems

Abstract: Chapter 3 provides complete coverage of operating system booting. It explains the booting principle and the booting sequence of various kinds of bootable devices. These include booting from floppy disk, hard disk, CDROM and USB drives. Instead of writing a customized booter to boot up only MTX, it shows how to develop booter programs to boot up real operating systems, such as Linux, from a variety of bootable devices. In particular, it shows how to boot up generic Linux bzImage kernels with initial ramdisk support. It is shown that the hard disk and CDROM booters developed in this book are comparable to GRUB and isolinux in performance. In addition, it demonstrates the booter programs with sample systems.

3.1. Booting

Booting, which is short for bootstrap, refers to the process of loading an operating system image into computer memory and starting up the operating system. As such, it is the first step in running an operating system. Despite its importance and widespread interest among computer users, the subject of booting is rarely discussed in operating system books. Information on booting is usually scattered and, in most cases, incomplete. A systematic treatment of the booting process has been lacking. The purpose of this chapter is to try to fill this void. In this chapter, we shall discuss the booting principle and show how to write booter programs to boot up real operating systems. As one might expect, the booting process is highly machine dependent. To be more specific, we shall only consider the booting process of Intel x86 based PCs.

Virtual Memory

Virtual Memory
Copyright ©: University of Illinois CS 241 Staff

Address Space Abstraction
- Program address space
  - All memory data
  - i.e., program code, stack, data segment
- Hardware interface (physical reality)
  - Computer has one small, shared memory
- Application interface (illusion)
  - Each process wants private, large memory
- How can we close this gap?

Address Space Illusions
- Address independence
  - Same address can be used in different address spaces yet remain logically distinct
- Protection
  - One address space cannot access data in another address space
- Virtual memory
  - Address space can be larger than the amount of physical memory on the machine

Address Space Illusions: Virtual Memory
- Illusion
  - Giant (virtual) address space
  - Protected from others (unless you want to share it)
  - More whenever you want it
- Reality
  - Many processes sharing one (physical) address space
  - Limited memory
- Today: an introduction to virtual memory, the memory space seen by your programs!

Multi-Programming
- Multiple processes in memory at the same time
- Goals
  1. Lay out processes in memory as needed
  2. Protect each process’s memory from accesses by other processes
  3. Minimize performance overheads
  4. Maximize memory utilization

OS & memory management
- Main memory is normally divided into two parts: kernel memory and user memory
- In a multiprogramming system, the “user” part of main memory needs to be divided to accommodate multiple processes.
- The user memory is dynamically managed by the OS (known as memory management) to satisfy the following requirements:
  - Protection
  - Relocation
  - Sharing
  - Memory organization (main mem.
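The "address independence" and "protection" illusions from these slides can be made concrete with a toy per-process page table. The following Python sketch is a simplification under assumptions that are ours, not the slides': a single-level table, a 4096-byte page size, and hypothetical names (`AddressSpace`, `PageFault`).

```python
PAGE_SIZE = 4096  # assumed page size in bytes


class PageFault(Exception):
    """Raised when a virtual page has no mapping in this address space."""


class AddressSpace:
    """Toy address space: a private map from virtual pages to physical frames."""

    def __init__(self, name, page_table):
        self.name = name
        self.page_table = dict(page_table)  # virtual page number -> frame number

    def translate(self, vaddr: int) -> int:
        """Translate a virtual address into a physical address."""
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        if vpn not in self.page_table:
            raise PageFault(f"{self.name}: no mapping for address {vaddr:#x}")
        return self.page_table[vpn] * PAGE_SIZE + offset


# Two processes map the same virtual page (0x10) to different physical frames.
a = AddressSpace("A", {0x10: 3})
b = AddressSpace("B", {0x10: 8})
vaddr = 0x10 * PAGE_SIZE + 0x2A

print(f"A: {vaddr:#x} -> {a.translate(vaddr):#x}")
print(f"B: {vaddr:#x} -> {b.translate(vaddr):#x}")
```

The same virtual address resolves to different physical locations in the two address spaces, and neither table contains an entry that reaches the other's frame, which is the essence of the protection illusion in this simplified model.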

Extracting Compressed Pages from the Windows 10 Virtual Store

White paper: Extracting Compressed Pages from the Windows 10 Virtual Store

Abstract

Windows 8.1 introduced memory compression in August 2013. By the end of 2013 Linux 3.11 and OS X Mavericks leveraged compressed memory as well. Disk I/O continues to be orders of magnitude slower than RAM, whereas reading and decompressing data in RAM is fast and highly parallelizable across the system’s CPU cores, yielding a significant performance increase. However, this came at the cost of increased complexity of process memory reconstruction and thus reduced the power of popular tools such as Volatility, Rekall, and Redline. In this document we introduce a method to retrieve compressed pages from the Windows 10 Memory Manager Virtual Store, thus providing forensics and auditing tools with a way to retrieve, examine, and reconstruct memory artifacts regardless of their storage location.

Introduction

Windows 10 moves pages between physical memory and the hard disk or the Store Manager’s virtual store when memory is constrained. Universal Windows Platform (UWP) applications leverage the Virtual Store any time they are suspended (as is the case when minimized). When a given page is no longer in the process’s working set, the corresponding Page Table Entry (PTE) is used by the OS to specify the storage location as well as additional data that allows it to start the retrieval process. In the case of a page file, the retrieval is straightforward because both the page file index and the location of the page within the page file can be directly retrieved.
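To illustrate what "directly retrieved" can mean for a paged-out page, here is a toy Python decoder for a software PTE. The bit layout is purely hypothetical (valid flag in bit 0, page file index in bits 1 to 4, page number within the file in bits 32 to 63); it is not the actual Windows 10 layout, which the excerpt does not give, and the function name is ours.

```python
PAGE_SIZE = 4096  # assumed page size in bytes

# Hypothetical field positions, chosen for illustration only.
VALID_BIT = 0x1
INDEX_SHIFT, INDEX_MASK = 1, 0xF
OFFSET_SHIFT, OFFSET_MASK = 32, 0xFFFFFFFF


def decode_pagefile_pte(pte: int):
    """Return (page_file_index, byte_offset_in_file) for a paged-out PTE."""
    if pte & VALID_BIT:
        raise ValueError("valid bit set: page is resident, not in a page file")
    index = (pte >> INDEX_SHIFT) & INDEX_MASK
    page_number = (pte >> OFFSET_SHIFT) & OFFSET_MASK
    return index, page_number * PAGE_SIZE


# Build a sample entry: page file 2, page number 0x1234 within that file.
sample = (0x1234 << OFFSET_SHIFT) | (2 << INDEX_SHIFT)
index, offset = decode_pagefile_pte(sample)
print(f"page file {index}, byte offset {offset:#x}")
```

A real tool would take the field positions from the operating system's published or reverse-engineered structure definitions for the exact build being analysed, rather than from constants like these.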

ELF1 7D Virtual Memory

ELF1 7D Virtual Memory
Young W. Lim
2021-07-06 Tue

Outline
1. Based on
2. Virtual memory
   - Virtual Memory in Operating System
   - Physical, Logical, Virtual Addresses
   - Kernel Addresses
   - Kernel Logical Address
   - Kernel Virtual Address
   - User Virtual Address
   - User Space

Based on
"Study of ELF loading and relocs", 1999
http://netwinder.osuosl.org/users/p/patb/public_html/elf_relocs.html

I, the copyright holder of this work, hereby publish it under the following licenses:

GNU Free Documentation License: Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is included in the section entitled GNU Free Documentation License.

CC BY-SA: This file is licensed under the Creative Commons Attribution ShareAlike 3.0 Unported License. In short: you are free to share and make derivative works of the file under the conditions that you appropriately attribute it, and that you distribute it only under a license compatible with this one.

Compiling 32-bit program on 64-bit gcc
   gcc -v
   gcc -m32 t.c
   sudo apt-get install gcc-multilib
   sudo apt-get install g++-multilib
   gcc-multilib g++-multilib
   gcc -m32
   objdump -m i386

HALO: Post-Link Heap-Layout Optimisation

HALO: Post-Link Heap-Layout Optimisation
Joe Savage, University of Cambridge, UK, [email protected]
Timothy M. Jones, University of Cambridge, UK, [email protected]

Abstract

Today, general-purpose memory allocators dominate the landscape of dynamic memory management. While these solutions can provide reasonably good behaviour across a wide range of workloads, it is an unfortunate reality that their behaviour for any particular workload can be highly suboptimal. By catering primarily to average and worst-case usage patterns, these allocators deny programs the advantages of domain-specific optimisations, and thus may inadvertently place data in a manner that hinders performance, generating unnecessary cache misses and load stalls. To help alleviate these issues, we propose HALO: a post-link profile-guided optimisation tool that can improve the

1 Introduction

As the gap between memory and processor speeds continues to widen, efficient cache utilisation is more important than ever. While compilers have long employed techniques like basic-block reordering, loop fission and tiling, and intelligent register allocation to improve the cache behaviour of programs, the layout of dynamically allocated memory remains largely beyond the reach of static tools.

Today, when a C++ program calls new, or a C program malloc, its request is satisfied by a general-purpose allocator with no intimate knowledge of what the program does or how its data objects are used. Allocations are made through fixed, lifeless interfaces, and fulfilled by
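Why heap layout affects cache behaviour can be shown with a small simulation. The Python sketch below is not HALO's algorithm; it only counts how many cache lines a set of frequently used ("hot") objects occupies under two placements. The 64-byte line size, the bump allocator, the object sizes, and all names are assumptions made for illustration.

```python
CACHE_LINE = 64  # assumed cache line size in bytes


def bump_allocate(sizes):
    """Assign consecutive addresses, as a simple bump allocator would."""
    addresses, cursor = [], 0
    for size in sizes:
        addresses.append(cursor)
        cursor += size
    return addresses


def hot_lines(requests):
    """Count distinct cache lines occupied by the objects tagged 'hot'."""
    addresses = bump_allocate([size for _, size in requests])
    lines = set()
    for addr, (kind, size) in zip(addresses, requests):
        if kind == "hot":
            first = addr // CACHE_LINE
            last = (addr + size - 1) // CACHE_LINE
            lines.update(range(first, last + 1))
    return len(lines)


# Allocation order as the program issues it: hot and cold objects interleaved.
interleaved = [("hot", 16), ("cold", 48)] * 8
# Same objects, but with the hot ones placed next to each other.
grouped = sorted(interleaved, key=lambda request: request[0] != "hot")

print("hot cache lines, interleaved:", hot_lines(interleaved))
print("hot cache lines, grouped:    ", hot_lines(grouped))
```

In this contrived case the eight hot objects spread over eight cache lines when interleaved with cold data, but fit in two when grouped, so a traversal of the hot objects would touch a quarter as many lines.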

Isolation, Resource Management, and Sharing in Java

Processes in KaffeOS: Isolation, Resource Management, and Sharing in Java
Godmar Back, Wilson C. Hsieh, Jay Lepreau
School of Computing, University of Utah

Abstract

Single-language runtime systems, in the form of Java virtual machines, are widely deployed platforms for executing untrusted mobile code. These runtimes provide some of the features that operating systems provide: inter-application memory protection and basic system services. They do not, however, provide the ability to isolate applications from each other, or limit their resource consumption. This paper describes KaffeOS, a Java runtime system that provides these features. The KaffeOS architecture takes many lessons from operating system design, such as the use of a user/kernel boundary, and employs garbage collection techniques, such as write barriers.

The KaffeOS architecture supports the OS abstraction of a process in a Java virtual machine.

Introduction (excerpt)

[...] many environments for executing untrusted code: for example, applets, servlets, active packets [41], database queries [15], and kernel extensions [6]. Current systems (such as Java) provide memory protection through the enforcement of type safety and secure system services through a number of mechanisms, including namespace and access control. Unfortunately, malicious or buggy applications can deny service to other applications. For example, a Java applet can generate excessive amounts of garbage and cause a Web browser to spend all of its time collecting it.

To support the execution of untrusted code, type-safe language runtimes need to provide a mechanism to isolate and manage the resources of applications, analogous to that provided by operating systems. Although other resource management abstractions exist [4], the classic OS

FIRM: Fair and High-Performance Memory Control for Persistent Memory Systems

FIRM: Fair and High-Performance Memory Control for Persistent Memory Systems
Jishen Zhao∗†, Onur Mutlu†, Yuan Xie‡∗
∗Pennsylvania State University, †Carnegie Mellon University, ‡University of California, Santa Barbara, Hewlett-Packard Labs
∗[email protected], †[email protected], ‡[email protected]

Abstract

Byte-addressable nonvolatile memories promise a new technology, persistent memory, which incorporates desirable attributes from both traditional main memory (byte-addressability and fast interface) and traditional storage (data persistence). To support data persistence, a persistent memory system requires sophisticated data duplication and ordering control for write requests. As a result, applications that manipulate persistent memory (persistent applications) have very different memory access characteristics than traditional (non-persistent) applications, as shown in this paper. Persistent applications introduce heavy write traffic to contiguous memory regions at a memory channel, which cannot concurrently service read and write requests, leading to memory bandwidth underutilization due to low bank-level parallelism, frequent write queue drains, and frequent bus turnarounds between reads and writes. These characteristics undermine the high performance and fairness offered by conventional memory scheduling schemes designed for non-persistent applications.

Introduction (excerpt)

[...] works [54, 92] even demonstrated a persistent memory system with performance close to that of a system without persistence support in memory.

Various types of physical devices can be used to build persistent memory, as long as they appear byte-addressable and nonvolatile to applications. Examples of such byte-addressable nonvolatile memories (BA-NVMs) include spin-transfer torque RAM (STT-MRAM) [31, 93], phase-change memory (PCM) [75, 81], resistive random-access memory (ReRAM) [14, 21], battery-backed DRAM [13, 18, 28], and nonvolatile dual in-line memory modules (NV-DIMMs) [89].

As it is in its early stages of development, persistent memory
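The "bus turnarounds between reads and writes" mentioned in this abstract can be counted in a toy model. The Python sketch below is not the FIRM scheduler; it assumes a single channel that serves requests in the order given and charges one turnaround whenever the direction changes. The function name and the request strings are illustrative.

```python
def count_turnarounds(requests: str) -> int:
    """Count direction switches in a sequence of 'R' (read) and 'W' (write)."""
    return sum(1 for prev, cur in zip(requests, requests[1:]) if prev != cur)


interleaved = "RWRWRWRW"  # reads and writes strictly alternating
batched = "RRRRWWWW"      # same requests, grouped by direction

print("interleaved:", count_turnarounds(interleaved), "turnarounds")
print("batched:    ", count_turnarounds(batched), "turnarounds")
```

With the same eight requests, strict alternation costs seven turnarounds while grouping by direction costs one. A real memory controller also has to respect ordering, fairness, and latency constraints that this model ignores.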