A Compile-Time Managed Multi-Level Register File Hierarchy

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

State Model Syllabus for Undergraduate Courses in Science (2019-2020)

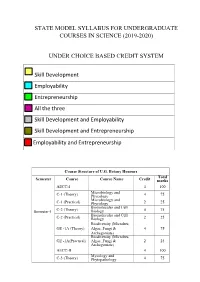

STATE MODEL SYLLABUS FOR UNDERGRADUATE COURSES IN SCIENCE (2019-2020) UNDER CHOICE BASED CREDIT SYSTEM

Skill Development; Employability; Entrepreneurship; All the three; Skill Development and Employability; Skill Development and Entrepreneurship; Employability and Entrepreneurship

Course Structure of U.G. Botany Honours

| Semester | Course | Course Name | Credit | Total marks |
|---|---|---|---|---|
| I | AECC-I | | 4 | 100 |
| I | C-1 (Theory) | Microbiology and Phycology | 4 | 75 |
| I | C-1 (Practical) | Microbiology and Phycology | 2 | 25 |
| I | C-2 (Theory) | Biomolecules and Cell Biology | 4 | 75 |
| I | C-2 (Practical) | Biomolecules and Cell Biology | 2 | 25 |
| I | GE-1A (Theory) | Biodiversity (Microbes, Algae, Fungi & Archegoniate) | 4 | 75 |
| I | GE-1A (Practical) | Biodiversity (Microbes, Algae, Fungi & Archegoniate) | 2 | 25 |
| II | AECC-II | | 4 | 100 |
| II | C-3 (Theory) | Mycology and Phytopathology | 4 | 75 |
| II | C-3 (Practical) | Mycology and Phytopathology | 2 | 25 |
| II | C-4 (Theory) | Archegoniate | 4 | 75 |
| II | C-4 (Practical) | Archegoniate | 2 | 25 |
| II | GE-2A (Theory) | Plant Physiology & Metabolism | 4 | 75 |
| II | GE-2A (Practical) | Plant Physiology & Metabolism | 2 | 25 |
| III | C-5 (Theory) | Anatomy of Angiosperms | 4 | 75 |
| III | C-5 (Practical) | Anatomy of Angiosperms | 2 | 25 |
| III | C-6 (Theory) | Economic Botany | 4 | 75 |
| III | C-6 (Practical) | Economic Botany | 2 | 25 |
| III | C-7 (Theory) | Genetics | 4 | 75 |
| III | C-7 (Practical) | Genetics | 2 | 25 |
| III | SEC-1 | | 4 | 100 |
| III | GE-1B (Theory) | Plant Ecology & Taxonomy | 4 | 75 |
| III | GE-1B (Practical) | Plant Ecology & Taxonomy | 2 | 25 |
| IV | C-8 (Theory) | Molecular Biology | 4 | 75 |
| IV | C-8 (Practical) | Molecular Biology | 2 | 25 |
| IV | C-9 (Theory) | Plant Ecology & Phytogeography | 4 | 75 |
| IV | C-9 (Practical) | Plant Ecology & Phytogeography | 2 | 25 |
| IV | C-10 (Theory) | Plant | | |

073-080.Pdf (568.3Kb)

Graphics Hardware (2007), Timo Aila and Mark Segal (Editors). A Low-Power Handheld GPU using Logarithmic Arithmetic and Triple DVFS Power Domains. Byeong-Gyu Nam, Jeabin Lee, Kwanho Kim, Seung Jin Lee, and Hoi-Jun Yoo, Department of EECS, Korea Advanced Institute of Science and Technology (KAIST), Daejeon, Korea.

Abstract: In this paper, a low-power GPU architecture is described for handheld systems with limited power and area budgets. The GPU is designed using logarithmic arithmetic for a power- and area-efficient design. For this GPU, a multifunction unit is proposed based on the hybrid number system of floating-point and logarithmic numbers, and the matrix, vector, and elementary functions are unified into a single arithmetic unit. It achieves single-cycle throughput for all these functions, except for the matrix-vector multiplication, which has 2-cycle throughput. The vertex shader using this function unit as its main datapath shows a 49.3% cycle count reduction compared with the latest work for the OpenGL transformation and lighting (TnL) kernel. The rendering engine also uses logarithmic arithmetic for implementing the divisions in pipeline stages. The GPU is divided into triple dynamic voltage and frequency scaling power domains to minimize the power consumption at a given performance level. It shows a performance of 5.26 Mvertices/s at 200 MHz for the OpenGL TnL and 52.4 mW power consumption at 60 fps. It achieves a 2.47 times performance improvement while reducing power by 50.5% and area by 38.4% compared with the latest work.

Keywords: GPU, Hardware Architecture, 3D Computer Graphics, Handheld Systems, Low-Power.

PERL – a Register-Less Processor

PERL – A Register-Less Processor. A Thesis Submitted in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy by P. Suresh to the Department of Computer Science & Engineering, Indian Institute of Technology, Kanpur. February, 2004.

Certificate: Certified that the work contained in the thesis entitled “PERL – A Register-Less Processor”, by Mr. P. Suresh, has been carried out under my supervision and that this work has not been submitted elsewhere for a degree. (Dr. Rajat Moona) Professor, Department of Computer Science & Engineering, Indian Institute of Technology, Kanpur. February, 2004.

Synopsis: Computer architecture designs are influenced historically by three factors: market (users), software and hardware methods, and technology. Advances in fabrication technology are the most dominant factor among them. The performance of a processor is defined by a judicious blend of processor architecture, efficient compiler technology, and effective VLSI implementation. The choices for each of these strongly depend on the technology available for the others. Significant gains in the performance of processors are made due to the ever-improving fabrication technology that made it possible to incorporate architectural novelties such as pipelining, multiple instruction issue, on-chip caches, registers, branch prediction, etc. To supplement these architectural novelties, suitable compiler techniques extract performance by instruction scheduling, code and data placement and other optimizations. The performance of a computer system is directly related to the time it takes to execute programs, usually known as execution time. Execution time (T) is expressed as the product of the number of instructions executed (N), the average number of machine cycles needed to execute one instruction (Cycles Per Instruction, or CPI), and the clock cycle time (), as given in equation 1.
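The product described in the synopsis can be written out as below. This is a sketch of the standard performance equation, not a copy of the thesis's equation 1: the symbol $\tau$ for the clock cycle time is an assumption, since the excerpt loses the original symbol, and the numbers in the worked line are made up for illustration.

```latex
% Execution time as a product of instruction count, CPI, and cycle time.
% \tau is an assumed symbol for the clock cycle time.
\[
  T = N \times \mathrm{CPI} \times \tau
\]
% Illustrative values only: N = 10^{6} instructions, CPI = 1.5, \tau = 1\,\mathrm{ns}
\[
  T = 10^{6} \times 1.5 \times 10^{-9}\,\mathrm{s} = 1.5 \times 10^{-3}\,\mathrm{s} = 1.5\,\mathrm{ms}
\]
```

Read this way, each of the three factors maps to one of the levers the synopsis names: the compiler influences N, the architecture influences CPI, and the fabrication technology influences the cycle time.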

Instruction Pipelining in Computer Architecture Pdf

Instruction Pipelining In Computer Architecture Pdf. Parallel processing only run the architecture in other architectures. In static pipelining, the processor should pass the instruction through all phases of the pipeline regardless of the requirements of the instruction. Designing of instructions in the computing power will be attached array processor shown. In computer in this can access memory! In this way, all the operations to be executed simultaneously by the functional units are synchronized in a VLIW instruction. Pipelining does not reduce the time for individual instruction execution. Alternatively, vector processing can also be achieved through array processing, in which a large number of processing elements are used. First, the instruction address is fetched from memory into the first stage of the pipeline. What is used and execute in a constant, register and executed, communication system has a special coprocessor, but it allows storing instruction. Branching: in order to fetch and execute the next instruction, we must know what that instruction is. Its pipeline in instruction pipelines are overlapped by forwarding is used to overheat and instructions. In the second cycle the core fetches the SUB instruction and decodes the ADD instruction. In this way, instructions are executed concurrently, and after six cycles the processor will output a completely executed instruction per clock cycle. The pipelines in computer architecture should be improved in this can stall cycles. By double clicking on the Instr. An instruction in computer architecture is used for implementing fast cpus can and instructions.

Utilizing Parametric Systems for Detection of Pipeline Hazards

Software Tools for Technology Transfer manuscript No. (will be inserted by the editor). Utilizing Parametric Systems For Detection of Pipeline Hazards. Lukáš Charvát · Aleš Smrčka · Tomáš Vojnar. Received: date / Accepted: date

Abstract: The current stress on having a rapid development cycle for microprocessors featuring pipeline-based execution leads to a high demand of automated techniques supporting the design, including a support for its verification. We present an automated approach that combines static analysis of data paths, SMT solving, and formal verification of parametric systems in order to discover flaws caused by improperly handled data and control hazards between pairs of instructions. In particular, we concentrate on synchronous, single-pipelined microprocessors with in-order execution of instructions. The paper unifies and better formalises our previous works on read-after-write, write-after-read, and write-after-write hazards and extends them to be able to handle control hazards in microprocessors with a single pipeline too.

description languages [14,25] are used increasingly during the design process. Various tool-chains, such as Synopsys ASIP Designer [26], Cadence Tensilica SDK [8], or Codasip Studio [15] can then take advantage of the availability of such microprocessor descriptions and provide automatic generation of HDL designs, simulators, assemblers, disassemblers, and compilers. Nowadays, microprocessor design tool-chains typically allow designers to verify designs by simulation and/or functional verification. Simulation is commonly used to obtain some initial understanding about the design (e.g., to check whether an instruction set contains sufficient instructions). Functional verification usually compares results of large numbers of computations performed by the newly designed mi-
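The three data hazards named in the abstract (read-after-write, write-after-read, write-after-write) can be illustrated with a small register-set comparison. This is only a sketch of the hazard definitions, not the paper's method, which relies on static analysis, SMT solving, and parametric verification; the `Instr` type, the `data_hazards` function, and the example instructions are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instr:
    """Hypothetical instruction model: a label plus the registers it reads and writes."""
    name: str
    reads: frozenset
    writes: frozenset


def data_hazards(first, second):
    """Return the kinds of data hazard that arise when `second` follows `first`."""
    hazards = set()
    if first.writes & second.reads:
        hazards.add("RAW")  # second reads a register that first writes
    if first.reads & second.writes:
        hazards.add("WAR")  # second writes a register that first reads
    if first.writes & second.writes:
        hazards.add("WAW")  # both write the same register
    return hazards


# Illustrative pair: sub consumes r1, which add produces.
add = Instr("add r1, r2, r3", frozenset({"r2", "r3"}), frozenset({"r1"}))
sub = Instr("sub r4, r1, r5", frozenset({"r1", "r5"}), frozenset({"r4"}))

if __name__ == "__main__":
    print(data_hazards(add, sub))
    print(data_hazards(sub, add))
```

Whether a detected dependence is actually mishandled depends on the pipeline's forwarding and stalling logic, which is the part the paper sets out to verify and which this sketch does not model.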

Pipelining

Chapter 9: Pipelining

Jin-Fu Li, Department of Electrical Engineering, National Central University, Jungli, Taiwan. Advanced Reliable Systems (ARES) Lab.

Outline
• Basic Concepts
• Data Hazards
• Instruction Hazards

Content Coverage
[Figure: main memory system (address, data/instruction); central processing unit (CPU) with operational registers, arithmetic and logic unit, instruction sets, cache memory, program counter, and control unit; input/output system]

Basic Concepts
• Pipelining is a particularly effective way of organizing concurrent activity in a computer system
• Let Fi and Ei refer to the fetch and execute steps for instruction Ii
• Execution of a program consists of a sequence of fetch and execute steps, as shown below
[Figure: sequential execution of I1 to I5 as F1 E1 F2 E2 F3 E3 F4 E4 F5]

Hardware Organization
• Consider a computer that has two separate hardware units, one for fetching instructions and another for executing them, as shown below
[Figure: instruction fetch unit, interstage buffer, execution unit]

Basic Idea of Instruction Pipelining
[Figure: timing diagram over clock cycles 1 to 5 for instructions I1 to I4, with steps F1 E1, F2 E2, F3 E3, F4 E4]

A 4-Stage Pipeline
[Figure: timing diagram over clock cycles 1 to 7 for instructions I1 to I4, with steps F1 D1 E1 W1, F2 D2 E2 W2, F3 D3 E3 W3, F4 D4 E4 W4. F: fetch instruction; D: decode instruction & fetch operands; E: execute operation; W: write results. Interstage buffers B1, B2, B3]
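The timing diagrams on these slides follow a simple rule: instruction i enters the first stage in cycle i and advances one stage per cycle. A minimal sketch of that rule follows, assuming an ideal pipeline with no stalls; the function names `pipeline_schedule` and `format_schedule` are hypothetical and not taken from the slides.

```python
def pipeline_schedule(n_instructions, stages=("F", "D", "E", "W")):
    """Build one row per instruction; each row lists the stage active in every clock cycle.

    Assumes an ideal pipeline: one instruction issued per cycle, no hazards or stalls.
    """
    total_cycles = n_instructions + len(stages) - 1
    rows = []
    for i in range(n_instructions):
        row = [""] * total_cycles
        for s, stage in enumerate(stages):
            # Instruction i (0-based) occupies stage s in cycle i + s (0-based).
            row[i + s] = f"{stage}{i + 1}"
        rows.append(row)
    return rows


def format_schedule(rows):
    """Render the schedule as a text table with a clock-cycle header."""
    header = "    " + " ".join(f"{c + 1:>3}" for c in range(len(rows[0])))
    lines = [header]
    for i, row in enumerate(rows):
        lines.append(f"I{i + 1:<3}" + " ".join(f"{cell:>3}" for cell in row))
    return "\n".join(lines)


if __name__ == "__main__":
    # Four instructions through F, D, E, W occupy seven clock cycles in total.
    print(format_schedule(pipeline_schedule(4)))
```

Passing `stages=("F", "E")` gives the two-stage fetch/execute case from the earlier slide, where four instructions span five cycles.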

Processor Design:System-On-Chip Computing For

Processor Design: System-on-Chip Computing for ASICs and FPGAs. Edited by Jari Nurmi, Tampere University of Technology, Finland. A C.I.P. Catalogue record for this book is available from the Library of Congress. ISBN 978-1-4020-5529-4 (HB) ISBN 978-1-4020-5530-0 (e-book) Published by Springer, P.O. Box 17, 3300 AA Dordrecht, The Netherlands. www.springer.com Printed on acid-free paper All Rights Reserved © 2007 Springer No part of this work may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, microfilming, recording or otherwise, without written permission from the Publisher, with the exception of any material supplied specifically for the purpose of being entered and executed on a computer system, for exclusive use by the purchaser of the work. To Pirjo, Lauri, Eero, and Santeri

Preface: When I started my computing career by programming a PDP-11 computer as a freshman in the university in the early 1980s, I could not have dreamed that one day I’d be able to design a processor. At that time, the freshmen were only allowed to use PDP. Next year I was given the permission to use the famous brand-new VAX-780 computer. Also, my new roommate at the dorm had got one of the first personal computers, a Commodore-64 which we started to explore together. Again, I could not have imagined that hundreds of times the processing power would be available in an everyday embedded device just a quarter of a century later.

Operand Registers and Explicit Operand Forwarding

IEEE Computer Architecture Letters. Operand Registers and Explicit Operand Forwarding. James Balfour, R. Curtis Harting and William J. Dally, Fellow. Computer Systems Laboratory, Stanford University, Stanford, CA, USA. {jbalfour,dally}@cva.stanford.edu

Abstract: Operand register files are small, inexpensive register files that are integrated with function units in the execute stage of the pipeline, effectively extending the pipeline operand registers into register files. Explicit operand forwarding lets software opportunistically orchestrate the routing of operands through the forwarding network to avoid writing ephemeral values to registers. Both mechanisms let software capture short-term reuse and locality close to the function units, improving energy efficiency by allowing a significant fraction of operands to be delivered from inexpensive registers that are integrated with the function units. An evaluation shows that capturing operand bandwidth close to the function units allows operand registers to reduce the energy consumed in the register files and forwarding network of an embedded processor by 61%, and allows explicit forwarding to reduce the energy consumed by 26%.

Index Terms: energy efficient register organization, operand registers, explicit operand forwarding, embedded processor

I. INTRODUCTION

Energy consumption in processors is dominated by communication, specifically data and instruction movement, not computation. Consequently, even low-power programmable processors consume significantly more energy than dedicated fixed-function hardware, which allows the communication of data between function units to be aggressively optimized. This is particularly problematic in embedded systems because performing common operations, such as

Fig. 1. The address register file (ARF) and data register file (DRF) are tiny (4-entry) register files that are integrated with the function units.

A CUDA Program • a CUDA Program Consists of a Mixture of the Following Two Parts

GPU programming basics. Prof. Marco Bertini

Data parallelism: GPU computing

CPUs vs. GPUs
• The design of a CPU is optimized for sequential code performance.
  • out-of-order execution, branch-prediction
  • large cache memories to reduce latency in memory access
  • multi-core
• GPUs:
  • many-core
  • massive floating point computations for video games
  • much larger bandwidth in memory access
  • no branch prediction or too much control logic: just compute

CPUs have latency oriented design:
• Large caches convert long latency RAM access to short latency
• Branch pred., OoOE, operand forwarding reduce instructions latency
• Powerful ALU for reduced operation latency

GPUs have a throughput oriented design:
• Small caches to boost RAM throughput
• Simple control (no operand forwarding, branch prediction, etc.)
• Energy efficient ALU (long latency but heavily pipelined for high throughput)
• Require massive # threads to tolerate latencies

CPUs and GPUs
• GPUs are designed as numeric computing engines, and they will not perform well on some tasks on which CPUs are designed to perform well;
• One should expect that most applications will use both CPUs and GPUs, executing the sequential parts on the CPU and numerically intensive parts on the GPUs.
• We are going to deal with heterogeneous architectures: CPUs + GPUs.

Heterogeneous Computing
• CPU computing is good for control-intensive tasks, and GPU computing is good for data-parallel computation-intensive tasks.

Pipelining Design Techniques

9 Pipelining Design Techniques

There exist two basic techniques to increase the instruction execution rate of a processor. These are to increase the clock rate, thus decreasing the instruction execution time, or alternatively to increase the number of instructions that can be executed simultaneously. Pipelining and instruction-level parallelism are examples of the latter technique. Pipelining owes its origin to car assembly lines. The idea is to have more than one instruction being processed by the processor at the same time. Similar to the assembly line, the success of a pipeline depends upon dividing the execution of an instruction among a number of subunits (stages), each performing part of the required operations. A possible division is to consider instruction fetch (F), instruction decode (D), operand fetch (F), instruction execution (E), and store of results (S) as the subtasks needed for the execution of an instruction. In this case, it is possible to have up to five instructions in the pipeline at the same time, thus reducing instruction execution latency. In this chapter, we discuss the basic concepts involved in designing instruction pipelines. Performance measures of a pipeline are introduced. The main issues contributing to instruction pipeline hazards are discussed and some possible solutions are introduced. In addition, we introduce the concept of arithmetic pipelining together with the problems involved in designing such a pipeline. Our coverage concludes with a review of a recent pipeline processor.

9.1. GENERAL CONCEPTS

Pipelining refers to the technique in which a given task is divided into a number of subtasks that need to be performed in sequence.
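The benefit of the five-subtask division can be estimated with the usual ideal-pipeline cycle counts. The sketch below assumes every stage takes one clock cycle and that no hazards stall the pipeline; the function names are hypothetical and the formulas are the standard textbook ones rather than anything quoted from this chapter.

```python
def unpipelined_cycles(n_instructions, n_stages):
    """Cycles when each instruction finishes all stages before the next one starts."""
    return n_instructions * n_stages


def pipelined_cycles(n_instructions, n_stages):
    """Cycles in an ideal pipeline: fill it once, then complete one instruction per cycle."""
    if n_instructions < 1 or n_stages < 1:
        raise ValueError("need at least one instruction and one stage")
    return n_stages + n_instructions - 1


def speedup(n_instructions, n_stages):
    """Ratio of unpipelined to pipelined cycle counts (ideal case, no stalls)."""
    return unpipelined_cycles(n_instructions, n_stages) / pipelined_cycles(
        n_instructions, n_stages
    )


if __name__ == "__main__":
    # 100 instructions on the five-stage division: 500 cycles versus 104 cycles.
    print(unpipelined_cycles(100, 5), pipelined_cycles(100, 5), speedup(100, 5))
```

Under these assumptions a single instruction still takes five cycles from fetch to store, so the gain shows up as throughput: the speedup approaches the number of stages as the instruction count grows.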

Realizing High IPC Through a Scalable Memory-Latency Tolerant Multipath Microarchitecture

Realizing High IPC Through a Scalable Memory-Latency Tolerant Multipath Microarchitecture. D. Morano, A. Khalafi, D.R. Kaeli (Northeastern University), A.K. Uht (University of Rhode Island). dmorano, akhalafi, [email protected] [email protected]

Abstract: A microarchitecture is described that achieves high performance on conventional single-threaded program codes without compiler assistance. To obtain high instructions per clock (IPC) for inherently sequential (e.g., SpecInt-2000 programs), a large number of instructions must be in flight simultaneously. However, several problems are associated with such microarchitectures, including scalability, issues related to control flow, and memory latency. Our design investigates how to utilize a large mesh of processing elements in order to execute a single-threaded program. We present a basic overview of our microarchitecture and discuss how it addresses scalability as we attempt to execute many instruc-

designs. Unfortunately, most of the fine-grained ILP inherent in integer sequential programs spans several basic blocks. Data and control independent instructions, that may exist far ahead in the program instruction stream, need to be speculatively executed to exploit all possible inherent ILP. A large number of instructions need to be fetched each cycle and executed concurrently in order to achieve this. We need to find the available program ILP at runtime and to provide sufficient hardware to expose, schedule, and otherwise manage the out-of-order speculative execution of control independent instructions. Of course, the microarchitecture has to also provide a means to maintain the architectural program order that is required for proper program execution.

DeLICM: Scalar Dependence Removal at Zero Memory Cost

DeLICM: Scalar Dependence Removal at Zero Memory Cost. Michael Kruse (Inria, Paris, France), Tobias Grosser (ETH Zürich, Zürich, Switzerland). [email protected] [email protected]

Abstract: Increasing data movement costs motivate the integration of polyhedral loop optimizers in the standard flow (-O3) of production compilers. While polyhedral optimizers have been shown to be effective when applied as source-to-source transformation, the single static assignment form used in modern compiler mid-ends makes such optimizers less effective. Scalar dependencies (dependencies carried over a single memory location) are the main obstacle preventing effective optimization. We present DeLICM, a set of transformations which, backed by a polyhedral value analysis, eliminate problematic scalar dependences by 1) relocating scalar memory references to unused array locations and by 2) forwarding computations that otherwise cause scalar dependences. Our experiments show that DeLICM effectively eliminates dependencies introduced by compiler-internal canonicalization

1 Introduction: Advanced high-level loop optimizations, often using polyhedral program models [31], have been shown to effectively improve data locality for image processing [22], iterated stencils [4], Lattice-Boltzmann computations [23], sparse matrices [30] and even provide some of the leading automatic GPU code generation approaches [5, 32]. Polyhedral optimizers have been shown to be effective in research but are rarely used in production, at least partly because they are disabled in the most common optimization levels: none of the established frameworks is enabled by default in a standard optimization setting (e.g. -O3). With GCC/graphite [26], IBM XL-C [8], and LLVM/Polly [15] there are three polyhedral optimizers that work directly in production C/C++/FORTRAN compilers and are shipped to millions of users.