Abstracts Booklet

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Table of Contents

Table of Contents

Vision Statement
Strengthening the Intellectual Community
Academic Review
Recognition of Excellence
New Faculty
Student Prizes and Scholarships
News from the Faculties and Schools
Institute for Advanced Studies
Saltiel Pre-Academic Center
Truman Institute for the Advancement of Peace
The Authority for Research Students
The Library Authority
In Appreciation

2009 Report by the Rector

ACADEMIC DEVELOPMENTS

VISION STATEMENT

The academic year 2008/2009, which is now drawing to its end, has been a tumultuous one. The continuous budgetary cuts almost prevented the opening of the academic year, and we end the year under the shadow of the current global economic crisis. Notwithstanding these difficulties, the Hebrew University continues to thrive: as the present report shows, we have maintained our position of excellence. However the name of our game is not maintenance, but rather constant change and improvement, and despite the need to cut back and save, we have embarked on several new, innovative initiatives.

As I reflect on my first year as Rector of the Hebrew University, the difficulties fade in comparison to the feeling of opportunity. We are fortunate to have the ongoing support of our friends, both in Israel and abroad, which is a constant reminder to us of the significance of the Hebrew University beyond its walls. Prof. Menachem Magidor is stepping down after twelve years as President of the University: it has been an enormous privilege to work with him during this year, and benefit from his experience, wisdom and vision.

Meaning and Self-Organisation in Visual Cognition and Thought

SEEING, THINKING AND KNOWING

THEORY AND DECISION LIBRARY

General Editors: W. Leinfellner (Vienna) and G. Eberlein (Munich)

Series A: Philosophy and Methodology of the Social Sciences
Series B: Mathematical and Statistical Methods
Series C: Game Theory, Mathematical Programming and Operations Research

SERIES A: PHILOSOPHY AND METHODOLOGY OF THE SOCIAL SCIENCES, VOLUME 38

Series Editor: W. Leinfellner (Technical University of Vienna), G. Eberlein (Technical University of Munich)

Editorial Board: R. Boudon (Paris), M. Bunge (Montreal), J. S. Coleman (Chicago), J. Götschl (Graz), L. Kern (Pullach), I. Levi (New York), R. Mattessich (Vancouver), B. Munier (Cachan), J. Nida-Rümelin (Göttingen), A. Rapoport (Toronto), A. Sen (Cambridge, U.S.A.), R. Tuomela (Helsinki), A. Tversky (Stanford).

Scope: This series deals with the foundations, the general methodology and the criteria, goals and purpose of the social sciences. The emphasis in the Series A will be on well-argued, thoroughly analytical rather than advanced mathematical treatments. In this context, particular attention will be paid to game and decision theory and general philosophical topics from mathematics, psychology and economics, such as game theory, voting and welfare theory, with applications to political science, sociology, law and ethics. The titles published in this series are listed at the end of this volume.

SEEING, THINKING AND KNOWING: Meaning and Self-Organisation in Visual Cognition and Thought

Edited by Arturo Carsetti, University of Rome “Tor Vergata”, Rome, Italy

KLUWER

Emergence in Science and Philosophy Edited by Antonella

Emergence in Science and Philosophy

Edited by Antonella Corradini and Timothy O’Connor

New York London

Contents

List of Figures
Introduction. ANTONELLA CORRADINI AND TIMOTHY O’CONNOR

PART I Emergence: General Perspectives
Part I Introduction. ANTONELLA CORRADINI AND TIMOTHY O’CONNOR
1 The Secret Lives of Emergents. HONG YU WONG
2 On the Implications of Scientific Composition and Completeness: Or, The Troubles, and Troubles, of Non-Reductive Physicalism. CARL GILLETT
3 Weak Emergence and Context-Sensitive Reduction. MARK A. BEDAU
4 Two Varieties of Causal Emergentism. MICHELE DI FRANCESCO
5 The Emergence of Group Cognition. GEORG THEINER AND TIMOTHY O’CONNOR

PART II Self, Agency, and Free Will
Part II Introduction. ANTONELLA CORRADINI AND TIMOTHY O’CONNOR
6 Why My Body is Not Me: The Unity Argument for Emergentist Self-Body Dualism. E. JONATHAN LOWE
7 What About the Emergence of Consciousness Deserves Puzzlement? MARTINE NIDA-RÜMELIN
8 The Emergence of Rational Souls. UWE MEIXNER
9 Are Deliberations and Decisions Emergent, if Free? ACHIM STEPHAN
10 Is Emergentism Refuted by the Neurosciences? The Case of Free Will. MARIO DE CARO

PART III Physics, Mathematics, and the Special Sciences
Part III Introduction. ANTONELLA CORRADINI AND TIMOTHY O’CONNOR
11 Emergence in Physics. PATRICK MCGIVERN AND ALEXANDER RUEGER
12 The Emergence of the Intuition of Truth in Mathematical Thought. SERGIO GALVAN
13 The Emergence of Mind at the Co-Evolutive Level. ARTURO CARSETTI
14 Emerging Mental Phenomena: Implications for Psychological Explanation. ALESSANDRO ANTONIETTI
15 How Special Are Special Sciences? ANTONELLA CORRADINI

Contributors
Index

Introduction

Antonella Corradini and Timothy O’Connor

The concept of emergence has seen a significant resurgence in philosophy and a number of sciences in the past couple decades.

International Congress

THE PONTIFICAL ACADEMY OF SAINT THOMAS AQUINAS
SOCIETÀ INTERNAZIONALE TOMMASO D’AQUINO

INTERNATIONAL CONGRESS

Christian Humanism in the Third Millennium: The Perspective of Thomas Aquinas

Rome, 21-25 September 2003

…we are thereby taught how great is man’s dignity, lest we should sully it with sin; hence Augustine says (De Vera Relig. XVI): ‘God has proved to us how high a place human nature holds amongst creatures, inasmuch as He appeared to men as a true man’. And Pope Leo says in a sermon on the Nativity (XXI): ‘Learn, O Christian, thy worth; and being made a participant of the divine nature (2 Pt 1,4), refuse to return by evil deeds to your former worthlessness’

St. Thomas Aquinas, Summa Theologiae III, q.1, a.2

PALAZZO DELLA CANCELLERIA – ANGELICUM

The Pontifical Academy of Saint Thomas Aquinas (PAST)
Società Internazionale Tommaso d’Aquino (SITA)
Tel: +39 0669883195 / 0669883451 – Fax: +39 0669885218
E-mail: [email protected] – Website: http://e-aquinas.net/2003

PRESENTATION

Since the beginning of 2002, the Pontifical Academy of Saint Thomas and the Thomas Aquinas International Society have been jointly preparing an International Congress which will take place in Rome, from 21 to 25 September 2003.

Metabiology and the Complexity of Natural Evolution

Arturo Carsetti, Biology

Metabiology and the complexity of natural evolution

In his study of metabiology, Arturo Carsetti, from the University of Rome Tor Vergata, reviews existing theories and explores novel concepts regarding the complexity of biological systems while …

Arturo Carsetti is Professor of Philosophy of Science at the University of Rome Tor Vergata and Editor of the Italian Journal for the Philosophy of Science La Nuova Critica.

… Vittorio Somenzi, Ilya Prigogine, Heinz von Foerster and Henri Atlan, Arturo Carsetti became interested in applying Cybernetics and Information Theory to living systems. Subsequently, during his stay in Trieste he worked …

… system being studied. In addition, he investigated the boundaries of semantic information in order to outline the principles of an adequate intentional information theory.

SELF-ORGANISATION

Professor Carsetti quotes Henri Atlan – “the function self-organises together with its meaning” – to highlight the prerequisite of both a conceptual theory of complexity and a theory of self-organisation. Self-organisation refers to the process whereby complex systems develop order via internal processes, also in the absence of external intended constraints or forces. It can be described in terms of network properties such as connectivity, making it an ideal subject for complexity theory and artificial life research. In accordance with Carsetti’s main thesis, we have to recognise that, at the level of a biological cognitive system, sensibility …

… new mathematics. This is a mathematics that necessarily moulds coder’s activity. Hence the importance of articulating and inventing each time a mathematics …

Chaitin’s insight into biological evolution led him to view “life as evolving software”. He employed algorithmic information theory to …

[Figure: The tree-like branching of evolution is programmed by natural selection. Image credit: LuckyStep/Shutterstock.com]

Computability: Turing, Gödel, Church, and Beyond

Computer Science/Philosophy

Computability: Turing, Gödel, Church, and Beyond

Edited by B. Jack Copeland, Carl J. Posy, and Oron Shagrir

In the 1930s a series of seminal works published by Alan Turing, Kurt Gödel, Alonzo Church, and others established the theoretical basis for computability. This work, advancing precise characterizations of effective, algorithmic computability, was the culmination of intensive investigations into the foundations of mathematics. In the decades since, the theory of computability has moved to the center of discussions in philosophy, computer science, and cognitive science. In this volume, distinguished computer scientists, mathematicians, logicians, and philosophers consider the conceptual foundations of computability in light of our modern understanding. Some chapters focus on the pioneering work by Turing, Gödel, and Church, including the Church-Turing thesis and Gödel’s response to Church’s and Turing’s proposals.

B. Jack Copeland is Professor of Philosophy at the University of Canterbury, New Zealand, and Director of the Turing Archive for the History of Computing. Carl J. Posy is Professor of Philosophy and member of the Centers for the Study of Rationality and for Language, Logic, and Cognition at the Hebrew University of Jerusalem. Oron Shagrir is Professor of Philosophy and Former Chair of the Cognitive Science Department at the Hebrew University of Jerusalem. He is currently the Vice Rector of the Hebrew University.

Contributors: Scott Aaronson, Dorit Aharonov, B. Jack Copeland, Martin Davis, Solomon Feferman, Saul Kripke, Carl J. Posy, Hilary Putnam, Oron Shagrir, Stewart Shapiro, Wilfried Sieg, Robert Irving Soare, Umesh V. Vazirani

Elements of Neurogeometry Functional Architectures of Vision Lecture Notes in Morphogenesis

Lecture Notes in Morphogenesis

Series editor: Alessandro Sarti, CAMS Center for Mathematics, CNRS-EHESS, Paris, France, e-mail: [email protected]

More information about this series at http://www.springer.com/series/11247

Jean Petitot

Elements of Neurogeometry: Functional Architectures of Vision

Jean Petitot, CAMS, EHESS, Paris, France

Translated by Stephen Lyle

ISSN 2195-1934
ISSN 2195-1942 (electronic)
Lecture Notes in Morphogenesis
ISBN 978-3-319-65589-5
ISBN 978-3-319-65591-8 (eBook)
DOI 10.1007/978-3-319-65591-8
Library of Congress Control Number: 2017950247

Translation from the French language edition: Neurogéométrie de la vision by Jean Petitot, © Les Éditions de l’École Polytechnique 2008. All Rights Reserved

© Springer International Publishing AG 2017

This work is subject to copyright. All rights are reserved by the Publisher, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microfilms or in any other physical way, and transmission or information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed. The use of general descriptive names, registered names, trademarks, service marks, etc. in this publication does not imply, even in the absence of a specific statement, that such names are exempt from the relevant protective laws and regulations and therefore free for general use. The publisher, the authors and the editors are safe to assume that the advice and information in this book are believed to be true and accurate at the date of publication.

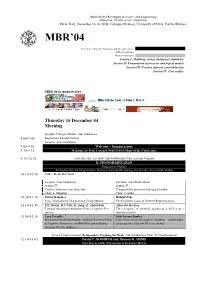

MBR04 Schedule

Model-Based Reasoning in Science and Engineering: Abduction, Visualization, Simulation

Pavia, Italy, December 16-18, 2004, Collegio Ghislieri, University of Pavia, Piazza Ghislieri

MBR’04

Legend:
Invited presentations, Symposia, and Special Sessions
Full presentations
Short presentations
Session I: Modeling: visual, analogical, simulative
Session II: Computational processes and logical models
Session III: Creative inference and abduction
Session IV: Case studies

Thursday 16 December 04, Morning
Location: Collegio Ghislieri, Aula Goldoniana

8.00-9.00 Registration, Sala del Camino
9.00-9.10 Welcome - Inaugurazione (Aula Goldoniana)
9.10-9.15 Welcome by Prof. Lorenzo MAGNANI, Chair of the Conference
9.15-10.10 Invited Lecture (Aula Goldoniana). Chair: Lorenzo Magnani. B. CHANDRASEKARAN, Diagrams as Models: An Architecture for Diagrammatic Representation and Reasoning
10.10-10.30 Coffee Break, Refettorio

Parallel sessions:
Session III, Creative inferences and abduction. Location: Aula Goldoniana. Chair: L. Magnani
Session II, Computational processes and logical models. Location: Aula Sandra Bruni. Chair: T. Addis

10.30-11.10
Session III: Bonny Banerjee, Using Abduction for Diagramming Group Motions
Session II: Helmut Pape, The Pragmatic Logic of Ordered Representations

11.10-11.50
Session III: P.D. Bruza, R.J. Cole, D. Song, Z. Abdul Bari, Towards Operational Abduction from a Cognitive Perspective
Session II: Albrecht Heefner, The emergence of symbolic algebra as a shift in predominant models

11.50-12.10
Aula Goldoniana: Luca Pezzullo, Environmental Mental Models: Applying Repertory Grids to Cognitive Geoscience and Risk Perception Studies (Session IV Case studies)
Aula Sandra Bruni: Irobi Ijeoma Sandra, Correctness criteria for models’ validation – a philosophical perspective (Session IV Case studies)

Invited Lecture (Symposium: Tracking the Real). Location: Aula Goldoniana. Chair: B.

Formalizing Common Sense Reasoning for Scalable Inconsistency-Robust Information Integration Using Direct Logic™ Reasoning and the Actor Model

Formalizing common sense reasoning for scalable inconsistency-robust information integration using Direct Logic™ Reasoning and the Actor Model

Carl Hewitt. http://carlhewitt.info

This paper is dedicated to Alonzo Church, Stanisław Jaśkowski, John McCarthy and Ludwig Wittgenstein.

ABSTRACT

People use common sense in their interactions with large software systems. This common sense needs to be formalized so that it can be used by computer systems. Unfortunately, previous formalizations have been inadequate. For example, because contemporary large software systems are pervasively inconsistent, it is not safe to reason about them using classical logic. Our goal is to develop a standard foundation for reasoning in large-scale Internet applications (including sense making for natural language) by addressing the following issues: inconsistency robustness, classical contrapositive inference bug, and direct argumentation.

Inconsistency Robust Direct Logic is a minimal fix to Classical Logic without the rule of Classical Proof by Contradiction [i.e., (Ψ├ (Φ∧¬Φ))├¬Ψ], the addition of which transforms Inconsistency Robust Direct Logic into Classical Logic. Inconsistency Robust Direct Logic makes the following contributions over previous work:

• Direct Inference
• Direct Argumentation (argumentation directly expressed)
• Inconsistency-robust Natural Deduction that doesn’t require artifices such as indices (labels) on propositions or restrictions on reiteration
• Intuitive inferences hold including the following:
  • Propositional equivalences (except absorption) including Double Negation and De Morgan
  • ∨-Elimination (Disjunctive Syllogism), i.e., ¬Φ, (Φ∨Ψ)├T Ψ
  • Reasoning by disjunctive cases, i.e., (Ψ∨Φ), (Ψ├T Θ), (Φ├T Ω)├T Θ∨Ω
  • Contrapositive for implication, i.e., Ψ⇒Φ if and only if ¬Φ⇒¬Ψ
  • Soundness, i.e., ├T ((├T Φ) ⇒ Φ)
  • Inconsistency Robust Proof by Contradiction, i.e., ├T (Ψ⇒ (Φ∧¬Φ))⇒¬Ψ

A fundamental goal of Inconsistency Robust Direct Logic is to effectively reason about large amounts of pervasively inconsistent information using computer information systems.

An Observation About Truth

An Observation about Truth (with Implications for Meaning and Language)

Thesis submitted for the degree of “Doctor of Philosophy”

By David Kashtan

Submitted to the Senate of the Hebrew University of Jerusalem, November 2017

This work was carried out under the supervision of: Prof. Carl Posy

Racheli Kasztan-Czerwonogórze i Avigail Kasztan-Czerwonogórze i ich matce

Acknowledgements

I am the proximal cause of this dissertation. It has many distal causes, of which I will give only a partial list.

During the several years in which this work was in preparation I was lucky to be supported by several sources. For almost the whole duration of my doctoral studies I was a funded doctoral fellow at the Language, Logic and Cognition Center. The membership in the LLCC has been of tremendous significance to my scientific education and to the final shape and content of this dissertation. I thank especially Danny Fox, from whom I learnt how linguistics is done (though, due to my stubbornness, not how to do it) and all the other members of this important place, past and present, and in particular Lital Myers who makes it all come together.

In the years 2011-2013 I was a funded participant in an inter-university research and study program about Kantian philosophy. The program was organized by Ido Geiger and Yakir Levin from Ben-Gurion University, and by Eli Friedlander, Ofra Rechter and Yaron Senderowicz from Tel-Aviv University. The atmosphere in the program was one of intimacy and devotion to philosophy; I predict we will soon witness blossoms, the seeds of which were planted there.

APA Newsletter on Philosophy and Computers, Vol. 19, No. 2 (Spring

NEWSLETTER | The American Philosophical Association

Philosophy and Computers

SPRING 2020, VOLUME 19 | NUMBER 2

PREFACE
Peter Boltuc

FROM THE ARCHIVES

AI Ontology and Consciousness
Lynne Rudder Baker: The Shrinking Difference between Artifacts and Natural Objects
Amie L. Thomasson: Artifacts and Mind-Independence: Comments on Lynne Rudder Baker’s “The Shrinking Difference between Artifacts and Natural Objects”
Gilbert Harman: Explaining an Explanatory Gap
Yujin Nagasawa: Formulating the Explanatory Gap
Jaakko Hintikka: Logic as a Theory of Computability
Stan Franklin, Bernard J. Baars, and Uma Ramamurthy: Robots Need Conscious Perception: A Reply to Aleksander and Haikonen
P. O. Haikonen: Flawed Workspaces?
M. Shanahan: Unity from Multiplicity: A Reply to Haikonen
Gregory Chaitin: Leibniz, Complexity, and Incompleteness
Aaron Sloman: Architecture-Based Motivation vs. Reward-Based Motivation
Ricardo Sanz: Consciousness, Engineering, and Anthropomorphism
Troy D. Kelley and Vladislav D.
Stephen L. Thaler: DABUS in a Nutshell
Terry Horgan: The Real Moral of the Chinese Room: Understanding Requires Understanding Phenomenology
Selmer Bringsjord: A Refutation of Searle on Bostrom (re: Malicious Machines) and Floridi (re: Information)

AI and Axiology
Luciano Floridi: Understanding Information Ethics
John Barker: Too Much Information: Questioning Information Ethics
Martin Flament Fultot: Ethics of Entropy
James Moore: Taking the Intentional Stance Toward Robot Ethics
Keith W. Miller and David Larson: Measuring a Distance: Humans, Cyborgs, Robots
Dominic McIver Lopes: Remediation Revisited: Replies to Gaut, Matravers, and Tavinor

FROM THE EDITOR: NEWSLETTER HIGHLIGHTS

REPORT FROM THE CHAIR

NOTE FROM THE 2020 BARWISE PRIZE WINNER
Aaron Sloman: My Philosophy in AI: A Very Short Set of Notes Towards My Barwise Prize Acceptance Talk

ANNOUNCEMENT
Robin Hill

Intelligence and Artificial Intelligence

We invite applications to participate in the 4th Intercontinental Academia, dedicated to Intelligence and Artificial Intelligence

Opening online session: 13-18 June 2021
1st workshop in Paris, France: 18-27 October 2021
2nd workshop in Belo Horizonte, Brazil: 6-15 June 2022

Call for fellows
Deadline: March 31, 2021 (6 pm CET)

The Intercontinental Academia (ICA) seeks to create a global network of future research leaders in which some of the very best early/mid-career scholars will work together on paradigm-shifting cross-disciplinary research, mentored by some of the most eminent researchers from across the globe. To achieve this objective, we organize three immersive and intense sessions over the course of a year. The experience is expected to transform the scholars' own approach to research, to enhance their awareness of the work, relevance and potential impact of other disciplines, and to inspire and facilitate new collaborations between distant disciplines. Our aim is to make a real, yet volatile, intellectual cocktail that leads to meaningful exchange and long-lasting outputs.

The theme Intelligence and Artificial Intelligence has been selected to provide a framework for stimulating intellectual exchange in 2021-2022. The past decades have witnessed impressive progress in cognitive science, neuroscience and artificial intelligence. Besides the decisive scientific advances that have been accomplished in the analysis of brain activity and its behavioral counterparts or in the information processing sciences (machine learning, wearable sensors…), several fundamental and broader questions of a deeply interdisciplinary nature have been arising. As artificial intelligence and neuroscience/cognitive science seem to show significant complementarities, a key question is to what extent these complementarities should drive research in both areas and how to optimize synergies.