Abstracts E-CAP2004

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Meaning and Self-Organisation in Visual Cognition and Thought

SEEING, THINKING AND KNOWING THEORY AND DECISION LIBRARY General Editors: W. Leinfellner (Vienna) and G. Eberlein (Munich) Series A: Philosophy and Methodology of the Social Sciences Series B: Mathematical and Statistical Methods Series C: Game Theory, Mathematical Programming and Operations Research SERIES A: PHILOSOPHY AND METHODOLOGY OF THE SOCIAL SCIENCES VOLUME 38 Series Editor: W. Leinfellner (Technical University of Vienna), G. Eberlein (Technical University of Munich); Editorial Board: R. Boudon (Paris), M. Bunge (Montreal), J. S. Coleman (Chicago), J. Götschl (Graz), L. Kern (Pullach), I. Levi (New York), R. Mattessich (Vancouver), B. Munier (Cachan), J. Nida-Rümelin (Göttingen), A. Rapoport (Toronto), A. Sen (Cambridge, U.S.A.), R. Tuomela (Helsinki), A. Tversky (Stanford). Scope: This series deals with the foundations, the general methodology and the criteria, goals and purpose of the social sciences. The emphasis in the Series A will be on well-argued, thoroughly analytical rather than advanced mathematical treatments. In this context, particular attention will be paid to game and decision theory and general philosophical topics from mathematics, psychology and economics, such as game theory, voting and welfare theory, with applications to political science, sociology, law and ethics. The titles published in this series are listed at the end of this volume. SEEING, THINKING AND KNOWING Meaning and Self-Organisation in Visual Cognition and Thought Edited by Arturo Carsetti University of Rome “Tor Vergata”, Rome, Italy KLUWER -

Emergence in Science and Philosophy Edited by Antonella

Emergence in Science and Philosophy. Edited by Antonella Corradini and Timothy O’Connor. New York, London. Contents: List of Figures; Introduction (ANTONELLA CORRADINI AND TIMOTHY O’CONNOR). PART I, Emergence: General Perspectives: Part I Introduction (ANTONELLA CORRADINI AND TIMOTHY O’CONNOR); 1 The Secret Lives of Emergents (HONG YU WONG); 2 On the Implications of Scientific Composition and Completeness: Or, The Troubles, and Troubles, of Non-Reductive Physicalism (CARL GILLETT); 3 Weak Emergence and Context-Sensitive Reduction (MARK A. BEDAU); 4 Two Varieties of Causal Emergentism (MICHELE DI FRANCESCO); 5 The Emergence of Group Cognition (GEORG THEINER AND TIMOTHY O’CONNOR). PART II, Self, Agency, and Free Will: Part II Introduction (ANTONELLA CORRADINI AND TIMOTHY O’CONNOR); 6 Why My Body is Not Me: The Unity Argument for Emergentist Self-Body Dualism (E. JONATHAN LOWE); 7 What About the Emergence of Consciousness Deserves Puzzlement? (MARTINE NIDA-RÜMELIN); 8 The Emergence of Rational Souls (UWE MEIXNER); 9 Are Deliberations and Decisions Emergent, if Free? (ACHIM STEPHAN); 10 Is Emergentism Refuted by the Neurosciences? The Case of Free Will (MARIO DE CARO). PART III, Physics, Mathematics, and the Special Sciences: Part III Introduction (ANTONELLA CORRADINI AND TIMOTHY O’CONNOR); 11 Emergence in Physics (PATRICK MCGIVERN AND ALEXANDER RUEGER); 12 The Emergence of the Intuition of Truth in Mathematical Thought (SERGIO GALVAN); 13 The Emergence of Mind at the Co-Evolutive Level (ARTURO CARSETTI); 14 Emerging Mental Phenomena: Implications for Psychological Explanation (ALESSANDRO ANTONIETTI); 15 How Special Are Special Sciences? (ANTONELLA CORRADINI). Contributors. Index. Introduction, Antonella Corradini and Timothy O’Connor: The concept of emergence has seen a significant resurgence in philosophy and a number of sciences in the past couple decades. -

International Congress

The Pontifical Academy of Saint Thomas Aquinas, Società Internazionale Tommaso d’Aquino. International Congress: Christian Humanism in the Third Millennium: The Perspective of Thomas Aquinas. Rome, 21-25 September 2003. …we are thereby taught how great is man’s dignity, lest we should sully it with sin; hence Augustine says (De Vera Relig. XVI): ‘God has proved to us how high a place human nature holds amongst creatures, inasmuch as He appeared to men as a true man’. And Pope Leo says in a sermon on the Nativity (XXI): ‘Learn, O Christian, thy worth; and being made a participant of the divine nature (2 Pt 1,4), refuse to return by evil deeds to your former worthlessness’. St. Thomas Aquinas, Summa Theologiae III, q.1, a.2. Palazzo della Cancelleria – Angelicum. The Pontifical Academy of Saint Thomas Aquinas (PAST), Società Internazionale Tommaso d’Aquino (SITA). Tel: +39 0669883195 / 0669883451 – Fax: +39 0669885218. E-mail: [email protected] – Website: http://e-aquinas.net/2003. PRESENTATION Since the beginning of 2002, the Pontifical Academy of Saint Thomas and the Thomas Aquinas International Society, have been jointly preparing an International Congress which will take place in Rome, from 21 to 25 September 2003. -

Metabiology and the Complexity of Natural Evolution

Arturo Carsetti, Biology. Metabiology and the complexity of natural evolution. In his study of metabiology, Arturo Carsetti, from the University of Rome Tor Vergata, reviews existing theories and explores novel concepts regarding the complexity of biological systems while … Arturo Carsetti is Professor of Philosophy of Science at the University of Rome Tor Vergata and Editor of the Italian Journal for the Philosophy of Science La Nuova Critica. … Vittorio Somenzi, Ilya Prigogine, Heinz von Foerster and Henri Atlan, Arturo Carsetti became interested in applying Cybernetics and Information Theory to living systems. Subsequently, during his stay in Trieste he worked … system being studied. In addition, he investigated the boundaries of semantic information in order to outline the principles of an adequate intentional information theory. SELF-ORGANISATION Professor Carsetti quotes Henri Atlan – “the function self-organises together with its meaning” – to highlight the prerequisite of both a conceptual theory of complexity and a theory of self-organisation. Self-organisation refers to the process whereby complex systems develop order via internal processes, also in the absence of external intended constraints or forces. It can be described in terms of network properties such as connectivity, making it an ideal subject for complexity theory and artificial life research. In accordance with Carsetti’s main thesis, we have to recognise that, at the level of a biological cognitive system, sensibility … new mathematics. This is a mathematics that necessarily moulds coder’s activity. Hence the importance of articulating and inventing each time a mathematics … Chaitin’s insight into biological evolution led him to view “life as evolving software”. He employed algorithmic information theory to … [Figure caption: The tree-like branching of evolution is programmed by natural selection. Image: LuckyStep/Shutterstock.com] -

Elements of Neurogeometry Functional Architectures of Vision Lecture Notes in Morphogenesis

Lecture Notes in Morphogenesis. Series Editor: Alessandro Sarti, CAMS Center for Mathematics, CNRS-EHESS, Paris, France, e-mail: [email protected]. More information about this series at http://www.springer.com/series/11247. Jean Petitot, Elements of Neurogeometry: Functional Architectures of Vision. Jean Petitot, CAMS, EHESS, Paris, France. Translated by Stephen Lyle. ISSN 2195-1934, ISSN 2195-1942 (electronic). ISBN 978-3-319-65589-5, ISBN 978-3-319-65591-8 (eBook). DOI 10.1007/978-3-319-65591-8. Library of Congress Control Number: 2017950247. Translation from the French language edition: Neurogéométrie de la vision by Jean Petitot, © Les Éditions de l’École Polytechnique 2008. All Rights Reserved. © Springer International Publishing AG 2017. This work is subject to copyright. All rights are reserved by the Publisher, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microfilms or in any other physical way, and transmission or information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed. The use of general descriptive names, registered names, trademarks, service marks, etc. in this publication does not imply, even in the absence of a specific statement, that such names are exempt from the relevant protective laws and regulations and therefore free for general use. The publisher, the authors and the editors are safe to assume that the advice and information in this book are believed to be true and accurate at the date of publication. -

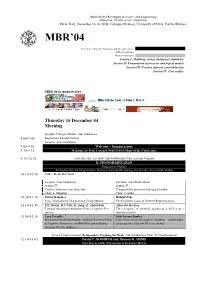

MBR04 Schedule

Model-Based Reasoning in Science and Engineering: Abduction, Visualization, Simulation. Pavia, Italy, December 16-18, 2004, Collegio Ghislieri, University of Pavia, Piazza Ghislieri. MBR’04. Invited presentations, Symposia, and Special Sessions; Full presentations; Short presentations. Session I: Modeling: visual, analogical, simulative. Session II: Computational processes and logical models. Session III: Creative inference and abduction. Session IV: Case studies. MBR’04 is sponsored by … Thursday 16 December 04, Morning. Location: Collegio Ghislieri, Aula Goldoniana. 8.00-9.00 Registration, Sala del Camino. Location: Aula Goldoniana. 9.00-9.10 Welcome - Inaugurazione. 9.10-9.15 Welcome by Prof. Lorenzo MAGNANI, Chair of the Conference. 9.15-10.10 Invited Lecture, Location: Aula Goldoniana, Chair: Lorenzo Magnani. B. CHANDRASEKARAN, Diagrams as Models: An Architecture for Diagrammatic Representation and Reasoning; […]-based values in scientific models. 10.10-10.30 Coffee Break, Refettorio. Session III, Creative inferences and abduction (Location: Aula Goldoniana; Chair: L. Magnani): 10.30-11.10 Bonny Banerjee, Using Abduction for Diagramming Group Motions; 11.10-11.50 P.D. Bruza, R.J. Cole, D. Song, Z. Abdul Bari, Towards Operational Abduction from a Cognitive Perspective; 11.50-12.10 Luca Pezzullo, Environmental Mental Models: Applying Repertory Grids to Cognitive Geoscience and Risk Perception Studies (Session IV Case studies). Session II, Computational processes and logical models (Location: Aula Sandra Bruni; Chair: T. Addis): 10.30-11.10 Helmut Pape, The Pragmatic Logic of Ordered Representations; 11.10-11.50 Albrecht Heefner, The emergence of symbolic algebra as a shift in predominant models; 11.50-12.10 Irobi Ijeoma Sandra, Correctness criteria for models’ validation – a philosophical perspective (Session IV Case studies). Invited Lecture (Symposium: Tracking the Real), Location: Aula Goldoniana, Chair: B. -

APA Newsletter on Philosophy and Computers, Vol. 19, No. 2 (Spring

NEWSLETTER | The American Philosophical Association. Philosophy and Computers. SPRING 2020, VOLUME 19 | NUMBER 2. PREFACE: Peter Boltuc. FROM THE ARCHIVES. AI Ontology and Consciousness: Lynne Rudder Baker, The Shrinking Difference between Artifacts and Natural Objects; Amie L. Thomasson, Artifacts and Mind-Independence: Comments on Lynne Rudder Baker’s “The Shrinking Difference between Artifacts and Natural Objects”; Gilbert Harman, Explaining an Explanatory Gap; Yujin Nagasawa, Formulating the Explanatory Gap; Jaakko Hintikka, Logic as a Theory of Computability; Stan Franklin, Bernard J. Baars, and Uma Ramamurthy, Robots Need Conscious Perception: A Reply to Aleksander and Haikonen; P. O. Haikonen, Flawed Workspaces?; M. Shanahan, Unity from Multiplicity: A Reply to Haikonen; Gregory Chaitin, Leibniz, Complexity, and Incompleteness; Aaron Sloman, Architecture-Based Motivation vs. Reward-Based Motivation; Ricardo Sanz, Consciousness, Engineering, and Anthropomorphism; Troy D. Kelley and Vladislav D. … Stephen L. Thaler, DABUS in a Nutshell; Terry Horgan, The Real Moral of the Chinese Room: Understanding Requires Understanding Phenomenology; Selmer Bringsjord, A Refutation of Searle on Bostrom (re: Malicious Machines) and Floridi (re: Information). AI and Axiology: Luciano Floridi, Understanding Information Ethics; John Barker, Too Much Information: Questioning Information Ethics; Martin Flament Fultot, Ethics of Entropy; James Moore, Taking the Intentional Stance Toward Robot Ethics; Keith W. Miller and David Larson, Measuring a Distance: Humans, Cyborgs, Robots; Dominic McIver Lopes, Remediation Revisited: Replies to Gaut, Matravers, and Tavinor. FROM THE EDITOR: NEWSLETTER HIGHLIGHTS. REPORT FROM THE CHAIR. NOTE FROM THE 2020 BARWISE PRIZE WINNER: Aaron Sloman, My Philosophy in AI: A Very Short Set of Notes Towards My Barwise Prize Acceptance Talk. ANNOUNCEMENT: Robin Hill … -

Molecular Semantics and Intentionality in Living Systems 1

Mirko Di Bernardo. Towards a naturalistic foundation of the preconditions of ethics: molecular semantics and intentionality in living systems. 1. Autonomous agents and autocatalysis. In Esplorazioni Evolutive, a text published in 2000 after a laborious gestation lasting four years, Stuart Alan Kauffman, one of the fathers of the contemporary theory of biological complexity, presents the outcome of a serendipitous line of research with a considerable number of surprising results. It is a genuinely exploratory work, full of heuristic working hypotheses, some fruitful and some destined to fail, whose argumentation is at times obscure and enigmatic, but from which there emerge, without a shadow of doubt, both a passion for the search for truth and an attachment to certain promising lines of work. Compared with the years of greatest intellectual fervour of the "interdisciplinary think tank" that formed around the Santa Fe Institute, during which The Origins of Order and A casa nell'universo saw the light; compared, that is, with that scientific climate of the late twentieth century in which everything seemed possible (discovering the fourth law of thermodynamics for open non-equilibrium systems, outlining a unified theory of the universe, deciphering the timeless laws of universal biology) and in which the Science of Complexity built bridges between different domains of physics, between Cybernetics and information theory, as well as between mathematics, the biological sciences, economics, psychology and politics; Esplorazioni evolutive, compared with that pioneering era, "represents at once the closing of a trilogy and the opening of new possibilities, moved by the same sincere, almost childlike wonder of the early days".1 The great American biochemist, in fact, seeks in his explorations to give life to a hermeneutics of evolution that explains the constructivist logic of the living, a logic, that is to say, that derives from natural selection, from self-organisation and from other principles that still remain incomprehensible. -

Metabiology Non-Standard Models, General Semantics and Natural Evolution Series: Studies in Applied Philosophy, Epistemology and Rational Ethics

springer.com. Arturo Carsetti, Metabiology: Non-standard Models, General Semantics and Natural Evolution. Series: Studies in Applied Philosophy, Epistemology and Rational Ethics. Presents fundamental philosophical and mathematical concepts underlying biology. Describes new ideas related to the complexity of biological systems. Offers a comprehensive view, supported by extensive historical references. In the context of life sciences, we are constantly confronted with information that possesses precise semantic values and appears essentially immersed in a specific evolutionary trend. In such a framework, Nature appears, in Monod’s words, as a tinkerer characterized by the presence of precise principles of self-organization. However, while Monod was obliged to incorporate his brilliant intuitions into the framework of first-order cybernetics and a theory of information with an exclusively syntactic character such as that defined by Shannon, research advances in recent decades have led not only to the definition of a second-order cybernetics but also to an exploration of the boundaries of semantic information. As H. Atlan states, on a biological level "the function self-organizes together with its meaning". Hence the need to refer to a conceptual theory of complexity and to a theory of self-organization characterized in an intentional sense. There is also a need to introduce, at the genetic level, a distinction between coder and ruler as well as the opportunity to define a real software space for natural evolution. The recourse to non-standard model theory, the opening to a new general semantics, and the innovative definition of the relationship between coder and ruler can be considered, today, among the most powerful theoretical tools at our disposal in order to correctly define the contours of that new conceptual revolution increasingly referred to as metabiology. 1st ed. 2020, X, 172 p. Printed book. Hardcover: 119,99 € | £109.99 | $149.99; [1]128,39 € (D) | 131,99 € (A) | CHF 141,50. Softcover -

APA Newsletters

APA Newsletters, Volume 04, Number 1, Fall 2004. NEWSLETTER ON PHILOSOPHY AND COMPUTERS. FROM THE EDITOR, JON DORBOLO. PROFILE: BILL UZGALIS “A Conversation with Susan Stuart”. ECAP REVIEWS: SUSAN STUART “Review of the European Conference for Computing and Philosophy, 2004”; MARCELLO GUARINI “Review of the European Conference for Computing and Philosophy, 2004”. PROGRAM: Computing and Philosophy, University of Pavia, Italy 2004. ARTICLE: JON DORBOLO “Getting Outside of the Margins”. © 2004 by The American Philosophical Association. ISSN: 1067-9464. APA NEWSLETTER ON Philosophy and Computers. Jon Dorbolo, Editor. Fall 2004, Volume 04, Number 1. FROM THE EDITOR. Editorial Board: Jon Dorbolo, Editor, 4140 The Valley Library, Oregon State University, Corvallis OR 97331-4502, [email protected], Phone: 541.737.3811. … Philosophy, the Barwise Prize is awarded for significant and sustained contributions to areas relevant to the philosophical study of computing and information. To commemorate this award, Minds and Machines and the APA Newsletter on Computing and Philosophy will collaborate to publish two special issues regarding “Daniel Dennett and the Computational Turn.” The Fall Spring 2005 APA Newsletter on Computers and Philosophy issue (Guest Editor: Ron Barnette) and a special issue of Minds and Machines in Fall 2005 (Guest Editor: Jon Dorbolo) will present this work. Submissions made in response to this call will be considered for both publications, and authors will be consulted on the outcomes of the review process, with regard to which publication is suitable. -

APA Newsletter on Philosophy and Computers a Basic Cognitive Cycle, Including Several Modes of Learning, 08:2

APA Newsletters NEWSLETTER ON PHILOSOPHY AND COMPUTERS Volume 09, Number 1 Fall 2009 FROM THE EDITOR, PETER BOLTUC FROM THE CHAIR, MICHAEL BYRON NEW AND NOTEWORTHY: A CENTRAL APA INVITATION ARTICLES Featured Article RAYMOND TURNER “The Meaning of Programming Languages” GREGORY CHAITIN “Leibniz, Complexity, and Incompleteness” AARON SLOMAN “Architecture-Based Motivation vs. Reward-Based Motivation” DISCUSSION 1: ON ROBOT CONSCIOUSNESS STAN FRANKLIN, BERNARD J. BAARS, AND UMA RAMAMURTHY “Robots Need Conscious Perception: A Reply to Aleksander and Haikonen” PENTTI O. A. HAIKONEN “Conscious Perception Missing. A Reply to Franklin, Baars, and Ramamurthy” © 2009 by The American Philosophical Association ONTOLOGICAL STATUS OF WEB-BASED OBJECTS DAVID LEECH ANDERSON “A Semantics for Virtual Environments and the Ontological Status of Virtual Objects” ROBERT ARP “Realism and Antirealism in Informatics Ontologies” DISCUSSION 2: ON FLORIDI KEN HEROLD “A Response to Barker” JOHN BARKER “Reply to Herold” DISCUSSION 3: ON LOPES GRANT TAVINOR “Videogames, Interactivity, and Art” ONLINE EDUCATION MARGARET A. CROUCH “Gender and Online Education” H. E. BABER “Women Don’t Blog” BOOK REVIEW Christian Fuchs: Social Networking Sites and the Surveillance Society. A Critical Case Study of the Usage of studiVZ, Facebook, and MySpace by Students in Salzburg in the Context of Electronic Surveillance REVIEWED BY SANDOVAL MARISOL AND THOMAS ALLMER SYLLABUS DISCUSSION AARON SLOMAN “Teaching AI and Philosophy at School?” CALL FOR PAPERS Call for Papers with Ethics Information Technology on “The Case of e-Trust: A New Ethical Challenge” APA NEWSLETTER ON Philosophy and Computers Piotr Bołtuć, Editor Fall 2009 Volume 09, Number 1 The second topic area pertains to L. -

Abstracts Booklet

TURING CENTENARY CONFERENCE. CiE 2012: How the World Computes. University of Cambridge, 18-23 June, 2012. ABSTRACTS OF INFORMAL PRESENTATIONS. Table of Contents: Collective Reasoning under Uncertainty and Inconsistency, by Martin Adamcik; Facticity as the amount of self-descriptive information in a data set, by Pieter Adriaans; Towards a Type Theory of Predictable Assembly, by Assel Altayeva and Iman Poernomo; Worst case analysis of non-local games, by Andris Ambainis, Artūrs Bačkurs, Kaspars Balodis, Agnis Škuškovniks, Juris Smotrovs and Madars Virza; Numerical evaluation of the average number of successive guesses, by Kerstin Andersson; Turing-degree of first-order logic FOL and reducing FOL to a propositional logic, by Hajnal Andréka and István Németi; Computing without a computer: the analytical calculus by means of formalizing classical computer operations, by Vladimir Aristov and Andrey Stroganov; On Models of the Modal Logic GL, by İlayda Ateş and Çiğdem Gencer; 1-Genericity and the $\Pi^0_2$ Enumeration Degrees, by Liliana Badillo and Charles Harris; A simple proof that complexity of complexity can be large, and that some strings have maximal plain Kolmogorov complexity and non-maximal prefix-free complexity, by Bruno Bauwens; When is the denial inequality an apartness relation?, by Josef