An Observation About Truth

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Table of Contents

Table of Contents Vision Statement 5 Strengthening the Intellectual Community 7 Academic Review 17 Recognition of Excellence 20 New Faculty 26 Student Prizes and Scholarships 28 News from the Faculties and Schools 29 Institute for Advanced Studies 47 Saltiel Pre‐Academic Center 49 Truman Institute for the Advancement of Peace 50 The Authority for Research Students 51 The Library Authority 54 In Appreciation 56 2009 Report by the Rector Back ACADEMIC DEVELOPMENTS VISION STATEMENT The academic year 2008/2009, which is now drawing to its end, has been a tumultuous one. The continuous budgetary cuts almost prevented the opening of the academic year, and we end the year under the shadow of the current global economic crisis. Notwithstanding these difficulties, the Hebrew University continues to thrive: as the present report shows, we have maintained our position of excellence. However the name of our game is not maintenance, but rather constant change and improvement, and despite the need to cut back and save, we have embarked on several new, innovative initiatives. As I reflect on my first year as Rector of the Hebrew University, the difficulties fade in comparison to the feeling of opportunity. We are fortunate to have the ongoing support of our friends, both in Israel and abroad, which is a constant reminder to us of the significance of the Hebrew University beyond its walls. Prof. Menachem Magidor is stepping down after twelve years as President of the University: it has been an enormous privilege to work with him during this year, and benefit from his experience, wisdom and vision. -

Association of Jewish Libraries N E W S L E T T E R February/March 2008 Volume XXVII, No

Association of Jewish Libraries N E W S L E T T E R February/March 2008 Volume XXVII, No. 3 JNUL Officially Becomes The National Library of Israel ELHANAN ADL E R On November 26, 2007, The Knesset enacted the “National Li- assistance of the Yad Hanadiv foundation, which previously brary Law,” transforming the Jewish National and University contributed the buildings of two other state bodies: the Knesset, Library (JNUL) at The Hebrew University’s Givat Ram campus and the Supreme Court. The new building will include expanded into the National Library of Israel. reading rooms and state-of-the-art storage facilities, as well as The JNUL was founded in 1892 by the Jerusalem Lodge of a planned Museum of the Book. B’nai B’rith. After the first World War, the library’s ownership The new formal status and the organizational change will was transferred to the World Zionist Organization. With the enable the National Library to expand and to serve as a leader opening of the Hebrew University on the Mount Scopus campus in its scope of activities in Israel, to broaden its links with simi- in 1925, the library was reorganized into the Jewish National and lar bodies in the world, and to increase its resources via the University Library and has been an administrative unit of the government and through contributions from Israel and abroad. Hebrew University ever since. With the founding of the State The law emphasizes the role of the Library in using technology of Israel the JNUL became the de facto national library of Israel. -

Computability Computability Computability Turing, Gödel, Church, and Beyond Turing, Gödel, Church, and Beyond Edited by B

computer science/philosophy Computability Computability Computability turing, Gödel, Church, and beyond turing, Gödel, Church, and beyond edited by b. Jack Copeland, Carl J. posy, and oron Shagrir edited by b. Jack Copeland, Carl J. posy, and oron Shagrir Copeland, b. Jack Copeland is professor of philosophy at the ContributorS in the 1930s a series of seminal works published by university of Canterbury, new Zealand, and Director Scott aaronson, Dorit aharonov, b. Jack Copeland, martin Davis, Solomon Feferman, Saul alan turing, Kurt Gödel, alonzo Church, and others of the turing archive for the History of Computing. Kripke, Carl J. posy, Hilary putnam, oron Shagrir, Stewart Shapiro, Wilfried Sieg, robert established the theoretical basis for computability. p Carl J. posy is professor of philosophy and member irving Soare, umesh V. Vazirani editors and Shagrir, osy, this work, advancing precise characterizations of ef- of the Centers for the Study of rationality and for lan- fective, algorithmic computability, was the culmina- guage, logic, and Cognition at the Hebrew university tion of intensive investigations into the foundations of Jerusalem. oron Shagrir is professor of philoso- of mathematics. in the decades since, the theory of phy and Former Chair of the Cognitive Science De- computability has moved to the center of discussions partment at the Hebrew university of Jerusalem. He in philosophy, computer science, and cognitive sci- is currently the vice rector of the Hebrew university. ence. in this volume, distinguished computer scien- tists, mathematicians, logicians, and philosophers consider the conceptual foundations of comput- ability in light of our modern understanding. Some chapters focus on the pioneering work by turing, Gödel, and Church, including the Church- turing thesis and Gödel’s response to Church’s and turing’s proposals. -

Formalizing Common Sense Reasoning for Scalable Inconsistency-Robust Information Integration Using Direct Logictm Reasoning and the Actor Model

Formalizing common sense reasoning for scalable inconsistency-robust information integration using Direct LogicTM Reasoning and the Actor Model Carl Hewitt. http://carlhewitt.info This paper is dedicated to Alonzo Church, Stanisław Jaśkowski, John McCarthy and Ludwig Wittgenstein. ABSTRACT People use common sense in their interactions with large software systems. This common sense needs to be formalized so that it can be used by computer systems. Unfortunately, previous formalizations have been inadequate. For example, because contemporary large software systems are pervasively inconsistent, it is not safe to reason about them using classical logic. Our goal is to develop a standard foundation for reasoning in large-scale Internet applications (including sense making for natural language) by addressing the following issues: inconsistency robustness, classical contrapositive inference bug, and direct argumentation. Inconsistency Robust Direct Logic is a minimal fix to Classical Logic without the rule of Classical Proof by Contradiction [i.e., (Ψ├ (¬))├¬Ψ], the addition of which transforms Inconsistency Robust Direct Logic into Classical Logic. Inconsistency Robust Direct Logic makes the following contributions over previous work: Direct Inference Direct Argumentation (argumentation directly expressed) Inconsistency-robust Natural Deduction that doesn’t require artifices such as indices (labels) on propositions or restrictions on reiteration Intuitive inferences hold including the following: . Propositional equivalences (except absorption) including Double Negation and De Morgan . -Elimination (Disjunctive Syllogism), i.e., ¬Φ, (ΦΨ)├T Ψ . Reasoning by disjunctive cases, i.e., (), (├T ), (├T Ω)├T Ω . Contrapositive for implication i.e., Ψ⇒ if and only if ¬⇒¬Ψ . Soundness, i.e., ├T ((├T) ⇒ ) . Inconsistency Robust Proof by Contradiction, i.e., ├T (Ψ⇒ (¬))⇒¬Ψ 1 A fundamental goal of Inconsistency Robust Direct Logic is to effectively reason about large amounts of pervasively inconsistent information using computer information systems. -

YIVO Encyclopedia Published to Celebration and Acclaim Former

YIVO Encyclopedia Published to Celebration and Acclaim alled “essential” and a “goldmine” in early reviews, The YIVO Encyclo- pedia of Jews in Eastern Europe was published this spring by Yale University Press. CScholars immediately hailed the two-volume, 2,400-page reference, the culmination of more than seven years of intensive editorial develop- ment and production, for its thoroughness, accuracy, and readability. YIVO began the celebrations surrounding the encyclopedia’s completion in March, just weeks after the advance copies arrived. On March 11, with Editor in Chief Gershon Hundert serving as moderator and respondent, a distinguished panel of “first readers”—scholars and writers who had not participated in the project—gave their initial reactions to the contents of the work. Noted author Allegra Goodman was struck by the simultaneous development, in the nineteenth century, of both Yiddish and Hebrew popular literature, with each trad- with which the contributors took their role in Perhaps the most enthusiastic remarks of the ing ascendance at different times. Marsha writing their articles. Dartmouth University evening came from Edward Kasinec, chief of Rozenblit of the University of Maryland noted professor Leo Spitzer emphasized that even the Slavic and Baltic Division of the New York that the encyclopedia, without nostalgia, pro- in this age of the Internet and Wikipedia, we Public Library, who said, “I often had the feeling vides insight into ordinary lives, the “very still need this encyclopedia for its informa- that Gershon Hundert and his many collabo- texture of Jewish life in Eastern Europe,” and tive, balanced, and unbiased treatment, unlike rators were like masters of a kaleidoscope, was impressed with the obvious seriousness much information found on the Web. -

Intelligence and Artificial Intelligence

We invite applications to participate in the 4th Intercontinental Academia dedicated to Intelligence and Artificial Intelligence Opening online session: 13-18 June 2021 1st workshop in Paris, France: 18-27 October 2021 2nd workshop in Belo Horizonte, Brazil: 6-15 June 2022 Call for fellows Deadline: March 31, 2021 (6 pm CET) The Intercontinental Academia (ICA) seeks to create a global network of future research leaders in which some of the very best early/mid-career scholars will work together on paradigm-shifting cross-disciplinary research, mentored by some of the most eminent researchers from across the globe. In order to achieve this high objective, we organize in a year three immersive and intense sessions. The experience is expected to transform the scholar's own approach to research, enhance their awareness of the work, relevance and potential impact of other disciplines, and to inspire and facilitate new collaborations between distant disciplines. Our aim is to make a real, yet volatile, intellectual cocktail that leads to meaningful exchange and long-lasting outputs. The theme Intelligence and Artificial Intelligence have been selected to provide a framework for stimulating intellectual exchange in 2021-2022. The past decades have witnessed an impressive progress in cognitive science, neuroscience and artificial intelligence. Beside the decisive scientific advances that have been accomplished in the analysis of brain activity and its behavioral counterparts or in the information processing sciences (machine learning, wearable sensors…), several fundamental and broader questions of deep interdisciplinary nature have been arising. As artificial intelligence and neuroscience/cognitive science seem to show significant complementarities, a key question is to inquire to which extent these complementarities should drive research in both areas and how to optimize synergies. -

Turing and the Art of Classical Computability

Turing and the Art of Classical Computability Robert Irving Soare Contents 1 Mathematics as an Art 1 2 Defining the Effectively Calculable Functions 2 3 Why Turing and not Church? 4 4 Why Michelangelo and not Donatello? 4 5 Classical Art and Classical Computability 6 6 Computability and Recursion 7 7 The Art of Exposition 8 8 Conclusion 8 1. Mathematics as an Art Mathematics is an art as well as a science. It is an art in the sense of a skill as in Donald Knuth's series, The Art of Computer Programming, but it is also an art in the sense of an esthetic endeavor with inherent beauty which is recognized by all mathematicians. One of the world's leading art treasures is Michelangelo's statue of David as a young man displayed in the Accademia Gallery in Florence. There is a long aisle to approach the statue of David. The aisle is flanked by the statues of Michelangelo's unfinished slaves struggling as if to emerge from the blocks of marble. These figures reveal Michelangelo's work process. There are practically no details, and yet they possess a weight and power beyond their physical proportions. Michelangelo thought of himself not as carving a statue but as seeing clearly the figure within the marble and then Preprint submitted to Elsevier July 15, 2011 chipping away the marble to release it. The unfinished slaves are perhaps a more revealing example of this talent than the finished statue of David. Similarly, it was Alan Turing [1936] and [1939] who saw the figure of computability in the marble more clearly than anyone else. -

Implicit and Explicit Examples of the Phenomenon of Deviant Encodings

STUDIES IN LOGIC, GRAMMAR AND RHETORIC 63 (76) 2020 DOI: 10.2478/slgr-2020-0027 This work is licensed under a Creative Commons Attribution BY 4.0 License (http://creativecommons.org/licenses/by/4.0) Paula Quinon Warsaw University of Technology Faculty of Administration and Social Sciences International Center for Formal Ontology e-mail: [email protected] ORCID: 0000-0001-7574-6227 IMPLICIT AND EXPLICIT EXAMPLES OF THE PHENOMENON OF DEVIANT ENCODINGS Abstract. The core of the problem discussed in this paper is the following: the Church-Turing Thesis states that Turing Machines formally explicate the intuitive concept of computability. The description of Turing Machines requires description of the notation used for the INPUT and for the OUTPUT. Providing a general definition of notations acceptable in the process of computations causes problems. This is because a notation, or an encoding suitable for a computation, has to be computable. Yet, using the concept of computation, in a definition of a notation, which will be further used in a definition of the concept of computation yields an obvious vicious circle. The circularity of this definition causes trouble in distinguishing on the theoretical level, what is an acceptable notation from what is not an acceptable notation, or as it is usually referred to in the literature, “deviant encodings”. Deviant encodings appear explicitly in discussions about what is an ad- equate or correct conceptual analysis of the concept of computation. In this paper, I focus on philosophical examples where the phenomenon appears im- plicitly, in a “disguised” version. In particular, I present its use in the analysis of the concept of natural number. -

A Bibliography of Publications of Alan Mathison Turing

A Bibliography of Publications of Alan Mathison Turing Nelson H. F. Beebe University of Utah Department of Mathematics, 110 LCB 155 S 1400 E RM 233 Salt Lake City, UT 84112-0090 USA Tel: +1 801 581 5254 FAX: +1 801 581 4148 E-mail: [email protected], [email protected], [email protected] (Internet) WWW URL: http://www.math.utah.edu/~beebe/ 24 August 2021 Version 1.227 Abstract This bibliography records publications of Alan Mathison Turing (1912– 1954). Title word cross-reference 0(z) [Fef95]. $1 [Fis15, CAC14b]. 1 [PSS11, WWG12]. $139.99 [Ano20]. $16.95 [Sal12]. $16.96 [Kru05]. $17.95 [Hai16]. $19.99 [Jon17]. 2 [Fai10b]. $21.95 [Sal12]. $22.50 [LH83]. $24.00/$34 [Kru05]. $24.95 [Sal12, Ano04, Kru05]. $25.95 [KP02]. $26.95 [Kru05]. $29.95 [Ano20, CK12b]. 3 [Ano11c]. $54.00 [Kru05]. $69.95 [Kru05]. $75.00 [Jon17, Kru05]. $9.95 [CK02]. H [Wri16]. λ [Tur37a]. λ − K [Tur37c]. M [Wri16]. p [Tur37c]. × [Jon17]. -computably [Fai10b]. -conversion [Tur37c]. -D [WWG12]. -definability [Tur37a]. -function [Tur37c]. 1 2 . [Nic17]. Zycie˙ [Hod02b]. 0-19-825079-7 [Hod06a]. 0-19-825080-0 [Hod06a]. 0-19-853741-7 [Rus89]. 1 [Ano12g]. 1-84046-250-7 [CK02]. 100 [Ano20, FB17, Gin19]. 10011-4211 [Kru05]. 10th [Ano51]. 11th [Ano51]. 12th [Ano51]. 1942 [Tur42b]. 1945 [TDCKW84]. 1947 [CV13b, Tur47, Tur95a]. 1949 [Ano49]. 1950s [Ell19]. 1951 [Ano51]. 1988 [Man90]. 1995 [Fef99]. 2 [DH10]. 2.0 [Wat12o]. 20 [CV13b]. 2001 [Don01a]. 2002 [Wel02]. 2003 [Kov03]. 2004 [Pip04]. 2005 [Bro05]. 2006 [Mai06, Mai07]. 2008 [Wil10]. 2011 [Str11]. 2012 [Gol12]. 20th [Kru05]. 25th [TDCKW84]. -

Is the Whole Universe a Computer?

CHAPTER 41 Is the whole universe a computer? JACK COPELAND, MARK SPREVAK, and ORON SHAGRIR The theory that the whole universe is a computer is a bold and striking one. It is a theory of everything: the entire universe is to be understood, fundamentally, in terms of the universal computing machine that Alan Turing introduced in 1936. We distinguish between two versions of this grand-scale theory and explain what the universe would have to be like for one or both versions to be true. Spoiler: the question is in fact wide open – at the present stage of science, nobody knows whether it's true or false that the whole universe is a computer. But the issues are as fascinating as they are important, so it's certainly worthwhile discussing them. We begin right at the beginning: what exactly is a computer? What is a computer? To start with the obvious, your laptop is a computer. But there are also computers very different from your laptop – tiny embedded computers inside watches, and giant networked supercomputers like China’s Tianhe-2, for example. So what feature do all computers have in common? What is it that makes them all computers? Colossus was a computer, even though (as Chapter 14 explained) it did not make use of stored programs and could do very few of the things that your laptop can do (not even long multiplication). Turing's ACE (Chapters 21 and 22) was a computer, even though its design was dissimilar from that of your laptop – for example, the ACE had no central processing unit (CPU), and moreover it stored its data and programs in the form of 'pings' of supersonic sound travelling along tubes of liquid. -

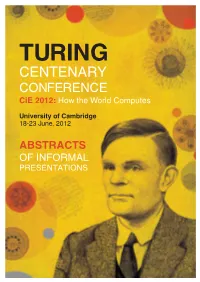

Abstracts Booklet

1 TURING CENTENARY CONFERENCE CiE 2012: How the World Computes University of Cambridge 18-23 June, 2012 ABSTRACTS OF INFORMAL PRESENTATIONS I Table of Contents Collective Reasoning under Uncertainty and Inconsistency ............. 1 Martin Adamcik Facticity as the amount of self-descriptive information in a data set ..... 2 Pieter Adriaans Towards a Type Theory of Predictable Assembly ...................... 3 Assel Altayeva and Iman Poernomo Worst case analysis of non-local games ............................... 4 Andris Ambainis, Art¯ursBaˇckurs,Kaspars Balodis, Agnis Skuˇskovniks,ˇ Juris Smotrovs and Madars Virza Numerical evaluation of the average number of successive guesses ....... 5 Kerstin Andersson Turing-degree of first-order logic FOL and reducing FOL to a propositional logic ................................................ 6 Hajnal Andr´ekaand Istv´anN´emeti Computing without a computer: the analytical calculus by means of formalizing classical computer operations ............................ 7 Vladimir Aristov and Andrey Stroganov On Models of the Modal Logic GL .................................. 8 Ilayda˙ Ate¸sand C¸i˘gdemGencer 0 1-Genericity and the Π2 Enumeration Degrees ........................ 9 Liliana Badillo and Charles Harris A simple proof that complexity of complexity can be large, and that some strings have maximal plain Kolmogorov complexity and non-maximal prefix-free complexity. ................................. 10 Bruno Bauwens When is the denial inequality an apartness relation? ................... 11 Josef -

Intercontinental Academia

th INTERCONTINENTAL 4 ACADEMIA The University-Based Institutes for Advanced Study CANDIDATES th (Ubias) is receiving submissions for the 4 Intercontinental Early/mid-career researchers with doctorate and Academia which theme is Intelligence and Artificial fluency in English can apply. Applications from Intelligence. candidates who hold a research post in academia, industry, charity, arts or other organizations, The Intercontinental Academia (ICA) is an initiative equivalent to the academic post of Lecturer that seeks to create a global network of future research or above, or holders of a Principal Investigator leaders by the interaction of young researchers mentored Fellowship, are accepted. by some of the most eminent researchers from across the globe. Applications and informal enquiries should be submitted to [email protected] until March In this edition, a meeting held in virtual format, in June 15, 2021. All communication will be conducted in 2021, and two immersion meetings are scheduled – the English. first scheduled to October 2021 in Paris and the second to June 2022 in Belo Horizonte. The French Network of Advanced Institutes and the Institute of Advanced Transdisciplinary Studies at UFMG are responsible for the organization. MENTORS E FELLOWS CALL FOR FELLOWS At the meetings, both in Paris and Belo Horizonte, http://www.ubias.net/intercontinental-academia selected candidates will have the chance to discuss research topics with a notable group of mentors, invited to participate in this edition of the Intercontinental