Response to NSF 20-015 Request for Information on Data-Focused

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

ERC Document on Open Research Data and Data Management Plans

Open Research Data and Data Management Plans Information for ERC grantees by the ERC Scientific Council Version 4.0 11 August 2021 This document is regularly updated in order to take into account new developments in this rapidly evolving field. Comments, corrections and suggestions should be sent to the Secretariat of the ERC Scientific Council Working Group on Open Science via the address [email protected]. The table below summarizes the main changes that this document has undergone. HISTORY OF CHANGES Version Publication Main changes Page (in the date relevant version) 1.0 23.02.2018 Initial version 2.0 24.04.2018 Part ‘Open research data and data deposition in the 15-17 Physical Sciences and Engineering domain’ added Minor editorial changes; faulty link corrected 6, 10 Contact address added 2 3.0 23.04.2019 Name of WG updated 2 Added text to the section on ‘Data deposition’ 5 Reference to FAIRsharing moved to the general part 7 from the Life Sciences part and extended Added example of the Austrian Science Fund in the 8 section on ‘Policies of other funding organisations’; updated links related to the German Research Foundation and the Arts and Humanities Research Council; added reference to the Science Europe guide Small changes to the text on ‘Image data’ 9 Added reference to the Ocean Biogeographic 10 Information (OBIS) Reformulation of the text related to Biostudies 11 New text in the section on ‘Metadata’ in the Life 11 Sciences part Added reference to openICPSR 13 Added references to ioChem-BD and ChemSpider -

Open Science Toolbox

Open Science Toolbox A Collection of Resources to Facilitate Open Science Practices Lutz Heil [email protected] LMU Open Science Center April 14, 2018 [last edit: July 10, 2019] Contents Preface 2 1 Getting Started 3 2 Resources for Researchers 4 2.1 Plan your Study ................................. 4 2.2 Conduct your Study ............................... 6 2.3 Analyse your Data ................................ 7 2.4 Publish your Data, Material, and Paper .................... 10 3 Resources for Teaching 13 4 Key Papers 16 5 Community 18 1 Preface The motivation to engage in a more transparent research and the actual implementation of Open Science practices into the research workflow can be two very distinct things. Work on the motivational side has been successfully done in various contexts (see section Key Papers). Here the focus rather lays on closing the intention-action gap. Providing an overview of handy Open Science tools and resources might facilitate the implementation of these practices and thereby foster Open Science on a practical level rather than on a theoretical. So far, there is a vast body of helpful tools that can be used in order to foster Open Science practices. Without doubt, all of these tools add value to a more transparent research. But for reasons of clarity and to save time which would be consumed by trawling through the web, this toolbox aims at providing only a selection of links to these resources and tools. Our goal is to give a short overview on possibilities of how to enhance your Open Science practices without consuming too much of your time. -

Assessing the Impact and Quality of Research Data Using Altmetrics and Other Indicators

Konkiel, S. (2020). Assessing the Impact and Quality of Research Data Using Altmetrics and Other Indicators. Scholarly Assessment Reports, 2(1): 13. DOI: https://doi.org/10.29024/sar.13 COMMISSIONED REPORT Assessing the Impact and Quality of Research Data Using Altmetrics and Other Indicators Stacy Konkiel Altmetric, US [email protected] Research data in all its diversity—instrument readouts, observations, images, texts, video and audio files, and so on—is the basis for most advancement in the sciences. Yet the assessment of most research pro- grammes happens at the publication level, and data has yet to be treated like a first-class research object. How can and should the research community use indicators to understand the quality and many poten- tial impacts of research data? In this article, we discuss the research into research data metrics, these metrics’ strengths and limitations with regard to formal evaluation practices, and the possible meanings of such indicators. We acknowledge the dearth of guidance for using altmetrics and other indicators when assessing the impact and quality of research data, and suggest heuristics for policymakers and evaluators interested in doing so, in the absence of formal governmental or disciplinary policies. Policy highlights • Research data is an important building block of scientific production, but efforts to develop a framework for assessing data’s impacts have had limited success to date. • Indicators like citations, altmetrics, usage statistics, and reuse metrics highlight the influence of research data upon other researchers and the public, to varying degrees. • In the absence of a shared definition of “quality”, varying metrics may be used to measure a dataset’s accuracy, currency, completeness, and consistency. -

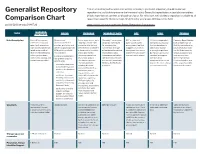

Generalist Repository Comparison Chart

This chart is designed to assist researchers in finding a generalist repository should no domain Generalist Repository repository be available to preserve their research data. Generalist repositories accept data regardless of data type, format, content, or disciplinary focus. For this chart, we included a repository available to all Comparison Chart researchers specific to clinical trials (Vivli) to bring awareness to those in this field. doi: 10.5281/zenodo.3946720 https://fairsharing.org/collection/GeneralRepositoryComparison HARVARD TOPIC DRYAD FIGSHARE MENDELEY DATA OSF VIVLI ZENODO DATAVERSE Brief Description Harvard Dataverse is Open-source, A free, open access, data Mendeley Data is a free OSF is a free and Vivli is an independent, Powering Open Science, a free data repository community-led data repository where users repository specialized open source project non-profit organization built on Open Source. open to all researchers curation, publishing, and can make all outputs of for research data. management tool that that has developed a Built by reserachers for from any discipline, both preservation platform for their research available in Search more than 20+ supports researchers global data-sharing researchers. Run from inside and outside of CC0 publicly available a discoverable, reusable, million datasets indexed throughout their entire and analytics platform. the CERN data centre, the Harvard community, research data and citable manner. from 1000s of data project lifecycle in open Our focus is on sharing whose purpose is long where you can share, Dryad is an independent Users can upload files repositories and collect science best practices. individual participant- term preservation archive, cite, access, and non-profit that works of any type and are and share datasets with level data from for the High Energy explore research data. -

How to Cite Datasets and Link to Publications

A Digital Curation Centre ‘working level’ guide How to Cite Datasets and Link to Publications Alex Ball (DCC) and Monica Duke (DCC) Please cite as: Ball, A., & Duke, M. (2015). ‘How to Cite Datasets and Link to Publications’. DCC How-to Guides. Edinburgh: Digital Curation Centre. Available online: http://www.dcc.ac.uk/resources/how-guides Digital Curation Centre, 2015. Licensed under Creative Commons Attribution 4.0 International: http://creativecommons.org/licenses/by/4.0/ How to Cite Datasets and Link to Publications Introduction This guide will help you create links between your academic publications and the underlying datasets, so that anyone viewing the publication will be able to locate the dataset and vice versa. It provides a working knowledge of the issues and challenges involved, and of how current approaches seek to address them. This guide should interest researchers and principal investigators working on data-led research, as well as the data repositories with which they work. Why cite datasets and link mechanisms allowing authors to be open about their research while still receiving due credit; metrics used them to publications? to translate such attributions into rewards for authors 1 and their institutions; and archives ensuring that the The motivation to cite datasets arises from a recog- work is permanently available for reference and reuse.5 nition that data generated in the course of research If datasets are to be regarded as first-class records of are just as valuable to the ongoing academic discourse research, as they need to be, a similar set of control as papers and monographs. -

2018 Organizational and Membership Report

2018 Organizational and Membership Report Table of contents Summary Data packages published in 2018 Membership Data Publishing Charges Downloads and web traffic Outreach Message from the Executive Director This past year, 2018, was a year of transition and new beginnings. After a very productive three and a half years at the helm, Meredith Morovati stepped down from her post as Executive Director. A several-month recruitment resulted in my appointment in August when I was required to hit the ground running given all of the exciting changes happening. The most transformative engagement Dryad launched in 2018 would be our ground-breaking partnership with the California Digital Library. Our shared goal is to incentivize data publishing best practices while facilitating a modern open source/open science community solution for data curation and publishing. Our joint effort better leads us to this goal by enabling Dryad to make much needed enhancements to core technology and to create a more sustainable business model. Working together is mutually beneficial as we pool our respective resources, share complementary areas of expertise, and play a strategic role in each other’s mission. To be clear, Dryad remains an independent, non-profit, community-owned 501(3)c accountable to our Board of Directors, while CDL remains a department of the University of California accountable to its institutional setting. No formal or legal status for either entity has changed. There is no financial investment to or from either party. The partnership has meant the Dryad repository will be fully upgraded and is moving from D-Space technology to the more nimble Ruby on Rails. -

Data Governance Workshop, Final Report Arlington, VA December 14-15, 2011

Data Governance Workshop, Final Report Arlington, VA December 14-15, 2011 Supported by NSF #0753138 and #0830944 Abstract The Internet and related technologies have created new opportunities to advance scientific research, in part by sharing research data sooner and more widely. The ability to discover, access and reuse existing research data has the potential to both improve the reproducibility of research as well as enable new research that builds on prior results in novel ways. Because of this potential there is increased interest from across the research enterprise (researchers, universities, funders, societies, publishers, etc.) in data sharing and related issues. This applies to all types of research, but particularly data-intensive or “big science”, and where data is expensive to produce or is not reproducible. However, our understanding of the legal, regulatory and policy environment surrounding research data lags behind that of other research outputs like publications or conference proceedings. This lack of shared understanding is hindering our ability to develop good policy and improve data sharing and reusability, but it is not yet clear who should take the lead in this area and create the framework for data governance that we currently lack. This workshop was a first attempt to define the issues of data governance, identify short- term activities to clarify and improve the situation, and suggest a long-term research agenda that would allow the research enterprise to create the vision of a truly scalable and interoperable “Web of data" that we believe can take scientific progress to new heights. Table of Contents Introduction ………………………………………………………………………… p. 2 Section I: Current Conventions for Data Sharing and Reuse … p. -

Open Data As Open Educational Resources: Towards Transversal Skills and Global Citizenship

Open Praxis, vol. 7 issue 4, October–December 2015, pp. 00–00 (ISSN 2304-070X) Open data as open educational resources: Towards transversal skills and global citizenship Javiera Atenas University College London (United Kingdom) [email protected] Leo Havemann Birkbeck, University of London (United Kingdom) [email protected] Ernesto Priego City University London (United Kingdom) [email protected] Abstract Open Data is the name given to datasets which have been generated by international organisations, governments, NGOs and academic researchers, and made freely available online and openly-licensed. These datasets can be used by educators as Open Educational Resources (OER) to support different teaching and learning activities, allowing students to gain experience working with the same raw data researchers and policy-makers generate and use. In this way, educators can facilitate students to understand how information is generated, processed, analysed and interpreted. This paper offers an initial exploration of ways in which the use of Open Data can be key in the development of transversal skills (including digital and data literacies, alongside skills for critical thinking, research, teamwork, and global citizenship), enhancing students’ abilities to understand and select information sources, to work with, curate, analyse and interpret data, and to conduct and evaluate research. This paper also presents results of an exploratory survey that can guide further research into Open Data-led learning activities. Our goal is to support educators in empowering students to engage, critically and collaboratively, as 21st century global citizens. Keywords: Open Data; Open Educational Resources; Research based learning; Critical Thinking; Global Citizenship; Higher Education Introduction The illusion of access promoted by computers provokes a confusion between the presentation of information and the capacity to use, sort and interpret it. -

A Study of Current Policies, Practices and Infrastructure Supporting the Sharing of Data to Prevent and Respond to Epidemic and Pandemic Threats

January 2018 A study of current policies, practices and infrastructure supporting the sharing of data to prevent and respond to epidemic and pandemic threats Elizabeth Pisani, Amrita Ghataure, Laura Merson Summary: Data sharing in public health emergencies: Wellcome/GloPID-R ii Executive summary Introduction Discussions around sharing public health research data have been running for close to a decade, and yet when the Ebola epidemic hit West Africa in 2014, data sharing remained the exception, not the norm. In response, the GloPID-R consortium of research funders commissioned a study to take stock of current data sharing practices involving research on pathogens with pandemic potential. The study catalogued different data sharing practices, and investigated the governance and curation standards of the most frequently used platforms and mechanisms. In addition, it sought to identify specific areas of support or investment which could lead to more effective sharing of data to prevent or limit future epidemics. Methods The study proceeded in three phases: a search for shared data, interviews with investigators, and a review of policies and statements about data sharing. We began by searching the academic literature, trials registries and research repositories for papers and data related to 12 pathogens named by the WHO as of priority concern because of their epidemic or pandemic potential. Chikungunya, Crimean Congo Haemorrhagic Fever (CCHF), Ebola, Lassa Fever, Marburg, Middle East Respiratory Syndrome Coronavirus (MERS-CoV), Severe Acute Respiratory Syndrome (SARS), Nipah, Rift Valley Fever (RVF), Severe Fever with Thrombocytopenia Syndrome (SFTS), Zika. For biomedical research involving these pathogens in humans, animals, in vitro and in silico, we sought to access the data underlying each paper, including through a short, on-line survey sent to 110 corresponding authors. -

The Open Knowledge Foundation: Open Data Means Better Science

Community Page The Open Knowledge Foundation: Open Data Means Better Science Jennifer C. Molloy* Department of Zoology, University of Oxford, Oxford, United Kingdom Data provides the evidence for the In response to these problems, multiple data wranglers, lawyers, and other indi- published body of scientific knowledge, individuals, groups, and organisations are viduals with interests in both open data which is the foundation for all scientific involved in a major movement to reform and the broader concept of open science. progress. The more data is made openly the process of scientific communication. available in a useful manner, the greater The promotion of open access and open The Open Knowledge Definition the level of transparency and reproduc- data and the development of platforms ibility and hence the more efficient the that reduce the cost and difficulty of data The definition of ‘‘open’’, crystallised in scientific process becomes, to the benefit of handling play a principal role in this. the OKD, means the freedom to use, society. This viewpoint is becoming main- One such organisation is the Working reuse, and redistribute without restrictions stream among many funders, publishers, Group on Open Data in Science (also beyond a requirement for attribution and scientists, and other stakeholders in re- known as the Open Science Working share-alike. Any further restrictions make an item closed knowledge. It also empha- search, but barriers to achieving wide- Group) at the Open Knowledge Founda- sises the importance of usability and access spread publication of open data remain. tion (OKF). The OKF is a community- to the entire dataset or knowledge work: The Open Data in Science working group based organisation that promotes open at the Open Knowledge Foundation is a knowledge, which encompasses open data, community that works to develop tools, free culture, the public domain, and other ‘‘The work shall be available as a applications, datasets, and guidelines to areas of the knowledge commons. -

Assigning Creative Commons Licenses to Research Metadata: Issues and Cases

Assigning Creative Commons Licenses to Research Metadata: Issues and Cases Marta POBLET a,1, Amir ARYANIb, Paolo MANGHIc, Kathryn UNSWORTHb, Jingbo WANGd , Brigitte HAUSSTEINe, Sunje DALLMEIER-TIESSENf, Claus-Peter KLASe, Pompeu CASANOVAS g,h and Victor RODRIGUEZ-DONCELi a RMIT University bAustralian National Data Service (ANDS) c ISTI, Italian Research Council d Australian National University eGESIS – Leibniz Institute for the Social Sciences fCERN g IDT, Autonomous University of Barcelona hDeakin University iUniversidad Politécnica de Madrid Abstract. This paper discusses the problem of lack of clear licensing and transparency of usage terms and conditions for research metadata. Making research data connected, discoverable and reusable are the key enablers of the new data revolution in research. We discuss how the lack of transparency hinders discovery of research data and make it disconnected from the publication and other trusted research outcomes. In addition, we discuss the application of Creative Commons licenses for research metadata, and provide some examples of the applicability of this approach to internationally known data infrastructures. Keywords. Semantic Web, research metadata, licensing, discoverability, data infrastructure, Creative Commons, open data Introduction The emerging paradigm of open science relies on increased discovery, access, and sharing of trusted and open research data. New data infrastructures, policies, principles, and standards already provide the bases for data-driven research. For example, the FAIR Guiding Principles for scientific data management and stewardship [23] describe the four principles—findability, accessibility, interoperability, and reusability—that should inform how research data are produced, curated, shared, and stored. The same principles are applicable to metadata records, since they describe datasets and related research information (e.g. -

A Balancing Act: the Ideal and the Realistic in Dryad's Preservation

A Balancing Act: The Ideal and the Realistic in Dryad’s Preservation Policy Development Sara Mannheimer, Ayoung Yoon, Jane Greenberg, Elena Feinstein, and Ryan Scherle This is a postprint of an article that originally appeared in First Monday, 4 August 2014. http://firstmonday.org/ Mannheimer S, Yoon A, Greenberg J, Feinstein E, Scherle R (2014) A balancing Act: he ideal and the realistic in Dryad’s preservation policy development. First Monday 19(8). http://dx.doi.org/10.5210/fm.v19i8.5415 Made available through Montana State University’s ScholarWorks scholarworks.montana.edu First Monday, Volume 19, Number 8 4 August 2014 Data preservation has gained momentum and visibility in connection with the growth in digital data and data sharing policies. The Dryad Repository, a curated general–purpose repository for preserving and sharing the data underlying scientific publications, has taken steps to develop a preservation policy to ensure the long–term persistence of this archived data. In 2013, a Preservation Working Group, consisting of Dryad staff and national and international experts in data management and preservation, was convened to guide the development of a preservation policy. This paper describes the policy development process, outcomes, and lessons learned in the process. To meet Dryad’s specific needs, Dryad’s preservation policy negotiates between the ideal and the realistic, including complying with broader governing policies, matching current practices, and working within system constraints. Contents Introduction The Dryad Repository Literature review Data preservation policy development process Results: Dryad preservation policy Discussion Conclusion Introduction The growth in digital data and data–intensive computing has contributed to the phenomenon often referred to as “the data deluge” (Hey and Trefethen, 2003).