A Ballista Retrospective

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications

-

Introduction to UNIX What Is UNIX? Why UNIX? Brief History of UNIX Early UNIX History UNIX Variants

Introduction to UNIX. CS 2204, Class meeting 1. *Notes by Doug Bowman and other members of the CS faculty at Virginia Tech. Copyright 2001-2003. (C) Doug Bowman, Virginia Tech, 2001-
What is UNIX? A modern computer operating system. Operating system: “a program that acts as an intermediary between a user of the computer and the computer hardware”. Software that manages your computer’s resources (files, programs, disks, network, …), e.g. Windows, MacOS. Modern: features for stability, flexibility, multiple users and programs, configurability, etc.
Why UNIX? Used in many scientific and industrial settings. Huge number of free and well-written software programs. Open-source OS. Internet servers and services run on UNIX. Largely hardware-independent. Based on standards.
Brief history of UNIX: Ken Thompson & Dennis Ritchie originally developed the earliest versions of UNIX at Bell Labs for internal use in the 1970s. Borrowed best ideas from other OSs. Meant for programmers and computer experts. Meant to run on “mini computers”.
Early UNIX History: Thompson also rewrote the operating system in a high-level language of his own design, which he called B. The B language lacked many features, and Ritchie decided to design a successor to B, which he called C. They then rewrote UNIX in the C programming language to aid in portability.
UNIX variants: Two main threads of development: Berkeley software distribution (BSD) and Unix System Laboratories System V. Sun: SunOS, Solaris. GNU: Linux (many flavors). SGI: Irix. FreeBSD. Hewlett-Packard: HP-UX. Apple: OS X (Darwin). …
Layers in the UNIX System (diagram): Users; User Interface; Library Interface; System Interface calls; Standard Utility Programs (shell, editors, compilers, etc.); User Mode.
UNIX Structure: The kernel is the core of the UNIX system, controlling the system hardware and performing various low-level functions. -

Absolute BSD—The Ultimate Guide to FreeBSD Table of Contents Absolute BSD—The Ultimate Guide to FreeBSD

Absolute BSD—The Ultimate Guide to FreeBSD
Table of Contents
Absolute BSD—The Ultimate Guide to FreeBSD
Dedication
Foreword
Introduction
  What Is FreeBSD?
  How Did FreeBSD Get Here?
  The BSD License: BSD Goes Public
  The Birth of Modern FreeBSD
  FreeBSD Development
    Committers
    Contributors
    Users
-

Introduction

CS307 Operating Systems: Introduction. Fan Wu, Department of Computer Science and Engineering, Shanghai Jiao Tong University, Spring 2020.
Operating Systems
UNIX-family: BSD (Berkeley Software Distribution), System-V, GNU/Linux, MINIX, Nachos, OS X, iOS
BSD-family: FreeBSD, NetBSD, OpenBSD
System-V-family: AIX, HP-UX, IRIX, Solaris
Linux-family: Red Hat, Debian, Ubuntu, Fedora, openSUSE, Linux Mint, Google's Android, WebOS, MeeGo
MS-DOS, Microsoft Windows, Windows Mobile, Win-CE, WP8
AmigaOS
Symbian, MeeGo
Google Chrome OS
OS/2
XrossMediaBar (XMB) for PS3, Orbis OS for PS4
Input Output System for Wii
Tiny-OS, LynxOS, QNX, VxWorks
Four Components of a Computer System
People, machines, other computers
Application programs define the ways in which the system resources are used to solve the computing problems of the users
System programs are computer software designed to operate the computer hardware and to provide a platform for running application programs
Controls and coordinates use of hardware among various applications and users
Provides basic computing resources
Computer System Structure
Hardware – provides basic computing resources: CPU, memory, I/O devices
Operating system – controls and coordinates use of hardware among various applications and users
System programs – are computer software designed to operate the computer hardware and to provide a platform for running application programs: BIOS and device drivers
Application programs – define the ways in -

Google Announces Chrome OS, for Release Mid

Google Announces Chrome OS, For Release Mid-2010. http://tech.slashdot.org/story/09/07/08/0953238/... Posted by timothy on Wednesday July 08, 2009 @07:14AM from the bring-on-the-shiny dept. Zaiff Urgulbunger writes "After years of speculation, Google has announced Google Chrome OS, which should be available mid-2010. Initially targeting netbooks, its main selling points are speed, simplicity and security — which kind of implies that the current No.1 OS doesn't deliver in these areas! The Chrome OS will run on both x86 and ARM architectures, uses a Linux kernel with a new windowing system. According to Google, 'For application developers, the web is the platform. -

SDE 3.0.1 System Requirements

SDE 3.0.1 System Requirements
This PDF contains system requirements information, including hardware requirements, best performance configurations, and limitations, for SDE 3.0.1.
Compaq/Digital Tru64 UNIX V4.0b
  o Oracle 8 (32 bit) Enterprise Server 7.3.3
  o Oracle 8 (32 bit) Workgroup Server 7.3.3
Compaq/Digital Tru64 UNIX V4.0c
  o Oracle 8 (32 bit) Enterprise Server 7.3.3
  o Oracle 8 (32 bit) Workgroup Server 7.3.3
Compaq/Digital Tru64 UNIX V4.0d
  o Oracle 8 (32 bit) Enterprise Server 7.3.3
  o Oracle 8 (32 bit) Workgroup Server 7.3.3
HP HP-UX 10.20 (700 series, 8x7 series)
  o Oracle 8 (32 bit) Enterprise Server 7.3.3
  o Oracle 8 (32 bit) Workgroup Server 7.3.3
  o Sybase SQL Server 11.0.2
HP HP-UX 11.0 (700 series, 8x7 series)
  o Oracle 8 (32 bit) Enterprise Server 7.3.3
  o Oracle 8 (32 bit) Workgroup Server 7.3.3
IBM AIX 4.1.5.0
  o IBM DB2 Common Server 2.1.2
  o IBM DB2 Universal Database (UDB) 5.0
  o Oracle 8 (32 bit) Enterprise Server 7.3.3
  o Oracle 8 (32 bit) Workgroup Server 7.3.3
  o Sybase SQL Server 11.0.2
IBM AIX 4.2.1.0
  o IBM DB2 Universal Database (UDB) 5.0
  o Oracle 8 (32 bit) Enterprise Server 7.3.3
  o Oracle 8 (32 bit) Workgroup Server 7.3.3
IBM AIX 4.3.0.0
  o Oracle 8 (32 bit) Enterprise Server 7.3.3
  o Oracle 8 (32 bit) Workgroup Server 7.3.3
PC-Intel Windows NT 4.0
  o Microsoft SQL Server 6.5
  o Oracle 8 (32 bit) Enterprise Server 7.3.3
  o Oracle 8 (32 bit) Workgroup Server 7.3.3
SGI IRIX 6.2
  o Oracle 8 (32 bit) Enterprise Server 7.3.3
  o Oracle 8 (32 bit) Workgroup Server 7.3.3
  o Sybase SQL Server 11.0.2
SGI IRIX 6.3
  o Oracle 8 (32 bit) Enterprise -

CXFS™ 5 Administration Guide for SGI® InfiniteStorage

CXFS™ 5 Administration Guide for SGI® InfiniteStorage 007–4016–029 COPYRIGHT © 1999–2009 SGI. All rights reserved; provided portions may be copyright in third parties, as indicated elsewhere herein. No permission is granted to copy, distribute, or create derivative works from the contents of this electronic documentation in any manner, in whole or in part, without the prior written permission of SGI. The following copyright notice applies to the LZF algorithm: Copyright (c) 2000-2005 Marc Alexander Lehmann <[email protected]> Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met: 1. Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer. 2. Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution. 3. The name of the author may not be used to endorse or promote products derived from this software without specific prior written permission. THIS SOFTWARE IS PROVIDED BY THE AUTHOR “AS IS” AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE AUTHOR BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE. -

Porting IRIX® Applications to SGI® Altix® Platforms: SGI ProPack™ for Linux®

Porting IRIX® Applications to SGI® Altix® Platforms: SGI ProPack™ for Linux® 007-4674-001 CONTRIBUTORS Written by Steven Levine Illustrated by Chrystie Danzer Production by Karen Jacobson Engineering contributions by George Pirocanac COPYRIGHT © 2004, Silicon Graphics, Inc. All rights reserved; provided portions may be copyright in third parties, as indicated elsewhere herein. No permission is granted to copy, distribute, or create derivative works from the contents of this electronic documentation in any manner, in whole or in part, without the prior written permission of Silicon Graphics, Inc. LIMITED RIGHTS LEGEND The software described in this document is “commercial computer software” provided with restricted rights (except as to included open/free source) as specified in the FAR 52.227-19 and/or the DFAR 227.7202, or successive sections. Use beyond license provisions is a violation of worldwide intellectual property laws, treaties and conventions. This document is provided with limited rights as defined in 52.227-14. TRADEMARKS AND ATTRIBUTIONS Silicon Graphics, SGI, the SGI logo, Altix, IRIX, Origin, Onyx, Onyx2, and XFS are registered trademarks and CaseVision, NUMAflex, NUMAlink, OpenMP, Performance Co-Pilot, ProDev, SGI Advanced Linux, SGI ProPack, SGIconsole, and SHMEM are trademarks of Silicon Graphics, Inc., in the United States and/or other countries worldwide. SGI Advanced Linux Environment 3.0 is based on Red Hat Enterprise Linux AS 3.0, but is not sponsored by or endorsed by Red Hat, Inc. in any way. Cray is a registered trademark of Cray, Inc. FLEXlm is a registered trademark of Macrovision Corporation. Java is a registered trademark of Sun Microsystems, Inc. in the United States and/or other countries. -

IRIX® Admin System Configuration and Operation

IRIX® Admin System Configuration and Operation 007-2859-017 COPYRIGHT © 1992-2001 Silicon Graphics, Inc. All rights reserved; provided portions may be copyright in third parties, as indicated elsewhere herein. No permission is granted to copy, distribute, or create derivative works from the contents of this electronic documentation in any manner, in whole or in part, without the prior written permission of Silicon Graphics, Inc. LIMITED RIGHTS LEGEND The electronic (software) version of this document was developed at private expense; if acquired under an agreement with the USA government or any contractor thereto, it is acquired as "commercial computer software" subject to the provisions of its applicable license agreement, as specified in (a) 48 CFR 12.212 of the FAR; or, if acquired for Department of Defense units, (b) 48 CFR 227-7202 of the DoD FAR Supplement; or sections succeeding thereto. Contractor/manufacturer is Silicon Graphics, Inc., 1600 Amphitheatre Pkwy 2E, Mountain View, CA 94043-1351. TRADEMARKS AND ATTRIBUTIONS Challenge, Indigo, IRIS, IRIX, Octane, and Onyx are registered trademarks and SGI, Crimson, Indigo2, IRIS FailSafe, IRIS InSight, IRIS WorkSpace, IRIX Networker, NUMAlink, Origin, Performance Co-Pilot, Power Challenge, Power Indigo2, Power Onyx, the SGI logo, and XFS are trademarks of Silicon Graphics, Inc. Indy is a registered trademark, used under license in the United States and owned by Silicon Graphics, Inc., in other countries worldwide. Centronics is a trademark of Centronics Data Computer Corporation. Cray is a registered trademark of Cray, Inc. Documenter’s Workbench is a trademark of Novell, Inc. FrameMaker, Illustrator, and PostScript are trademarks of Adobe Systems, Incorporated. -

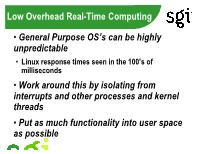

Low Overhead Real-Time Computing • General Purpose OS's Can Be

Low Overhead Real-Time Computing
• General Purpose OS’s can be highly unpredictable
• Linux response times seen in the 100’s of milliseconds
• Work around this by isolating from interrupts and other processes and kernel threads
• Put as much functionality into user space as possible
User Space Functionality
• Memory map hardware and clocks, and handle interrupts in user space
• Use shared memory for IPC
• Memory map special kernel pages or use virtual system calls
• Do uncontended locking with IRIX usyncs or Linux futexes
[Figure: Mutex Protocol Performance. Time to acquire and release once (ns), log scale from 1 to 100000, by mutex protocol: None, Non-preemptive, Priority Inheritance, Priority Ceiling - Same Priority, Priority Ceiling - Higher Priority. Bar values shown: 14,219; 725; 715; 254; 260.]
Overview
- General Purpose OS’s are complicated and unpredictable
- Good RT performance may be had by protecting cpu’s from outside interference
- User level interfaces to common features further reduce latency and unpredictability
- Summary: Lock out and avoid as much of the kernel as possible
10/1/2003
Methods of Blocking Interruptions
- Restrict cpu’s to specific threads
- Isolate cpu’s from TLB and cache invalidations
- Direct hardware interrupts elsewhere
- Turn off the timeshare scheduling interrupt
- Ward off any remaining kernel background tasks
Lock in User Space When Possible
- IRIX® usyncs and Linux® futexes attempt to handle most locking actions with atomic primitives outside the kernel
- Contended locks force accessors into the kernel to use wait queues
-

Packaging for 11 Platforms with One Tool

Packaging for 11 platforms with one tool
Jens Rehsack, 2013
Why packaging?
Packaging provides
Information - always know what is installed
Reliability - always know if things belong together
Useful - easy deployment / rollout
Packaging improves .
everyone knows that
but packaging takes time
can't use dpkg's from developer's machine on CentOS production server
dependencies don't compile on backend AIX machine
-

Introduction to Unix

Introduction to Unix, Class 1
* Notes adapted by Alexey Onufriev from previous work by other members of the CS faculty at Virginia Tech
(C) Alexey Onufriev and Virginia Tech CS and Physics Dept.
What is Unix?
A modern computer operating system
Operating System: “a program that acts as an intermediary between a user of the computer and the computer hardware”. Software that manages your computer’s resources (files, programs, disks, network). Examples: Windows, MacOSX, Solaris, BSD, Linux (e.g. Mandrake, Red Hat, Slackware, SUSE)
Modern: stable, flexible, configurable, allows multiple users and programs
Why Learn Unix?
Will make you a better computer scientist
UNIX is a building block for many CS concepts
Open source and stable (no viruses, worms, etc)
Used in many scientific and industrial settings
Huge number of free and well-written software programs
Excellent programming environment. Different paradigm.
Roughly 65% of the world’s web servers are Linux/Unix machines running Apache
Prerequisite to many other CS courses to follow (Operating Systems, Numerical methods, etc.)
Example: Unix Open Office
Brief History of Unix
Ken Thompson and Dennis Ritchie originally developed the earliest versions of Unix at Bell Labs for internal use in the 1970s
Simple and elegant
Meant for programmers and experts
Written in a high-level language instead of assembly language
Small portion written in assembly language (kernel)
Remaining code written in C on top of the kernel
http://www.bell-labs.com/history/unix/
-

Binary Compatibility on NetBSD

Binary compatibility on NetBSD
Emmanuel Dreyfus, July 2014
About me
● Emmanuel Dreyfus <[email protected]>
● IT manager at ESPCI ParisTech as day job
● NetBSD contributor since 2001
● Milter-greylist since 2006
● OpenLDAP, glusterFS, mod_auth_mellon...
● Le cahier de l'admin BSD, Eyrolles editions
Binary compatibility
● Same CPU, different OS
● No emulation
● Kernel masquerades as target kernel
● Useful to run proprietary apps
● Almost native performance
What do we need?
● Identifying foreign binaries
● System calls translation
● Signals translation
● Nothing more...
● … except if the target is not Unix-like
Identifying aliens
● This happens inside execve(2)
● OS-specific dynamic linker?
● .interp ELF section (see next slide)
● objdump -s -j .interp /bin/ls
● NetBSD: /libexec/ld.elf_so
● Linux: /lib/ld-linux.so.2
● Only for dynamic binaries
Playing with objdump(1)
$ objdump -h /bin/ls
(...)
Idx Name Size VMA LMA File off Algn
0 .interp 00000013 0000004001c8 0000004001c8 000001c8 2**0
CONTENTS, ALLOC, LOAD, READONLY, DATA
1 .note.netbsd.ident 018 0000004001dc 0000004001dc 000001dc 2**2
CONTENTS, ALLOC, LOAD, READONLY, DATA
2 .note.netbsd.pax 00014 0000004001f4 0000004001f4 000001f4 2**2
CONTENTS, ALLOC, LOAD, READONLY, DATA
3 .hash 0000019c 000000400208 000000400208 00000208 2**3
CONTENTS, ALLOC, LOAD, READONLY, DATA
4 .dynsym 00000600 0000004003a8 0000004003a8 000003a8 2**3
CONTENTS, ALLOC, LOAD, READONLY, DATA
(…)
$ objdump -s -j .interp /bin/ls
(...)
Contents of section .interp:
4001c8 2f6c6962 65786563 2f6c642e 656c665f /libexec/ld.elf_
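
The "Identifying aliens" idea above (look at the dynamic linker path a binary asks for) can be sketched outside the kernel in a few lines of Python. This is only an illustration, not NetBSD's actual code: the names `elf_interpreter`, `guess_os` and `KNOWN_INTERPRETERS` are hypothetical, and the lookup table holds just the two paths quoted on the slide. The sketch reads the `PT_INTERP` program header, which is the segment that covers the `.interp` section shown by `objdump`; a real kernel may well use additional hints that are not modelled here.

```python
import struct

PT_INTERP = 3  # program header type that holds the interpreter path

# Assumption: only the two interpreter paths quoted on the slide are known.
KNOWN_INTERPRETERS = {
    "/libexec/ld.elf_so": "NetBSD",
    "/lib/ld-linux.so.2": "Linux",
}


def elf_interpreter(data: bytes):
    """Return the PT_INTERP path of an ELF image, or None if it has none."""
    if data[:4] != b"\x7fELF":
        raise ValueError("not an ELF image")
    is64 = data[4] == 2                  # EI_CLASS: 1 = 32-bit, 2 = 64-bit
    end = "<" if data[5] == 1 else ">"   # EI_DATA: 1 = little-endian
    if is64:
        (e_phoff,) = struct.unpack_from(end + "Q", data, 0x20)
        e_phentsize, e_phnum = struct.unpack_from(end + "HH", data, 0x36)
    else:
        (e_phoff,) = struct.unpack_from(end + "I", data, 0x1C)
        e_phentsize, e_phnum = struct.unpack_from(end + "HH", data, 0x2A)
    for i in range(e_phnum):
        off = e_phoff + i * e_phentsize
        (p_type,) = struct.unpack_from(end + "I", data, off)
        if p_type != PT_INTERP:
            continue
        if is64:
            (p_offset,) = struct.unpack_from(end + "Q", data, off + 8)
            (p_filesz,) = struct.unpack_from(end + "Q", data, off + 32)
        else:
            (p_offset,) = struct.unpack_from(end + "I", data, off + 4)
            (p_filesz,) = struct.unpack_from(end + "I", data, off + 16)
        raw = data[p_offset:p_offset + p_filesz]
        return raw.rstrip(b"\0").decode()
    return None  # static binary: no interpreter requested


def guess_os(data: bytes) -> str:
    """Map the interpreter path to an OS name using the slide's two examples."""
    interp = elf_interpreter(data)
    if interp is None:
        return "unknown (no PT_INTERP, probably static)"
    return KNOWN_INTERPRETERS.get(interp, "unknown")
```

For example, `guess_os(open("/bin/ls", "rb").read())` would inspect a local binary. As the slide notes, the approach only works for dynamic binaries, which is why the sketch returns a separate answer when no `PT_INTERP` entry is present.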