Enter the Matrix: Safely Interruptible Autonomous Systems Via Virtualization

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Bianca Del Rio Floats Too, B*TCHES: The ‘Clown in a Gown’ Talks Death-Drop Disdain and Why She’s Done with ‘Drag Race’

Drag Syndrome Barred From Tanglefoot: Discrimination or Protection?
A Guide to the Upcoming Democratic Presidential LGBTQ Debates
Kentucky Marriage Battle
Bianca Del Rio Floats Too, B*TCHES: The ‘Clown in a Gown’ Talks Death-Drop Disdain and Why She’s Done with ‘Drag Race’
PRIDESOURCE.COM | SEPT. 12, 2019 | VOL. 2737 | FREE

New Location: The Henry • Dearborn, 300 Town Center Drive
FREE PARKING
Great Prizes! Including 5 Weekend Getaways
Join Us For An Afternoon Celebration with Equality-Minded Businesses and Services
Free Brunch, Sunday, Oct. 13
Over 90 Equality Vendors
Complimentary Continental Brunch Begins 11 a.m.
Expo Open Noon to 4 p.m. • Free Parking
Fashion Show 1:30 p.m.
300 Town Center Drive, Dearborn, Michigan
2019 Sponsors: Party Rentals, B. Ella Bridal
$5 Advance / $10 at door, Family Group Rates
Call 734-293-7200 x. 101, email: [email protected]
Tickets Available at: MiLGBTWedding.com

VOL. 2737 • SEPT. 12 2019, ISSUE 1123
PRIDE SOURCE MEDIA GROUP
20222 Farmington Rd., Livonia, Michigan 48152
Phone 734.293.7200
PUBLISHERS: Susan Horowitz & Jan Stevenson
EDITORIAL
Editor in Chief: Susan Horowitz, 734.293.7200 x 102, [email protected]
Entertainment Editor: Chris Azzopardi, 734.293.7200 x 106, [email protected]
News & Feature Editor: Eve Kucharski, 734.293.7200 x 105, [email protected]
News & Feature Writers: Michelle Brown, Ellen Knoppow, Jason A. Michael, Drew Howard, Jonathan Thurston
CREATIVE
Webmaster & MIS Director: Kevin Bryant, [email protected]
Columnists: Charles Alexander,

Document 273 5-5-2014 – Download

Case 2:07-cv-00552-DB-EJF Document 273 Filed 05/05/14 Page 1 of 46

PRO SE
SOPHIA STEWART
P.O. BOX 31725
Las Vegas, NV 89173
702-501-5900 (PH)
IN PROPRIA PERSONA

IN THE UNITED STATES DISTRICT COURT FOR THE DISTRICT OF UTAH

SOPHIA STEWART, Plaintiff, v. MICHAEL STOLLER, GARY BROWN, DEAN WEBB, AND JONATHAN LUBELL, Defendants.

HON. EVELYN J. FURSE
HON. DEE BENSON
Case No.: 2:07CV552 DB-EJF

OBJECTION TO COURT DENIAL TO AWARD DAMAGES AGAINST DEFENDANT LUBELL AND DEMAND FOR EXPEDITED AWARD

COMES NOW, PLAINTIFF SOPHIA STEWART, bringing forth the Objection To the Court's Denial To Award Damages Against All Defendants And Demand For Expedited Award of Damages in the amount of One Billion Three Hundred and Sixty Seven Million $1,367,000,000.00 dollars for The Matrix Trilogy damages and Three point five Billion $3,580,000,000.00 dollars in damages for the Terminator Franchise Series.

Plaintiff brings forth this objection against the court for violating the Plaintiff's constitutional Sixth and fourth amendments "Due Process and Equal Protection" rights and obstructing her from a jury trial and the ability to face the defendants in court for more than 6 years while Jonathan Lubell was deceased, thus constituting a violation of racial discrimination and fraud. Racial discrimination by concerted action is a federal offense under 18 U.S.C.

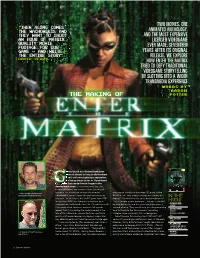

The Making of Enter the Matrix

THE MAKING OF ENTER THE MATRIX

TWO MOVIES. ONE ANIMATED ANTHOLOGY. AND THE MOST EXPENSIVE LICENSED VIDEOGAME EVER MADE. SEVENTEEN YEARS AFTER ITS ORIGINAL RELEASE, WE EXPLORE HOW ENTER THE MATRIX TRIED TO DEFY TRADITIONAL VIDEOGAME STORYTELLING BY SLOTTING INTO A WIDER TRANSMEDIA EXPERIENCE

Words by Aaron Potter

“THEN ALONG COMES THE WACHOWSKIS AND THEY WANT TO SHOOT AN HOUR OF MATRIX QUALITY MOVIE FOOTAGE FOR OUR GAME – AND WRITE THE ENTIRE STORY”
DAVID PERRY

» Shiny Entertainment founder and former game director David Perry.

IN THE KNOW
PUBLISHER: ATARI
DEVELOPER: SHINY

Games based on a licence have been around almost as long as the medium itself, with most gaining a reputation for being cheap tie-ins or ill-produced cash grabs that needed much longer in the development oven. It’s an unfortunate fact that, in most instances, the creative teams tasked with making a fun, interactive version of a beloved Hollywood IP weren’t given the time necessary to succeed – to the extent that the ET game from 1982 for the Atari 2600 was famously rushed out by a single person and helped cause the US industry crash. After every crash, however, comes a full system reboot. And it was during the world’s reboot at the turn of the millennium, around the time a particular

... working on a really cutting-edge 3D game called Sacrifice, so I very embarrassingly passed on the project.” David chalks this up as being high on his “list of terrible career decisions”, though it wouldn’t be long before he and his team would be given a second chance. They could even use this pioneering tech to translate the Wachowskis’ sprawling universe more accurately into a videogame.

BEFORE the PHILOSOPHY There Are Several Ways That We Might Explain the Location of the Matrix

ONE
BEFORE THE PHILOSOPHY
UNDERSTANDING THE FILMS

I’m really struggling here. I’m trying to keep up, but I’m losing the plot. There is way too much weird shit going on around here and nothing is going the way it is supposed to go. I mean, doors that go nowhere and everywhere, programs acting like humans, multiplying agents ... Oh when, when will it end?
– SparksE

The Matrix films often left audiences more confused than they had bargained for. Some say that their confusion began with the very first film, and was compounded with each sequel. Others understood the big picture, but found themselves a bit perplexed concerning the details. It’s safe to say that no one understands the films completely – there are always deeper levels to consider. So before we explore the more philosophical aspects of the films, I hope to clarify some of the common points of confusion. But first, I strongly encourage you to watch all three films. There are spoilers ahead.

The Matrix Dreamworld

You mean this isn’t real?
– Neo†

The Matrix is essentially a computer-generated dreamworld. It is the illusion of a world that no longer exists – a world of human technology and culture as it was at the end of the twentieth century. This illusion is pumped into the brains of millions of people who, in reality, are lying fast asleep in slime-filled cocoons. To them this virtual world seems like real life. They go to work, watch their televisions, and pay their taxes, fully believing that they are physically doing these things, when in fact they are doing them “virtually” – within their own minds.

Breaking the Code of the Matrix; Or, Hacking Hollywood

Matrix.mss-1
September 1, 2002

“Breaking the Code of The Matrix; or, Hacking Hollywood to Liberate Film”
William B. Warner©

Amusing Ourselves to Death

The human body is recumbent, eyes are shut, arms and legs are extended and limp, and this body is attached to an intricate technological apparatus. This image regularly recurs in the Wachowski brothers’ 1999 film The Matrix: as when we see humanity arrayed in the numberless “pods” of the power plant; as when we see Neo’s muscles being rebuilt in the infirmary; when the crew members of the Nebuchadnezzar go into their chairs to receive the shunt that allows them to enter the Construct or the Matrix. At the film’s end, Neo’s success is marked by the moment when he awakens from his recumbent posture to kiss Trinity back. The recurrence of the image of the recumbent body gives it iconic force. But what does it mean?

Within this film, the passivity of the recumbent body is a consequence of network architecture: in order to jack humans into the Matrix, the “neural interactive simulation” built by the machines to resemble the “world at the end of the 20th century”, the dross of the human body is left elsewhere, suspended in the cells of the vast power plant constructed by the machines, or back on the rebel hovercraft, the Nebuchadnezzar. The crude cable line inserted into the brains of the human enables the machines to realize the dream of “total cinema”, a 3-D reality utterly absorbing to those receiving the Matrix data feed.[footnote re Barzin] The difference between these recumbent bodies and the film viewers who sit in darkened theaters to enjoy The Matrix is one of degree; these bodies may be a figure for viewers subject to the all-absorbing 24/7 entertainment system being dreamed by the media moguls.

Play Chapter: Video Games and Transmedia Storytelling 1

PLAY CHAPTER: VIDEO GAMES AND TRANSMEDIA STORYTELLING
Geoffrey Long
April 25, 2009
Media in Transition 6, Cambridge, MA
Revision 1.1

ABSTRACT

Although multi-media franchises have long been common in the entertainment industry, the past two years have seen a renaissance of transmedia storytelling as authors such as Joss Whedon and J.J. Abrams have learned the advantages of linking storylines across television, feature films, video games and comic books. Recent video game chapters of transmedia franchises have included Star Wars: The Force Unleashed, Lost: Via Domus and, of course, Enter the Matrix, but compared to comic books and webisodes, video games still remain a largely underutilized component in this emerging art form. This paper will use case studies from the transmedia franchises of Star Wars, Lost, The Matrix, Hellboy, Buffy the Vampire Slayer and others to examine some of the reasons why this might be the case (including cost, market size, time to market, and the impacts of interactivity and duration) and provide some suggestions as to how game makers and storytellers alike might use new trends and technologies to close this gap.

INTRODUCTION

First of all, thank you for coming. My name is Geoffrey Long, and I am the Communications Director and a Researcher for the Singapore-MIT GAMBIT Game Lab, where I've been continuing the research into transmedia storytelling that I began as a Master's student here under Henry Jenkins. If you're interested, the resulting Master's thesis, Transmedia Storytelling: Business Aesthetics and Production at the Jim Henson Company is available for downloading from http://www.geoffreylong.com/thesis.

Mapping the Canadian Video and Computer Game Industry

Mapping the Canadian Video and Computer Game Industry
Nick Dyer-Witheford [email protected]
A talk for the “Digital Poetics and Politics” Summer Institute, Queen’s University, Kingston, Ontario, Aug 4, 2004

1 Introduction

Invented in the 1970s as the whimsical creation of bored Pentagon researchers, video and computer games have over thirty years exploded into the fastest growing of advanced capitalism’s cultural industries. Global revenues soared to a record US$30 billion in 2002 (Snider, 2003), exceeding Hollywood’s box-office receipts. Lara Croft, Pokemon, Counterstrike, Halo and Everquest are icons of popular entertainment in the US, Europe, Japan, and, increasingly, around the planet. This paper, a preliminary report on a three-year SSHRC funded study, describes the Canadian digital play industry, some controversies that attend it and some opportunities for constructive policy intervention. First, however, a quick overview of how the industry works.

How Play Works

Video games are played on a dedicated “console,” like Sony’s PlayStation 2 or Microsoft’s Xbox, connected to a television, or on a portable “hand-held” device, like Nintendo’s Game Boy. Computer games are played on a personal computer (PC). There is also a burgeoning field of wireless and cell phone games. Irrespective of platform, digital game production has four core activities: development, publishing, distribution and licensing. Development, the design of game software, is the industry’s wellspring. No longer the domain of lone-wolf developers, making a commercial game is today a lengthy, costly, and cooperative venture: it lasts twelve months to three years, costs $2 to $6 million, and requires 20 to 100 people.

Infogrames Entertainment, SA

Infogrames Entertainment, SA (IESA) (French pronunciation: [ɛ̃fɔɡʁam]) was an international French holding company headquartered in Lyon, France. It was the owner of Atari, Inc., headquartered in New York City, U.S. and Atari Europe. It was founded in 1983 by Bruno Bonnell and Christophe Sapet using the proceeds from an introductory computer book. Through its subsidiaries, Infogrames produced, published and distributed interactive games for all major video game consoles and computer game platforms.

1 History

1.1 Early history

The founders wanted to christen the company Zboub Système (which can be approximatively translated by Dick System), but were dissuaded by their legal counsel.[4]

... more than $500 million; the objective was to become the world’s leading interactive entertainment publisher.[6] While the company’s debt increased from $55 million in 1999 to $493 million in 2002, the company’s revenue also increased from $246 million to $650 million during the same period.[7]

In 1996 IESA bought Ocean Software for about $100 million,[8] renaming the company as Infogrames UK.[9] In 1997 Philips Media BV was purchased.

In 1998 IESA acquired a majority share of 62.5% in the game distributor OziSoft, which became Infogrames Australia,[10] and in 2002 IESA bought the remaining shares of Infogrames Australia from Sega and other share holders[11] for $3.7 million.[7] In this same year the distributors ABS Multimedia, Arcadia and the Swiss Gamecity GmbH were acquired.[12][13]

In 1999 IESA bought Gremlin Interactive for $40 million, renaming it to Infogrames Sheffield House but it was

Religion and Filosophy in Hypertext: the Matrix Saga

University Master's in Sciences of Religions
HIPERTEXTUALIDAD RELIGIOSA Y FILOSÓFICA: LA SAGA THE MATRIX
RELIGION AND FILOSOPHY IN HYPERTEXT: THE MATRIX SAGA
Master's thesis. Academic year: 2014-2015
Author: Doblado Ruiz-Olivares, Christian Alejandro ( [email protected] )
Supervisor: Francisco Javier Fernández Vallina, Department of Hebrew and Aramaic Studies, Faculty of Philology ( [email protected] )
Descriptors: Matrix, Religion, Philosophy, Hypertext, Semiosphere, Transmedia Narrative, Cinema, Science Fiction, Myth, Wachowski

Abstract: This paper analyzes the religious and philosophical transtextuality contained in both the script of the Matrix saga and the discourse that runs through its plot. It does not limit itself to comparing the clear religious and philosophical symbols in the films with their textual sources, but examines how these form a hypertextual discourse in which the confessions and dogmas of different cultures, religions, philosophies and traditions converge, giving rise to a novel text that is in line with the spiritual and existential concerns of contemporary globalized society, building a contemporary form of myth.

Read Book the Matrix

THE MATRIX PDF, EPUB, EBOOK
Joshua Clover | 96 pages | 12 Jun 2007 | British Film Institute | 9781844570454 | English | London, United Kingdom

The Matrix – Matrix Wiki – Neo, Trinity, the Wachowskis

And the special effects are absolutely amazing, even if similar ones have been used in other movies as a result, and not explained as well. But the movie has plot as well. It has characters that I cared about. From Keanu Reeves' excellent portrayal of Neo, the man trying to come to grips with his own identity, to Laurence Fishburne's mysterious Morpheus, and even the creepy Agents, everyone does a stellar job of making their characters more than just the usual action "hero that kicks butt" and "cannon fodder" roles. I cared about each and every one of the heroes, and hated the villains with a passion. It has a plot, and it has a meaning. Just try it, if you haven't seen the movie before. Watch one of the fight scenes. Then watch the whole movie. There's a big difference in the feeling and excitement of the scenes: sure, they're great as standalones, but the whole thing put together is an experience unlike just about everything else that's come to the theaters. Think about it next time you're watching one of the more brainless action flicks. If you haven't, you're missing out on one of the best films of all time. It isn't just special effects, folks.

The Matrix Explained

The Matrix Explained
http://www.idph.net
February 9, 2004

The Matrix

This is a collection of several articles published on the Internet about the Matrix trilogy.

Since links on the Internet disappear very quickly, I thought it would be interesting to gather, in a single document, the most provocative speculations about the trilogy. Each article includes the link where the original was found.

These references were, for the most part, obtained from Google (http://www.google.com) by searching for documents whose titles contained the words “matrix explained”:

intitle:"matrix explained"

Unfortunately, they are all in English. In Portuguese there are two very good books about the films:

• MATRIX – Bem-vindo ao Deserto do Real, collection edited by William Irwin, Editora Madras
• A Pílula Vermelha: Questões de Ciência, Filosofia e Religião em Matrix, Editora Publifolha

Perhaps we really do live in a Matrix. At least that is what one of the articles in the book “A Pílula Vermelha” says.

I hope you enjoy it ...

Matrix – Explained
by Reshmi
Posted on November 12, 2003 14:39 PM EST

To understand “The Matrix Revolutions” we’re gonna have to go all the way back to the beginning. A time that was shown in the superior Animatrix shorts “The Second Renaissance Parts I & II.” In the beginning there was man. Then man created machines. Designed in man’s likeness the machines served man well, but man’s jealous nature ruined that relationship. When a worker robot killed one of its own abusive masters it was placed on trial for murder and sentenced for destruction.

Synergy of Computer Games and Films

Synergy of Computer Games and Films Computer games and films have had a complicated history together. While some see them as purely separate forms of media and entirely different, others see them as intertwined in both their narratological developments and their ability to enthral their respective audiences. Films have been around for over 100 years with the invention of the Kinetophonograph in the late 1880s [1] with ‘talkies’ (Films with sound rather than silence) occurring in the late 1920s. This has allowed films to have a long time to evolve and become more complex forms of media than the original silent films such as those of Chaplin and Buster Keaton. Films are now part of a much larger experience, allowing viewers an insight into various periods of history as well as various emotional states that actors can portray. They are also worth a huge sum of money with many film productions of recent years costing in the 100s of millions of dollars, and actors commanding fees of 10s of millions to star in the films. In comparison computer games are relatively behind on these developments. Having only been in mainstream culture for the past 30 years, and only to a particularly noticeable degree in the last 10 years, the game media has a lot of catching up to do. Crawford argued that games suffer from ‘movie envy’ [2] in their attempts at being the same as films. It is also arguable that most games have relatively simplistic storylines compared to some films but this will be examined in depth further on.