QUANTUM GRAVITY and the ROLE of CONSCIOUSNESS in PHYSICS: Resolving the Contradictions Between Quantum Mechanics and Relativity

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Near-Infrared Luminosity Relations and Dust Colors L

A&A 578, A47 (2015), DOI: 10.1051/0004-6361/201525817, © ESO 2015, Astronomy & Astrophysics
Obscuration in active galactic nuclei: near-infrared luminosity relations and dust colors
L. Burtscher1, G. Orban de Xivry1, R. I. Davies1, A. Janssen1, D. Lutz1, D. Rosario1, A. Contursi1, R. Genzel1, J. Graciá-Carpio1, M.-Y. Lin1, A. Schnorr-Müller1, A. Sternberg2, E. Sturm1, and L. Tacconi1
1 Max-Planck-Institut für extraterrestrische Physik, Postfach 1312, Gießenbachstr., 85741 Garching, Germany, e-mail: [email protected]
2 Raymond and Beverly Sackler School of Physics & Astronomy, Tel Aviv University, 69978 Ramat Aviv, Israel
Received 5 February 2015 / Accepted 5 April 2015
ABSTRACT We combine two approaches to isolate the AGN luminosity at near-IR wavelengths and relate the near-IR pure AGN luminosity to other tracers of the AGN. Using integral-field spectroscopic data of an archival sample of 51 local AGNs, we estimate the fraction of non-stellar light by comparing the nuclear equivalent width of the stellar 2.3 µm CO absorption feature with the intrinsic value for each galaxy. We compare this fraction to that derived from a spectral decomposition of the integrated light in the central arcsecond and find them to be consistent with each other. Using our estimates of the near-IR AGN light, we find a strong correlation with presumably isotropic AGN tracers. We show that a significant offset exists between type 1 and type 2 sources in the sense that type 1 sources are 7 (10) times brighter in the near-IR at $\log L^{\mathrm{MIR}}_{\mathrm{AGN}} = 42.5$ ($\log L^{\mathrm{X}}_{\mathrm{AGN}} = 42.5$). -

Wolfgang Pauli

WOLFGANG PAULI
Physique moderne et Philosophie, 12 May 1999, by Wolfgang Pauli
Le Cas Kepler, précédé de "Les conceptions philosophiques de Wolfgang Pauli", 2 October 2002, by Wolfgang Pauli and Werner Heisenberg
Le monde quantique et la conscience : Sommes-nous des robots ou les acteurs de notre propre vie ?, 9 May 2016, by Henry Stapp and Jean Staune
Begegnungen. Albert Einstein - Karl Heim - Hermann Oberth - Wolfgang Pauli - Walter Heitler - Max Born - Werner Heisenberg - Max von…
[Atom and Archetype: The Pauli/Jung Letters, 1932-1958] (By: Wolfgang Pauli) [published: June, 2001], 7 June 2001, by Wolfgang Pauli
[Deciphering the Cosmic Number: The Strange Friendship of Wolfgang Pauli and Carl Jung] (By: Arthur I. Miller) [published: May, 2009], 29 May 2009, by Arthur I. Miller
[(Atom and Archetype: The Pauli/Jung Letters, 1932-1958)] [Author: Wolfgang Pauli] published on (June, 2001), 7 June 2001, by Wolfgang Pauli
Wolfgang Pauli: Das Gewissen der Physik (German Edition) Softcover reprint of edition by Enz, Charles P. (2013) Paperback, 1709, by Charles P. Enz
Wave Mechanics: Volume 5 of Pauli Lectures on Physics (Dover Books on Physics) by Wolfgang Pauli (2000) Paperback, 2000
Electrodynamics: Volume 1 of Pauli Lectures on Physics (Dover Books on Physics) by Wolfgang Pauli (2000) Paperback, 2000
Journal of Consciousness Studies, Controversies in Science & the Humanities: Wolfgang Pauli's Ideas on Mind and Matter, Vol 13, No. 3,…, 2006, by Joseph A. (ed.) Goguen
Atom and Archetype: The Pauli/Jung Letters, 1932-1958 by Jung, C. -

The Universe, Life and Everything…

Our current understanding of our world is nearly 350 years old. It stems from the ideas of Descartes and Newton and has brought us many great things, including modern science and increases in wealth, health and everyday living standards. Furthermore, it is so engrained in our daily lives that we have forgotten it is a paradigm, not fact. However, there are some problems with it: first, there is no satisfactory explanation for why we have consciousness and experience meaning in our lives. Second, modern-day physics tells us that observations depend on characteristics of the observer at the large, cosmic and small, subatomic scales. Third, the ongoing humanitarian and environmental crises show us that our world is vastly interconnected. Our understanding of reality is expanding to incorporate these issues. In The Universe, Life and Everything... Dialogues on our Changing Understanding of Reality, some of the scholars at the forefront of this change discuss the direction it is taking and its urgency.
Sarah Durston is Professor of Developmental Disorders of the Brain at the University Medical Centre Utrecht, and was at the Netherlands Institute for Advanced Study in 2016/2017. Ton Baggerman is an economic psychologist and psychotherapist in Tilburg.
ISBN 978-94-629-8740-1, AUP.nl
The Universe, Life and Everything… Dialogues on our Changing Understanding of Reality. Sarah Durston and Ton Baggerman. AUP
Contact information for authors: Sarah Durston: [email protected]; Ton Baggerman: [email protected]
Cover design: Suzan Beijer grafisch ontwerp, Amersfoort. Lay-out: Crius Group, Hulshout. Amsterdam University Press English-language titles are distributed in the US and Canada by the University of Chicago Press. -

Messier Objects

Messier Objects. From the Stocker Astroscience Center at Florida International University, Miami, Florida
The Messier Project. Main contributors: • Daniel Puentes • Steven Revesz • Bobby Martinez • Gabriel Salazar • Riya Gandhi • Dr. James Webb – Director, Stocker Astroscience Center • All images reduced and combined using MIRA image processing software (Mirametrics).
What are Messier Objects? • Messier objects are a list of astronomical sources compiled by Charles Messier, an 18th and early 19th century astronomer. He created a list of distracting objects to avoid while comet hunting. This list now contains 110 objects, many of which are among the most famous astronomical bodies known. The list contains planetary nebulae, star clusters, and other galaxies. - Bobby Martinez
The Telescope. The telescope used to take these images is an Astronomical Consultants and Equipment (ACE) 24-inch (0.61-meter) Ritchey-Chretien reflecting telescope. It has a focal ratio of f/6.2 and is supported on a structure independent of the building that houses it. It is equipped with a Finger Lakes 1k×1k CCD camera cooled to -30 °C at the Cassegrain focus. It is equipped with dual filter wheels, the first containing UBVRI scientific filters and the second RGBL color filters.
Messier 1. Found 6,500 light years away in the constellation of Taurus, the Crab Nebula (known as M1) is a supernova remnant. The original supernova that formed the Crab Nebula was observed by Chinese, Japanese and Arab astronomers in 1054 AD as an incredibly bright “guest star” which was visible for over twenty-two months. The supernova that produced the Crab Nebula is thought to have been an evolved star roughly ten times more massive than the Sun. -

THE 1000 BRIGHTEST HIPASS GALAXIES: H I PROPERTIES B

The Astronomical Journal, 128:16–46, 2004 July. © 2004. The American Astronomical Society. All rights reserved. Printed in U.S.A.
THE 1000 BRIGHTEST HIPASS GALAXIES: H I PROPERTIES
B. S. Koribalski, L. Staveley-Smith, V. A. Kilborn, S. D. Ryder, R. C. Kraan-Korteweg, E. V. Ryan-Weber, R. D. Ekers, H. Jerjen, P. A. Henning, M. E. Putman, M. A. Zwaan, W. J. G. de Blok, M. R. Calabretta, M. J. Disney, R. F. Minchin, R. Bhathal, P. J. Boyce, M. J. Drinkwater, K. C. Freeman, B. K. Gibson, A. J. Green, R. F. Haynes, S. Juraszek, M. J. Kesteven, P. M. Knezek, S. Mader, M. Marquarding, M. Meyer, J. R. Mould, T. Oosterloo, J. O’Brien, R. M. Price, E. M. Sadler, A. Schröder, I. M. Stewart, F. Stootman, M. Waugh, B. E. Warren, R. L. Webster, and A. E. Wright
Received 2002 October 30; accepted 2004 April 7
ABSTRACT We present the HIPASS Bright Galaxy Catalog (BGC), which contains the 1000 H I brightest galaxies in the southern sky as obtained from the H I Parkes All-Sky Survey (HIPASS). The selection of the brightest sources is based on their H I peak flux density ($S_{\mathrm{peak}} \gtrsim 116$ mJy) as measured from the spatially integrated HIPASS spectrum. The derived H I masses range from $\sim 10^{7}$ to $4 \times 10^{10}\,M_{\odot}$. -

Stellar Kinematics, Dynamical Models and Determination of

UNIVERSIDADE FEDERAL DO RIO GRANDE DO SUL, GRADUATE PROGRAM IN PHYSICS
Stellar Kinematics, Dynamical Models and Determination of Supermassive Black Hole Masses
Daniel Alf Drehmer
Thesis carried out under the supervision of Professor Dr. Thaisa Storchi Bergmann and presented to the Graduate Program in Physics of the Institute of Physics of UFRGS in partial fulfillment of the requirements for the degree of Doctor of Science. Porto Alegre, March 2015
Acknowledgments. I would like to thank everyone who contributed in some way to this work, and in particular I thank: • Prof. Dr. Thaisa Storchi-Bergmann for her competent supervision. • Professors Dr. Fabrício Ferrari of the Universidade Federal do Rio Grande and Dr. Rogemar Riffel of the Universidade Federal de Santa Maria, and Dr. Michele Cappellari of the University of Oxford, whose collaborations were fundamental to this work. • All the professors of the Department of Astronomy of the Institute of Physics of the Universidade Federal do Rio Grande do Sul who contributed to my education. • CNPq for funding this work. • My parents Ingon and Selia and my siblings Neimar and Carla for their support. Daniel Alf Drehmer, Universidade Federal do Rio Grande do Sul, March 2015
Abstract. The focus of this work is to study the influence of nuclear supermassive black holes (SMBHs) on the stellar dynamics and kinematics of the central region of galaxies and to determine the masses of these SMBHs. -

Spiral Galaxies, Elliptical Galaxies, Irregular Galaxies, Dwarf Galaxies, Peculiar/Interacting Galaxies Spiral Galaxies

Lecture 33: Announcements
1) Pick up graded hwk 5. Good job: Jessica, Jessica, and Elizabeth for a 100% score on hwk 5 and the other 25% of the class with an A.
2) Article and homework 7 were posted on class website on Monday (Apr 18). Due on Mon Apr 25.
3) Reading Assignment for Quiz Wed Apr 27: Ch 23, Cosmic Perspectives: The Beginning of Time
4) Exam moved to Wed May 4
Lecture 33: Galaxy Formation and Evolution
Several topics for galaxy evolution have already been covered in Lectures 2, 3, 4, 14, 15, 16. You should refer to your in-class notes for these topics, which include:
- Types of galaxies (barred spirals, unbarred spirals, ellipticals, irregulars)
- The Local Group of Galaxies, the Virgo and Coma Clusters of galaxies
- How images of distant galaxies allow us to look back in time
- The Hubble Ultra Deep Field (HUDF)
- The Doppler blueshift (Lectures 15-16)
- Tracing stars, dust, gas via observations at different wavelengths (Lectures 15-16)
In next lectures, we will cover
- Galaxy Classification. The Hubble Sequence
- Mapping the Distance of Galaxies
- Mapping the Visible Constituents of Galaxies: Stars, Gas, Dust
- Understanding Galaxy Formation and Evolution
- Galaxy Interactions: Nearby Galaxies, the Milky Way, Distant Galaxies
- Mapping the Dark Matter in Galaxies and in the Universe
- The Big Bang
- Fates of our Universe and Dark Energy
Galaxy Classification
Galaxy: a collection of a few times $10^{8}$ to $10^{12}$ stars orbiting a common center and bound by gravity. Made of gas, stars, dust, dark matter. There are many types of galaxies and they can be classified according to different criteria. -

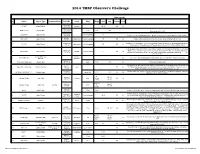

2014 Observers Challenge List

2014 TMSP Observer's Challenge Atlas page #s # Object Object Type Common Name RA, DEC Const Mag Mag.2 Size Sep. U2000 PSA 18h31m25s 1 IC 1287 Bright Nebula Scutum 20'.0 295 67 -10°47'45" 18h31m25s SAO 161569 Double Star 5.77 9.31 12.3” -10°47'45" Near center of IC 1287 18h33m28s NGC 6649 Open Cluster 8.9m Integrated 5' -10°24'10" Can be seen in 3/4d FOV with above. Brightest star is 13.2m. Approx 50 stars visible in Binos 18h28m 2 NGC 6633 Open Cluster Ophiuchus 4.6m integrated 27' 205 65 Visible in Binos and is about the size of a full Moon, brightest star is 7.6m +06°34' 17h46m18s 2x diameter of a full Moon. Try to view this cluster with your naked eye, binos, and a small scope. 3 IC 4665 Open Cluster Ophiuchus 4.2m Integrated 60' 203 65 +05º 43' Also check out “Tweedle-dee and Tweedle-dum” to the east (IC 4756 and NGC 6633) A loose open cluster with a faint concentration of stars in a rich field, contains about 15-20 stars. 19h53m27s Brightest star is 9.8m, 5 stars 9-11m, remainder about 12-13m. This is a challenge object to 4 Harvard 20 Open Cluster Sagitta 7.7m integrated 6' 162 64 +18°19'12" improve your observation skills. Can you locate the miniature coathanger close by at 19h 37m 27s +19d? Constellation star Corona 5 Corona Borealis 55 Trace the 7 stars making up this constellation, observe and list the colors of each star asterism Borealis 15H 32' 55” Theta Corona Borealis Double Star 4.2m 6.6m .97” 55 Theta requires about 200x +31° 21' 32” The direction our Sun travels in our galaxy. -

U·M·I University Microfilms International, a Bell & Howell Information Company, 300 North Zeeb Road, Ann Arbor, MI 48106-1346 USA, 313/761-4700, 800/521-0600

The dynamics of satellite galaxies. Item Type text; Dissertation-Reproduction (electronic) Authors Zaritsky, Dennis Fabian. Publisher The University of Arizona. Rights Copyright © is held by the author. Digital access to this material is made possible by the University Libraries, University of Arizona. Further transmission, reproduction or presentation (such as public display or performance) of protected items is prohibited except with permission of the author. Download date 07/10/2021 09:44:33 Link to Item http://hdl.handle.net/10150/185639 INFORMATION TO USERS This manuscript has been reproduced from the microfilm master. UMI films the text directly from the original or copy submitted. Thus, some thesis and dissertation copies are in typewriter face, while others may be from any type of computer printer. The quality of this reproduction is dependent upon the quality of the copy submitted. Broken or indistinct print, colored or poor quality illustrations and photographs, print bleed through, substandard margins, and improper alignment can adversely affect reproduction. In the unlikely event that the author did not send UMI a complete manuscript and there are missing pages, these will be noted. Also, if unauthorized copyright material had to be removed, a note will indicate the deletion. Oversize materials (e.g., maps, drawings, charts) are reproduced by sectioning the original, beginning at the upper left-hand corner and continuing from left to right in equal sections with small overlaps. Each original is also photographed in one exposure and is included in reduced form at the back of the book. Photographs included in the original manuscript have been reproduced xerographically in this copy. -

Astronomers Map Massive Structure Beyond Laniakea Supercluster 13 July 2020

Astronomers map massive structure beyond Laniakea Supercluster
13 July 2020
South Pole Wall. Credit: University of Hawaii at Manoa
For the past decade, an international team of astronomers, led in part by Brent Tully at the University of Hawaiʻi Institute for Astronomy, has been mapping the distribution of galaxies around the Milky Way. They have discovered an immense structure beyond Laniakea, an immense supercluster of galaxies, including our own.
… the Milky Way. Along the arm, galaxies are slowly moving toward the South Pole, and from there, across a part of the sky obscured from Earth by the Milky Way toward the dominant structure in the nearby universe, the Shapley connection. "We wonder if the South Pole Wall is much bigger than what we see. What we have mapped stretches across the full domain of the region we have surveyed. We are early explorers of the cosmos, extending our maps into unknown territory," described Tully. The team's research was published in Astrophysical Journal.
Throughout the last 40 years, there has been a growing appreciation of patterns in the distribution of galaxies in the Universe, reminiscent of geographic features like mountain ranges and island archipelagos. The Milky Way galaxy, with its 100 billion stars, is part of the small Local Group of galaxies, which in turn is a suburb of the Virgo cluster with thousands of galaxies. The Virgo cluster in turn is an outer component of an even larger conglomeration of many rich clusters of galaxies, collectively called the "Great Attractor" because of its immense gravitational pull. In 2014, the team mapped out the Laniakea Supercluster, the bundling of a hundred thousand galaxies over an even larger region, spanning 500 million light -

Perseus Perseus

Latin name: Perseus (Per)
German name: Perseus
Atlas (2000.0) charts: Cambridge Star Atlas 2, 3; Sky Atlas 1, 4, 5
Culmination at midnight: 7 November
Neighbouring constellations: And, Ari, Aur, Cam, Cas, Tau, Tri
Declination range: 30° ... 59°
Area on the sky: 615 square degrees
Mythology and history: Acrisius was the king of Argos and had a beautiful daughter named Danaë. The Oracle of Delphi prophesied to him that his grandson would one day kill him. Fearing this prophecy, Acrisius had his daughter Danaë locked in a tower so that she could not receive any man. Acrisius could not know, of course, that Zeus himself wished to approach the lovely Danaë. Zeus did so, taking the form of a golden rain that penetrated the walls, joints, cracks and windows. Danaë bore a son, who was called "the one born of flowing gold" or "the gold-born": Perseus. This did not stay hidden from Acrisius for long, and he had his daughter and grandson nailed into a chest, which was then thrown into the sea. Danaë and Perseus drifted on the sea for a long time until they finally washed ashore on the rocky island of Seriphos and were freed from their prison. The island was ruled by Polydectes, who courted the beautiful Danaë for years, but in vain. Either to force Danaë into marriage after all or to get Perseus out of the way, he sent Danaë's son, by then grown into a young man, on a deadly errand: to fetch the head of Medusa. Medusa was one of the Gorgons, the three daughters of Phorcys, who had snakes for hair and could cast a petrifying gaze. -

Celebrating the Wonder of the Night Sky

Celebrating the Wonder of the Night Sky
The heavens proclaim the glory of God. The skies display his craftsmanship. Psalm 19:1 NLT
Light Year Calculation: Simple!
[Speed] 300 000 km/s [Time] × 60 s × 60 min × 24 h × 365.25 d [Distance] ≈ 10 000 000 000 000 km ≈ 63 000 AU
Milky Way Galaxy
Hyades Star Cluster = 151 ly
Barnard 68 Nebula = 400 ly
Pleiades Star Cluster = 444 ly
Coalsack Nebula = 600 ly
Betelgeuse Star = 643 ly
Helix Nebula = 700 ly
Witch Head Nebula = 900 ly
Spirograph Nebula = 1 100 ly
Orion Nebula = 1 344 ly
Dumbbell Nebula = 1 360 ly
Flame Nebula = 1 400 ly
Veil Nebula = 1 470 ly
Horsehead Nebula = 1 500 ly
Sh2-106 Nebula = 2 000 ly
Twin Jet Nebula = 2 100 ly
Ring Nebula = 2 300 ly
NGC 2264 Nebula = 2 700 ly
Cone Nebula = 2 700 ly
Eskimo Nebula = 2 870 ly
Sh2-71 Nebula = 3 200 ly
Cat’s Eye Nebula = 3 300 ly
IRAS 23166+1655 Nebula = 3 400 ly
Butterfly Nebula = 3 800 ly
Lagoon Nebula = 4 100 ly
Rotten Egg Nebula = 4 200 ly
Trifid Nebula = 5 200 ly
Monkey Head Nebula = 5 200 ly
Lobster Nebula = 5 500 ly
Pismis 24 Star Cluster = 5 500 ly
Omega Nebula = 6 000 ly
Crab Nebula = 6 500 ly
RS Puppis Variable Star = 6 500 ly
Eagle Nebula = 7 000 ly
Eagle Nebula ‘Pillars of Creation’ = 7 000 ly
SN1006 Supernova = 7 200 ly
Red Spider Nebula = 8 000 ly
Engraved Hourglass Nebula
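
The light-year arithmetic in the slide excerpt above (speed × time ≈ distance) can be checked with a short script. This is only a minimal sketch and not part of the original slides: it uses the rounded 300 000 km/s and the 365.25-day year from the text, while the kilometres-per-AU constant (149 597 870.7 km, the IAU definition) and the function names are assumptions added for illustration.

```python
# Rough check of the light-year figures quoted in the slides.
# Values taken from the text: 300 000 km/s and a 365.25-day year.
SPEED_OF_LIGHT_KM_S = 300_000
SECONDS_PER_YEAR = 60 * 60 * 24 * 365.25

# Assumption (not stated in the slides): 1 AU = 149 597 870.7 km (IAU definition).
KM_PER_AU = 149_597_870.7


def light_year_km(speed_km_s: float = SPEED_OF_LIGHT_KM_S) -> float:
    """Distance light travels in one year, in kilometres."""
    return speed_km_s * SECONDS_PER_YEAR


def light_year_au(speed_km_s: float = SPEED_OF_LIGHT_KM_S) -> float:
    """The same distance expressed in astronomical units."""
    return light_year_km(speed_km_s) / KM_PER_AU


if __name__ == "__main__":
    print(f"1 ly is about {light_year_km():.3e} km")
    print(f"1 ly is about {light_year_au():,.0f} AU")
```

With these inputs the distance comes out a little under 10 000 000 000 000 km and a little over 63 000 AU, in line with the rounded figures on the slide.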