Defeating Last-Level Cache Side Channel Attacks in Cloud Computing

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Cryptanalysis of a Reduced Version of the Block Cipher E2

Cryptanalysis of a Reduced Version of the Block Cipher E2 Mitsuru Matsui and Toshio Tokita Information Technology R&D Center Mitsubishi Electric Corporation 5-1-1, Ofuna, Kamakura, Kanagawa, 247, Japan [email protected], [email protected] Abstract. This paper deals with truncated differential cryptanalysis of the 128-bit block cipher E2, which is an AES candidate designed and submitted by NTT. Our analysis is based on byte characteristics, where a difference of two bytes is simply encoded into one bit information “0” (the same) or “1” (not the same). Since E2 is a strongly byte-oriented algorithm, this bytewise treatment of characteristics greatly simplifies a description of its probabilistic behavior and noticeably enables us an analysis independent of the structure of its (unique) lookup table. As a result, we show a non-trivial seven round byte characteristic, which leads to a possible attack of E2 reduced to eight rounds without IT and FT by a chosen plaintext scenario. We also show that by a minor modification of the byte order of output of the round function — which does not reduce the complexity of the algorithm nor violates its design criteria at all —, a non-trivial nine round byte characteristic can be established, which results in a possible attack of the modified E2 reduced to ten rounds without IT and FT, and reduced to nine rounds with IT and FT. Our analysis does not have a serious impact on the full E2, since it has twelve rounds with IT and FT; however, our results show that the security level of the modified version against differential cryptanalysis is lower than the designers’ estimation. -

Historical Ciphers

ECE 646 - Lecture 6: Historical Ciphers

Required Reading
• W. Stallings, Cryptography and Network Security, Chapter 2, Classical Encryption Techniques
• A. Menezes et al., Handbook of Applied Cryptography, Chapter 7.3 Classical ciphers and historical development

Why (not) to study historical ciphers?
AGAINST
• Not similar to modern ciphers
• Long abandoned
FOR
• Basic components became a part of modern ciphers
• Under special circumstances modern ciphers can be reduced to historical ciphers
• Influence on world events
• The only ciphers you can break!

Secret Writing
• Steganography (hidden messages)
• Cryptography (encrypted messages)
  • Substitution Transformations
    • Codes (replace words)
    • Substitution Ciphers (replace letters)
  • Transposition Ciphers (change the order of letters)

Selected world events affected by cryptology
• 1586 - trial of Mary Queen of Scots - substitution cipher
• 1917 - Zimmermann telegram, America enters World War I
• 1939-1945 - Battle of England, Battle of Atlantic, D-day - ENIGMA machine cipher
• 1944 - world’s first computer, Colossus - German Lorenz machine cipher
• 1950s - operation Venona - breaking ciphers of Soviet spies stealing secrets of the U.S. atomic bomb - one-time pad

Mary, Queen of Scots
• Scottish Queen, a cousin of Elisabeth I of England
• Forced to flee Scotland by uprising against her and her husband
• Treated as a candidate to the throne of England by many British Catholics unhappy about a reign of Elisabeth I, a Protestant
• Imprisoned by Elisabeth for 19 years
• Involved in several plots to assassinate Elisabeth
• Put on trial for treason by a court of about 40 noblemen, including Catholics, after being implicated in the Babington Plot by her own letters sent from prison to her co-conspirators in the encrypted form

Mary, Queen of Scots - cont.

The QARMA Block Cipher Family

The QARMA Block Cipher Family: Almost MDS Matrices Over Rings With Zero Divisors, Nearly Symmetric Even-Mansour Constructions With Non-Involutory Central Rounds, and Search Heuristics for Low-Latency S-Boxes. Roberto Avanzi, Qualcomm Product Security, Munich, Germany [email protected], [email protected]

Abstract. This paper introduces QARMA, a new family of lightweight tweakable block ciphers targeted at applications such as memory encryption, the generation of very short tags for hardware-assisted prevention of software exploitation, and the construction of keyed hash functions. QARMA is inspired by reflection ciphers such as PRINCE, to which it adds a tweaking input, and MANTIS. However, QARMA differs from previous reflector constructions in that it is a three-round Even-Mansour scheme instead of a FX-construction, and its middle permutation is non-involutory and keyed. We introduce and analyse a family of Almost MDS matrices defined over a ring with zero divisors that allows us to encode rotations in its operation while maintaining the minimal latency associated to {0, 1}-matrices. The purpose of all these design choices is to harden the cipher against various classes of attacks. We also describe new S-Box search heuristics aimed at minimising the critical path. QARMA exists in 64- and 128-bit block sizes, where block and tweak size are equal, and keys are twice as long as the blocks. We argue that QARMA provides sufficient security margins within the constraints determined by the mentioned applications, while still achieving best-in-class latency. Implementation results on a state-of-the-art manufacturing process are reported.

On Recent Attacks Against Cryptographic Hash Functions

On recent attacks against Cryptographic Hash Functions
Martin Ekerå & Henrik Ygge

Outline
‣ First part
  ‣ Preliminaries
  ‣ Which cryptographic hash functions exist?
  ‣ What degree of security do they offer?
  ‣ An introduction to Wang’s attack
‣ Second part
  ‣ Wang’s attack applied to MD5
  ‣ Demo

Part I

Operators
Symbol    Meaning
x ⊞ y     Addition modulo 2^n
x ⊟ y     Subtraction modulo 2^n
x ⊕ y     Exclusive OR
x ⋀ y     Bitwise AND
x ⋁ y     Bitwise OR
¬ x       The negation of x.
x ≪ s     Shifting of x by s bits to the left.
x ⋘ s     Rotation of x by s bits to the left.

Bitwise Functions
Function        Definition
IF (x, y, z)    (x ⋀ y) ⋁ ((¬ x) ⋀ z)
XOR (x, y, z)   x ⊕ y ⊕ z
MAJ (x, y, z)   (x ⋀ y) ⋁ (y ⋀ z) ⋁ (z ⋀ x)
XNO (x, y, z)   y ⊕ ((¬ z) ⋁ x)
‣ The functions above are all bitwise.

Hash Functions
‣ A hash function maps elements from a finite or infinite domain, into elements of a fixed size domain.

Attacks on Hash Functions
‣ Collision attack: Find m and m’ ≠ m such that H(m) = H(m’).
‣ First pre-image attack: Given h find m such that h = H(m).
‣ Second pre-image attack: Given m find m’ ≠ m such that H(m) = H(m’).

Attack Complexities
‣ Collision attack: Naïve complexity O(2^(n/2)) due to the birthday paradox.
‣ First pre-image attack: Naïve complexity O(2^n)
‣ Second pre-image attack: Naïve complexity O(2^n)

Cryptographic Hash Functions
‣ It is desirable for a cryptographic hash function to be collision resistant, first pre-image resistant and second pre-image resistant.
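The naïve collision bound quoted in the slides can be illustrated with a small experiment. The sketch below is not from the slides: it assumes a toy hash built by truncating SHA-256 to n bits (the helper names `truncated_hash` and `find_collision` are hypothetical), and it searches for a collision by remembering every hash value seen so far. For n = 24 one would expect a collision after a number of messages on the order of 2^12, far fewer than the 2^24 possible outputs.

```python
import hashlib
import itertools


def truncated_hash(message: bytes, n_bits: int) -> int:
    """Toy n-bit hash (an assumption for this demo): top n_bits of SHA-256."""
    digest = int.from_bytes(hashlib.sha256(message).digest(), "big")
    return digest >> (256 - n_bits)


def find_collision(n_bits: int):
    """Birthday search: hash distinct messages until two share a hash value.

    Returns the two colliding messages and the number of messages hashed.
    """
    seen = {}  # hash value -> first message that produced it
    for counter in itertools.count():
        message = str(counter).encode()
        h = truncated_hash(message, n_bits)
        if h in seen:
            return seen[h], message, counter + 1
        seen[h] = message


m1, m2, tries = find_collision(24)
print(f"collision after {tries} messages: {m1!r} and {m2!r}")
```

The search trades memory for time: it stores one table entry per message tried, which is what makes the square-root cost achievable in this simple form.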

Freebsd File Formats Manual Libarchive-Formats (5)

libarchive-formats (5) FreeBSD File Formats Manual libarchive-formats (5)

NAME
libarchive-formats - archive formats supported by the libarchive library

DESCRIPTION
The libarchive(3) library reads and writes a variety of streaming archive formats. Generally speaking, all of these archive formats consist of a series of “entries”. Each entry stores a single file system object, such as a file, directory, or symbolic link. The following provides a brief description of each format supported by libarchive, with some information about recognized extensions or limitations of the current library support. Note that just because a format is supported by libarchive does not imply that a program that uses libarchive will support that format. Applications that use libarchive specify which formats they wish to support, though many programs do use libarchive convenience functions to enable all supported formats.

Tar Formats
The libarchive(3) library can read most tar archives. However, it only writes POSIX-standard “ustar” and “pax interchange” formats. All tar formats store each entry in one or more 512-byte records. The first record is used for file metadata, including filename, timestamp, and mode information, and the file data is stored in subsequent records. Later variants have extended this by either appropriating undefined areas of the header record, extending the header to multiple records, or by storing special entries that modify the interpretation of subsequent entries.

gnutar
The libarchive(3) library can read GNU-format tar archives. It currently supports the most popular GNU extensions, including modern long filename and linkname support, as well as atime and ctime data. The libarchive library does not support multi-volume archives, nor the old GNU long filename format.
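The 512-byte record layout described for tar formats can be inspected directly. The following is a minimal sketch that uses Python's standard `tarfile` module rather than libarchive itself (an assumption made to keep the example self-contained); the helper name `build_ustar_archive` and the file name `hello.txt` are hypothetical. It writes a one-entry ustar archive in memory, then reads the first record as metadata and the second as file data.

```python
import io
import tarfile


def build_ustar_archive(name: str, payload: bytes) -> bytes:
    """Write a single-entry POSIX ustar archive into memory and return its bytes."""
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w", format=tarfile.USTAR_FORMAT) as archive:
        info = tarfile.TarInfo(name=name)
        info.size = len(payload)
        archive.addfile(info, io.BytesIO(payload))
    return buffer.getvalue()


payload = b"hello, tar\n"
raw = build_ustar_archive("hello.txt", payload)

# First 512-byte record: entry metadata (the header).
header = raw[:512]
entry_name = header[:100].rstrip(b"\0").decode()  # name field occupies bytes 0..99
magic = header[257:262]                           # "ustar" marker at offset 257

# Second 512-byte record: file data, padded with NUL bytes to a full record.
data_record = raw[512:1024]

print(entry_name, magic, data_record[:len(payload)])
```

Because every entry is rounded up to whole records, even an 11-byte file occupies a full 512-byte data record after its header.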

The Quasigroup Block Cipher and Its Analysis, Matthew J. Battey, University of Nebraska at Omaha

University of Nebraska at Omaha, DigitalCommons@UNO, Student Work, 5-2014

The Quasigroup Block Cipher and its Analysis
Matthew J. Battey, University of Nebraska at Omaha

Follow this and additional works at: https://digitalcommons.unomaha.edu/studentwork
Part of the Computer Sciences Commons

Recommended Citation: Battey, Matthew J., "The Quasigroup Block Cipher and its Analysis" (2014). Student Work. 2892. https://digitalcommons.unomaha.edu/studentwork/2892

This Thesis is brought to you for free and open access by DigitalCommons@UNO. It has been accepted for inclusion in Student Work by an authorized administrator of DigitalCommons@UNO. For more information, please contact [email protected].

The Quasigroup Block Cipher and its Analysis. A Thesis Presented to the Department of Computer Science and the Faculty of the Graduate College, University of Nebraska, In partial satisfaction of the requirements for the degree of Masters of Science by Matthew J. Battey, May, 2014. Supervisory Committee: Abhishek Parakh, Co-Chair; Haifeng Guo, Co-Chair; Kenneth Dick; Qiuming Zhu.

UMI Number: 1554776. All rights reserved. INFORMATION TO ALL USERS: The quality of this reproduction is dependent upon the quality of the copy submitted. In the unlikely event that the author did not send a complete manuscript and there are missing pages, these will be noted. Also, if material had to be removed, a note will indicate the deletion. UMI 1554776. Published by ProQuest LLC (2014). Copyright in the Dissertation held by the Author. Microform Edition © ProQuest LLC. All rights reserved. This work is protected against unauthorized copying under Title 17, United States Code. ProQuest LLC. 789 East Eisenhower Parkway P.O.

Advanced Meet-In-The-Middle Preimage Attacks: First Results on Full Tiger, and Improved Results on MD4 and SHA-2

Advanced Meet-in-the-Middle Preimage Attacks: First Results on Full Tiger, and Improved Results on MD4 and SHA-2 Jian Guo1, San Ling1, Christian Rechberger2, and Huaxiong Wang1 1 Division of Mathematical Sciences, School of Physical and Mathematical Sciences, Nanyang Technological University, Singapore 2 Dept. of Electrical Engineering ESAT/COSIC, K.U.Leuven, and Interdisciplinary Institute for BroadBand Technology (IBBT), Kasteelpark Arenberg 10, B–3001 Heverlee, Belgium. [email protected]

Abstract. We revisit narrow-pipe designs that are in practical use, and their security against preimage attacks. Our results are the best known preimage attacks on Tiger, MD4, and reduced SHA-2, with the result on Tiger being the first cryptanalytic shortcut attack on the full hash function. Our attacks run in time 2^188.8 for finding preimages, and 2^188.2 for second-preimages. Both have memory requirement of order 2^8, which is much less than in any other recent preimage attacks on reduced Tiger. Using pre-computation techniques, the time complexity for finding a new preimage or second-preimage for MD4 can now be as low as 2^78.4 and 2^69.4 MD4 computations, respectively. The second-preimage attack works for all messages longer than 2 blocks. To obtain these results, we extend the meet-in-the-middle framework recently developed by Aoki and Sasaki in a series of papers. In addition to various algorithm-specific techniques, we use a number of conceptually new ideas that are applicable to a larger class of constructions. Among them are (1) incorporating multi-target scenarios into the MITM framework, leading to faster preimages from pseudo-preimages, (2) a simple precomputation technique that allows for finding new preimages at the cost of a single pseudo-preimage, and (3) probabilistic initial structures, to reduce the attack time complexity.

DIN/ANSI Shark Line Material Specific Application Taps New Products 2017 SHARK

DIN/ANSI Shark Line Material Specific Application Taps, New Products 2017

INTRODUCTION
Dormer brand material specific application-based ranges of DIN ANSI Shark Taps offer high performance and process security. Shark Line taps are easily recognizable by their color ring coding, denoting recommendation for use on specific materials.

FEATURES AND BENEFITS
• COLOR RING CODING: The color ring on the tool shank identifies suitability for specific materials and enables quick and easy tool selection.
• ADVANCED GEOMETRY: Significant reduction in axial forces and torque compared to conventional taps. This ensures problem-free threading of blind and through holes in the selected material.
• EDGE TREATMENT (Red, Yellow, Blue Shark): Spiral flute taps incorporate a special edge treatment to increase strength and reduce the chance of micro-chipping on the cutting edges. This considerably improves performance and tool life.
• DIN/ANSI STANDARD: Standard ANSI shank and square with DIN overall length, for extra reach and compatibility with Inch Standard Tap Holding.

SHARK LINE MATERIAL
Shark taps are manufactured from a unique powder metallurgy tool steel different from any other HSS-E-PM. This provides an unbeatable combination of toughness and edge strength, allowing the taps to perform at higher cutting temperatures while offering excellent performance and longer tool life.
• HIGHER IMPACT TOUGHNESS

GEOMETRY AND CHAMFER
Thread geometry with optimized form generates:
• Low torque
• Excellent threads at both high and low speeds
• Superior surface finish

CDE: Run Any Linux Application On-Demand Without Installation

CDE: Run Any Linux Application On-Demand Without Installation. Philip J. Guo, Stanford University [email protected]

Abstract. There is a huge ecosystem of free software for Linux, but since each Linux distribution (distro) contains a different set of pre-installed shared libraries, filesystem layout conventions, and other environmental state, it is difficult to create and distribute software that works without hassle across all distros. Online forums and mailing lists are filled with discussions of users’ troubles with compiling, installing, and configuring Linux software and their myriad of dependencies. To address this ubiquitous problem, we have created an open-source tool called CDE that automatically packages up the Code, Data, and Environment required to run a set of x86-Linux programs on other x86-Linux machines. Creating a CDE package is as simple as running the target application under CDE’s monitoring, and executing a CDE package requires no installation, configuration, or root permissions.

[Excerpt from the introduction] with compiling, installing, and configuring software and their myriad of dependencies. For example, the official Google Chrome help forum for “install/uninstall issues” has over 5800 threads.

In addition, a study of US labor statistics predicts that by 2012, 13 million American workers will do programming in their jobs, but amongst those, only 3 million will be professional software developers [24]. Thus, there are potentially millions of people who still need to get their software to run on other machines but who are unlikely to invest the effort to create one-click installers or wrestle with package managers, since their primary job is not to release production-quality software. For example:

• System administrators often hack together ad-hoc utilities comprised of shell scripts and custom-compiled versions of open-source software, in or-

IDOL Keyview PDF Export SDK 12.6 C Programming Guide

KeyView Software Version 12.6 PDF Export SDK C Programming Guide. Document Release Date: June 2020. Software Release Date: June 2020.

Legal notices

Copyright notice
© Copyright 1997-2020 Micro Focus or one of its affiliates. The only warranties for products and services of Micro Focus and its affiliates and licensors (“Micro Focus”) are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. Micro Focus shall not be liable for technical or editorial errors or omissions contained herein. The information contained herein is subject to change without notice.

Documentation updates
The title page of this document contains the following identifying information:
• Software Version number, which indicates the software version.
• Document Release Date, which changes each time the document is updated.
• Software Release Date, which indicates the release date of this version of the software.
To check for updated documentation, visit https://www.microfocus.com/support-and-services/documentation/.

Support
Visit the MySupport portal to access contact information and details about the products, services, and support that Micro Focus offers. This portal also provides customer self-solve capabilities. It gives you a fast and efficient way to access interactive technical support tools needed to manage your business. As a valued support customer, you can benefit by using the MySupport portal to:
• Search for knowledge documents of interest
• Access product documentation
• View software vulnerability alerts
• Enter into discussions with other software customers
• Download software patches
• Manage software licenses, downloads, and support contracts
• Submit and track service requests
• Contact customer support
• View information about all services that Support offers
Many areas of the portal require you to sign in.

Forcepoint DLP Supported File Formats and Size Limits

Forcepoint DLP Supported File Formats and Size Limits
Supported File Formats and Size Limits | Forcepoint DLP | v8.8.1

This article provides a list of the file formats that can be analyzed by Forcepoint DLP, file formats from which content and meta data can be extracted, and the file size limits for network, endpoint, and discovery functions. See:
● Supported File Formats
● File Size Limits
© 2021 Forcepoint LLC

Supported File Formats
The following tables list the file formats supported by Forcepoint DLP. File formats are in alphabetical order by format group.
● Archive Formats
● Backup Formats
● Business Intelligence (BI) and Analysis Formats
● Computer-Aided Design Formats
● Cryptography Formats
● Database Formats
● Desktop publishing formats
● eBook/Audio book formats
● Executable formats
● Font formats
● Graphics formats - general
● Graphics formats - vector graphics
● Library formats
● Log formats
● Mail formats
● Multimedia formats
● Object formats
● Presentation formats
● Project management formats
● Spreadsheet formats
● Text and markup formats
● Word processing formats
● Miscellaneous formats
Supported file formats are added and updated frequently.

Key to support tables
Symbol    Description
Y         The format is supported
N         The format is not supported
P         Partial metadata

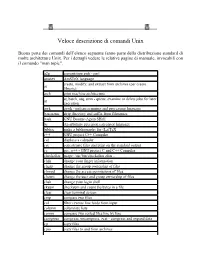

Unix Command

Quick description of Unix commands

Most of the commands in the following list are part of the standard distribution of many Unix architectures. For details, see the relevant manual pages, which can be invoked with the command "man topic".

a2p         awk-to-perl converter
amstex      AmSTeX language
ar          create, modify, and extract from archives (used to create libraries)
arch        print machine architecture
at          at, batch, atq, atrm - queue, examine or delete jobs for later execution
awk         gawk - pattern scanning and processing language
basename    strip directory and suffix from filenames
bash        GNU Bourne-Again SHell
bc          An arbitrary precision calculator language
bibtex      make a bibliography for (La)TeX
c++         GNU project C++ Compiler
cal         displays a calendar
cat         concatenate files and print on the standard output
cc          gcc, g++ - GNU project C and C++ Compiler
checkalias  usage: /usr/bin/checkalias alias ..
chfn        change your finger information
chgrp       change the group ownership of files
chmod       change the access permissions of files
chown       change the user and group ownership of files
chsh        change your login shell
cksum       checksum and count the bytes in a file
clear       clear terminal screen
cmp         compare two files
col         filter reverse line feeds from input
column      columnate lists
comm        compare two sorted files line by line
compress    compress, uncompress, zcat - compress and expand data
cp          copy files
cpio        copy files to and from archives
csh         tcsh - C shell with file name completion and command line editing
csplit      split a file into sections determined by context lines
cut         remove sections from each