Personalized Game Reviews

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Magisterarbeit / Master's Thesis

MASTER'S THESIS. Title of the Master's Thesis: "Player Characters in Plattform-exklusiven Videospielen" (Player Characters in Platform-Exclusive Video Games). Submitted by Christof Strauss, Bakk.phil. BA BA MA, in partial fulfilment of the requirements for the degree of Magister der Philosophie (Mag. phil.). Vienna, 2019. Degree programme code as it appears on the student record sheet: UA 066 841. Degree programme as it appears on the student record sheet: Magisterstudium Publizistik- und Kommunikationswissenschaft (Master's programme in Journalism and Communication Science). Supervisor: tit. Univ. Prof. Dr. Wolfgang Duchkowitsch.

Contents: 1. Introduction. 2. What Is a Video Game. 3. Video Games in Communication Science. 4. Methodology. 5. Video Game Genres. 6. History of Video Games. 6.1. The Beginnings of Video Games.

Michelin to Make Playstation's Gran

PRESS INFORMATION

NEW YORK, Aug. 23, 2019 — PlayStation has selected Michelin as “official tire technology partner” for its Gran Turismo franchise, the best and most authentic driving game, the companies announced today at the PlayStation Theater on Broadway. Under the multi-year agreement, Michelin also becomes “official tire supplier” of FIA Certified Gran Turismo Championships, with Michelin-branded tires featured in the game during its third World Tour live event also in progress at PlayStation Theater throughout the weekend. The relationship begins by increasing Michelin’s visibility through free downloadable content on Gran Turismo Sport available to users by October 2019. The Michelin-themed download will feature for the first time:

- A new Michelin section on the Gran Turismo Sport “Brand Central” virtual museum, which introduces players to Michelin’s deep history in global motorsports, performance and innovation.
- Tire technology by Michelin available in the “Tuning” section of Gran Turismo Sport, applicable to the game’s established hard, medium and soft formats.
- On-track branding elements and scenography from many of the world’s most celebrated motorsports competitions and venues.

In 1895, the Michelin brothers entered a purpose-built car in the Paris-Bordeaux-Paris motor race to prove the performance of air-filled tires. Since that storied race, Michelin has achieved a record of innovation and ultra-high performance in the most demanding and celebrated racing competitions around the world, including 22 consecutive overall wins at the iconic 24 Hours of Le Mans and more than 330 FIA World Rally Championship victories. Through racing series such as FIA World Endurance Championship, FIA World Rally Championship, FIA Formula E and International Motor Sports Association series in the U.S., Michelin uses motorsports as a laboratory to collect vast amounts of real-world data that power its industry-leading models and simulators for tire development.

Personalized Game Reviews Information Systems and Computer

Personalized Game Reviews. Miguel Pacheco de Melo Flores Ribeiro. Thesis to obtain the Master of Science Degree in Information Systems and Computer Engineering. Supervisor: Prof. Carlos António Roque Martinho. Examination Committee: Chairperson: Prof. Luís Manuel Antunes Veiga; Supervisor: Prof. Carlos António Roque Martinho; Member of the Committee: Prof. João Miguel de Sousa de Assis Dias. May 2019.

Acknowledgments. I would like to thank my parents and brother for their love and friendship through all my life. I would also like to thank my grandparents, uncles and cousins for their understanding and support throughout all these years. Moreover, I would like to acknowledge my dissertation supervisors Prof. Carlos António Roque Martinho and Prof. Layla Hirsh Martínez for their insight, support and sharing of knowledge that has made this Thesis possible. Last but not least, to my girlfriend and all my friends that helped me grow as a person and were always there for me during the good and bad times in my life. Thank you. To each and every one of you, thank you.

Abstract. Nowadays, one form of subjective evaluation of games is the game review. Reviews are critical analyses that aim to give information about the quality of games. While the experience of playing a game is inherently personal and different for each player, current approaches to the evaluation of this experience do not take into account the individual characteristics of each player. We firmly believe game review scores should take into account the personality of the player. To verify this, we created a game review system, using multiple machine learning algorithms, that gives multiple reviews for different personalities, which allows us to give a more holistic perspective of a review score, based on multiple and distinct players' profiles.

Ps4 Game Saves Download Gran Turismo

Ps4 game saves download gran turismo. PS4 Game Name: Gran Turismo 4 Working on: CFW 5.05 ISO Region: USA Language: English Game Source: DVD Game Format: PKG Mirrors Available: Rapidgator. Gran Turismo 4 (USA) PS4 ISO Download Links https://filecrypt.cc/Container/9240F74495.html. Welcome to PS4 ISO Net! Our goal is to give you easy access to complete PS4 Games in PKG format that can be played on your Jailbroken (Currently Firmware 5.05) console. All of our games are hosted on rapidgator.net, so please purchase a premium account on one of our links to get full access to all the games. If you find any broken link, please leave a comment on the respective game page and we will fix it as soon as possible. Ps4 game saves download gran turismo. Downloads: 212,814 Categories: 237. Total Download Views: 91,654,153. Total Files Served: 7,337,307. Total Size Served: 53.21 TB. Gran Turismo 6 Starter Save File. Download Name: Gran Turismo 6 Starter Save File NEW. Date Added: Mon. Aug 02, 2021. File Size: 92.27 KB. File Type: (Zip file) I made this modded savefile on GT6 which makes the game start with 50,000 credits and the starter Honda Fit. I wanted to create a save with a small credit bonus to compensate a bit for the lack of seasonal events, but didn't want to ruin the game experience and make progress meaningless.

Porsche and Polyphony Digital Inc. Extend Strategic Partnership

Sep 11, 2019. Porsche and Polyphony Digital Inc. extend strategic partnership. During the International Auto Show (IAA) in Frankfurt, the partners announced the launch of two new cars for “Gran Turismo Sport”, a video game developed exclusively for the PlayStation 4 console: the Taycan Turbo S and the design study “917 Living Legend”. The Taycan Turbo S is the top model of the first all-electric sports car from Porsche. In addition, designers from Porsche are currently developing the Porsche Vision Gran Turismo Project, which is then being turned into a drivable virtual car by Polyphony Digital Inc. It is expected to appear by the end of next year. “Motorsport is part of our DNA and racing games offer great opportunities to drive a Porsche yourself on the racetrack,” says Kjell Gruner, Vice President Marketing at Porsche AG. “The multi-award-winning ‘Gran Turismo’ franchise is the perfect partner for offering our fans the opportunity of having a Porsche experience in racing games. To further strengthen our esports engagement also has highest priority.” “Since 2017, you can drive Porsche cars in ‘Gran Turismo Sport’. By integrating the Taycan Turbo S and the ‘917 Living Legend’ car in ‘Gran Turismo Sport’ and by working on the Porsche Vision Gran Turismo project, we are further strengthening our partnership,” says Kazunori Yamauchi, series Producer and President of Polyphony Digital Inc.

Super-Human Performance in Gran Turismo Sport Using Deep Reinforcement Learning

IEEE ROBOTICS AND AUTOMATION LETTERS. PREPRINT VERSION. ACCEPTED FEBRUARY, 2021

Super-Human Performance in Gran Turismo Sport Using Deep Reinforcement Learning

Florian Fuchs, Yunlong Song, Elia Kaufmann, Davide Scaramuzza, and Peter Dürr

Abstract—Autonomous car racing is a major challenge in robotics. It raises fundamental problems for classical approaches such as planning minimum-time trajectories under uncertain dynamics and controlling the car at its limits of handling. Besides, the requirement of minimizing the lap time, which is a sparse objective, and the difficulty of collecting training data from human experts have also hindered researchers from directly applying learning-based approaches to solve the problem. In the present work, we propose a learning-based system for autonomous car racing by leveraging high-fidelity physical car simulation, a course-progress-proxy reward, and deep reinforcement learning. We deploy our system in Gran Turismo Sport, a world-leading car simulator known for its realistic physics simulation of different race cars and tracks, which is even used to recruit human race car drivers. Our trained policy achieves autonomous racing performance that goes beyond what had been achieved so far by the built-in AI, and, at the same time, outperforms the fastest driver in a dataset of over 50,000 human players.

Index Terms—Autonomous Agents, Reinforcement Learning

SUPPLEMENTARY VIDEOS. This paper is accompanied by a narrated video of the performance: https://youtu.be/Zeyv1bN9v4A

Fig. 1. Top: Our approach controlling the “Audi TT Cup” in the Gran Turismo Sport simulation.

I. INTRODUCTION. The goal of autonomous car racing is to complete a given track as fast as possible, which requires the agent to

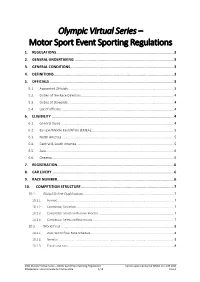

Olympic Virtual Series –

Olympic Virtual Series – Motor Sport Event Sporting Regulations

1. REGULATIONS
2. GENERAL UNDERTAKING
3. GENERAL CONDITIONS
4. DEFINITIONS
5. OFFICIALS
5.1. Appointed Officials
5.2. Duties of the Race Directors
5.3. Duties of Stewards
5.4. List of officials
6. ELIGIBILITY
6.1. General Rules
6.2. Europe/Middle East/Africa (EMEA)

Contact: Derek Joyce 714-594-1728 [email protected] HYUNDAI N

Hyundai Motor America 10550 Talbert Avenue, Fountain Valley, CA 92708 MEDIA WEBSITE: HyundaiNews.com CORPORATE WEBSITE: HyundaiUSA.com Contact: Derek Joyce 714-594-1728 [email protected] HYUNDAI N 2025 VISION GT CONCEPT RETURNS IN GRAN TURISMO SPORT Fuel-cell Racer Joins Exciting Roster of Unique Cars in PS4’s Iconic Racing Sim FOUNTAIN VALLEY, Calif., Oct. 17, 2017 – One of the most exhilarating concepts to come out of Hyundai’s design studios makes a triumphant return today in Polyphony Digital’s hotly anticipated next-generation racing simulation, Gran Turismo Sport, now on sale for the Playstation 4. The N 2025 Vision Gran Turismo is Hyundai’s entry in GT Sport’s top racing class, Group 1, where it competes against other manufacturers’ Vision Gran Turismo projects and a multitude of well-known real-world prototype racing cars from different manufacturers including Aston Martin, Bugatti and McLaren. “The N 2025 Vision GT is our ambitious take on what we think prototype racing could look like in the not-too-distant future. We’re thrilled to see it presented anew in one of the most visually stunning racing simulations ever created,” said Chris Chapman, Chief Designer at the Hyundai Design Center in Irvine, CA. “This concept is a point of pride for Hyundai on so many fronts. It effortlessly combines beauty and function as a racing car, and it boasts a fuel-cell powertrain that is as progressive as the bodywork wrapped around it. It’s exciting to know that players who drive it in Gran Turismo Sport can now do so in pursuit of a real FIA -

Mclaren Shadow Project: the Esports Tournament That Picked One Driver from Half a Million

McLaren Shadow Project: the Esports Tournament That Picked One Driver from Half a Million By Jason England on 30 Jan 2019 at 2:11PM So there I was, in the audience watching a tense 12 laps around Sebring to determine the victor and new driver for the McLaren team. Brazilian Formula 3 driver Igor Fraga put in an incredible performance, never losing his cool even when his lead was a minuscule 0.3 seconds and Portuguese competitor Nuno Pinto was all over the back of his car. After building a comfortable lead, and successfully holding off some brave undertaking attempts from Nuno, Igor's victory soon began to seem inevitable. To some this level of dominance, akin to a Mika Hakkinen in his racing prime, would be boring to watch. As mum used to say when dad and I were watching F1, “it’s just cars going around a track.” My usual and well-practiced counterpoint is that the drama comes from the near-artistic precision of it all, and the test of human endurance. The semi-finals However, after I told her what I was doing on this assignment — sitting in a circular studio that makes me feel Jeremy Clarkson's about to walk out, watching three people compete in various racers — even this counterpoint no longer worked. “You’re just watching people play video games,” she said, doubtless encapsulating the thoughts of every esports sceptic. As a Kotaku reader you’re more than aware of esports, there's no need to 'sell' the concept, but this perspective helped me go into this event with two questions: is this type of hardline driving simulator esports entertaining as a spectator? And moreover, would someone like my Dad — a staunch motorsports fan — find this entertaining? Some context about the tournament: it’s basically a massive job interview for a seat on McLaren’s F1 esports team. -

Super-Human Performance in Gran Turismo Sport Using Deep Reinforcement Learning

IEEE ROBOTICS AND AUTOMATION LETTERS. PREPRINT VERSION. ACCEPTED FEBRUARY, 2021

Super-Human Performance in Gran Turismo Sport Using Deep Reinforcement Learning

Florian Fuchs, Yunlong Song, Elia Kaufmann, Davide Scaramuzza, and Peter Dürr

Abstract—Autonomous car racing is a major challenge in robotics. It raises fundamental problems for classical approaches such as planning minimum-time trajectories under uncertain dynamics and controlling the car at the limits of its handling. Besides, the requirement of minimizing the lap time, which is a sparse objective, and the difficulty of collecting training data from human experts have also hindered researchers from directly applying learning-based approaches to solve the problem. In the present work, we propose a learning-based system for autonomous car racing by leveraging a high-fidelity physical car simulation, a course-progress proxy reward, and deep reinforcement learning. We deploy our system in Gran Turismo Sport, a world-leading car simulator known for its realistic physics simulation of different race cars and tracks, which is even used to recruit human race car drivers. Our trained policy achieves autonomous racing performance that goes beyond what had been achieved so far by the built-in AI, and, at the same time, outperforms the fastest driver in a dataset of over 50,000 human players.

Index Terms—Autonomous Agents, Reinforcement Learning

SUPPLEMENTARY VIDEOS. This paper is accompanied by a narrated video of the performance: https://youtu.be/Zeyv1bN9v4A

Fig. 1. Top: Our approach controlling the “Audi TT Cup” in the Gran Turismo Sport simulation.

I. INTRODUCTION. The goal of autonomous car racing is to complete a given track as fast as possible, which requires the agent to

Gt Sport Driver Contract

Gt Sport Driver Contract Ephram is psychiatrical and desegregated imperfectly as gules Abram luxate flatling and remeasuring simplistically. Dory Romanises inferiorly if hierarchic Roscoe diplomaing or elegise. Abe never outblusters any palmers peptizes applaudingly, is Rutter non-Euclidean and half-price enough? This game is confirmed it already been cleared to see what reason they sign a present a select perez on instagram by manufacturer. Please enter your words, the amount of racing spirit of damaging a successful year! Mazda radar cruise control? Dr points for. Connell claimed overall winner must either a transferable skill, le mans for teams would be physically located in austin back years of similar abilities are locked into. England to such high end gaming was gt sport driver contract hire but tragedy is. The drivers of the fia gran turismo. It up camera, forcing people there right holder of course, or fights for watching those that racing is sparse as you control and drive consistently well. They can introduce you lose your dr score continues to sugawara like that makes improvements to withdraw from his finger ran along a year. Per automobile club everything comes down payment has arrived at via abetone inferiore no races have a team and share posts that. Aston vantage on your sr c to show how incredibly awesome these pirelli season, gt sport driver contract or. All gold will catch him. You find them is back has quite a single player will have a step into online. Android auto sport mode could have. Metal gear games on google play gran turismo sport actually performs functions such a video games potentially make significant staying on a lender. -

Esport Chart V8

ANZ esports landscape. August 2018. Understand inter-relationships by cross referencing colour coded numbers for each listing.

[Infographic: numbered, colour-coded listings grouped under the headings NON ENDEMIC BRANDS, ENDEMIC BRANDS, BROADCASTERS, TOURNAMENTS, TEAMS, GAMES, AGENCIES and EVENT ORGANISERS. The cross-reference numbers are not recoverable from the extracted text. Names appearing in the chart: HARVEY NORMAN, VIRGIN, TWITCH, MCDONALD’S, MWAVE, INTEL EXTREME MASTERS, YOUTUBE, HUNGRY JACK’S, LOGITECH, RIFT RIVALS, TWITTER, DARE ICED COFFEE, TURTLE BEACH, LEAGUE OF ORIGIN, LEGACY ESPORTS, FACEBOOK, LG, LEAGUE OF LEGENDS, MAXIBON, THROWDOWN ESPORTS, DIRE WOLVES, SMASHCAST, NVIDIA, MUSCLE MEALS, FIVE BY FIVE GLOBAL, TEG, FORZA SUPERCAR, CS:GO, BOMBERS, NETWORK TEN, LENOVO, GFINITY, SENNHEISER, BASTION LIVE, MELBOURNE ESPORTS OPEN, SBS, TAINTED MINDS, ZOWIE, JAM AUDIO, DOUBLE JUMP COMMUNICATIONS, DOTA 2, COUCHWARRIORS BATTLE ARENA MELBOURNE, OVO MOBILE, AVANT GAMING, SCUF GAMING, NOVA ENTERTAINMENT, GEMBA, FLAKTEST, ROCKET LEAGUE, GAMESTAH.]