Lucas Bechberger, Kai-Uwe Kühnberger, Mingya Liu (Editors). Concepts in Action: Representation, Learning, and Application. Language, Cognition, and Mind.

Recommended publications

-

The Daily Egyptian, December 08, 1965

Southern Illinois University Carbondale OpenSIUC December 1965 Daily Egyptian 1965 12-8-1965 The aiD ly Egyptian, December 08, 1965 Daily Egyptian Staff Follow this and additional works at: http://opensiuc.lib.siu.edu/de_December1965 Volume 47, Issue 54 Recommended Citation , . "The aiD ly Egyptian, December 08, 1965." (Dec 1965). This Article is brought to you for free and open access by the Daily Egyptian 1965 at OpenSIUC. It has been accepted for inclusion in December 1965 by an authorized administrator of OpenSIUC. For more information, please contact [email protected]. Three Injured In Smashup DAILY EGYPTIAN Three persons were injured SOUTHERN ILLINOIS UNIVERSITY in an accident near University Park Tuesday morning. Deanna McCredie. 19. and C.r...... l •• III. Wednftday, December 8,1965 Mumber 54 Robert Wheelwright. 21. both of Southern HUls. were in a car which collided with an automobile driven by Alvin Daume.62. of Tatum Heights. The cars collided head-on. LA&S Begins New Procedure The accident occurred on the Southern Hills road shortly before 8 a.m. Mrs. McCredie and Wheel wright were taken to Doctors To Advise Students From GS Hospital J.nd then to the Health Service for treatment. and were released. Daume was System Provides treated at Holden Hospital. Progress Review Motorcycle Grou p The advisement center for the College of Liberal Arts Seeks Ordinances and Sciences is beginning a Two recommendations con new appointment and advise cerning motorcycle riding and ment procedure for entering parking were presented to the stUdents who have completed Carbondale City CouncilMon 96 hours of work in General day night by SIU student Larry Studies. -

Edible Insects

1.04cm spine for 208pg on 90g eco paper ISSN 0258-6150 FAO 171 FORESTRY 171 PAPER FAO FORESTRY PAPER 171 Edible insects Edible insects Future prospects for food and feed security Future prospects for food and feed security Edible insects have always been a part of human diets, but in some societies there remains a degree of disdain Edible insects: future prospects for food and feed security and disgust for their consumption. Although the majority of consumed insects are gathered in forest habitats, mass-rearing systems are being developed in many countries. Insects offer a significant opportunity to merge traditional knowledge and modern science to improve human food security worldwide. This publication describes the contribution of insects to food security and examines future prospects for raising insects at a commercial scale to improve food and feed production, diversify diets, and support livelihoods in both developing and developed countries. It shows the many traditional and potential new uses of insects for direct human consumption and the opportunities for and constraints to farming them for food and feed. It examines the body of research on issues such as insect nutrition and food safety, the use of insects as animal feed, and the processing and preservation of insects and their products. It highlights the need to develop a regulatory framework to govern the use of insects for food security. And it presents case studies and examples from around the world. Edible insects are a promising alternative to the conventional production of meat, either for direct human consumption or for indirect use as feedstock. -

The Affective Properties of Keys in Instrumental Music from the Late Nineteenth and Early Twentieth Centuries

University of Massachusetts Amherst ScholarWorks@UMass Amherst Masters Theses 1911 - February 2014 2010 The ffeca tive properties of keys in instrumental music from the late nineteenth and early twentieth centuries Maho A. Ishiguro University of Massachusetts Amherst Follow this and additional works at: https://scholarworks.umass.edu/theses Part of the Composition Commons, Musicology Commons, Music Practice Commons, Music Theory Commons, and the Other Arts and Humanities Commons Ishiguro, Maho A., "The ffea ctive properties of keys in instrumental music from the late nineteenth and early twentieth centuries" (2010). Masters Theses 1911 - February 2014. 536. Retrieved from https://scholarworks.umass.edu/theses/536 This thesis is brought to you for free and open access by ScholarWorks@UMass Amherst. It has been accepted for inclusion in Masters Theses 1911 - February 2014 by an authorized administrator of ScholarWorks@UMass Amherst. For more information, please contact [email protected]. THE AFFECTIVE PROPERTIES OF KEYS IN INSTRUMENTAL MUSIC FROM THE LATE NINETEENTH AND EARLY TWENTIETH CENTURIES A Thesis Presented by MAHO A. ISHIGURO Submitted to the Graduate School of the University of Massachusetts Amherst in partial fulfillment of the requirements for the degree of MASTER OF MUSIC September 2010 Music © Copyright by Maho A. Ishiguro 2010 All Rights Reserved THE AFFECTIVE PROPERTIES OF KEYS IN THE TWENTIETH CENTURY A Thesis Presented by MAHO A. ISHIGURO Approved as to style and content by: ________________________________________ Miriam Whaples, Chair ________________________________________ Brent Auerbach, Member ________________________________________ Robert Eisenstein, Member ________________________________________ Jeffrey Cox, Department Chair Music DEDICATION I dedicate my thesis to my father, Kenzo Ishiguro. ACKNOWLEDGEMENTS I would like to thank my advisor, Prof. -

Items Wanted Cars for Sale

Corner Store o advertise in the Corner Store, * fmx transmission tube and dipstick #C9AZ6213086-J Contact John Ross T-send your ad directly to the club looking for a transmission tube and dip- at: 317-773-7193 or E-mail at tbird@ and it will be posted on the WWW as stick for a 67 Galaxie 500 w/289, fmx indy.net (IN) well as in our magazine. No phone ads transmission, bolts into pan not case. * 62 Rocker Panel and Door pillar Contact Brian Haslinger at: 419-559- looking for NOS driver side outer rock- are taken. Members are allowed 3 free 3126 or E-mail at bhaslinger@woh. er and door pillar (B pillar)for 2 door. ads per year, up to 50 words. Any more rr.com (OH) C3AZ 6210129-A,C1AZ 6228161-A than 50 words please have a check to * Need a 6 way power front bench Contact Rick Wilson at: 847-699-7873 accompany the ad at .15 cents per word seat switch for a 1968 Galaxie 500 or E-mail at [email protected] (IL) past the 50 allowed. Please send ad convertible. This switch may have been * Rear Stainless Trim 1970 Galaxie typed if possible. E-mailed ads are also used on other years and models. Con- 500 XL Convertible. Moustache trim for accepted. ALL SALES ADS MUST BE tact Rod Nelson at: 951-789-6127 or E- trunk and both taillights. Contact Carter ACCOMPANIED BY PRICES or else Best mail at [email protected] (CA) Buschardt at: 816-304-5525 or E-mail offer. -

Low Down Studio 110

Low Down Studio 110 Service Manual Line 6, Inc. Customer Service and Repair 3850-A Royal Ave. Simi Valley, CA 93063 P. 818-575-3600 F. 818-575-3961 E. [email protected] 99-015-0505 A5-4 LOW DOWN STUDIO-10 US 75W Part Numbers Description Qty. Per Reference Designator(s) 21-37-1160 CBL PWR UL/CSA SJT 8.2ft Blk EL-302 w/GND EL70 1 PACKOUT 40-00-0301 MANUAL USER STUDIO 10 A5-4 1 PACKOUT 40-00-1000 CARD WARRANTY LINE 6 HARDWARE 1 PACKOUT 40-01-0016 CARD LICENSE-AGREEMNT END-USERALL-PRODUCTS 1 PACKOUT 40-03-0031 CARD REGISTRATION UK 1 PACKOUT 40-03-2000 CARD REGISTRATION US 1 PACKOUT 40-03-2000-1 CARD REGISTRATION EUROPE 1 PACKOUT 40-10-0108 PROTECTOR CORNER CARTON SPIDER2-1508/3012 8 PACKOUT 40-10-0250 CARTON SHIPPING STUDIO 1x10 14" x 14" x 14" A5-4 1 PACKOUT 40-15-0022 SILICA GEL PACK 3.0" x 3.5" 1 PLACE INSIDE PLASTIC BAG WITH UNIT 40-15-0023 ANTISEPTIC PACK (5g/package) 1 PACKOUT 40-20-0011 BAG PLASTIC 10 x 16 2 mil 1 PACKOUT 40-20-0113 PLASTIC BAG 16 x 16 x 25" 2MILCLEAR A5-4 1 PACKOUT 40-25-0100 LABEL BAR CODE SERIAL NUMBER 4-PANEL LABEL 1 REAR CHASSIS & GIGT/SHIPPING BOX 59-00-0030-1 ASSY UNIT COMPLETE BASS AMP US STUDIO 10 A5-4 1 59-00-0030-1 ASSY UNIT COMPLETE BASS AMP US STUDIO 10 Part Numbers Description Qty. Per Reference Designator(s) 11-20-0013 SPEAKER BASS 10" 8-OHM 75W HI-TOUCH P-0610001B-X2 1 INSTALL INTO CABINET 11-30-0006 XFMR 100/120VAC 50/60Hz 76mm 34VAC x 2 / 9.8VAC 1-CONNECTOR 1 24-19-4025 FUSE 4A 125V TL 5x20mm Littlefuse# H239 004 or equiv. -

Thestandard 2020 Edition

TheStandard 2020 Edition POWER UNLEASHED WWW.VICON.COM 1 A NEW VANTAGE POINT 2 3 18 28 32 36 A unique set of motion Epic Lab is creating the future Vicon technology helps teams Preparing student for the VFX capture challenges of wearable robots save the world applications of the future CONTENTS From extreme to mainstream Science fiction to science fact Using motion capture to create perfect communication Stronger Together 7 Using Motion Capture to Create Imogen Moorhouse, Vicon CEO Perfect Communication 36 Drexel University is preparing students for the VFX applications of the future Leaps & Bounds 8 Capturing the elite athletes Vicon Study of Dynamic Object of the NBA for 2K Tracking Accuracy 40 Fighting for the future At Vicon we understand that we produce a Motion Capture Has Become a Sixth system where our real-time data is relied upon. Sense for Autonomous Drone Flying 12 It’s natural for either our existing users or potential new customers to want to understand Bell Innovation is using mocap to model flight what the quality of that data is. in the smart cities of the future Cracking Knuckles, Metacarpals Bringing New Motion Capture Technology and Phalanges 60 Motion Capture’s to Old Biomechanical Problems 44 How Framestore and Vicon finally solved 44 58 Newest Frontier 16 hand capture Shedding new light on how How new tracking A collaborative approach to gait analysis is athletes’ joints work technologies will benefit life Flinders University is using its capture stage shedding new light on how athletes’ joints work sciences to -

August 1997 ¥ MAGAZINE ¥ Vol

August 1997 ¥ MAGAZINE ¥ Vol. 2 No. 5 Computer Animation SIGGRAPH Issue NASA’s JPL on Animation From Space SIGGRAPH’s Early Years John Whitney’s Legacy Plus: Computer Animation for Beginners Table of Contents 2 Table of Contents August 1997 Vol. 2, No. 5 4 Editor’s Notebook Computer animation is on everyone’s lips, but what exactly is being said? Heather Kenyon discusses the good and the bad. 6 Letters: [email protected] COMPUTER ANIMATION 9 Animation and Visualization of Space Mission Data We have all been glued to our television screens, amazed by the images of Mars that are being beamed thousands of miles through space. How do they do that? William B. Green and Eric M. DeJong from the California Institute of Technology Jet Propulsion Laboratory explain. 13 SIGGRAPH: Past and Present Super hip SIGGRAPH was founded in the world of academia and military tests far before visual effects were even considered. Joan Collins traces the growth of computer animation through the organization’s confer- ences. 17 Don’t Believe Your Eyes It is real, or is it animation? Bill Hilf explores the aesthetic implications of our new digital realm. 20 Going Digital And Loving It Traditional animator Guionne Leroy describes her first digital experience. Currently working on a new clay short, she is shooting it with a digital camera and having a blast with the new opportunities. 1997 22 Computer Animation 101:A Guide for the Computer Illiterate Hand-Animator Jo Jürgens answers everything you ever wanted to know about basic computer animation but were afraid to ask. -

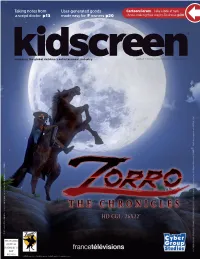

User-Generated Goods Made Easy for IP Owners P20 Taking Notes from a Script Doctor

Taking notes from User-generated goods Cartoon Forum—Take a look at new a script doctor p13 made easy for IP owners p20 shows making their way to Toulouse p30 engaging the global children’s entertainment industry A publication of Brunico Communications Ltd. JULY/AUGUST 2012 CANADA POST AGREEMENT NUMBER 40050265 PRINTED IN CANADA USPS AFSM 100 Approved Polywrap CANADA POST AGREEMENT NUMBER 40050265 PRINTED IN USPS AFSM 100 Approved US$7.95 in the U.S. CA$8.95 in Canada US$9.95 outside of Canada & the U.S. Inside July/August 2012 moves 7 Check out the top-five things on Kidscreen’s radar this month Hot Stuff—Disney’s rising star Olivia Holt dishes on monsters and Mars tv 13 Script doctor David Freedman shares tips on upping the comedy in a rewrite Tuning In—Italian kidnet Boing makes it big with free DTT and CN catalogue consumer products 20 CafePress helps licensors legitimize fans’ design-it-yourself impulses Licensee Lowdown—Apparel co Kahn Lucas sets sights on girls IP expansion kid insight 25 Nickelodeon studies the mindset of the Millennial gamer Muse of the Month—Five-year-old Riley takes pretend play online 17 ikids 28 JustLaunched—Kids’ CBC picks a springtime debut for number-themed Monster Math Squad Print books vs. eBooks—which are better for kid-and-parent co-reading? New Kid in Town—Bubble Gum Special Cartoon Forum Preview 30 readies Little Space Heroes for blast-off We take a look at projects with potential headed to next month’s annual Report European animation pitch fest next month in Toulouse, France Spider-Man and Discovery Kids Roobarb & One Direction, Kids take a spin Phineas walk into continues to Custard support cool with girls, with latest high- 16 a clubhouse.. -

Snow White Seven Dwarfs

AND SNOW WHITE THE SEVEN DWARFS EDUCATOR AND FAMILY GUIDE 1 ©Disney CONTENTS WELCOME 3 EXHIBITION OVERVIEW ABOUT WALT DISNEY 4 THE WALT DISNEY STUDIOS: INNOVATORS IN ANIMATION 6 THE MAKING OF SNOW WHITE AND THE SEVEN DWARFS 7 THE MAKING OF AN ANIMATED FILM: GUIDED DISCUSSIONS AND ACTIVITIES STORY STRUCTURE 8 CREATE A STORY STRUCTURE 9 STORY BOARDS 10 CREATE A STORYBOARD 11 CONCEPT ART 13 CREATE A KEY LOCATION 14 CREATE MOODS USING ART MEDIA 15 CHARACTER 16 CREATE YOUR DWARF 18 MODEL SHEETS 20 JUN 8, 2013–OCT 27, 2013 CREATE A CHARACTER MODEL SHEET 21 nrm.org/snowwhite ANIMATION 22 FLIPBOOKS 23 9 Route 183 | PO Box 308 | Stockbridge, MA 01262 CREATE A FLIPBOOK 24 Snow White and the Seven Dwarfs: The Creation of a Classic is organized by The Walt REFERENCE DRAWING 26 Disney Family Museum®. Major support is provided by Wells Fargo. Media sponsors: CREATE A REFERENCE DRAWING 27 News10 ABC, Radio Disney 1460, and Berkshire Magazine. INK AND PAINT 28 Exhibition design: IQ Magic. CREATE A CELL WITH INK AND PAINT 29 THE WALT DISNEY FAMILY MUSEUM® DISNEY ENTERPRISES, INC. | ©2013 THE WALT DISNEY FAMILY MUSEUM, LLC THE WALT DISNEY FAMILY MUSEUM IS NOT AFFILIATED WITH DISNEY ENTERPRISES, INC. KEY TERMS 30 IMAGE CREDITS 31 PRESENTING SPONSOR WELCOME Welcome to the Museum and our new special exhibition, Snow White and the Seven Dwarfs: The Creation of a Classic. This Educator and Family Guide, which, like the exhibition itself, was produced by The Walt Disney Family Museum, provides detailed information for educators ©Disney Reproduction cel set-up, Disney Studio Artists, and parents to engage young visitors in focused Snow White and the Seven Dwarfs (1937) exploration. -

List of Abbreviation and Acronym

List of Abbreviation and Acronym APJMB Asosiasi Petani Jambu Mete Lambusango Buton (Lambusango Cashews Farmer Association) APJMB Asosiasi Petani Jambu Mete Barangka Buton (Barabgka Cashews Farmer Association) APJMM Asosiasi Petani Jambu Mete Matanauwe (Matanauwe Cashews Farmer Association) BCFA Barangka Cashews Farmer Association BDFO Buton District Forestry Office BIPHUT Badan Inventarisasi dan Pemetaan Hutan (Agency for Forest Inventory and Mapping) BKSDA Balai Konservasi Sumber Daya Alam (Natural Resource Conservation Centre) BPD Badan Perwakilan Desa, Village Parliament BRI Bank Rakyat Indonesia Camat Head of sub-district CARE Canadian based NGO CFMP Community Forestry Management Forum COREMAP Coral Reef Rehabilitation and Management Project Danramil Head of sub-district army DFID Department for International Development DPRD Dewan Perwakilan Rakyat Daerah (District Parliament) FCUL Forest Crime Unit Lambusango FGD Focus Group Discussion FLO Fairtrade Labelling Organization Gerhan Gerakan Rehabilitasi Hutan dan Lahan, National wide government project for land rehabilitation and reforestation GIS Geo-Information System G-KDP Green Kecamatan Development Program GPS Geo Positioning System ICRAF World Agroforestry Center JC Just Cashews Kapolsek Head of sub-district police officer Kecamatan Sub-district KRPH Kepala Resort Polisi Hutan (Head of Forest Ranger at Kecamatan Level) KT Kalende Tribe KUHP Kitab Undang-Undang Hukum Pidana/criminal law LEAD Legal Empowerment & Assistance for the Disadvantaged LED Lembaga Ekonomi Dusun/sub-village -

Prime Time Animation: Television Animation and American Culture

Prime Time Animation Television animation and American culture Edited by Carol A. Stabile and Mark Harrison PRIME TIME ANIMATION In September 1960 a television show emerged from the mists of prehistoric time to take its place as the mother of all animated sitcoms. The Flintstones spawned dozens of imitations, just as, two decades later, The Simpsons sparked a renaissance of prime time animation. The essays in this volume critically survey the landscape of television animation, from Bedrock to Springfield and beyond. The contributors explore a series of key issues and questions, including: How do we explain the animation explosion of the 1960s? Why did it take nearly twenty years following the cancellation of The Flintstones for animation to find its feet again as prime time fare? In addressing these questions, as well as many others, the essays in the first section examine the relation between earlier, made-for-cinema animated production (such as the Warner Looney Toons shorts) and television-based animation; the role of animation in the economies of broadcast and cable television; and the links between animation production and brand image. Contributors also examine specific programs like The Powerpuff Girls, Daria, The Simpsons, The Ren and Stimpy Show and South Park from the perspective of fans, exploring fan cybercommunities, investi- gating how ideas of ‘class’ and ‘taste’ apply to recent TV animation, and addressing themes such as irony, alienation, and representations of the family. Carol A. Stabile is associate professor of communication and director of the Women’s Studies Program at the University of Pittsburgh. She is the author of Feminism and the Technological Fix (1994), editor of Turning the Century: Essays in Media and Cultural Studies (2000), and is currently working on a book on media coverage of crime from the 1830s to the present. -

SHORT FILMS the Blessing and Curse of All Creative People Is the “So, I Got This Awesome Idea” Conundrum

SHORT FILMS The blessing and curse of all creative people is the “so, I got this awesome idea” conundrum. STASH MEDIA INC. Editor: StePheN PriCE For most it begins with an urgent desire to make the world a cooler, smarter or funnier place. Publisher: GREG ROBINS The problem arrives when this rush of genius slams head first into the lumpen reality of Managing editor: HEATHER GRIEVE producing something new and meaningful then collapses in a dazed heap. Associate publisher: MARILEE BOITSON Associate editor: ABBEY KERR Account manager: APRIL HARVEY For a few exceptional creators, whether they design cars, meals, songs, houses, hand bags, Business development: wine labels or moving images, that awesome idea locks itself around their neck and does PaUliNE ThomPsoN Administration: STEFANIE POLSINELLI more than whisper seductively about freedom, glory or fulfillment. It pulls them out of their Preview editor: creative comfort zone and, I’ll argue, just as importantly, demands they plow up the fallow left HEATHER GRIEVE Technical guidance: IAN HASKIN hemisphere of their brain and shovel around mundane project details like budget, schedule, staffing and administration. GET YOUR INSPIRATION DELIVERED MONTHLY. This trek to the dark “corporate” side is the undoing of many creative ventures but talent SHORT who brave their projects through this valley of death (carpeted solely with meddling, suit-clad Every issue of Stash DVD magazine is packed with outstanding animation and VFX for design and advertising. cubicle-dwellers) emerge more focused, more mature and better prepared for their next stab at FILMS cooler/smarter/funnier. Subscribe now: WWW.STASHMEDIA.TV SHORT FILMS 1 showcases this kind of filmmaker and 30 of their ideas.