NVIDIA Tegra X1 Targets Neural Net Image Processing Performance

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


ATI Radeon™ HD 4870 Computation Highlights

AMD: Entering the Golden Age of Heterogeneous Computing. Michael Mantor, Senior GPU Compute Architect / Fellow, AMD Graphics Product Group.

The 4 Pillars of massively parallel compute offload: • Performance Moore's Law → 2x < 18 Months Frequency\Power\Complexity Wall • Power Parallel → Opportunity for growth • Price • Programming Models. GPU is the first successful massively parallel COMMODITY architecture with a programming model that managed to tame 1000's of parallel threads in hardware to perform useful work efficiently.

Quick recap of where we are: Perf, Power, Price. ATI Radeon™ HD 4850: 4x Performance/W and Performance/mm² in a year. Compared cards: ATI Radeon™ X1800 XT, ATI Radeon™ HD 3850, ATI Radeon™ HD 2900 XT, ATI Radeon™ X1900 XTX, ATI Radeon™ X1950 PRO. Source of GigaFLOPS per watt: maximum theoretical performance divided by maximum board power. Source of GigaFLOPS per $: maximum theoretical performance divided by price as reported on www.buy.com as of 9/24/08.

ATI Radeon™ HD 4850, Designed to Perform in Single Slot: SP Compute Power 1.0 TFLOPS; DP Compute Power 200 GFLOPS; Core Clock Speed 625 MHz; Stream Processors 800; Memory Type GDDR3; Memory Capacity 512 MB; Max Board Power 110 W; Memory Bandwidth 64 GB/sec.

ATI Radeon™ HD 4870, First Graphics with GDDR5: SP Compute Power 1.2 TFLOPS; DP Compute Power 240 GFLOPS; Core Clock Speed 750 MHz; Stream Processors 800; Memory Type GDDR5 3.6 Gbps; Memory Capacity 512 MB; Max Board Power 160 W; Memory Bandwidth 115.2 GB/sec.

ATI Radeon™ HD 4870 X2: Incredible Balance of Performance, Power, Price -

An Evolution of Mobile Graphics

AN EVOLUTION OF MOBILE GRAPHICS. Michael C. Shebanow, Vice President, Advanced Processor Lab, Samsung Electronics. July 20, 2013.

DISCLAIMER • The views herein are my own • They do not represent Samsung's vision nor product plans

Outline: • The Mobile Market • Review of GPU Tech • GPU Efficiency • User Experience • Tech Challenges • Summary

The Rise of the Mobile GPU & Connectivity: A NEW WORLD COMING?

DISCRETE GPU MARKET: Flattening

MOBILE GPU MARKET (smart phones, "phablets", tablets) • In 2012, an estimated 800+ million mobile GPUs shipped • ~123M tablets • ~712M smart phones • Will easily exceed 1B in the coming years • Trend: • Discrete GPU relatively flat • Mobile is growing rapidly

WW INTERNET TRAFFIC • Source: Cisco VNI • Internet traffic growth rate is staggering • 2012 total traffic is 13.7 GB per person per month • 2012 smart phone traffic at 0.342 GB per person per month • 2017 smart phone traffic expected at 2.7 GB per person per month

| Year | INET IP Traffic (TB/sec) | Growth rate | Mobile Traffic (TB/sec) |
|------|--------------------------|-------------|-------------------------|
| 2005 | 0.9 | | 0.00 |
| 2006 | 1.5 | 65% | 0.00 |
| 2007 | 2.5 | 61% | 0.01 |
| 2008 | 3.8 | 54% | 0.01 |
| 2009 | 5.6 | 45% | 0.04 |
| 2010 | 7.8 | 40% | 0.10 |
| 2011 | 10.6 | 36% | 0.23 |
| 2012 | 12.4 | 17% | 0.34 |

WHERE ARE WE HEADED?… • Enormous quantity of GPUs • Large amount of interconnectivity • Better I/O

GPU Pipelines: A BRIEF REVIEW OF GPU TECH

MOBILE GPU PIPELINE ARCHITECTURES. Tile-based immediate mode rendering (TBIMR): IA, VS, CCV, RS, PS, ROP. Tile-based deferred scene rendering (TBDR): IA, VS, CCV, then RS, PS, ROP. IA = input assembler, VS = vertex shader, CCV = cull, clip, viewport transform, RS = rasterization, -

An Emerging Architecture in Smart Phones

International Journal of Electronic Engineering and Computer Science, Vol. 3, No. 2, 2018, pp. 29-38. http://www.aiscience.org/journal/ijeecs

ARM Processor Architecture: An Emerging Architecture in Smart Phones. Naseer Ahmad, Muhammad Waqas Boota*. Department of Computer Science, Virtual University of Pakistan, Lahore, Pakistan.

Abstract: ARM is a 32-bit RISC processor architecture. It is developed and licensed by the British company ARM Holdings. ARM Holdings does not manufacture or sell CPU devices; it only licenses the processor architecture to interested parties. There are two main types of licenses: implementation licenses and architecture licenses. ARM processors have a unique combination of features; for example, the ARM core is very simple compared to general-purpose processors. An ARM chip has several peripheral controllers, a digital signal processor, and an ARM core. ARM processors consume less power but provide high performance. Nowadays, the ARM Cortex series is very popular in smartphone devices. We will also see the important characteristics of the Cortex series. We discuss the ARM processor and systems on a chip (SoC), including the Qualcomm Snapdragon, nVidia Tegra, and Apple systems on chips. In this paper, we discuss the features of the ARM processor and the Intel Atom processor and see which processor is best. Finally, we will discuss the future of the ARM processor in smartphone devices.

Keywords: RISC, ISA, ARM Core, System on a Chip (SoC). Received: May 6, 2018 / Accepted: June 15, 2018 / Published online: July 26, 2018. © 2018 The Authors. Published by American Institute of Science. This Open Access article is under the CC BY license. -

NVIDIA Quadro P4000

NVIDIA Quadro P4000. Graphics processor: GP104; cores: 1792; TMUs: 112; ROPs: 64; memory size: 8192 MB; memory type: GDDR5; bus width: 256 bit.

The Quadro P4000 is a professional graphics card by NVIDIA, launched in February 2017. Built on the 16 nm process, and based on the GP104 graphics processor, the card supports DirectX 12.0. The GP104 graphics processor is a large chip with a die area of 314 mm² and 7,200 million transistors. Unlike the fully unlocked GeForce GTX 1080, which uses the same GPU but has all 2560 shaders enabled, NVIDIA has disabled some shading units on the Quadro P4000 to reach the product's target shader count. It features 1792 shading units, 112 texture mapping units and 64 ROPs. NVIDIA has placed 8,192 MB of GDDR5 memory on the card, connected using a 256-bit memory interface. The GPU operates at a frequency of 1227 MHz, which can be boosted up to 1480 MHz; the memory runs at 1502 MHz. We recommend the NVIDIA Quadro P4000 for gaming with highest details at resolutions up to, and including, 5760x1080. Being a single-slot card, the NVIDIA Quadro P4000 draws power from one 6-pin power connector, with power draw rated at 105 W maximum. Display outputs include: 4x DisplayPort. The Quadro P4000 is connected to the rest of the system using a PCIe 3.0 x16 interface. The card measures 241 mm in length and features a single-slot cooling solution.

Graphics Processor: GPU Name: GP104; Architecture: Pascal; Process Size: 16 nm; Transistors: 7,200 -. Graphics Card: Released: Feb 6th, 2017; Production Status: Active; Bus Interface: PCIe 3.0 x16.

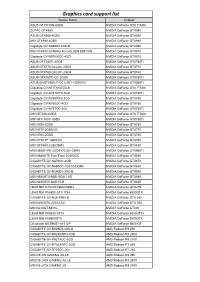

Graphics Card Support List

Graphics card support list

| Device Name | Chipset |
|-------------|---------|
| ASUS GTXTITAN-6GD5 | NVIDIA GeForce GTX TITAN |
| ZOTAC GTX980 | NVIDIA GeForce GTX980 |
| ASUS GTX980-4GD5 | NVIDIA GeForce GTX980 |
| MSI GTX980-4GD5 | NVIDIA GeForce GTX980 |
| Gigabyte GV-N980D5-4GD-B | NVIDIA GeForce GTX980 |
| MSI GTX970 GAMING 4G GOLDEN EDITION | NVIDIA GeForce GTX970 |
| Gigabyte GV-N970IXOC-4GD | NVIDIA GeForce GTX970 |
| ASUS GTX780TI-3GD5 | NVIDIA GeForce GTX780Ti |
| ASUS GTX770-DC2OC-2GD5 | NVIDIA GeForce GTX770 |
| ASUS GTX760-DC2OC-2GD5 | NVIDIA GeForce GTX760 |
| ASUS GTX750TI-OC-2GD5 | NVIDIA GeForce GTX750Ti |
| ASUS ENGTX560-Ti-DCII/2D1-1GD5/1G | NVIDIA GeForce GTX560Ti |
| Gigabyte GV-NTITAN-6GD-B | NVIDIA GeForce GTX TITAN |
| Gigabyte GV-N78TWF3-3GD | NVIDIA GeForce GTX780Ti |
| Gigabyte GV-N780WF3-3GD | NVIDIA GeForce GTX780 |
| Gigabyte GV-N760OC-4GD | NVIDIA GeForce GTX760 |
| Gigabyte GV-N75TOC-2GI | NVIDIA GeForce GTX750Ti |
| MSI NTITAN-6GD5 | NVIDIA GeForce GTX TITAN |
| MSI GTX 780Ti 3GD5 | NVIDIA GeForce GTX780Ti |
| MSI N780-3GD5 | NVIDIA GeForce GTX780 |
| MSI N770-2GD5/OC | NVIDIA GeForce GTX770 |
| MSI N760-2GD5 | NVIDIA GeForce GTX760 |
| MSI N750 TF 1GD5/OC | NVIDIA GeForce GTX750 |
| MSI GTX680-2GB/DDR5 | NVIDIA GeForce GTX680 |
| MSI N660Ti-PE-2GD5-OC/2G-DDR5 | NVIDIA GeForce GTX660Ti |
| MSI N680GTX Twin Frozr 2GD5/OC | NVIDIA GeForce GTX680 |
| GIGABYTE GV-N670OC-2GD | NVIDIA GeForce GTX670 |
| GIGABYTE GV-N650OC-1GI/1G-DDR5 | NVIDIA GeForce GTX650 |
| GIGABYTE GV-N590D5-3GD-B | NVIDIA GeForce GTX590 |
| MSI N580GTX-M2D15D5/1.5G | NVIDIA GeForce GTX580 |
| MSI N465GTX-M2D1G-B | NVIDIA GeForce GTX465 |
| LEADTEK GTX275/896M-DDR3 | NVIDIA GeForce GTX275 |
| LEADTEK PX8800 GTX TDH | NVIDIA GeForce 8800GTX |

GIGABYTE GV-N26-896H-B -

SECOND AMENDED COMPLAINT 3:14-Cv-582-JD

Case 3:14-cv-00582-JD Document 51 Filed 11/10/14 Page 1 of 19

EDUARDO G. ROY (Bar No. 146316), DANIEL C. QUINTERO (Bar No. 196492), JOHN R. HURLEY (Bar No. 203641), PROMETHEUS PARTNERS L.L.P., 220 Montgomery Street Suite 1094, San Francisco, CA 94104. Telephone: 415.527.0255. Attorneys for Plaintiff DANIEL NORCIA.

UNITED STATES DISTRICT COURT, NORTHERN DISTRICT OF CALIFORNIA

DANIEL NORCIA, on his own behalf and on behalf of all others similarly situated, Plaintiffs, v. SAMSUNG TELECOMMUNICATIONS AMERICA, LLC, a New York Corporation, and SAMSUNG ELECTRONICS AMERICA, INC., a New Jersey Corporation, Defendants.

Case No.: 3:14-cv-582-JD. SECOND AMENDED CLASS ACTION COMPLAINT FOR: 1. VIOLATION OF CALIFORNIA CONSUMERS LEGAL REMEDIES ACT, CIVIL CODE §1750, et seq. 2. UNLAWFUL AND UNFAIR BUSINESS PRACTICES, CALIFORNIA BUS. & PROF. CODE §17200, et seq. 3. FALSE ADVERTISING, CALIFORNIA BUS. & PROF. CODE §17500, et seq. 4. FRAUD. JURY TRIAL DEMANDED.

Plaintiff DANIEL NORCIA, having not previously amended as a matter of course pursuant to Fed.R.Civ.P. 15(a)(1)(B), hereby exercises that right by amending within 21 days of service of Defendants' Motion to Dismiss filed October 20, 2014 (ECF 45). Individually and on behalf of all others similarly situated, Daniel Norcia complains and alleges, by and through his attorneys, upon personal knowledge and information and belief, as follows:

NATURE OF THE ACTION

1. -

AMD Radeon E8860

Components for AMD's Embedded Radeon™ E8860 GPU

INTRODUCTION: The E8860 Embedded Radeon GPU available from CoreAVI is comprised of temperature screened GPUs, safety certifiable OpenGL®-based drivers, and safety certifiable GPU tools which have been pre-integrated and validated together to significantly de-risk the challenges typically faced when integrating hardware and software components. The platform is an off-the-shelf foundation upon which safety certifiable applications can be built with confidence.

Figure 1: CoreAVI Support for E8860 GPU

EXTENDED TEMPERATURE RANGE: CoreAVI provides extended temperature versions of the E8860 GPU to facilitate its use in rugged embedded applications. CoreAVI functionally tests the E8860 over -40 °C Tj to +105 °C Tj, increasing the manufacturing yield for hardware suppliers while reducing supply delays to end customers.

coreavi.com. Revision: 13Nov2020.

E8860 GPU LONG TERM SUPPLY AND SUPPORT: CoreAVI has provided consistent and dedicated support for the supply and use of the AMD embedded GPUs within the rugged Mil/Aero/Avionics market segment for over a decade. With the E8860, CoreAVI will continue that focused support to ensure that the software, hardware and long-life support are provided to meet the needs of customers' system life cycles. CoreAVI has extensive environmentally controlled storage facilities which are used to store the GPUs supplied to the Mil/Aero/Avionics marketplace, ensuring that a ready supply is available for the duration of any program. CoreAVI also provides the post Last Time Buy storage of GPUs and is often able to provide additional quantities of components when COTS hardware partners receive increased volume for existing products / systems requiring additional inventory. -

Graphics: Mesa, AMDVLK, Adreno and Protected Xe Path

Published on Tux Machines (http://www.tuxmachines.org). By Roy Schestowitz. Submitted on Monday 8th of February 2021 11:48:11 PM. Filed under Graphics/Benchmarks [1].

Panfrost Gallium3D Lands Its New Bifrost Scheduler In Mesa 21.1 - Phoronix [2]: Hitting Mesa 21.1 this morning is a scheduler implementation for Panfrost Gallium3D, the open-source Arm Mali graphics driver. Lead Panfrost developer Alyssa Rosenzweig has been working to implement a scheduler in Panfrost for the Arm Bifrost graphics code path. The scheduler has been in the works for a number of months, is passing the relevant conformance tests, and has now been merged.

AMDVLK 2021.Q1.3 Brings Performance Tuning For War Thunder - Phoronix [3]: AMDVLK 2021.Q1.3 is out this morning as the latest snapshot of the official open-source AMD Radeon Vulkan driver for Linux systems that is derived from their shared platform driver sources. AMDVLK 2021.Q1.3 is on the lighter side, with AMDVLK 2021.Q1.2 having arrived just over one week ago. Of the two listed driver changes, AMDVLK 2021.Q1.3 is rebuilt against the Vulkan API 1.2.168 headers.

Freedreno's MSM DRM Driver Adds More Adreno Support, Speedbin Capability For Linux 5.12 - Phoronix [4]: The MSM Direct Rendering Manager driver, originally developed as part of the Freedreno effort for open-source Qualcomm Adreno graphics on Linux and now supported by the likes of Google and Qualcomm's Code Aurora engineers, has some notable changes in store for the next Linux kernel cycle. -

Graphics Processing Units

Graphics Processing Units. Graphics Processing Units (GPUs) are coprocessors that traditionally perform the rendering of 2-dimensional and 3-dimensional graphics information for display on a screen. In particular computer games request more and more realistic real-time rendering of graphics data and so GPUs became more and more powerful highly parallel specialist computing units. It did not take long until programmers realized that this computational power can also be used for tasks other than computer graphics. For example already in 1990 Lengyel, Reichert, Donald, and Greenberg used GPUs for real-time robot motion planning [43]. In 2003 Harris introduced the term general-purpose computations on GPUs (GPGPU) [28] for such non-graphics applications running on GPUs. At that time programming general-purpose computations on GPUs meant expressing all algorithms in terms of operations on graphics data, pixels and vectors. This was feasible for speed-critical small programs and for algorithms that operate on vectors of floating-point values in a similar way as graphics data is typically processed in the rendering pipeline. The programming paradigm shifted when the two main GPU manufacturers, NVIDIA and AMD, changed the hardware architecture from a dedicated graphics-rendering pipeline to a multi-core computing platform, implemented shader algorithms of the rendering pipeline in software running on these cores, and explicitly supported general-purpose computations on GPUs by offering programming languages and software-development toolchains. This chapter first gives an introduction to the architectures of these modern GPUs and the tools and languages to program them. Then it highlights several applications of GPUs related to information security with a focus on applications in cryptography and cryptanalysis. -

Three Ways of Seeing Improved Health and Productivity

Three ways of seeing improved health and productivity. Take a look at the Samsung Galaxy Watch3, Galaxy Watch Active2, and Galaxy Watch Active. The premium Galaxy Watch3, the versatile Galaxy Watch Active, and the health-oriented Galaxy Watch Active2 offer greater health and productivity to virtually any enterprise. They're protected by Samsung Knox. And they're all customizable to incorporate your company's branding. Be more nimble. Be more productive. Samsung Galaxy watches make it possible.

Key Features

Galaxy Watch3: The Galaxy Watch3 is a premium solution that's B2B-ready, with days of power and a rotating bezel that allows easy navigation even while wearing gloves. • Onboard GPS, motion, activity and heart-rate sensors • Battery lasts up to 56 hours (45mm model) • Carrier-agnostic LTE • Tested to MIL-STD-810G standards, IP68, rated at 5 ATM

Galaxy Watch Active2: With a focus on wellness, the Galaxy Watch Active2 features a digital touch bezel plus advanced sensors that enable more accurate blood pressure tracking, ECG tracking, heart rate tracking, alerts, and fall detection. • Advanced sensors include heart rate tracker, ECG sensor, and 32G high sampling rate accelerometer and gyro • Battery lasts up to 60 hours (44mm model) • Carrier-agnostic LTE • Tested to MIL-STD-810G standards, IP68, rated at 5 ATM

Galaxy Watch Active: The Galaxy Watch Active offers secure communications in fast-paced environments, and supports corporate efficiency, productivity, health, and safety initiatives. • Advanced sleep tracking helps improve stress levels and sleep patterns • Battery lasts up to 45 hours • Tested to MIL-STD-810G standards, IP68, rated at 5 ATM

Contact Us: samsung.com/wearablesforbiz

| Physical | Galaxy Watch3 | Galaxy Watch Active2 | Galaxy Watch Active |
|----------|---------------|----------------------|---------------------|
| Dimensions | 1.77" x 1.82" x 0.44" (45.0 x 46.2 x 11.1 mm); 1.61" x 1.67" x 0.44" (41.0 x 42.5 x 11.3 mm) | 1.73" x 1.73" x 0.43" (44 x 44 x 10.9 mm); 1.57" x 1.73" x 0.43" (40 x 40 x 10.9 mm) | 1.56" x 1.56" x 0.41" (39.5 x 39.5 x 10.5 mm) |
| Weight | 1.90 oz (53.8 g) / 1.70 oz (48.2 g) | 1.7 oz. - | |

Survey and Benchmarking of Machine Learning Accelerators

Survey and Benchmarking of Machine Learning Accelerators. Albert Reuther, Peter Michaleas, Michael Jones, Vijay Gadepally, Siddharth Samsi, and Jeremy Kepner. MIT Lincoln Laboratory Supercomputing Center, Lexington, MA, USA.

Abstract: Advances in multicore processors and accelerators have opened the flood gates to greater exploration and application of machine learning techniques to a variety of applications. These advances, along with breakdowns of several trends including Moore's Law, have prompted an explosion of processors and accelerators that promise even greater computational and machine learning capabilities. These processors and accelerators are coming in many forms, from CPUs and GPUs to ASICs, FPGAs, and dataflow accelerators. This paper surveys the current state of these processors and accelerators that have been publicly announced with performance and power consumption numbers. The performance and power values are plotted on a scatter graph and a number of dimensions and observations from the trends on this plot are discussed and analyzed. For instance, there are interesting trends in the plot regarding power consumption, numerical precision, and inference versus training. We then select and benchmark two commercially-available low size, weight, and power (SWaP) accelerators as these processors are the most interesting for embedded and mobile machine learning inference applications that are most applicable to the DoD and other SWaP constrained users.

[...] components play a major role in the success or failure of an AI system.

Fig. 1. Canonical AI architecture consists of sensors, data conditioning, algorithms, modern computing, robust AI, human-machine teaming, and users (missions). Each step is critical in developing end-to-end AI applications and -

2016 Comparison of Application Processor (AP) Packaging

Application Processor for Smartphone: Packaging Technology & Cost Review. Advanced Packaging report by Stéphane ELISABETH, November 2016. 21 rue la Noue Bras de Fer, 44200 NANTES - FRANCE. +33 2 40 18 09 16. www.systemplus.fr. ©2016 System Plus Consulting | 2016 Comparison of Application Processor (AP) packaging.

Table of Contents

• Overview / Introduction
  o Executive Summary
  o AP I/O count & Footprint
  o AP I/O count & L/S width
  o Reverse Costing Methodology
• Company Profile
  o Apple, Huawei, Samsung, Qualcomm
  o Apple, Huawei, Samsung, Qualcomm AP history
  o Apple, Huawei, Samsung, Qualcomm Supply Chain History
  o iPhone 7 Plus, Huawei P9, Samsung Galaxy S7 Teardown
  o PoP Packaging Technology
• Physical Analysis
  o Synthesis of the Physical Analysis
  o Physical Analysis Methodology
  o A10, Kirin 955, Exynos 8, Snapdragon 820 Package: Package Views & Dimensions; Package Opening; Package Opening Comparison
  o PoP Cross-Section: Package Details Cross-Section; Package Cross-Section Comparison
  o Die & Land-Side Decoupling Capacitor Comparison: Die View & Dimensions; LSC Capacitor View & Dimensions; LSC Capacitor Footprint; Embedded LSC Capacitor Cross-Section; Soldered LSC Capacitor Cross-Section
  o Summary of the physical Data
• Manufacturing Process Flow
  o Global Overview
  o Package Fabrication Unit
• Cost Analysis
  o Synthesis of the cost analysis
  o Main Steps of Economic Analysis
  o Yields Explanation & Hypotheses
  o Die cost: Front-End Cost; Wafer & Die Cost
  o Package Manufacturing Cost: A10 Package Cost Breakdown; Kirin 955 Package Cost Breakdown; Exynos 8 Package Cost Breakdown; Snapdragon 820 Package Cost Breakdown
  o Component Cost: Wafer/Panel & Component Cost; Component Cost Breakdown
• Company services

Executive Summary • Located under the DRAM chip on the main board, the application processors (AP) are packaged using PoP technology.