Deep Learning for Dynamic Music Generation


Recommended publications

-

ISSUE 2 Holy Family Writes

Issue 2 Term 3 August 2020 “Crafty Writers” Your writing is only limited to your imagination! Follow the year levels to see the amazing efforts our This issue, we are showcasing students are making to craft student work that shows a their words so cleverly. variety of author crafting techniques, such as use of of italics, alliteration, similes, Index: onomatopoeia, interrobang, ellipsis and many more! Reception/Year 1 Year 2 Authors in the Year 3 making… Year 4 We hope that in publishing this Year 5/6 digital magazine our young writers feel a sense of great ownership and achievement. Reception/ Year 1 Book making fun in R/1MR Reception/ Year 1 Book making fun in R/1MR Reception/ Year 1 By Athian R/1MP Reception/ Year 1 By Aisha R/1JH Reception/ Year 1 By Ambrose R/1MP Reception/ Year 1 By Dylan R/1MP Reception/ Year 1 By Lina R/1MP Reception/ Year 1 By Ariana R/1JH Reception/ Year 1 By Vincent R/1JH Reception/ Year 1 By Yasha R/1JH Reception/ Year 1 By Isla R/1MP Reception/ Year 1 Reception/ Year 1 Reception/ Year 1 By Kevin R/1MP Reception/ Year 1 Reception/ Year 1 Reception/ Year 1 By Sehana R/1MP Reception/ Year 1 Reception/ Year 1 Reception/ Year 1 Reception/ Year 1 By Victoria R/1MP Reception/ Year 1 Reception/ Year 1 By Hartley R/1MP Reception/ Year 1 Reception/ Year 1 Reception/ Year 1 By Abel R/1MP Year 2 By Cooper 2FA Year 2 By Cooper 2FA Year 2 By Sara 2FA Year 2 By Sara 2FA Year 2 By Sara 2FA Year 2 Year 2 By Dung 2RG Year 2 Year 2 Year 2 Year 2 Year 2 Year 2 By Beau 2RG Year 3 Circus Story Year 3 By Thuy 3JW Year 3 Year 3 Year 3 Year 3 By Vi 3JW Year 3 Dr Seuss is 87 years old. -

The Little SAS® Book a Primer THIRD EDITION

The Little SAS® Book a primer THIRD EDITION Lora D. Delwiche and Susan J. Slaughter The correct bibliographic citation for this manual is as follows: Delwiche, Lora D. and Slaughter, Susan J., 2003. The Little SAS Book: A Primer, Third Edition. Cary, NC: SAS Institute Inc. The Little SAS Book: A Primer, Third Edition Copyright © 2003, SAS Institute Inc., Cary, NC, USA ISBN 1-59047-333-7 All rights reserved. Produced in the United States of America. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, or otherwise, without the prior written permission of the publisher, SAS Institute Inc. U.S. Government Restricted Rights Notice: Use, duplication, or disclosure of this software and related documentation by the U.S. government is subject to the Agreement with SAS Institute and the restrictions set forth in FAR 52.227-19, Commercial Computer Software-Restricted Rights (June 1987). SAS Institute Inc., SAS Campus Drive, Cary, North Carolina 27513. 1st printing, November 2003 SAS Publishing provides a complete selection of books and electronic products to help customers use SAS software to its fullest potential. For more information about our e-books, e-learning products, CDs, and hard- copy books, visit the SAS Publishing Web site at support.sas.com/pubs or call 1-800-727-3228. SAS® and all other SAS Institute Inc. product or service names are registered trademarks or trademarks of SAS Institute Inc. in the USA and other countries. ® indicates USA registration. Other brand and product names are trademarks of their respective companies. -

Song & Music in the Movement

Transcript: Song & Music in the Movement A Conversation with Candie Carawan, Charles Cobb, Bettie Mae Fikes, Worth Long, Charles Neblett, and Hollis Watkins, September 19 – 20, 2017. Tuesday, September 19, 2017 Song_2017.09.19_01TASCAM Charlie Cobb: [00:41] So the recorders are on and the levels are okay. Okay. This is a fairly simple process here and informal. What I want to get, as you all know, is conversation about music and the Movement. And what I'm going to do—I'm not giving elaborate introductions. I'm going to go around the table and name who's here for the record, for the recorded record. Beyond that, I will depend on each one of you in your first, in this first round of comments to introduce yourselves however you wish. To the extent that I feel it necessary, I will prod you if I feel you've left something out that I think is important, which is one of the prerogatives of the moderator. [Laughs] Other than that, it's pretty loose going around the table—and this will be the order in which we'll also speak—Chuck Neblett, Hollis Watkins, Worth Long, Candie Carawan, Bettie Mae Fikes. I could say things like, from Carbondale, Illinois and Mississippi and Worth Long: Atlanta. Cobb: Durham, North Carolina. Tennessee and Alabama, I'm not gonna do all of that. You all can give whatever geographical description of yourself within the context of discussing the music. What I do want in this first round is, since all of you are important voices in terms of music and culture in the Movement—to talk about how you made your way to the Freedom Singers and freedom singing. -

DJ – Titres Incontournables

DJ – Titres incontournables Ce listing de titres constamment réactualisé , il vous ait destiné afin de surligner avec un code couleur ce que vous préférez afin de vous garantir une personnalisation totale de votre soirée . Si vous le souhaitez , il vaut mieux nous appeler pour vous envoyer sur votre mail la version la plus récente . Vous pouvez aussi rajouter des choses qui n’apparaissent pas et nous nous chargeons de trouver cela pour vous . Des que cette inventaire est achevé par vos soins , nous renvoyer par mail ce fichier adapté à vos souhaits 2018 bruno mars – finesse dj-snake-magenta-riddim-audio ed-sheeran-perfect-official-music-video liam-payne-rita-ora-for-you-fifty-shades-freed luis-fonsi-demi-lovato-echame-la-culpa ofenbach-vs-nick-waterhouse-katchi-official-video vitaa-un-peu-de-reve-en-duo-avec-claudio-capeo-clip-officiel 2017 amir-on-dirait april-ivy-be-ok arigato-massai-dont-let-go-feat-tessa-b- basic-tape-so-good-feat-danny-shah bastille-good-grief bastille-things-we-lost-in-the-fire bigflo-oli-demain-nouveau-son-alors-alors bormin-feat-chelsea-perkins-night-and-day burak-yeter-tuesday-ft-danelle-sandoval calum-scott-dancing-on-my-own-1-mic-1-take celine-dion-encore-un-soir charlie-puth-attention charlie-puth-we-dont-talk-anymore-feat-selena-gomez clean-bandit-rockabye-ft-sean-paul-anne-marie dj-khaled-im-the-one-ft-justin-bieber-quavo-chance-the-rapper-lil-wayne dj-snake-let-me-love-you-ft-justin-bieber enrique-iglesias-subeme-la-radio-remix-remixlyric-video-ft-cnco feder-feat-alex-aiono-lordly give-you-up-feat-klp-crayon -

2News Summer 05 Catalog

X-Men, Alpha Flight, and all related characters TM & © Marvel Characters, Inc. All Rights Reserved. 0 8 THIS ISSUE: INTERNATIONAL THIS HEROES! ISSUE: INTERNATIONAL No.83 September 2015 $ 8 . 9 5 1 82658 27762 8 featuring an exclusive interview with cover artists Steve Fastner and Rich Larson Alpha Flight • New X-Men • Global Guardians • Captain Canuck • JLI & more! Volume 1, Number 83 September 2015 EDITOR-IN-CHIEF Michael Eury Comics’ Bronze Age and Beyond! PUBLISHER John Morrow DESIGNER Rich Fowlks COVER ARTISTS Steve Fastner and Rich Larson COVER DESIGNER Michael Kronenberg PROOFREADER Rob Smentek SPECIAL THANKS Jack Abramowitz Martin Pasko Howard Bender Carl Potts Jonathan R. Brown Bob Rozakis FLASHBACK: International X-Men . 2 Rebecca Busselle Samuel Savage The global evolution of Marvel’s mighty mutants ByrneRobotics.com Alex Saviuk Dewey Cassell Jason Shayer FLASHBACK: Exploding from the Pages of X-Men: Alpha Flight . 13 Chris Claremont Craig Shutt John Byrne’s not-quite-a team from the Great White North Mike Collins David Smith J. M. DeMatteis Steve Stiles BACKSTAGE PASS: The Captain and the Controversy . 24 Leopoldo Duranona Dan Tandarich How a moral panic in the UK jeopardized the 1976 launch of Captain Britain Scott Edelman Roy Thomas WHAT THE--?!: Spider-Man: The UK Adventures . 29 Raimon Fonseca Fred Van Lente Ramona Fradon Len Wein Even you Spidey know-it-alls may never have read these stories! Keith Giffen Jay Williams BACKSTAGE PASS: Origins of Marvel UK: Not Just Your Father’s Reprints. 37 Steve Goble Keith Williams Repurposing Marvel Comics classics for a new audience Grand Comics Database Dedicated with ART GALLERY: López Espí Marvel Art Gallery . -

The Spectral Voice and 9/11

SILENCIO: THE SPECTRAL VOICE AND 9/11 Lloyd Isaac Vayo A Dissertation Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY August 2010 Committee: Ellen Berry, Advisor Eileen C. Cherry Chandler Graduate Faculty Representative Cynthia Baron Don McQuarie © 2010 Lloyd Isaac Vayo All Rights Reserved iii ABSTRACT Ellen Berry, Advisor “Silencio: The Spectral Voice and 9/11” intervenes in predominantly visual discourses of 9/11 to assert the essential nature of sound, particularly the recorded voices of the hijackers, to narratives of the event. The dissertation traces a personal journey through a selection of objects in an effort to seek a truth of the event. This truth challenges accepted narrativity, in which the U.S. is an innocent victim and the hijackers are pure evil, with extra-accepted narrativity, where the additional import of the hijacker’s voices expand and complicate existing accounts. In the first section, a trajectory is drawn from the visual to the aural, from the whole to the fragmentary, and from the professional to the amateur. The section starts with films focused on United Airlines Flight 93, The Flight That Fought Back, Flight 93, and United 93, continuing to a broader documentary about 9/11 and its context, National Geographic: Inside 9/11, and concluding with a look at two YouTube shorts portraying carjackings, “The Long Afternoon” and “Demon Ride.” Though the films and the documentary attempt to reattach the acousmatic hijacker voice to a visual referent as a means of stabilizing its meaning, that voice is not so easily fixed, and instead gains force with each iteration, exceeding the event and coming from the past to inhabit everyday scenarios like the carjackings. -

SPIDER-MAN: a BUMPY RIDE on BROADWAY the Broadway Production of “Spider-Man: Turn Off the Dark” Suffered from Vast Expenses and Discord

FROM THE NEW YORK TIMES ARCHIVES SPIDER-MAN: A BUMPY RIDE ON BROADWAY The Broadway production of “Spider-Man: Turn Off the Dark” suffered from vast expenses and discord. (Sara Krulwich/The New York Times) TBook Collections Copyright © 2015 The New York Times Company. All rights reserved. Cover photograph by Sara Krulwich/The New York Times This ebook was created using Vook. All of the articles in this work originally appeared in The New York Times. eISBN: The New York Times Company New York, NY www.nytimes.com www.nytimes.com/tbooks Broadway’s ‘Spider-Man’ Spins A Start Date By PATRICK HEALY February 24, 2009 The widely anticipated new musical “Spider-Man, Turn Off the Dark,” with music and lyrics by Bono and the Edge and directed by Julie Taymor (“The Lion King”), took a big swing toward a Broadway debut on Tuesday: the producers announced that the show would begin previews on Jan. 16 at the Hilton Theater. The musical, produced by Hello Entertainment/David Garfinkle, Martin McCallum, Marvel Entertainment and Sony Pictures Entertainment, is to open on Feb. 18. Industry insiders have said its budget would be the largest in Broadway history, about $40 million; a spokesman for the show, Adrian Bryan-Brown, said on Tuesday that the producers would not comment on the dollar amount. ‘Spider-Man’ Musical Names 2 Of Its Stars June 27, 2009 Evan Rachel Wood will be Mary Jane Watson and Alan Cumming will star as Norman Osborn (a k a Green Goblin) in the upcoming Broadway musical “Spider-Man, Turn Off The Dark,” the producers announced on Friday. -

Behind the Songs with Lynn Ahrens and Stephen Flaherty

Behind the Songs with Lynn Ahrens and Stephen Flaherty [00:00:05] Welcome to The Seattle Public Library’s podcasts of author readings and library events. Library podcasts are brought to you by The Seattle Public Library and Foundation. To learn more about our programs and podcasts, visit our web site at w w w dot SPL dot org. To learn how you can help the library foundation support The Seattle Public Library go to foundation dot SPL dot org [00:00:38] Thank you so much. We are so excited over at the Fifth Avenue to be partnering with The Seattle Public Library and these community conversations and we're so thankful that you all decided to come here on this beautiful Monday sunny night in Seattle. I don't know what you're doing inside it's gorgeous out there. Well we're going to wow you with some songs tonight and a wonderful conversation at the Fifth. We're currently presenting "Marie - Dancing Still", a new musical based on the life of and the world of the young ballet student who inspired the masterpiece by Dagmar. The little dancer. And tonight we have two very special guests with us the writers of Lynn Ahrens who wrote the book and lyrics and Stephen Flaherty who composed the music. Ahrens similarity we're gonna embarrass you a little bit. You are considered the foremost theatrical songwriting team of their generation and that's true. They are. They of course wrote the Tony Award winning score for the musical masterpiece ragtime and began their Broadway career with the irresistible. -

The Strokes (San Diego 2019)

THE STROKES Backup Vox Song Drums Bass Guitar 1 Guitar 2 Guitar 3 Keys 1 Keys 2 Lead Vox (Full) Other (Full) Other (Full) Other (Minor) 1 IS THIS IT VIDA GARCIA JAKE LYONS LORENZO PARADEZ SAMIRA SCHNEIDER notes on song part 1 THE MODERN AGE JACE WHITEHEAD MAX CLEMENT JAGGER LICARI GABBY DURST notes on song part 2 SOMA SOFIA SACCA MERIDETH AUSLAND TYLER GRAUPP notes on song part lead 1 lead 2 rhythm 2 BARELY LEGAL MATTEO NEWELL JAKE LYONS KAIA FARRES VICENZO NAPOLITANO notes on song part 3 SOMEDAY JACE WHITEHEAD JAGGER LICARI MERIDETH AUSLAND HAVEN ENDRISS notes on song part 3 ALONE, TOGETHER INDIA MORIARTI MAX CLEMENT KAYLEE WINNINGHAM notes on song part 4 LAST NITE MATTEO NEWELL ISAAC ZUNIGA KAIA FARRES GABBY DURST notes on song part 4 HARD TO EXPLAIN SOFIA SACCA JACOB BEACH CAEDMON JOSEPH SAMIRA SCHNEIDER notes on song part elec piano 5 WHEN IT STARTED INDIA MORIARTI ISAAC ZUNIGA HAVEN ENDRISS notes on song part lead 1 5 TRYING YOUR LUCK VIDA GARCIA JACOB BEACH JAGGER LICARI KAYLEE WINNINGHAM notes on song part 6 TAKE IT OR LEAVE IT KAIA FARRES TYLER GRAUPP SAMIRA SCHNEIDER notes on song part 6 NEW YORK CITY COPS MATTEO NEWELL ISAAC ZUNIGA VICENZO NAPOLITANO notes on song part Tambo 7 REPTILIA INDIA MORIARTI JACOB BEACH LORENZO PARADEZ GABBY DURST notes on song part 7 12:51 VIDA GARCIA MERIDETH AUSLAND TYLER GRAUPP CAEDMON JOSEPH VICENZO NAPOLITANO notes on song part synth claps 8 YOU ONLY LIVE ONCE JACE WHITEHEAD MAX CLEMENT LORENZO PARADEZ HAVEN ENDRISS notes on song part Tambo 8 THE END HAS NO END SOFIA SACCA JAKE LYONS CAEDMON JOSEPH KAYLEE WINNINGHAM notes on song part synth 9 Song 17 notes on song part 9 Song 18 notes on song part 10 Song 19 notes on song part 10 Song 20 notes on song part 11 Song 21 notes on song part 11 Song 22 notes on song part 12 Song 23 notes on song part 12 Song 24 notes on song part THE STROKES Backup Vox Song Drums Bass Guitar 1 Guitar 2 Guitar 3 Keys 1 Keys 2 Lead Vox (Full) Other (Full) Other (Full) Other (Minor) 13 Song 25 notes 
on song part 13 Song 26 notes on song part. -

112 Dance with Me 112 Peaches & Cream 213 Groupie Luv 311

112 DANCE WITH ME 112 PEACHES & CREAM 213 GROUPIE LUV 311 ALL MIXED UP 311 AMBER 311 BEAUTIFUL DISASTER 311 BEYOND THE GRAY SKY 311 CHAMPAGNE 311 CREATURES (FOR A WHILE) 311 DON'T STAY HOME 311 DON'T TREAD ON ME 311 DOWN 311 LOVE SONG 311 PURPOSE ? & THE MYSTERIANS 96 TEARS 1 PLUS 1 CHERRY BOMB 10 M POP MUZIK 10 YEARS WASTELAND 10,000 MANIACS BECAUSE THE NIGHT 10CC I'M NOT IN LOVE 10CC THE THINGS WE DO FOR LOVE 112 FT. SEAN PAUL NA NA NA NA 112 FT. SHYNE IT'S OVER NOW (RADIO EDIT) 12 VOLT SEX HOOK IT UP 1TYM WITHOUT YOU 2 IN A ROOM WIGGLE IT 2 LIVE CREW DAISY DUKES (NO SCHOOL PLAY) 2 LIVE CREW DIRTY NURSERY RHYMES (NO SCHOOL PLAY) 2 LIVE CREW FACE DOWN *** UP (NO SCHOOL PLAY) 2 LIVE CREW ME SO HORNY (NO SCHOOL PLAY) 2 LIVE CREW WE WANT SOME ***** (NO SCHOOL PLAY) 2 PAC 16 ON DEATH ROW 2 PAC 2 OF AMERIKAZ MOST WANTED 2 PAC ALL EYEZ ON ME 2 PAC AND, STILL I LOVE YOU 2 PAC AS THE WORLD TURNS 2 PAC BRENDA'S GOT A BABY 2 PAC CALIFORNIA LOVE (EXTENDED MIX) 2 PAC CALIFORNIA LOVE (NINETY EIGHT) 2 PAC CALIFORNIA LOVE (ORIGINAL VERSION) 2 PAC CAN'T C ME 2 PAC CHANGED MAN 2 PAC CONFESSIONS 2 PAC DEAR MAMA 2 PAC DEATH AROUND THE CORNER 2 PAC DESICATION 2 PAC DO FOR LOVE 2 PAC DON'T GET IT TWISTED 2 PAC GHETTO GOSPEL 2 PAC GHOST 2 PAC GOOD LIFE 2 PAC GOT MY MIND MADE UP 2 PAC HATE THE GAME 2 PAC HEARTZ OF MEN 2 PAC HIT EM UP FT. -

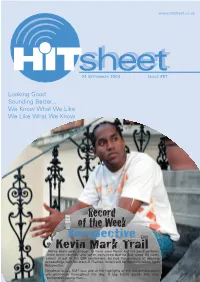

Record of the Week

www.hitsheet.co.uk 24 SEPTEMBER 2004 ISSUE #57 Looking Good Sounding Better... We Know What We Like We Like What We Know Record of the Week Perspective EMI Kevin Mark Trail We’ve been lucky enough to have seen Kevin and his band perform three times recently and we’re convinced that he has some hit tunes comin’ at ya! At the EMI conference, he had the pleasure of opening proceedings with the track D Thames, which will be the likely follow up to Perspective. Needless to say, KMT was one of the highlights of the live performances we witnessed throughout the day. A big future awaits this very personable young man... EDITORIAL 2 EDITORIAL Hello and welcome to issue #57 of the Hit Sheet. It’s that time of year when the industry bustles and buzzes Manchester, do come and say hello. And, if you’re not more than ever. Autumn sales conferences are held, award planning to go anywhere, see the new Metallica movie – ceremonies staged, touring schedules kick off and then watching middle-aged rockers continually admit just how there’s the annual A&R-fest that is In The City. scared and upset they feel is so very Spinal Tap! 31 The Birches, The autumn period arrives as the final few stragglers return London, N21 1NJ from holiday and everything seems to spring back to life at GREG PARMLEY Tel. +44 (0)20 8360 4088 once. True to form, this year has been no exception, Fax: +44 (0)20 8360 4088 starting with the launch of the new Download chart at the Through The Grapevine Email. -

![(Text Transcript Follows) [00:00:09] OPENING MONOLOGUE](https://docslib.b-cdn.net/cover/1518/text-transcript-follows-00-00-09-opening-monologue-3251518.webp)

(Text Transcript Follows) [00:00:09] OPENING MONOLOGUE

Borne the Battle Episode # 221 Air Force Veteran Mark Harper, President and CMO of We Are the Mighty https://www.blogs.va.gov/VAntage/81447/borne-battle-221-air-force-veteran-mark-harper- president-mighty/ (Text Transcript Follows) [00:00:00] Music [00:00:09] OPENING MONOLOGUE: Tanner Iskra (TI): Oh, let's get it. Monday, November 23rd, 2020. Borne the Battle - brought to you by the Department of Veterans Affairs. The podcast that focuses on inspiring veteran stories and puts a highly on important resources, offices, and benefits for our veterans. I am your host Marine Corps, veteran Tanner Iskra. Hope everyone had a great week outside of podcast land. Myself, I'm trying out some new gear, got some new gear from the office. Don't have it quite soundproofed here in the home studio. So, it might sound a little echoey. It's funny how it's a better, better microphone, but it's a worse quality based on the room. So, we're going to manage and we're going to keep pressing on No new reviews this week to respond to - no new ratings. If you like what we put together every week, please consider smashing that subscribe button and leaving a rating and, or a review on Apple Podcasts. It helps push this podcast up in the algorithms. Which gives more veterans the chance to catch the information provided not only in the interviews, but in the benefits breakdown episodes and in the news releases. Talking news releases, we have six this week, some are pretty brief, so it was not going to be, as long as you'd think.