Rapid Modeling and Analysis Tools

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Material Data & Design Workstation Reviews Biomimicry

January 2019 FOCUS ON: New Vehicle Lightweighting Design Meshless Simulation Simulate Additive Manufacturing DigitalEngineering247.com A Touch of AR MATERIAL DATA & DESIGN WORKSTATION REVIEWS BIOMIMICRY

DEGREES OF FREEDOM, by Jamie J. Gooch

Hack Your New Year’s Resolution

IT’S THAT TIME of year when we reflect on the past and look ahead to the future. On one hand, this makes sense: The calendar year ends and a new year begins. What better time to make resolutions? On the other hand, it seems a bit arbitrary to make important life and work goals based on a date chosen by the pope in 1582 to be the first day of the year.

Research has shown that New Year’s Resolutions don’t work for most people, but just barely. According to a 2002 study by John C. -

... to go back to school to get another degree, maybe resolve to take a course in programming, finite element analysis or design for additive manufacturing. Small successes can lead to setting loftier goals, but the trick is to build on each success to get there, rather than trying to achieve too much too soon.

Another way to engineer yourself to keep your resolutions is to share your goals. Research shows that telling people about your goals can help you stick with them. Committing to something like professional training, where you tell your supervisor your intentions, or improving your standard op-

Loads, Load Factors and Load Combinations

Training Course on Civil/Structural Codes and Inspection. BMA Engineering, Inc.

Overall Outline
1000. Introduction
2000. Federal Regulations, Guides, and Reports
3000. Site Investigation
4000. Loads, Load Factors, and Load Combinations
5000. Concrete Structures and Construction
6000. Steel Structures and Construction
7000. General Construction Methods
8000. Exams and Course Evaluation
9000. References and Sources

4000. Loads, Load Factors, and Load Combinations
• Objective and Scope
  - Introduce loads, load factors, and load combinations for nuclear-related civil & structural design and construction
  - Present and discuss
    • Types of loads and their computational principles
    • Load factors
    • Load combinations
    • Focus on seismic loads
    • Computer aided analysis and design (brief)

Scope: Primary Documents Covered
• Minimum Design Loads for Buildings and Other Structures [ASCE Standard 7-05]
• Seismic Analysis of Safety-Related Nuclear Structures and Commentary [ASCE Standard 4-98]
• Design Loads on Structures During Construction [ASCE Standard 37-02]

Load Types (ASCE 7-05)
• D = dead load
• Di = weight of ice
• E = earthquake load
• F = load due to fluids with well-defined pressures and maximum heights
• Fa = flood load
• Lr = roof live load
• R = rain load
• S = snow load
• T = self-straining force
• W = wind load
• Wi = wind-on-ice loads
• H = load due to lateral earth pressure, ground water pressure, -

StressCheck 10.1 2014

StressCheck 10.1 2014 RELEASE NOTES JUNE 16, 2014 (BUILD 10615) This document summarizes the new features available in 64-bit StressCheck 10.1 Professional Edition. For more details, please refer to the Master Guide Addendum available in the main menu of StressCheck under Help. FILE MANAGEMENT SYSTEM The StressCheck file system was completely redesigned in version 10.1 to simplify, improve stability, and make its usage intuitive. The input file (*.sci) and database (*.scm) have been replaced with the StressCheck workfile (*.scw) and the StressCheck project (*.scp). The StressCheck project file (*.scp) is designed to be the default file format when saving and opening StressCheck data. This single file solution replaces the database (*.scm), associated files and its dataset folder. The StressCheck workfile (*.scw) replaces the StressCheck input file (*.sci). As solution data can significantly increase the file size of StressCheck projects, the workfile exists as a smaller alternative that does not contain solution data. It is a snapshot of the model in its current state. The most significant feature of the project file is that StressCheck does not write to it during a session unless the user selects the save option. All temporary files are kept in a separate location which can be changed by the user in the Options dialog. TLAP LOADING Several improvements were made in the way StressCheck handles imported TLAP points. The Status column of the Case Definitions window now reflects the current point assignment status for TLAP points. If a point is assigned in a load, the Status column is updated with “Assigned(x)” where the number in parenthesis (x) is the number of times the point has been assigned in a load record. -

Manage Engineering Data Complex Models and Simulations Yield a Torrent

NX 8 for Design. Smarter decisions, better products. Learn more on page 11

April 2012 / deskeng.com TECHNOLOGY FOR DESIGN ENGINEERING

Manage Engineering Data: Complex models and simulations yield a torrent of data to seize and control. P.16
Origin 8.6 Overview P.22
Direct Modeling and FEA P.24
RACING AHEAD WITH CLUSTERS P.35
REVIEW: HP Z10 WORKSTATION P.38
PREPARING 3D MODELS P.40

Data Loggers & Data Acquisition Systems

iNET-400 Series Expandable Modular Data Acquisition System. Complete Starter System $990
• Directly Connects to Thermocouple, RTD, Thermistor, Strain Gage, Load Cell, Voltage, Current, Resistance and Accelerometer Inputs
• USB 2.0 High Speed Data Acquisition Hardware for Windows® ≥XP SP2, Vista or 7 (XP/VS/7)
• Analog and Digital Input and Outputs
• Free instruNet World Software
Visit omega.com/inet-400_series

Stand-Alone, High-Speed, Multifunction Data Loggers: OM-LGR-5320 Series, Starts at $1499. Visit omega.com/om-lgr-5320_series
8-Channel High Speed Voltage Input USB Data Acquisition Modules: OM-USB-1208HS Series, Starts at $499. Visit omega.com/om-usb-1208hs_series
High Performance Multi-Function I/O USB Data Acquisition Modules: OMB-DAQ-2416 Series, Starts at $1100. Visit omega.com/omb-daq-2416

omega.com © COPYRIGHT 2012 OMEGA ENGINEERING, INC. ALL RIGHTS RESERVED

Degrees of Freedom by Jamie J. -

Brno University of Technology (Vysoké učení technické v Brně)

BRNO UNIVERSITY OF TECHNOLOGY (VYSOKÉ UČENÍ TECHNICKÉ V BRNĚ), FACULTY OF MECHANICAL ENGINEERING, INSTITUTE OF SOLID MECHANICS, MECHATRONICS AND BIOMECHANICS

STRESS-STRAIN ANALYSIS OF A BAR SYSTEMS USING FINITE ELEMENT METHOD

BACHELOR'S THESIS. AUTHOR: JÁN FODOR. SUPERVISOR: Ing. TOMÁŠ NÁVRAT, Ph.D. BRNO 2015

Brno University of Technology, Faculty of Mechanical Engineering, Institute of Solid Mechanics, Mechatronics and Biomechanics. Academic year: 2014/2015

BACHELOR'S THESIS ASSIGNMENT

Student: Ján Fodor, enrolled in the bachelor's study programme, field of study: Fundamentals of Mechanical Engineering (2341R006).

In accordance with Act No. 111/1998 on higher education institutions and with the Study and Examination Regulations of Brno University of Technology, the director of the institute assigns you the following bachelor's thesis topic: Stress-strain analysis of a bar systems using finite element method

Brief description of the task: The aim of the thesis is to program a finite element method algorithm for solving bar systems. The solution should rely primarily on freely available tools (Python, the NumPy and SciPy libraries, a Fortran compiler, etc.). Functionality is to be verified by a calculation in ANSYS.

Goals of the bachelor's thesis:
1. An overview of FEM programs in use, with a brief description of their capabilities.
2. The possibility of using freely available tools for scientific computing.
3. Program a FEM algorithm for a bar system.
4. Verify the computed results against results obtained in ANSYS.

List of literature:
1. Zienkiewicz, O. C., Taylor, R. L.: The finite element method, 5th ed., Arnold Publishers, London, 2000
2. Kolář, V., Kratochvíl, J., Leitner, F., Ženíšek, A.: Výpočet plošných a prostorových konstrukcí metodou konečných prvků, SNTL Praha, 1979
3. -

Master Document Template

PARALLEL IMPLEMENTATION OF FIELD VISUALIZATIONS WITH HIGH ORDER TETRAHEDRAL FINITE ELEMENTS, by MOHAMMAD HAFIFI HAFIZ BIN ISHAK. Thesis submitted in fulfillment of the requirements for the Degree of Master of Science. UNIVERSITI SAINS MALAYSIA, APRIL 2013

ACKNOWLEDGEMENTS

First and foremost, I owe my deepest gratitude to Dr. Razi Abdul Rahman, my thesis advisor and project supervisor, who introduced me to the subject. His serious attitude to research has always been a reference model for me. He inspired me greatly to work on this project. His willingness to motivate me contributed tremendously to my project. I would also like to thank him for showing me some examples related to the topic of my project. His guidance and patience throughout the tumultuous time of conducting scientific investigations related to this project are much appreciated. Besides, his invaluable support and insightful suggestions, not to mention all the hard work and extra time poured in, have resulted in the completion of this project. I would also like to express my deepest gratitude to the Ministry of Higher Education (MOHE), which provided me with the MyBrain scheme throughout my candidature period. Last but not least, my heartfelt gratitude to my family, especially my mother, for her continuous support in my pursuit of this master's degree and endless love in my life.

TABLE OF CONTENTS: ACKNOWLEDGEMENTS, LIST OF TABLES, LIST OF FIGURES -

Brno University of Technology (Vysoké učení technické v Brně)

BRNO UNIVERSITY OF TECHNOLOGY (VYSOKÉ UČENÍ TECHNICKÉ V BRNĚ), FACULTY OF MECHANICAL ENGINEERING, INSTITUTE OF SOLID MECHANICS, MECHATRONICS AND BIOMECHANICS

THE USAGE OF SALOME MECA WORKBENCH FOR TASK SOLUTION IN THE FIELD OF SOLID MECHANICS

BACHELOR'S THESIS. AUTHOR: Tomáš Anděl. SUPERVISOR: Ing. Petr Vosynek, Ph.D. BRNO 2016

Bachelor's thesis assignment

Institute: Institute of Solid Mechanics, Mechatronics and Biomechanics
Student: Tomáš Anděl
Study programme: Mechanical Engineering
Field of study: Fundamentals of Mechanical Engineering
Supervisor: Ing. Petr Vosynek, Ph.D.
Academic year: 2015/16

In accordance with Act No. 111/1998 on higher education institutions and with the Study and Examination Regulations of Brno University of Technology, the director of the institute assigns you the following bachelor's thesis topic: The usage of Salome Meca workbench for task solution in the field of solid mechanics

Brief description of the task: The thesis focuses on solving solid mechanics problems with the finite element method in the Salome Meca software environment. The student will apply existing knowledge from solid mechanics courses and extend it so as to be able to assess more complex geometries, not only at the level of bars but also of shells and solids. The output of the thesis will be a comparison of the obtained results with the analytical solution from the recommended literature, or with the results of another computational environment (ANSYS or ANSYS Workbench).

Goals of the bachelor's thesis:
- basic orientation in the Salome Meca environment
- creation of computational models for basic solid mechanics problems
- analysis of the obtained results with subsequent verification
- description of how the computational models were built and discussion of the results

List of literature: Aubury, J. P. (2013): Beginning with Code_Aster. A Practical Introduction to Finite Element Method Using Code_Aster, Gmsh and Salome. -

List of Finite Element Software Packages (Wikipedia, the Free Encyclopedia)

12/15/2015 List of finite element software packages. From Wikipedia, the free encyclopedia. https://en.wikipedia.org/wiki/List_of_finite_element_software_packages

This is a list of software packages that implement the finite element method for solving partial differential equations or aid in the pre and postprocessing of finite element models.

Free/Open source

| Name | Description | License | Operating System |
|---|---|---|---|
| Agros2D | Multiplatform open source application for the solution of physical problems based on the Hermes library | GNU GPL | Linux, Windows |
| CalculiX | It is an Open Source FEA project. The solver uses a partially compatible ABAQUS file format. The pre/postprocessor generates input data for many FEA and CFD applications | GNU GPL | Linux, Windows |
| Code Aster | is an Open Source software package for Civil and Structural Engineering finite element analysis and numeric simulation in structural mechanics which is written in Python and Fortran | GNU GPL | Linux, FreeBSD |
| Concepts | is an Open Source software package C/C++ hp FEM/DGFEM/BEM library for elliptic equations | GNU GPL | Mac OS X, Windows |
| deal.II | Comprehensive set of tools for finite element codes, scaling from laptops to clusters with 10,000+ cores. Written in C++. | QPL up to release 7.2, LGPL after that | Linux, Unix, Mac OS X, Windows |
| DUNE | Distributed and Unified Numerics Environment, written … | GPL Version 2 with Run Time … | Linux, Unix, Mac OS X |

CAASE20 Agenda & Content Line-Up

Want to be Part of the Largest Independent Conference on Analysis and Simulation in Engineering in 2020?

200 Presentations. 13 Training Courses. 4 Workshops.

CAASE 2020 will bring together the leading visionaries, developers, and practitioners of CAE-related technologies in an open forum, unlike any other, to share experiences, discuss relevant trends, discover common themes and explore future issues.

Presentations at this event will be centered on four key themes:
1 Driving the Design of Physical Systems, Components & Products
2 Implementing Simulation Governance & Democratization
3 Advancing Manufacturing Processes & Additive Manufacturing
4 Addressing Business Strategies & Challenges

nafems.org/CAASE20

We greatly appreciate the support of our sponsors! Platinum Sponsors. Event Sponsors. Supporting Sponsors.

The CAASE20 Virtual Conference Will Feature: 3 full-days of ground-breaking content; 7 parallel tracks; 63 sessions; 200 presentations, 17 training courses, and workshops; 4 incredible keynotes; 25 industry-leading sponsors virtually exhibiting ...all at your fingertips with search (title and speaker) & 75 filter tags.

SIMULATION FOCUS Conference Preview. DigitalEngineering247.com. The Conference on Advancing Analysis & Simulation in Engineering

Contents: Join Us Online; Welcome to CAASE20; CAASE By the Numbers -

EMI Simulation Advances; Review: Lenovo Mobile Workstation; ESRD CAE Handbook Walkthrough

May 2018. FOCUS ON: IoT P.12. AutoCAD 2019 Review P.28. The Digital Twin Divide P.24. Deep Learning for Engineers P.38. EMI SIMULATION ADVANCES P.35. REVIEW: LENOVO MOBILE WORKSTATION P.41. ESRD CAE HANDBOOK WALKTHROUGH P.31. digitaleng.news

THE ALL NEW S3. Ultimate performance. Maximum productivity. Featuring a compact design, up to three GPUs, and an 8th Generation Intel® Core™ i7 processor overclocked to 4.8GHz, the new APEXX S3 is optimized for the applications you rely on most. Intel® Core™ i7 processor. Intel Inside®. Extraordinary Performance Outside. 888-984-7589, 512-835-0400, www.boxx.com/des3. Intel, the Intel Logo, Intel Inside, Intel Core, and Core Inside are trademarks of Intel Corporation in the U.S. and/or other countries.

DEGREES OF FREEDOM, by Jamie J. Gooch

Making Connections

ARE YOU TIRED OF HEARING about the internet of things (IoT)? If so, you’re out of luck. Forecasts from industry experts vary a bit, but they all predict the billions of “things” connected to the internet will grow to tens of billions in just a few years. -

... work with the Walter Reed National Military Medical Center in Washington, D.C., but knew there were many wounded civilians in Iraq who could also benefit from the technology. He contacted Doctors Without Borders, but the organization was already pulling out of Iraq for safety. Through his

Tochnog Professional FEA

08/05/2020 User:RoddemanDennis/sandbox - Wikipedia

TOCHNOG PROFESSIONAL FEA

Tochnog Professional is a Finite Element Analysis (FEA) solver developed and distributed by Tochnog Professional Company. It can be used for free, both for academic work and commercially. The source is not made publicly available, however. The software specializes in geotechnical applications, but also has options for civil engineering and mechanical engineering. Input data is provided by means of an input file containing all information that is needed for performing a calculation. Parts of the input file can be generated by external pre-processors. Output is written to several output files. These can either be used in external post-processors, or the files can be used directly for interpretation of calculation results.

Tochnog Professional FEA
Original author(s): User:RoddemanDennis
Developer(s): Tochnog Professional Company
Initial release: 1997
Stable release: current date
Operating system: Microsoft Windows, Linux
Platform: Windows/x86-64, Linux x86-64
Type: Computer-aided engineering, Finite Element Analysis
Website: tochnogprofessional.nl (http://tochnogprofessional.nl)

Contents: History; Tochnog Professional functionality; Example calculations; Tochnog Professional Company; Supported platforms; References; External links

History

Development of the Tochnog Professional program was started in 1997 by Dennis Roddeman. The programming is done completely in the C++ programming language. The program is set up as a batch program, and should be started from the command line. In 2019 Dennis Roddeman started Tochnog Professional Company (https://www.tochnogprofessional.nl), which presently is the owner of the Tochnog Professional program. Since 2019 the program has been listed on the soilmodels research site (https://www.soilmodels.com)[1][2] with over 2000 research members both as generic purpose program and also as incremental driver. -

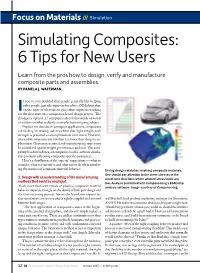

Simulating Composites: 6 Tips for New Users Learn from the Pros How to Design, Verify and Manufacture Composite Parts and Assemblies

Focus on Materials /// Simulation

Simulating Composites: 6 Tips for New Users. Learn from the pros how to design, verify and manufacture composite parts and assemblies. BY PAMELA J. WATERMAN

If you’ve ever doubted that people generally like helping other people, just ask engineers for advice. DE did just that, on the topic of what tips to give other engineers working for the first time on a composites-based design project. The floodgates opened: 15 companies shared thousands of words of wisdom on what is clearly a complex but intriguing subject.

Popular for decades in aerospace applications, composites are finding increasing use anywhere that light weight with strength is preferred as an improvement over metal. However, successful composite use involves far more than drop-in replacement. Geometry, material and manufacturing steps must be considered against weight, performance and cost. This complexity has driven dozens of companies to offer software simulation products addressing composite-specific parameters.

Here’s a distillation of the experts’ suggestions: what to consider, what to remember, and what not to do when simulating the nuances of composite material behavior.

[Figure caption] During design validation involving composite materials, one should pay attention to the shear stresses in the out-of-plane directions where allowed stress levels are low. Analysis performed with Componeering’s ESAComp analysis software. Image courtesy of Componeering.

1. Design with an understanding of the manufacturing methods that could be employed.

Much more than with metals or plastics, composite material behavior depends strongly on the details of both part design and the manufacturing process.