Modeling Taiwanese Southern-Min Tone Sandhi Using Rule-Based Methods

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Pan-Sinitic Object Marking: Morphology and Syntax*

Breaking Down the Barriers, 785-816, 2013-1-050-037-000234-1. Pan-Sinitic Object Marking: Morphology and Syntax*. Hilary Chappell (曹茜蕾), EHESS. In Chinese languages, when a direct object occurs in a non-canonical position preceding the main verb, this SOV structure can be morphologically marked by a preposition whose source comes largely from verbs or deverbal prepositions. For example, markers such as kā 共 in Southern Min are ultimately derived from the verb ‘to accompany’, pau11 幫 in many Huizhou and Wu dialects is derived from the verb ‘to help’ and bǎ 把 from the verb ‘to hold’ in standard Mandarin and the Jin dialects. In general, these markers are used to highlight an explicit change of state affecting a referential object, located in this preverbal position. This analysis sets out to address the issue of diversity in such object-marking constructions in order to examine the question of whether areal patterns exist within Sinitic languages on the basis of the main lexical fields of the object markers, if not the construction types. The possibility of establishing four major linguistic zones in China is thus explored with respect to grammaticalization pathways. Key words: typology, grammaticalization, object marking, disposal constructions, linguistic zones. 1. Background to the issue. In the case of transitive verbs, it is uncontroversial to state that a common word order in Sinitic languages is for direct objects to follow the main verb without any overt morphological marking: * This is a “cross-straits” paper, as earlier versions were presented in turn at both the Institute of Linguistics, Academia Sinica, during the joint 14th Annual Conference of the International Association of Chinese Linguistics and 10th International Symposium on Chinese Languages and Linguistics, held in Taipei on May 25-29, 2006, and also at an invited seminar at the Institute of Linguistics, Chinese Academy of Social Sciences in Beijing on 23rd October 2006.

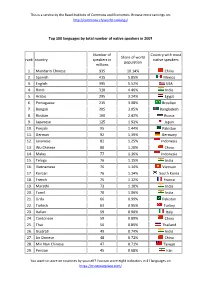

Top 100 Languages by Total Number of Native Speakers in 2007 Rank

This is a service by the Basel Institute of Commons and Economics. Browse more rankings on: http://commons.ch/world-rankings/

Top 100 languages by total number of native speakers in 2007

| Rank | Language | Native speakers (millions) | Share of world population | Country with most native speakers |
|------|----------|----------------------------|---------------------------|-----------------------------------|
| 1 | Mandarin Chinese | 935 | 10.14% | China |
| 2 | Spanish | 415 | 5.85% | Mexico |
| 3 | English | 395 | 5.52% | USA |
| 4 | Hindi | 310 | 4.46% | India |
| 5 | Arabic | 295 | 3.24% | Egypt |
| 6 | Portuguese | 215 | 3.08% | Brazil |
| 7 | Bengali | 205 | 3.05% | Bangladesh |
| 8 | Russian | 160 | 2.42% | Russia |
| 9 | Japanese | 125 | 1.92% | Japan |
| 10 | Punjabi | 95 | 1.44% | Pakistan |
| 11 | German | 92 | 1.39% | Germany |
| 12 | Javanese | 82 | 1.25% | Indonesia |
| 13 | Wu Chinese | 80 | 1.20% | China |
| 14 | Malay | 77 | 1.16% | Indonesia |
| 15 | Telugu | 76 | 1.15% | India |
| 16 | Vietnamese | 76 | 1.14% | Vietnam |
| 17 | Korean | 76 | 1.14% | South Korea |
| 18 | French | 75 | 1.12% | France |
| 19 | Marathi | 73 | 1.10% | India |
| 20 | Tamil | 70 | 1.06% | India |
| 21 | Urdu | 66 | 0.99% | Pakistan |
| 22 | Turkish | 63 | 0.95% | Turkey |
| 23 | Italian | 59 | 0.90% | Italy |
| 24 | Cantonese | 59 | 0.89% | China |
| 25 | Thai | 56 | 0.85% | Thailand |
| 26 | Gujarati | 49 | 0.74% | India |
| 27 | Jin Chinese | 48 | 0.72% | China |
| 28 | Min Nan Chinese | 47 | 0.71% | Taiwan |
| 29 | Persian | 45 | 0.68% | Iran |

You want to score on countries by yourself? You can score eight indicators in 41 languages on https://trustyourplace.com/

Congressional-Executive Commission on China

CONGRESSIONAL-EXECUTIVE COMMISSION ON CHINA ANNUAL REPORT 2008. ONE HUNDRED TENTH CONGRESS, SECOND SESSION. OCTOBER 31, 2008. Printed for the use of the Congressional-Executive Commission on China. Available via the World Wide Web: http://www.cecc.gov. U.S. GOVERNMENT PRINTING OFFICE, 44–748 PDF, WASHINGTON : 2008. For sale by the Superintendent of Documents, U.S. Government Printing Office. Internet: bookstore.gpo.gov. Phone: toll free (866) 512–1800; DC area (202) 512–1800. Fax: (202) 512–2104. Mail: Stop IDCC, Washington, DC 20402–0001. LEGISLATIVE BRANCH COMMISSIONERS. Senate: BYRON DORGAN, North Dakota, Co-Chairman; MAX BAUCUS, Montana; CARL LEVIN, Michigan; DIANNE FEINSTEIN, California; SHERROD BROWN, Ohio; CHUCK HAGEL, Nebraska; SAM BROWNBACK, Kansas. House: SANDER LEVIN, Michigan, Chairman; MARCY KAPTUR, Ohio; TOM UDALL, New Mexico; MICHAEL M. HONDA, California; TIMOTHY J. WALZ, Minnesota; CHRISTOPHER H. SMITH, New Jersey; EDWARD R. ROYCE, California; DONALD A.

Last Name First Name/Middle Name Course Award Course 2 Award 2 Graduation

Last Name First Name/Middle Name Course Award Course 2 Award 2 Graduation A/L Krishnan Thiinash Bachelor of Information Technology March 2015 A/L Selvaraju Theeban Raju Bachelor of Commerce January 2015 A/P Balan Durgarani Bachelor of Commerce with Distinction March 2015 A/P Rajaram Koushalya Priya Bachelor of Commerce March 2015 Hiba Mohsin Mohammed Master of Health Leadership and Aal-Yaseen Hussein Management July 2015 Aamer Muhammad Master of Quality Management September 2015 Abbas Hanaa Safy Seyam Master of Business Administration with Distinction March 2015 Abbasi Muhammad Hamza Master of International Business March 2015 Abdallah AlMustafa Hussein Saad Elsayed Bachelor of Commerce March 2015 Abdallah Asma Samir Lutfi Master of Strategic Marketing September 2015 Abdallah Moh'd Jawdat Abdel Rahman Master of International Business July 2015 AbdelAaty Mosa Amany Abdelkader Saad Master of Media and Communications with Distinction March 2015 Abdel-Karim Mervat Graduate Diploma in TESOL July 2015 Abdelmalik Mark Maher Abdelmesseh Bachelor of Commerce March 2015 Master of Strategic Human Resource Abdelrahman Abdo Mohammed Talat Abdelziz Management September 2015 Graduate Certificate in Health and Abdel-Sayed Mario Physical Education July 2015 Sherif Ahmed Fathy AbdRabou Abdelmohsen Master of Strategic Marketing September 2015 Abdul Hakeem Siti Fatimah Binte Bachelor of Science January 2015 Abdul Haq Shaddad Yousef Ibrahim Master of Strategic Marketing March 2015 Abdul Rahman Al Jabier Bachelor of Engineering Honours Class II, Division 1 -

From Eurocentrism to Sinocentrism: the Case of Disposal Constructions in Sinitic Languages

From Eurocentrism to Sinocentrism: The case of disposal constructions in Sinitic languages. Hilary Chappell. 1. Introduction. 1.1. The issue. Although China has a long tradition in the compilation of rhyme dictionaries and lexica, it did not develop its own tradition for writing grammars until relatively late. In fact, the majority of early grammars on Chinese dialects, which begin to appear in the 17th century, were written by Europeans in collaboration with native speakers. For example, the Arte de la lengua Chiõ Chiu [Grammar of the Chiõ Chiu language] (1620) appears to be one of the earliest grammars of any Sinitic language, representing a koine of urban Southern Min dialects, as spoken at that time (Chappell 2000). It was composed by Melchior de Mançano in Manila to assist the Dominicans’ work of proselytizing to the community of Chinese Sangley traders from southern Fujian. Another major grammar, similarly written by a Dominican scholar, Francisco Varo, is the Arte de la lengua mandarina [Grammar of the Mandarin language], completed in 1682 while he was living in Funing, and later posthumously published in 1703 in Canton. Spanish missionaries, particularly the Dominicans, played a significant role in Chinese linguistic history as the first to record the grammar and lexicon of vernaculars, create romanization systems and promote the use of the demotic or specially created dialect characters. This is discussed in more detail in van der Loon (1966, 1967). The model they used was the (at that time) famous Latin grammar of Elio Antonio de Nebrija (1444–1522), Introductiones Latinae (1481), and possibly the earliest grammar of a Romance language, Grammatica de la Lengua Castellana (1492) by the same scholar, although according to Peyraube (2001), the reprinted version was not available prior to the 18th century.

Construction and Automatization of a Minnan Child Speech Corpus with Some Research Findings

Computational Linguistics and Chinese Language Processing, Vol. 12, No. 4, December 2007, pp. 411-442. © The Association for Computational Linguistics and Chinese Language Processing. Construction and Automatization of a Minnan Child Speech Corpus with some Research Findings. Jane S. Tsay. Abstract: Taiwanese Child Language Corpus (TAICORP) is a corpus based on spontaneous conversations between young children and their adult caretakers in Minnan (Taiwan Southern Min) speaking families in Chiayi County, Taiwan. This corpus is special in several ways: (1) It is a Minnan corpus; (2) It is a speech-based corpus; (3) It is a corpus of a language that does not yet have a conventionalized orthography; (4) It is a collection of longitudinal child language data; (5) It is one of the largest child corpora in the world with about two million syllables in 497,426 lines (utterances) based on about 330 hours of recordings. Regarding the format, TAICORP adopted the Child Language Data Exchange System (CHILDES) [MacWhinney and Snow 1985; MacWhinney 1995] for transcribing and coding the recordings into machine-readable text. The goals of this paper are to introduce the construction of this speech-based corpus and at the same time to discuss some problems and challenges encountered. The development of an automatic word segmentation program with a spell-checker is also discussed. Finally, some findings in syllable distribution are reported. Keywords: Minnan, Taiwan Southern Min, Taiwanese, Speech Corpus, Child Language, CHILDES, Automatic Word Segmentation. 1. Introduction. Taiwanese Child Language Corpus is a corpus based on spontaneous conversations between young children and their adult caretakers in Minnan speaking families in Chiayi County, Taiwan.

Post-Cold War Experimental Theatre of China: Staging Globalisation and Its Resistance

Post-Cold War Experimental Theatre of China: Staging Globalisation and Its Resistance. Zheyu Wei. A thesis submitted for the degree of Doctor of Philosophy, The School of Creative Arts, The University of Dublin, Trinity College, 2017. Declaration: I declare that this thesis has not been submitted as an exercise for a degree at this or any other university and it is my own work. I agree to deposit this thesis in the University’s open access institutional repository or allow the library to do so on my behalf, subject to Irish Copyright Legislation and Trinity College Library Conditions of use and acknowledgement. Zheyu Wei. Summary: This thesis is a study of Chinese experimental theatre from the year 1990 to the year 2014, to examine the involvement of Chinese theatre in the process of globalisation – the increasingly intensified relationship between places that are far away from one another but that are connected by the movement of flows on a global scale and the consciousness of the world as a whole. The central argument of this thesis is that Chinese post-Cold War experimental theatre has been greatly influenced by the trend of globalisation. This dissertation discusses the work of a number of representative figures in the “Little Theatre Movement” in mainland China since the 1980s, e.g. Lin Zhaohua, Meng Jinghui, Zhang Xian, etc., whose theatrical experiments have had a strong impact on the development of contemporary Chinese theatre, and inspired a younger generation of theatre practitioners. Through both close reading of literary and visual texts, and the inspection of secondary texts such as interviews and commentaries, an overview of performances mirroring the age-old Chinese culture’s struggle under the unprecedented modernising and globalising pressure in the post-Cold War period will be provided.

The Media’s Influence on Success and Failure of Dialects: The Case of Cantonese and Shaan’xi Dialects

THE MEDIA’S INFLUENCE ON SUCCESS AND FAILURE OF DIALECTS: THE CASE OF CANTONESE AND SHAAN’XI DIALECTS Yuhan Mao A Thesis Submitted in Partial Fulfillment of the Requirements for the Degree of Master of Arts (Language and Communication) School of Language and Communication National Institute of Development Administration 2013 ABSTRACT Title of Thesis The Media’s Influence on Success and Failure of Dialects: The Case of Cantonese and Shaan’xi Dialects Author Miss Yuhan Mao Degree Master of Arts in Language and Communication Year 2013 In this thesis the researcher addresses an important set of issues - how language maintenance (LM) between dominant and vernacular varieties of speech (also known as dialects) - are conditioned by increasingly globalized mass media industries. In particular, how the television and film industries (as an outgrowth of the mass media) related to social dialectology help maintain and promote one regional variety of speech over others is examined. These issues and data addressed in the current study have the potential to make a contribution to the current understanding of social dialectology literature - a sub-branch of sociolinguistics - particularly with respect to LM literature. The researcher adopts a multi-method approach (literature review, interviews and observations) to collect and analyze data. The researcher found support to confirm two positive correlations: the correlative relationship between the number of productions of dialectal television series (and films) and the distribution of the dialect in question, as well as the number of dialectal speakers and the maintenance of the dialect under investigation. ACKNOWLEDGMENTS The author would like to express sincere thanks to my advisors and all the people who gave me invaluable suggestions and help. -

Ethnic Nationalist Challenge to Multi-Ethnic State: Inner Mongolia and China

ETHNIC NATIONALIST CHALLENGE TO MULTI-ETHNIC STATE: INNER MONGOLIA AND CHINA. Temtsel Hao, 12.2000. Thesis submitted to the University of London in partial fulfilment of the requirement for the Degree of Doctor of Philosophy in International Relations, London School of Economics and Political Science, University of London. UMI Number: U159292. All rights reserved. INFORMATION TO ALL USERS: The quality of this reproduction is dependent upon the quality of the copy submitted. In the unlikely event that the author did not send a complete manuscript and there are missing pages, these will be noted. Also, if material had to be removed, a note will indicate the deletion. Published by ProQuest LLC 2014. Copyright in the Dissertation held by the Author. Microform Edition © ProQuest LLC. All rights reserved. This work is protected against unauthorized copying under Title 17, United States Code. ProQuest LLC, 789 East Eisenhower Parkway, P.O. Box 1346, Ann Arbor, MI 48106-1346. ABSTRACT: This thesis examines the resurgence of Mongolian nationalism since the onset of the reforms in China in 1979 and the impact of this resurgence on the legitimacy of the Chinese state. The period of reform has witnessed the revival of nationalist sentiments not only of the Mongols, but also of the Han Chinese (and other national minorities). This development has given rise to two related issues: first, what accounts for the resurgence itself; and second, does it challenge the basis of China’s national identity and of the legitimacy of the state as these concepts have previously been understood.

ISO 639-3 Code Split Request Template

ISO 639-3 Registration Authority Request for Change to ISO 639-3 Language Code Change Request Number: 2019-062 (completed by Registration authority) Date: 2019 Aug 31 Primary Person submitting request: Kirk Miller E-mail address: kirkmiller at gmail dot com Names, affiliations and email addresses of additional supporters of this request: PLEASE NOTE: This completed form will become part of the public record of this change request and the history of the ISO 639-3 code set and will be posted on the ISO 639-3 website. Types of change requests Type of change proposed (check one): 1. Modify reference information for an existing language code element 2. Propose a new macrolanguage or modify a macrolanguage group 3. Retire a language code element from use (duplicate or non-existent) 4. Expand the denotation of a code element through the merging one or more language code elements into it (retiring the latter group of code elements) 5. Split a language code element into two or more new code elements 6. Create a code element for a previously unidentified language For proposing a change to an existing code element, please identify: Affected ISO 639-3 identifier: nan Associated reference name: Min Nan Chinese 5. Split a language code element into two or more code elements (a) List the languages into which this code element should be split: Hokkien / Hoklo / Quanzhang Chinese Chaoshan Min / Teo-Swa Chinese Datian Min Chinese Leizhou Min Chinese Hainanese Min / Qiong Wen Chinese (b) Referring to the criteria given above, give the rationale for splitting the existing code element into two or more languages: The varieties of Min Nan are not mutually intelligible. -

The Grammaticalization of Hakka, Mandarin and Southern Min

The Grammaticalization of Hakka, Mandarin and Southern Min The Interaction of Negatives with Modality, Aspect, and Interrogatives by Hui-Ling Yang A Dissertation Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy Approved April 2012 by the Graduate Supervisory Committee: Elly van Gelderen, Chair Karen Adams Carrie Gillon Chaofen Sun ARIZONA STATE UNIVERSITY May 2012 ABSTRACT The primary topic of this dissertation is the grammaticalization of negation in three Sinitic language varieties: Hakka, Mandarin, and Southern Min. I discuss negative morphemes that are used under different modality or aspect contexts, including ability, volition, necessity, and perfectivity. Not only does this study examine Southern Min affirmative and negative pairs, but it also highlights the grammaticalization of negation and parametric differences in negation among the languages under investigation. This dissertation also covers the reanalysis of negatives into interrogatives. I approach the investigation of Southern Min negation from both synchronic and diachronic perspectives. I analyze corpus data in addition to data collected from fieldwork for the contemporary linguistic data. For my diachronic research of Chinese negation, I use historical texts and etymological dictionaries. Diachronically, many of the negative morphemes originate from full-fledged verbs and undergo an analogous grammaticalization process that consists of multiple stages of reanalysis from V to T (aspect; modality), and then T to C (interrogative; discourse). I explain this reanalysis, which involves head-to-head movement, using generative frameworks that combine a modified cartographic approach and the Minimalist Economy Principles. Synchronic data show that Southern Min affirmative modals are characterized by a certain morphological doubling. -

Surname Methodology in Defining Ethnic Populations : Chinese

Surname Methodology in Defining Ethnic Populations: Chinese Canadians Ethnic Surveillance Series #1 August, 2005 Surveillance Methodology, Health Surveillance, Public Health Division, Alberta Health and Wellness For more information contact: Health Surveillance Alberta Health and Wellness 24th Floor, TELUS Plaza North Tower P.O. Box 1360 10025 Jasper Avenue, STN Main Edmonton, Alberta T5J 2N3 Phone: (780) 427-4518 Fax: (780) 427-1470 Website: www.health.gov.ab.ca ISBN (on-line PDF version): 0-7785-3471-5 Acknowledgements This report was written by Dr. Hude Quan, University of Calgary Dr. Donald Schopflocher, Alberta Health and Wellness Dr. Fu-Lin Wang, Alberta Health and Wellness (Authors are ordered by alphabetic order of surname). The authors gratefully acknowledge the surname review panel members of Thu Ha Nguyen and Siu Yu, and valuable comments from Yan Jin and Shaun Malo of Alberta Health & Wellness. They also thank Dr. Carolyn De Coster who helped with the writing and editing of the report. Thanks to Fraser Noseworthy for assisting with the cover page design. i EXECUTIVE SUMMARY A Chinese surname list to define Chinese ethnicity was developed through literature review, a panel review, and a telephone survey of a randomly selected sample in Calgary. It was validated with the Canadian Community Health Survey (CCHS). Results show that the proportion who self-reported as Chinese has high agreement with the proportion identified by the surname list in the CCHS. The surname list was applied to the Alberta Health Insurance Plan registry database to define the Chinese ethnic population, and to the Vital Statistics Death Registry to assess the Chinese ethnic population mortality in Alberta.