Object-Sensitive Deep Reinforcement Learning

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

A History of Video Game Consoles Introduction the First Generation

A History of Video Game Consoles By Terry Amick, Gerald Long, James Schell, and Gregory Shehan. Introduction: Today video games are a multibillion-dollar industry. They are in practically all American households. They are a major driving force in electronic innovation and development. You would hardly guess this, though, from their modest beginning. The first video games were played on mainframe computers in the 1950s through the 1960s (Winter, n.d.). Arcade games would be the first glimpse for the general public of video games. Magnavox would produce the first home video game console featuring the popular arcade game Pong for the 1972 Christmas Season, released as Tele-Games Pong (Ellis, n.d.). The First Generation: Magnavox Odyssey. Rushed into production, the original game did not even have a microprocessor. Games were selected by using toggle switches. At first sales were poor because people mistakenly believed you needed a Magnavox TV to play the game (GameSpy, n.d., para. 11). By 1975 annual sales had reached 300,000 units (Gamester81, 2012). Other manufacturers copied Pong and began producing their own game consoles, which promptly got them sued for copyright infringement (Barton & Loguidice, n.d.). The Second Generation: Atari 2600. Atari released the 2600 in 1977. Although not the first, the Atari 2600 popularized the use of a microprocessor and game cartridges in video game consoles. The original device had an 8-bit 1.19 MHz 6507 microprocessor (“The Atari”, n.d.), two joysticks, a paddle controller, and two game cartridges. Combat and Pac Man were included with the console. In 2007 the Atari 2600 was inducted into the National Toy Hall of Fame (“National Toy”, n.d.).

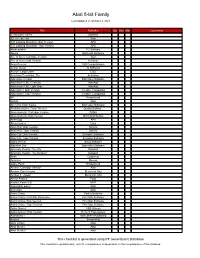

Atari 8-Bit Family

Atari 8-bit Family Last Updated on October 2, 2021 Title Publisher Qty Box Man Comments 221B Baker Street Datasoft 3D Tic-Tac-Toe Atari 747 Landing Simulator: Disk Version APX 747 Landing Simulator: Tape Version APX Abracadabra TG Software Abuse Softsmith Software Ace of Aces: Cartridge Version Atari Ace of Aces: Disk Version Accolade Acey-Deucey L&S Computerware Action Quest JV Software Action!: Large Label OSS Activision Decathlon, The Activision Adventure Creator Spinnaker Software Adventure II XE: Charcoal AtariAge Adventure II XE: Light Gray AtariAge Adventure!: Disk Version Creative Computing Adventure!: Tape Version Creative Computing AE Broderbund Airball Atari Alf in the Color Caves Spinnaker Software Ali Baba and the Forty Thieves Quality Software Alien Ambush: Cartridge Version DANA Alien Ambush: Disk Version Micro Distributors Alien Egg APX Alien Garden Epyx Alien Hell: Disk Version Syncro Alien Hell: Tape Version Syncro Alley Cat: Disk Version Synapse Software Alley Cat: Tape Version Synapse Software Alpha Shield Sirius Software Alphabet Zoo Spinnaker Software Alternate Reality: The City Datasoft Alternate Reality: The Dungeon Datasoft Ankh Datamost Anteater Romox Apple Panic Broderbund Archon: Cartridge Version Atari Archon: Disk Version Electronic Arts Archon II - Adept Electronic Arts Armor Assault Epyx Assault Force 3-D MPP Assembler Editor Atari Asteroids Atari Astro Chase Parker Brothers Astro Chase: First Star Rerelease First Star Software Astro Chase: Disk Version First Star Software Astro Chase: Tape Version First Star Software Astro-Grover CBS Games Astro-Grover: Disk Version Hi-Tech Expressions Astronomy I Main Street Publishing Asylum ScreenPlay Atari LOGO Atari Atari Music I Atari Atari Music II Atari This checklist is generated using RF Generation's Database. This checklist is updated daily, and its completeness is dependent on the completeness of the database.

Finding Aid to the Atari Coin-Op Division Corporate Records, 1969-2002

Brian Sutton-Smith Library and Archives of Play Atari Coin-Op Division Corporate Records Finding Aid to the Atari Coin-Op Division Corporate Records, 1969-2002 Summary Information Title: Atari Coin-Op Division corporate records Creator: Atari, Inc. coin-operated games division (primary) ID: 114.6238 Date: 1969-2002 (inclusive); 1974-1998 (bulk) Extent: 600 linear feet (physical); 18.8 GB (digital) Language: The materials in this collection are primarily in English, although there are a few instances of Japanese. Abstract: The Atari Coin-Op records comprise 600 linear feet of game design documents, memos, focus group reports, market research reports, marketing materials, arcade cabinet drawings, schematics, artwork, photographs, videos, and publication material. Much of the material is oversized. Repository: Brian Sutton-Smith Library and Archives of Play at The Strong, One Manhattan Square, Rochester, New York 14607, 585.263.2700 Administrative Information Conditions Governing Use: This collection is open for research use by staff of The Strong and by users of its library and archives. Though intellectual property rights (including, but not limited to any copyright, trademark, and associated rights therein) have not been transferred, The Strong has permission to make copies in all media for museum, educational, and research purposes. Conditions Governing Access: At this time, audiovisual and digital files in this collection are limited to on-site researchers only. It is possible that certain formats may be inaccessible or restricted. Custodial History: The Atari Coin-Op Division corporate records were acquired by The Strong in June 2014 from Scott Evans. The records were accessioned by The Strong under Object ID 114.6238.

Atari IP Catalog 2019 IP List (Highlighted Links Are Included in Deck)

Atari IP Catalog 2019 IP List (Highlighted Links are Included in Deck) 3D Asteroids Basketball Fatal Run Miniature Golf Retro Atari Classics Super Asteroids & Missile 3D Tic-Tac-Toe Basketbrawl Final Legacy Minimum Return to Haunted House Command A Game of Concentration Bionic Breakthrough Fire Truck * Missile Command Roadrunner Super Baseball Adventure Black Belt Firefox * Missile Command 2 * RollerCoaster Tycoon Super Breakout Adventure II Black Jack Flag Capture Missile Command 3D Runaway * Super Bunny Breakout Agent X * Black Widow * Flyball * Monstercise Saboteur Super Football Airborne Ranger Boogie Demo Food Fight (Charley Chuck's) Monte Carlo * Save Mary Superbug * Air-Sea Battle Booty Football Motor Psycho Scrapyard Dog Surround Akka Arrh * Bowling Frisky Tom MotoRodeo Secret Quest Swordquest: Earthworld Alien Brigade Boxing * Frog Pond Night Driver Sentinel Swordquest: Fireworld Alpha 1 * Brain Games Fun With Numbers Ninja Golf Shark Jaws * Swordquest: Waterworld Anti-Aircraft * Breakout Gerry the Germ Goes Body Off the Wall Shooting Arcade Tank * Aquaventure Breakout * Poppin Orbit * Sky Diver Tank II * Asteroids Breakout Boost Goal 4 * Outlaw Sky Raider * Tank III * Asteroids Deluxe * Canyon Bomber Golf Outlaw * Slot Machine Telepathy Asteroids On-line Casino Gotcha * Peek-A-Boo Slot Racers Tempest Asteroids: Gunner Castles and Catapults Gran Trak 10 * Pin Pong * Smokey Joe * Tempest 2000 Asteroids: Gunner+ Caverns of Mars Gran Trak 20 * Planet Smashers Soccer Tempest 4000 Atari 80 Classic Games in One! Centipede Gravitar Pong

Intellivision Development, Back in the Day

Intellivision Development, Back In The Day. Table of Contents: Introduction; Overall Process; APh Technological Consulting (Host Hardware and Operating System; Development Tools: CP-1610 Assembler, Text Editor, Pixel Editor; Test Harnesses; Tight Finances); Mattel Electronics

Atari Games Corp. V. Nintendo of America, Inc. ATARI GAMES CORP

Atari Games Corp. v. Nintendo of America, Inc. ATARI GAMES CORP. and TENGEN, INC., Plaintiffs-Appellants, vs. NINTENDO OF AMERICA INC. AND NINTENDO CO., LTD., Defendants-Appellees. 91-1293 United States Court Of Appeals For The Federal Circuit 975 F.2d 832, 24 U.S.P.Q.2D (BNA) 1015, Copy. L. Rep. (CCH) P26,978, 1992-2 Trade Cas. (CCH) P69,969, 92 Cal. Daily Op. Service 7858, 92 Daily Journal DAR 12936, 1992 U.S. App. Decision September 10, 1992, Decided Appealed from: U.S. District Court for the Northern District of California. Judge Smith M. Laurence Popofsky, Heller, Ehrman, White & McAuliffe, of San Francisco, California, argued for plaintiffs-appellants. With him on the brief were Robert S. Venning, Peter A. Wald, Kirk G. Werner, Robert B. Hawk, Michael K. Plimack and Dale A. Rice. Also on the brief were James B. Bear, Knobbe, Martens, Olson & Bear, of Newport Beach, California and G. Gervaise Davis, II, Schroeder, Davis & Orliss, Inc., of Monterey, California. Thomas G. Gallatin, Jr., Mudge, Rose, Guthrie, Alexander & Ferdon, of New York, New York, argued for defendants-appellees. With him on the brief was John J. Kirby, Jr. Also on the brief was Larry S. Nixon, Nixon & Vanderhye, P.C., of Arlington, Virginia. Before CLEVENGER, Circuit Judge, SMITH, Senior Circuit Judge, and RADER, Circuit Judge. [F.2d 835] RADER, Circuit Judge. Nintendo of America Inc., and Nintendo Co., Ltd. sell the Nintendo Entertainment System (NES). Two of Nintendo's competitors, Atari Games Corporation and its wholly-owned subsidiary, Tengen, Inc., sued Nintendo for, among other things, unfair competition, Sherman Act violations, and patent infringement.

Premiere Issue Monkeying Around Game Reviews: Special Report

Atari Coleco Intellivision Computers Vectrex Arcade. Classic Gamer Magazine, Premiere Issue, Fall 1999, www.classicgamer.com U.S. “Because Newer Isn’t Necessarily Better!” Special Report: Classic Videogames at E3. Monkeying Around: Revisiting Donkey Kong. Game Reviews: Atari, Intellivision, etc... Lost Arcade Classic: Warp Warp. Deep Thaw: Chris Lion Rediscovers His Atari. Plus! · Latest News · Guide to Halloween Games · Win the book, “Phoenix”. “As long as you enjoy the system you own and the software made for it, there’s no reason to mothball your equipment just because its manufacturer’s stock dropped.” - Arnie Katz, Editor of Electronic Games Magazine, 1984. Volume 1, Version 1.2, Fall 1999. PUBLISHER/EDITOR-IN-CHIEF: Chris Cavanaugh. ASSOCIATE EDITOR: Sarah Thomas. STAFF WRITERS: Kyle Snyder, Chris Lion, Patrick Wong, Darryl Guenther, Mike Genova, Damien Quicksilver, Frank Traut, Lee Seitz. LAYOUT/DESIGN: Chris Cavanaugh. PHOTO CREDITS: Sarah Thomas - Staff Photographer; Pong Machine scan (page 3) courtesy Sean Kelly - Digital Press CD-ROM; BIRA BIRA Photos courtesy Robert Batina. CONTACT INFORMATION: Classic Gamer Magazine, 7770 Regents Road #113-293, San Diego, Ca 92122; on the web: http://www.classicgamer.com. SPECIAL THANKS: To Sarah. CONTENTS: Reset!; Raves ‘N Rants: Letters from our readers; Classic Gamer Newswire: All the latest news; Book Bytes - Joystick Nation; Classic Advertisement: Arcadia Supercharger; Atari 5200; The “New” Classic Gamer: Opinion Column; Lost Arcade Classics: “Warp Warp”; Focus on Intellivision Cartridge Reviews; Doin’ The Donkey Kong: A closer look at our favorite monkey; Atari 2600 Cartridge Reviews.

Mastering Atari with Discrete World Models

Published as a conference paper at ICLR 2021 MASTERING ATARI WITH DISCRETE WORLD MODELS Danijar Hafner (Google Research), Timothy Lillicrap (DeepMind), Mohammad Norouzi (Google Research), Jimmy Ba (University of Toronto) ABSTRACT Intelligent agents need to generalize from past experience to achieve goals in complex environments. World models facilitate such generalization and allow learning behaviors from imagined outcomes to increase sample-efficiency. While learning world models from image inputs has recently become feasible for some tasks, modeling Atari games accurately enough to derive successful behaviors has remained an open challenge for many years. We introduce DreamerV2, a reinforcement learning agent that learns behaviors purely from predictions in the compact latent space of a powerful world model. The world model uses discrete representations and is trained separately from the policy. DreamerV2 constitutes the first agent that achieves human-level performance on the Atari benchmark of 55 tasks by learning behaviors inside a separately trained world model. With the same computational budget and wall-clock time, DreamerV2 reaches 200M frames and surpasses the final performance of the top single-GPU agents IQN and Rainbow. DreamerV2 is also applicable to tasks with continuous actions, where it learns an accurate world model of a complex humanoid robot and solves stand-up and walking from only pixel inputs. 1 INTRODUCTION To successfully operate in unknown environments, reinforcement learning agents need to learn about their environments over time. World models are an explicit way to represent an agent’s knowledge about its environment. Compared to model-free reinforcement learning that learns through trial and error, world models facilitate generalization and can predict the outcomes of potential actions to enable planning (Sutton, 1991). [Figure: Atari Performance, model-based and model-free agents relative to the Human Gamer level]

Examining the Dynamics of the US Video Game Console Market

Can Nintendo Get its Crown Back? Examining the Dynamics of the U.S. Video Game Console Market by Samuel W. Chow B.S. Electrical Engineering, Kettering University, 1997 A.L.M. Extension Studies in Information Technology, Harvard University, 2004 Submitted to the System Design and Management Program, the Technology and Policy Program, and the Engineering Systems Division on May 11, 2007 in Partial Fulfillment of the Requirements for the Degrees of Master of Science in Engineering and Management and Master of Science in Technology and Policy at the Massachusetts Institute of Technology June 2007 © 2007 Samuel W. Chow. All rights reserved. The author hereby grants to MIT permission to reproduce and to distribute publicly paper and electronic copies of this thesis document in whole or in part in any medium now known or hereafter created. Signature of Author: Samuel W. Chow, System Design and Management Program, and Technology and Policy Program, May 11, 2007. Certified by: James M. Utterback, David J. McGrath jr Professor of Management and Innovation, Thesis Supervisor. Accepted by: Pat Hale, Senior Lecturer in Engineering Systems, Director, System Design and Management Program. Accepted by: Dava J. Newman, Professor of Aeronautics and Astronautics and Engineering Systems, Director, Technology and Policy Program. Abstract Several generations of video game consoles have competed in the market since 1972.

Arcade Games

Arcade games. ARCADE games incl.: TEKKEN 2, STAR WARS, ALIEN 3, SEGA MANX TT SUPER BIKE and many others. Start date: Monday 27 May 2019 10:00. Viewing: Friday May 31 2019 from 14:00 until 16:00, BE-3600 Genk, Stalenstraat 32. End date: Monday 3 June 2019 from 19:00. Collection: Monday June 10 2019 from 14:00 until 16:00, BE-3600 Genk, Stalenstraat 32. Online bidding only! For more information and terms: www.moyersoen.be 03/06/2019 06:00 Lots (description, opening bid): Lot 1 (opening bid 500€): 1996 NAMCO PROP CYCLE DX (serial number: 34576) consisting of 2 parts, Coin Counter: 119430, 220-240V, 50HZ, 960 Watts, year of construction: 1996, manual present, this device is not connected, operation unknown, Dimensions WxDxH: 125x250x235cm. Lot 2 (opening bid 125€): GAELCO SA ATV TRACK MOTION B-253 (serial number: B-58052077) consisting of 2 parts, present keys: 2, coin meter: 511399, 230V 1000W 50HZ, this device is not connected, operation unknown, Dimensions WxDxH: 90X220X180 cm. Lot 3 (opening bid 125€): KONAMI GTI-CLUB RALLY COTE D'AZUR GN688 (serial number: GN688-83650122) consisting of 2 parts, Coin meter: 36906, 230V, 300W, 50HZ, this device is not connected, operation unknown, Dimensions WxDxH: 115x240x200cm. Lot 4 (opening bid 65€): NAMCO DIRT DASH (serial number: 324539) without display, coin meter 165044, this device is not connected, operation unknown, Dimensions WxDxH: 90x215x145cm. Lot 5 (opening bid 65€): 1996 KONAMI JETWAVE tm, Virtual Jet Watercraft, screen (damaged), built in 1996, this device is not connected, operation unknown, Dimensions screen WxDxH: 115X61x133cm, Console WxDxH: 130x200x140cm. Lot 6: TAB AUSTRIA SILVER-BALL (serial number:

Lecture 2: History of Video Gaming Hardware: the 2-D Era

Lecture 2: History of Video Gaming Hardware: The 2-D Era. Prof. Aaron Lanterman, School of Electrical and Computer Engineering, Georgia Institute of Technology. Atari 2600 VCS (1977) • ~1 MHz MOS 6507 – low-cost version of 6502 • 128 bytes RAM • First ROM cartridges 2K, later 4K • Discontinued 1992 • Retro releases now on the market! Adventure, Solaris. Pics & info from Wikipedia. Atari 2600 Hardware Tricks • Could put RAM on the cartridge – “Atari Super Chip” – 128 more bytes! – Jr. Pac-Man • “Bank switching” to put more ROM on cartridge – Only 4K immediately addressable - game still has to operate within individual 4K chunks at a time – Mr. Do!’s Castle (8K), Road Runner (16K, 1989) – Fatal Run (only 32K game released, 1990). Info & pics from AtariAge. Atari 2600 Hardware Tricks • “M Network” games – Atari 2600 games produced by Mattel – Controversial decision within Mattel – Done by same group that designed the Intellivision: APh Technology Consulting • Super Charger added 2K RAM – Originally planned as a hardware add-on – Public didn’t seem to like add-ons, so built in separately into each cartridge – BurgerTime: Cleverness beats hardware! Info & pic from www.intellivisionlives.com/bluesky/games/credits/atari1.shtml www.intellivisionlives.com/bluesky/games/credits/atari2.shtml Atari 2600 - The Chess Story (1) • “Atari never intended to create a Chess game for the Atari 2600” • “the original VCS box had a chess piece on it, and Atari was ultimately sued by someone in Florida due to the lack of

Evolving Simple Programs for Playing Atari Games

Evolving simple programs for playing Atari games. Dennis G Wilson, Sylvain Cussat-Blanc, Hervé Luga (University of Toulouse, IRIT - CNRS - UMR5505, Toulouse, France 31015), Julian F Miller (University of York, York, UK). ABSTRACT Cartesian Genetic Programming (CGP) has previously shown capabilities in image processing tasks by evolving programs with a function set specialized for computer vision. A similar approach can be applied to Atari playing. Programs are evolved using mixed type CGP with a function set suited for matrix operations, including image processing, but allowing for controller behavior to emerge. While the programs are relatively small, many controllers are competitive with state of the art methods for the Atari benchmark set and require less training time. By evaluating the programs of the best evolved individuals, simple but effective strategies can be [...] [...] task for artificial agents. Object representations and pixel reduction schemes have been used to condense this information into a more palatable form for evolutionary controllers. Deep neural network controllers have excelled here, benefiting from convolutional layers and a history of application in computer vision. Cartesian Genetic Programming (CGP) also has a rich history in computer vision, albeit less so than deep learning. CGP-IP has capably created image filters for denoising, object detection, and centroid determination. There has been less study using CGP in reinforcement learning tasks, and this work represents the first use of CGP as a game playing agent.