Evolving Simple Programs for Playing Atari Games

Recommended publications

Harmony Cartridge Online Manual

A new way to experience the Atari 2600.

© Copyright 2009-2011 – AtariAge (atariage.com). Second printing.

Contents
Introduction
Getting Started with Harmony
Harmony Firmware Upgrading
Frequently Asked Questions
Harmony File Extensions
Harmony Technical Specifications
Acknowledgments

Introduction

The Harmony cartridge is a programmable add-on for the Atari 2600 console that allows you to load an entire library of games into a single cartridge and then select which title you want to play from a friendly, on-screen menu interface. It features an SD card interface, making it simple to access the large library of Atari 2600 software. The Harmony cartridge supports almost all of the titles that have been produced for the Atari 2600. It can also be used to run your own Atari 2600 game creations on a real console. The Harmony cartridge is flash-upgradeable, and will be updated to support future Atari 2600 developments.

[Figure: back edge of the Harmony cartridge, showing the SD card slot and Mini-B USB port]

This guide tells you how to make the most of your Harmony cartridge. It should be read thoroughly before the cartridge is used for the first time. Your Harmony cartridge will provide you with many years of Atari 2600 enjoyment.

The following equipment is required to use the Harmony cartridge:
1) An Atari 2600, Atari 7800 or other Atari 2600-compatible console.
2) A Windows, Macintosh or Linux-based computer to transfer data onto the SD card.
3) An SD card adapter for your computer.
4) An SD or SDHC card up to 32GB capacity.

Finding Aid to the Atari Coin-Op Division Corporate Records, 1969-2002

Brian Sutton-Smith Library and Archives of Play

Summary Information
Title: Atari Coin-Op Division corporate records
Creator: Atari, Inc. coin-operated games division (primary)
ID: 114.6238
Date: 1969-2002 (inclusive); 1974-1998 (bulk)
Extent: 600 linear feet (physical); 18.8 GB (digital)
Language: The materials in this collection are primarily in English, although there are a few instances of Japanese.
Abstract: The Atari Coin-Op records comprise 600 linear feet of game design documents, memos, focus group reports, market research reports, marketing materials, arcade cabinet drawings, schematics, artwork, photographs, videos, and publication material. Much of the material is oversized.
Repository: Brian Sutton-Smith Library and Archives of Play at The Strong, One Manhattan Square, Rochester, New York 14607, 585.263.2700

Administrative Information
Conditions Governing Use: This collection is open for research use by staff of The Strong and by users of its library and archives. Though intellectual property rights (including, but not limited to any copyright, trademark, and associated rights therein) have not been transferred, The Strong has permission to make copies in all media for museum, educational, and research purposes.
Conditions Governing Access: At this time, audiovisual and digital files in this collection are limited to on-site researchers only. It is possible that certain formats may be inaccessible or restricted.
Custodial History: The Atari Coin-Op Division corporate records were acquired by The Strong in June 2014 from Scott Evans. The records were accessioned by The Strong under Object ID 114.6238.

Parasites — Excavating the Spectravideo Compumate

ghosts ; replicants ; parasites — Excavating the Spectravideo CompuMate These are the notes I used for my final presentation in the summer Media Archaeology Class, alongside images I used as slides. As such, they’re quite provisional, and once I have some time to hammer them into more coherent thoughts, I’ll update this post accordingly! So this presentation is about articulating and beginning the work of theorizing what I’m provisionally calling “computational parasites.” This is provisional because I don’t particularly like the term myself but I figured it would be good to give it a shorthand so I don’t have to be overvague or verbose about these objects and practices throughout this presentation. As most of you know, I came to this class with a set of research questions about a particular hack of the SNES game Super Mario World, wherein a YouTube personality was able to basically terraform the console original into playing, at least in form although we can talk about content, the iPhone game Flappy Bird. This video playing behind me is that hack. This hack is evocative for me for the way it’s 1) really fucking weird, in terms of pushing hardware and software to their limits, and 2) begins to help me think through ideas of the lifecycle of software objects, to pilfer a phrase from a Ted Chiang novella, and how these lifecycles are caught up in infrastructures of nostalgia, supply chains, and different kinds of materiality. But as fate would have it, I haven’t spent that much time with this hack this week because I got entranced by a different, just as weird object: the Spectravideo CompuMate. -

A Page 1 CART TITLE MANUFACTURER LABEL RARITY Atari Text

A

| CART TITLE | MANUFACTURER | LABEL | RARITY |
|---|---|---|---|
| 3D Tic-Tac Toe | Atari | Text | 2 |
| 3D Tic-Tac Toe | Sears | Text | 3 |
| Action Pak | Atari | | 6 |
| Adventure | Sears | Text | 3 |
| Adventure | Sears | Picture | 4 |
| Adventures of Tron | INTV | White | 3 |
| Adventures of Tron | M Network | Black | 3 |
| Air Raid | MenAvision | | 10 |
| Air Raiders | INTV | White | 3 |
| Air Raiders | M Network | Black | 2 |
| Air Wolf | Unknown | Taiwan Cooper | ? |
| Air-Sea Battle | Atari | Text #02 | 3 |
| Air-Sea Battle | Atari | Picture | 2 |
| Airlock | Data Age | Standard | 3 |
| Alien | 20th Century Fox | Standard | 4 |
| Alien | Xante | | 10 |
| Alpha Beam with Ernie | Atari | Children's | 4 |
| Arcade Golf | Sears | Text | 3 |
| Arcade Pinball | Sears | Text | 3 |
| Arcade Pinball | Sears | Picture | 3 |
| Armor Ambush | INTV | White | 4 |
| Armor Ambush | M Network | Black | 3 |
| Artillery Duel | Xonox | Standard | 5 |
| Artillery Duel/Chuck Norris Superkicks | Xonox | Double Ender | 5 |
| Artillery Duel/Ghost Master | Xonox | Double Ender | 5 |
| Artillery Duel/Spike's Peak | Xonox | Double Ender | 6 |
| Assault | Bomb | Standard | 9 |
| Asterix | Atari | | 10 |
| Asteroids | Atari | Silver | 3 |
| Asteroids | Sears | Text “66 Games” | 2 |
| Asteroids | Sears | Picture | 2 |
| Astro War | Unknown | Taiwan Cooper | ? |
| Astroblast | Telegames | Silver | 3 |
| Atari Video Cube | Atari | Silver | 7 |
| Atlantis | Imagic | Text | 2 |
| Atlantis | Imagic | Picture – Day Scene | 2 |
| Atlantis | Imagic | Blue | 4 |
| Atlantis II | Imagic | Picture – Night Scene | 10 |

B

| CART TITLE | MANUFACTURER | LABEL | RARITY |
|---|---|---|---|
| Bachelor Party | Mystique | Standard | 5 |
| Bachelor Party/Gigolo | Playaround | Standard | 5 |
| Bachelorette Party/Burning Desire | Playaround | Standard | 5 |
| Back to School Pak | Atari | | 6 |
| Backgammon | Atari | Text | 2 |
| Backgammon | Sears | Text | 3 |
| Bank Heist | 20th Century Fox | Standard | 5 |
| Barnstorming | Activision | Standard | 2 |
| Baseball | Sears | Text 49-75108 | |

Mastering Atari with Discrete World Models

Published as a conference paper at ICLR 2021

Danijar Hafner (Google Research), Timothy Lillicrap (DeepMind), Mohammad Norouzi (Google Research), Jimmy Ba (University of Toronto)

ABSTRACT

Intelligent agents need to generalize from past experience to achieve goals in complex environments. World models facilitate such generalization and allow learning behaviors from imagined outcomes to increase sample-efficiency. While learning world models from image inputs has recently become feasible for some tasks, modeling Atari games accurately enough to derive successful behaviors has remained an open challenge for many years. We introduce DreamerV2, a reinforcement learning agent that learns behaviors purely from predictions in the compact latent space of a powerful world model. The world model uses discrete representations and is trained separately from the policy. DreamerV2 constitutes the first agent that achieves human-level performance on the Atari benchmark of 55 tasks by learning behaviors inside a separately trained world model. With the same computational budget and wall-clock time, DreamerV2 reaches 200M frames and surpasses the final performance of the top single-GPU agents IQN and Rainbow. DreamerV2 is also applicable to tasks with continuous actions, where it learns an accurate world model of a complex humanoid robot and solves stand-up and walking from only pixel inputs.

1 INTRODUCTION

To successfully operate in unknown environments, reinforcement learning agents need to learn about their environments over time. World models are an explicit way to represent an agent’s knowledge about its environment. Compared to model-free reinforcement learning that learns through trial and error, world models facilitate generalization and can predict the outcomes of potential actions to enable planning (Sutton, 1991).

[Figure: Atari Performance, model-based and model-free agents compared against the Human Gamer level]

UNOCART-2600 Instruction Manual

Revision 1.2, 9/2/2018. Atari 2600 SD multi-cart.

Quick Start

The UnoCart-2600 is an Atari cartridge emulator. It supports cartridges with up to 64k of ROM and 32k of RAM. First, copy BIN, ROM or A26 files to an SD card and insert it into the cartridge. Check that the TV configuration jumper on the back of the board matches your TV system. Insert the cartridge in the Atari 2600. If the board is uncased, check you’ve got it the right way round (see picture). Power on the Atari and use the joystick to choose an item and the fire button to start it.

PAL/NTSC Configuration

The jumper on the back of the board allows you to select whether the cartridge uses NTSC or PAL/PAL60 for the built-in menu. You can remove the jumper completely if you are using an NTSC TV. Note that this jumper only applies to the cartridge menu. Once you select a ROM to emulate, the TV picture produced will be entirely dependent on the cartridge being emulated. In general there were separate PAL and NTSC versions of each cartridge, so make sure you get the correct version.

SD Cards

SD cards should be formatted as FAT32. Newer SD cards may come formatted as exFAT. These can be used with the UnoCart-2600, but need to be re-formatted as FAT32 on your computer prior to use.

Menu

The menu allows you to navigate the files on the card and select a title to use.

Arcade Games

Arcade games, including: TEKKEN 2, STAR WARS, ALIEN 3, SEGA MANX TT SUPER BIKE and many others

Start date: Monday 27 May 2019 10:00
Viewing: Friday 31 May 2019 from 14:00 until 16:00, BE-3600 Genk, Stalenstraat 32
End date: Monday 3 June 2019 from 19:00
Collection: Monday 10 June 2019 from 14:00 until 16:00, BE-3600 Genk, Stalenstraat 32
Online bidding only! For more information and terms: www.moyersoen.be

Lots (lot number, opening bid, description; dimensions are width x depth x height):

Lot 1, opening bid 500€: 1996 NAMCO PROP CYCLE DX (serial number: 34576) consisting of 2 parts, coin counter: 119430, 220-240V, 50HZ, 960 Watts, year of construction: 1996, manual present, this device is not connected, operation unknown, dimensions: 125x250x235cm
Lot 2, opening bid 125€: GAELCO SA ATV TRACK MOTION B-253 (serial number: B-58052077) consisting of 2 parts, present keys: 2, coin meter: 511399, 230V 1000W 50HZ, this device is not connected, operation unknown, dimensions: 90X220X180 cm
Lot 3, opening bid 125€: KONAMI GTI-CLUB RALLY COTE D'AZUR GN688 (serial number: GN688-83650122) consisting of 2 parts, coin meter: 36906, 230V, 300W, 50HZ, this device is not connected, operation unknown, dimensions: 115x240x200cm
Lot 4, opening bid 65€: NAMCO DIRT DASH (serial number: 324539) without display, coin meter 165044, this device is not connected, operation unknown, dimensions: 90x215x145cm
Lot 5, opening bid 65€: 1996 KONAMI JETWAVE tm, Virtual Jet Watercraft, screen (damaged), built in 1996, this device is not connected, operation unknown, dimensions: screen 115X61x133cm, console 130x200x140cm
Lot 6: TAB AUSTRIA SILVER-BALL (serial number:

Page 16) to Make Conversions from NTSC to PAL Much More Accurate

============================== OCTOBER 1996 ==============================

============================== THURSDAY, 17 OCTOBER ==============================
Jim Nitchals: What the DS instruction is.

SUBJECT: [stella] Random number generators
POSTER: jimn8@xxxxxxxxxx (Jim Nitchals)
DATE/TIME: Mon, 21 Oct 1996 08:05:28 -0700 (PDT)
POST:
Communist Mutants did it like this:

    RANDOM  LDA RND
            ASL
            ASL
            EOR SHIPX
            ADC RND
            ADC FRAMEL
            STA RND
            RTS

It uses an ongoing seed (RND), and combines it with the ship's horizontal position and the current video frame number to produce a new seed. It's simple but relies a bit on the randomness of the ship's X position to yield good randomness in itself.

Suicide Mission uses a linear feedback shift register (LFSR). Here's the code:

    RND     LDA RNDL
            LSR
            LSR
            SBC RNDL
            LSR
            ROR RNDH
            ROR RNDL
            LDA RNDL
            RTS

For simplicity of design, this approach is better but it requires 2 bytes of RAM. If the zero-page RAM is in short supply you could modify RND to use Supercharger memory instead.

============================== MONDAY, 21 OCTOBER ==============================
Jim Nitchals: How Communist Mutants Generated Random Numbers.

SUBJECT: [stella] Matt's Top 48
POSTER: jvmatthe@xxxxxxxxxxxx
DATE/TIME: Sat, 26 Oct 1996 12:07:01 -0400 (EDT)
POST:
The following is a post I made as soon as the list started up again at the new address. My friend Ruffin (Rufbo@xxxxxxx) said he didn't see it, so I thought maybe the rest of y'all didn't get it. I had hoped it would spark some discussion..
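For readers who do not have a 6502 assembler at hand, the Communist Mutants routine quoted in Jim Nitchals's post can be traced in Python. This is only a sketch under stated assumptions: the function and parameter names (`mutants_random`, `ship_x`, `frame`) are made up for illustration, the CPU is assumed to be in binary (non-decimal) mode, and the carry flag is modeled by hand, since the carry left by the second ASL feeds into the first ADC.

```python
def mutants_random(rnd: int, ship_x: int, frame: int) -> int:
    """Return the next seed, mirroring the quoted routine one instruction at a time.

    All arguments are treated as 8-bit values (0..255). The names are
    hypothetical; the original code uses RND, SHIPX and FRAMEL.
    """
    a = rnd & 0xFF                      # LDA RND
    carry = 0
    for _ in range(2):                  # ASL, ASL
        carry = (a >> 7) & 1            # bit 7 shifts out into the carry flag
        a = (a << 1) & 0xFF
    a ^= ship_x & 0xFF                  # EOR SHIPX (leaves the carry untouched)
    total = a + (rnd & 0xFF) + carry    # ADC RND (adds the carry from the last ASL)
    carry = total >> 8
    a = total & 0xFF
    total = a + (frame & 0xFF) + carry  # ADC FRAMEL (adds the carry from the previous ADC)
    a = total & 0xFF
    return a                            # STA RND / RTS: the caller stores this as the new seed


if __name__ == "__main__":
    # Illustrative run: a fixed ship position and a frame counter that ticks up.
    seed = 0x01
    for frame in range(8):
        seed = mutants_random(seed, ship_x=0x50, frame=frame)
        print(f"frame {frame}: seed = {seed:#04x}")
```

With a constant ship position the sequence is driven only by the seed and the frame counter, which is consistent with the post's remark that the routine leans on the ship's X position for part of its randomness.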

Digital Press Issue

Fifty II. Editor’s BLURB by Dave Giarrusso

We had a lot of big plans for our 50th issue (“DP# 50: fifty!” just in case your short term memory functions a bit like mine does as of late) and fortunately, most of ‘em made it in. UNfortunately, due to time and space constraints, and a mischievous orange tabby by the name of “Pickles”, a scant few of ‘em got left on the cutting room floor. The one portion of the 50th issue that we (okay, actually John) were working really hard on was the “alumni moments” section - a section devoted to all the game designers and artists who shaped what we’ve come to call our favorite pastime. As you can probably imagine, it was a big undertaking - too big to make it into the pages of issue #50.

BUT - guess what? John kept nagging everyone in his most convincing voice and eventually, with nothing but the most polite coercion, managed to round up the troops. In fact, everyone had such a blast working with John that we wound up with tons more material than we had originally anticipated having.

Which brings us back to THIS issue - the brainchild of John “Big Daddy” and “I’ll get to it when I get to it” Hardie. DP issue #50. Part II. In DP issue #52. Get it?

So join me in extending a hearty “thank you” to Big John and all of the folks who took time out of their busy schedules to sit down with us and pass along some of their favorite gaming anecdotes of the past.

DIGITAL PRESS # 52, MAY / JUNE 2003
Founders: Joe Santulli, Kevin Oleniacz
Editors-In-Chief: Joe Santulli, Dave Giarrusso
Senior Editors: Al Backiel, Jeff Cooper, John Hardie, Sean Kelly
Staff Writers: Larry Anderson

Object-Sensitive Deep Reinforcement Learning

Yuezhang Li, Katia Sycara, and Rahul Iyer, Carnegie Mellon University, Pittsburgh, PA, USA

Abstract

Deep reinforcement learning has become popular over recent years, showing superiority on different visual-input tasks such as playing Atari games and robot navigation. Although objects are important image elements, few works consider enhancing deep reinforcement learning with object characteristics. In this paper, we propose a novel method that can incorporate object recognition processing into deep reinforcement learning models. This approach can be adapted to any existing deep reinforcement learning framework. State-of-the-art results are shown in experiments on Atari games. We also propose a new approach called “object saliency maps” to visually explain the actions made by deep reinforcement learning agents.

1 Introduction

Deep neural networks have been widely applied in reinforcement learning (RL) algorithms to achieve human-level control in various challenging domains. More specifically, recent work has found outstanding performance of deep reinforcement learning (DRL) models on Atari 2600 games, using only raw pixels to make decisions [21]. The literature on reinforcement learning is vast. Multiple deep RL algorithms have been developed to incorporate both on-policy RL such as Sarsa [30], actor-critic methods [1], etc. and off-policy RL such as Q-learning using experience replay memory [21] [25]. A parallel RL paradigm [20] has also been proposed to reduce the heavy reliance of deep RL algorithms on specialized hardware or distributed architectures.
However, while a high proportion of RL applications such as Atari 2600 games contain objects with different gain or penalty (for example, enemy ships and fuel vessels are two different objects in the game “Riverraid”), most previous algorithms are designed under the assumption that various game objects are treated equally.

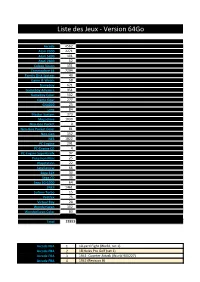

Game List - 64 GB Version

Games per system:
Arcade: 4562
Atari 2600: 2271
Atari 5200: 101
Atari 7800: 52
Coleco Vision: 151
Commodore 64: 7294
Family Disk System: 43
Game & Watch: 58
Gameboy: 621
Gameboy Advance: 951
Gameboy Color: 502
Game Gear: 277
GX4000: 25
Lynx: 84
Master System: 373
Megadrive: 1030
Neo-Geo Pocket: 9
Neo-Geo Pocket Color: 81
Neo-Geo: 152
NES: 1822
PC-Engine: 291
PC-Engine CD: 4
PC-Engine SuperGrafx: 97
Pokemon Mini: 25
Playstation: 22
Satellaview: 66
Sega 32X: 30
Sega CD: 35
Sega SG-1000: 64
SNES: 1461
Sufami Turbo: 15
Vectrex: 75
Virtual Boy: 24
WonderSwan: 102
WonderSwan Color: 83
Total: 22853

Arcade FBA 1: 10-yard Fight (World, set 1)
Arcade FBA 2: 18 Holes Pro Golf (set 1)
Arcade FBA 3: 1941: Counter Attack (World 900227)
Arcade FBA 4: 1942 (Revision B)
Arcade FBA 5: 1943 Kai: Midway Kaisen (Japan)
Arcade FBA 6: 1943: The Battle of Midway (Euro)
Arcade FBA 7: 1943: The Battle of Midway Mark II (US)
Arcade FBA 8: 1943mii
Arcade FBA 9: 1944 : The Loop Master (USA 000620)
Arcade FBA 10: 1944: The Loop Master (USA 000620)
Arcade FBA 11: 1945k III
Arcade FBA 12: 1945k III (newer, OPCX2 PCB)
Arcade FBA 13: 19XX : The War Against Destiny (USA 951207)
Arcade FBA 14: 19XX: The War Against Destiny (USA 951207)
Arcade FBA 15: 2020 Super Baseball
Arcade FBA 16: 2020 Super Baseball (set 1)
Arcade FBA 17: 3 Count Bout
Arcade FBA 18: 3 Count Bout / Fire Suplex (NGM-043)(NGH-043)
Arcade FBA 19: 3x3 Puzzle (Enterprise)
Arcade FBA 20: 4 En Raya (set 1)
Arcade FBA 21: 4 Fun In 1
Arcade FBA 22: 4-D Warriors (315-5162)
Arcade FBA 23: 4play
Arcade FBA 24: 64th.

Atari and Amiga

IEEE Consumer Electronics Society

Three generations of animation machines: Atari and Amiga
Joe Decuir, IEEE Fellow, UW Engineering faculty

Agenda
Why are you here?
How I got here: design three retro machines
– Atari Video Computer System (2600)
– Atari Personal Computer System (400/800)
– Amiga computer (1000, etc)
New hardware:
– Atari 2600 in FPGA: Flashback 2.0, etc
– Atari PCS: Eclaire 3.0, XEL 1088
– Amiga 500 MIST

Why are you here?
Many of you are fans of vintage computers:
– Find and restore old machines
– Run productivity applications
– Play games
Some people also write new applications
– E.g. PlatoTerm on several retro computers
More people write and play new games

How I got here
My work is here:

First Video Games
Ralph Baer was a pioneer, recognizing that it was possible to bring entertainment home. He imagined a machine which allowed electronic gaming on a “Brown Box” in a family home.
Ralph was unlucky – he worked in defense
His employer licensed his design to Magnavox – as the Odyssey, 1972
[Photo: Ralph Baer in his basement lab, 2014]

Atari Video Computer concepts
Atari was founded on arcade video games
First big hit: Pong (derived from Odyssey)
Second hits: more complex arcade games
– E.g. Tank, Breakout
Third hit: Pong for home use
Big question: what to do next?
Choices:
– Random logic games
– Microprocessor-based games

Pong arcade screen

TANK cabinet art

I got lucky: Atari Video Computer
I was hired to finish debugging the first concept prototype of the Atari Video Computer System.