CSE 141 – Computer Architecture Fall 2005 Lecture 5

Recommended publications

IBM Z Systems Introduction May 2017

IBM z Systems Introduction, May 2017. IBM z13s and IBM z13 Frequently Asked Questions. Worldwide. ZSQ03076-USEN-15. Table of Contents: z13s Hardware; z13 Hardware; Performance; z13 Warranty; Hardware Management Console (HMC); Power requirements (including High Voltage DC Power option); Overhead Cabling and Power; z13 Water cooling option; Secure Service Container

Performance of a Computer (Chapter 4) Vishwani D

ELEC 5200-001/6200-001 Computer Architecture and Design, Fall 2013. Performance of a Computer (Chapter 4). Vishwani D. Agrawal & Victor P. Nelson, Department of Electrical and Computer Engineering, Auburn University, Auburn, AL 36849.

What is Performance?
• Response time: the time between the start and completion of a task.
• Throughput: the total amount of work done in a given time.
• Some performance measures:
  – MIPS (million instructions per second).
  – MFLOPS (million floating point operations per second), also GFLOPS, TFLOPS ($10^{12}$), etc.
  – SPEC (Standard Performance Evaluation Corporation) benchmarks.
  – LINPACK benchmarks, floating point computing, used for supercomputers.
  – Synthetic benchmarks.

Small and Large Numbers

| Small | Prefix | Symbol | Large | Prefix | Symbol |
|---|---|---|---|---|---|
| $10^{-3}$ | milli | m | $10^{3}$ | kilo | k |
| $10^{-6}$ | micro | μ | $10^{6}$ | mega | M |
| $10^{-9}$ | nano | n | $10^{9}$ | giga | G |
| $10^{-12}$ | pico | p | $10^{12}$ | tera | T |
| $10^{-15}$ | femto | f | $10^{15}$ | peta | P |
| $10^{-18}$ | atto | | $10^{18}$ | exa | |
| $10^{-21}$ | zepto | | $10^{21}$ | zetta | |
| $10^{-24}$ | yocto | | $10^{24}$ | yotta | |

Computer Memory Size

| Number | Value | Prefix | bits | bytes |
|---|---|---|---|---|
| $2^{10}$ | 1,024 | K | Kb | KB |
| $2^{20}$ | 1,048,576 | M | Mb | MB |
| $2^{30}$ | 1,073,741,824 | G | Gb | GB |
| $2^{40}$ | 1,099,511,627,776 | T | Tb | TB |

Units for Measuring Performance
• Time in seconds (s), microseconds (μs), nanoseconds (ns), or picoseconds (ps).
• Clock cycle: the period of the hardware clock. Example: one clock cycle means 1 nanosecond for a 1 GHz clock frequency (or 1 GHz clock rate).
• CPU time = (CPU clock cycles)/(clock rate)
• Cycles per instruction (CPI): average number of clock cycles used to execute a computer instruction.
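The CPU-time relation quoted in the excerpt above can be checked with a few lines of Python. This is only a minimal sketch: the function names and the 2-billion-cycle workload are hypothetical and chosen for illustration; only the formula and the 1 GHz example come from the excerpt.

```python
def clock_period_seconds(clock_rate_hz: float) -> float:
    """Duration of one clock cycle: the reciprocal of the clock rate."""
    return 1.0 / clock_rate_hz


def cpu_time_seconds(clock_cycles: int, clock_rate_hz: float) -> float:
    """CPU time = (CPU clock cycles) / (clock rate), as in the excerpt."""
    return clock_cycles / clock_rate_hz


if __name__ == "__main__":
    one_ghz = 1e9  # 1 GHz clock rate, the excerpt's example
    # One clock cycle at 1 GHz lasts about 1 nanosecond.
    print(f"clock period: {clock_period_seconds(one_ghz) * 1e9:.3f} ns")
    # Hypothetical workload (an assumption): 2 billion cycles on that clock.
    print(f"CPU time: {cpu_time_seconds(2_000_000_000, one_ghz):.3f} s")
```

Keeping the clock rate in hertz and the time in seconds avoids unit slips when the prefixes from the tables above (mega, giga, nano) are mixed.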

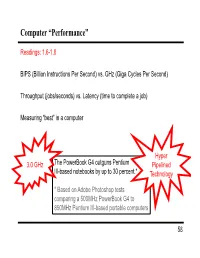

Computer “Performance”

Computer “Performance”. Readings: 1.6-1.8.
• BIPS (Billion Instructions Per Second) vs. GHz (Giga Cycles Per Second)
• Throughput (jobs/second) vs. Latency (time to complete a job)
• Measuring “best” in a computer: “Hyper Pipelined Technology”; “3.0 GHz”; “The PowerBook G4 outguns Pentium III-based notebooks by up to 30 percent.*” (* Based on Adobe Photoshop tests comparing a 500MHz PowerBook G4 to 850MHz Pentium III-based portable computers)

Performance Example: Homebuilders

| Builder | Time per House | Houses Per Month | House Options | Dollars Per House |
|---|---|---|---|---|
| Self-build | 24 months | 1/24 | Infinite | $200,000 |
| Contractor | 3 months | 1 | 100 | $400,000 |
| Prefab | 6 months | 1,000 | 1 | $250,000 |

Which is the “best” home builder? Homeowner on a budget? Rebuilding Haiti? Moving to wilds of Alaska?
Which is the “speediest” builder? Latency: how fast is one house built? Throughput: how long will it take to build a large number of houses?

Computer Performance
Primary goal: execution time (time from program start to program completion)

$$\text{Performance} = \frac{1}{\text{ExecutionTime}}$$

To compare machines, we say “X is n times faster than Y”:

$$n = \frac{\text{Performance}_x}{\text{Performance}_y} = \frac{\text{ExecutionTime}_y}{\text{ExecutionTime}_x}$$

Example: Machine Orange and Grape run a program. Orange takes 5 seconds, Grape takes 10 seconds. Orange is _____ times faster than Grape.

Execution Time
• Elapsed Time: counts everything (disk and memory accesses, I/O, etc.); a useful number, but often not good for comparison purposes
• CPU time: doesn't count I/O or time spent running other programs; can be broken up into system time and user time
• Example: Unix “time” command
  linux15.ee.washington.edu> time javac CircuitViewer.java
  3.370u 0.570s 0:12.44 31.6%
• Our focus: user CPU time, the time spent executing the lines of code that are "in" our program

CPU Time

$$\text{CPU execution time for a program} = \text{CPU clock cycles for a program} \times \text{Clock period}$$

$$\text{CPU execution time for a program} = \text{CPU clock cycles for a program} \times \frac{1}{\text{Clock rate}}$$

Application example: A program takes 10 seconds on computer Orange, with a 400MHz clock.
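The "n times faster" comparison in the excerpt above reduces to a ratio of execution times. The sketch below works through the Orange/Grape numbers; the function names are hypothetical and not taken from the slides.

```python
def performance(execution_time_s: float) -> float:
    """Performance is defined as the reciprocal of execution time."""
    return 1.0 / execution_time_s


def times_faster(time_x_s: float, time_y_s: float) -> float:
    """How many times faster X is than Y: ExecutionTime_y / ExecutionTime_x."""
    return time_y_s / time_x_s


if __name__ == "__main__":
    orange_s, grape_s = 5.0, 10.0  # execution times from the excerpt
    n = times_faster(orange_s, grape_s)
    # The same n falls out of the performance ratio Performance_x / Performance_y.
    n_from_performance = performance(orange_s) / performance(grape_s)
    print(f"Orange is {n:.1f} times faster than Grape")
    print(f"Check via performance ratio: {n_from_performance:.1f}")
```

Note that the faster machine's time goes in the denominator, which is the step most often inverted by mistake.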

Trends in Processor Architecture

Trends in Processor Architecture. Antonio González, Universitat Politècnica de Catalunya, Barcelona, Spain. 1. Past Trends. Processors have undergone a tremendous evolution throughout their history. A key milestone in this evolution was the introduction of the microprocessor, a term that refers to a processor implemented in a single chip. The first microprocessor was introduced by Intel under the name of Intel 4004 in 1971. It contained about 2,300 transistors, was clocked at 740 kHz and delivered 92,000 instructions per second while dissipating around 0.5 watts. Since then, practically every year we have witnessed the launch of a new microprocessor, delivering significant performance improvements over previous ones. Some studies have estimated this growth to be exponential, in the order of about 50% per year, which results in a cumulative growth of over three orders of magnitude in a time span of two decades [12]. These improvements have been fueled by advances in the manufacturing process and innovations in processor architecture. According to several studies [4][6], both aspects contributed in a similar amount to the global gains. The manufacturing process technology has tried to follow the scaling recipe laid down by Robert N. Dennard in the early 1970s [7]. The basics of this technology scaling consist of reducing transistor dimensions by 30% every generation (typically 2 years) while keeping electric fields constant. The 30% scaling in the dimensions results in doubling the transistor density (doubling transistor density every two years was predicted in 1975 by Gordon Moore and is normally referred to as Moore’s Law [21][22]).
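Two figures in the excerpt above follow from simple compounding and can be checked directly. The sketch below is illustrative only: the function names are hypothetical, and it assumes that transistor density scales with the inverse of area, so a 30% reduction in each linear dimension leaves 0.7 × 0.7 = 0.49 of the original area.

```python
def cumulative_growth(annual_rate: float, years: int) -> float:
    """Total growth factor after compounding `annual_rate` for `years` years."""
    return (1.0 + annual_rate) ** years


def density_gain(linear_shrink: float) -> float:
    """Density gain when each linear dimension shrinks by `linear_shrink`.

    Assumes density is inversely proportional to area (an illustrative
    simplification, not a statement about any specific process).
    """
    remaining = 1.0 - linear_shrink
    return 1.0 / (remaining * remaining)


if __name__ == "__main__":
    # About 50% per year over two decades: over three orders of magnitude.
    print(f"growth over 20 years: {cumulative_growth(0.5, 20):.0f}x")
    # 30% shrink per generation: roughly double the transistor density.
    print(f"density gain per generation: {density_gain(0.3):.2f}x")
```

Under these assumptions the 20-year factor lands a little above 3,000 and the per-generation density gain is just over 2, consistent with the wording of the excerpt.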

Advanced Computer Architecture Lecture No. 30

Advanced Computer Architecture-CS501 (last modified 01-Nov-06). Advanced Computer Architecture, Lecture No. 30.

Reading Material: Vincent P. Heuring & Harry F. Jordan, Computer Systems Design and Architecture, Chapter 8, Sections 8.3.3, 8.4.

Summary
• Nested Interrupts
• Interrupt Mask
• DMA

Nested Interrupts (Read from Book, Jordan Page 397)
Interrupt Mask (Read from Book, Jordan Page 397)
Priority Mask (Read from Book, Jordan Page 398)

Examples

Example #1 (adopted from [H&P org]): Assume that three I/O devices are connected to a 32-bit, 10 MIPS CPU. The first device is a hard drive with a maximum transfer rate of 1MB/sec. It has a 32-bit bus. The second device is a floppy drive with a transfer rate of 25KB/sec over a 16-bit bus, and the third device is a keyboard that must be polled thirty times per second. Assuming that the polling operation requires 20 instructions for each I/O device, determine the percentage of CPU time required to poll each device.

Solution: The hard drive can transfer 1MB/sec, or 250 K 32-bit words every second, so it should be polled at least at this rate. Using $1\text{K} = 2^{10}$, the number of CPU instructions required would be $250 \times 2^{10} \times 20 = 5{,}120{,}000$ instructions per second. The percentage of CPU time required for polling is $(5.12 \times 10^{6})/(10 \times 10^{6}) = 51.2\%$.

The floppy disk can transfer $25\text{K}/2 = 12.5 \times 2^{10}$ half-words per second. It should be polled with at least this rate.
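The polling arithmetic in the example above can be reproduced in a few lines. This is a sketch under the example's own conventions (1K = 2^10, 20 instructions per poll, a 10 MIPS CPU); the function name is hypothetical. The excerpt is cut off after the floppy polling rate, so the floppy and keyboard percentages computed here simply apply the same method and are not quoted from the source.

```python
K = 2 ** 10  # the example uses 1K = 2^10


def polling_cpu_percent(polls_per_second: float,
                        instructions_per_poll: int = 20,
                        cpu_instructions_per_second: float = 10e6) -> float:
    """Percentage of CPU instruction throughput spent polling one device."""
    polling_instructions = polls_per_second * instructions_per_poll
    return 100.0 * polling_instructions / cpu_instructions_per_second


if __name__ == "__main__":
    devices = {
        "hard drive": 250 * K,   # 250 K 32-bit words per second
        "floppy": 12.5 * K,      # 25K/2 half-words per second
        "keyboard": 30,          # polled thirty times per second
    }
    for name, rate in devices.items():
        print(f"{name}: {polling_cpu_percent(rate):.4f}% of CPU time")
```

The hard drive alone consumes roughly half of the CPU, which is the usual motivation for the interrupt and DMA topics listed in the lecture summary.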

Xilinx Running the Dhrystone 2.1 Benchmark on a Virtex-II Pro

Application Note: Virtex-II Pro Device. Running the Dhrystone 2.1 Benchmark on a Virtex-II Pro PowerPC Processor. XAPP507 (v1.0), July 11, 2005. Author: Paul Glover. Product Not Recommended for New Designs.

Summary: This application note describes a working Virtex™-II Pro PowerPC™ system that uses the Dhrystone benchmark and the reference design on which the system runs. The Dhrystone benchmark is commonly used to measure CPU performance.

Introduction: The Dhrystone benchmark is a general-performance benchmark used to evaluate processor execution time. This benchmark tests the integer performance of a CPU and the optimization capabilities of the compiler used to generate the code. The output from the benchmark is the number of Dhrystones per second (that is, the number of iterations of the main code loop per second). This application note describes a PowerPC design created with Embedded Development Kit (EDK) 7.1 that runs the Dhrystone benchmark, producing 600+ DMIPS (Dhrystone Millions of Instructions Per Second) at 400 MHz.

Prerequisites

Required Software
• Xilinx ISE 7.1i SP1
• Xilinx EDK 7.1i SP1
• WindRiver Diab DCC 5.2.1.0 or later (Note: The Diab compiler for the PowerPC processor must be installed and included in the path.)
• HyperTerminal

Required Hardware
• Xilinx ML310 Demonstration Platform
• Serial Cable
• Xilinx Parallel-4 Configuration Cable

Dhrystone Description: Developed in 1984 by Reinhold P. Weicker, the Dhrystone benchmark (written in C) was originally developed to benchmark computer systems, a short benchmark that was representative of integer programming. The program is CPU-bound, performing no I/O functions or operating system calls.

Introduction to CPU

Introduction to CPU. Mohammad Sadegh Sadri. Session 2, Microprocessor Course, Isfahan University of Technology, Sep., Oct., 2010 (microprocessors and microcontrollers - sadri).

Agenda
• Review of the first session
• A tour of silicon world!
• Basic definition of CPU
• Von Neumann Architecture
• Example: Basic ARM7 Architecture
• A brief detailed explanation of ARM7 Architecture
• Harvard Architecture
• Example: TMS320C25 DSP

Agenda (2)
• History of CPUs: 4004, TMS1000, 8080, Z80, Am2901, 8051, PIC16

Von Neumann Architecture
• Same memory for program and data
• Single bus
• Sample: ARM7T CPU

Harvard Architecture
• Separate memories for program and data
• Sample: TMS320C25 DSP

Silicon Market

| Rank 2009 | Rank 2008 | Company | Country of origin | Revenue (million $ USD) | 2009/2008 changes | Market share |
|---|---|---|---|---|---|---|
| 1 | 1 | Intel Corporation | USA | 32 410 | -4.0% | 14.1% |
| 2 | 2 | Samsung Electronics | South Korea | 17 496 | +3.5% | 7.6% |
| 3 | 3 | Toshiba Semiconductors | Japan | 10 319 | -6.9% | 4.5% |
| 4 | 4 | Texas Instruments | USA | 9 617 | -12.6% | 4.2% |
| 5 | 5 | STMicroelectronics | France/Italy | 8 510 | -17.6% | 3.7% |
| 6 | 8 | Qualcomm | USA | 6 409 | -1.1% | 2.8% |
| 7 | 9 | Hynix | South Korea | 6 246 | +3.7% | 2.7% |
| 8 | 12 | AMD | USA | 5 207 | -4.6% | 2.3% |
| 9 | 6 | Renesas Technology | Japan | 5 153 | -26.6% | 2.2% |
| 10 | 7 | Sony | Japan | 4 468 | -35.7% | 1.9% |

Computer Architectures an Overview

Computer Architectures: An Overview. PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sat, 25 Feb 2012 22:35:32 UTC.

Contents. Articles: Microarchitecture; x86; PowerPC; IBM POWER; MIPS architecture; SPARC; ARM architecture; DEC Alpha; AlphaStation; AlphaServer; Very long instruction word; Instruction-level parallelism; Explicitly parallel instruction computing. References: Article Sources and Contributors; Image Sources, Licenses and Contributors. Article Licenses: License.

Microarchitecture

In computer engineering, microarchitecture (sometimes abbreviated to µarch or uarch), also called computer organization, is the way a given instruction set architecture (ISA) is implemented on a processor. A given ISA may be implemented with different microarchitectures.[1] Implementations might vary due to different goals of a given design or due to shifts in technology.[2] Computer architecture is the combination of microarchitecture and instruction set design.

Relation to instruction set architecture

[Figure: The Intel Core microarchitecture]

The ISA is roughly the same as the programming model of a processor as seen by an assembly language programmer or compiler writer. The ISA includes the execution model, processor registers, address and data formats among other things. The microarchitecture includes the constituent parts of the processor and how these interconnect and interoperate to implement the ISA. The microarchitecture of a machine is usually represented as (more or less detailed) diagrams that describe the interconnections of the various microarchitectural elements of the machine, which may be everything from single gates and registers, to complete arithmetic logic units (ALUs) and even larger elements.

Levels in Computer Design

Performance of computer systems (CSE378 Performance)
• Many different factors, among which:
  – Technology
    • Raw speed of the circuits (clock, switching time)
    • Process technology (how many transistors on a chip)
  – Organization
    • What type of processor (e.g., RISC vs. CISC)
    • What type of memory hierarchy
    • What types of I/O devices
  – How many processors in the system
  – Software
    • O.S., compilers, database drivers etc.

Moore’s Law
[Figure: Moore’s Law. Courtesy Intel Corp.]

Processor-Memory Performance Gap
[Figure: log-scale plot (1 to 1000) over 1989-2001. x: memory latency decrease (10x over 8 years, but densities have increased 100x over the same period). o: x86 CPU speed (100x over 10 years), with points labeled 386, Pentium, Pentium Pro, Pentium III, Pentium IV. Annotations: “Memory gap”, “Memory wall”.]

What are some possible metrics
• Raw speed (peak performance = clock rate)
• Execution time (or response time): time to execute one (suite of) program from beginning to end.
  – Need benchmarks for integer dominated programs, scientific, graphical interfaces, multimedia tasks, desktop apps, utilities etc.
• Throughput (total amount of work in a given time)
  – measures utilization of resources (good metric when many users: e.g., large data base queries, Web servers)
  – Improving (decreasing) execution time will improve (increase) throughput.
  – Most of the time, improving throughput will decrease execution time

Execution time Metric
• Execution time: inverse of performance
  Performance A = 1 / (Execution_time A)
• Processor A is faster than Processor B:
  Execution_time A < Execution_time B
  Performance A > Performance B
• Relative performance (a computer is “n times faster” than another one):
  Performance A / Performance B = Execution_time B / Execution_time A

ARM Cortex-M3/M4 Instruction Set & Architecture

Universität Dortmund. ARM Cortex-M3/M4 Instruction Set & Architecture.

Cortex M4 block diagram
Cortex M4: embedded processor for DSP with FPU

Cortex M4 vs. M3 (b)
The Cortex-M4 ISA is enhanced with efficient DSP features, including extended single-cycle 16/32-bit multiply-accumulate (MAC), dual 16-bit MAC instructions, optimized 8/16-bit SIMD arithmetic and saturating arithmetic instructions.

ARM Cortex-M Series Family

| Processor | ARM Architecture | Core Architecture | Thumb® | Thumb®-2 | Hardware Multiply | Hardware Divide | Saturated Math | DSP Extensions | Floating Point |
|---|---|---|---|---|---|---|---|---|---|
| Cortex-M0 | ARMv6-M | Von Neumann | Most | Subset | 1 or 32 cycle | No | No | No | No |
| Cortex-M0+ | ARMv6-M | Von Neumann | Most | Subset | 1 or 32 cycle | No | No | No | No |
| Cortex-M1 | ARMv6-M | Von Neumann | Most | Subset | 3 or 33 cycle | No | No | No | No |
| Cortex-M3 | ARMv7-M | Harvard | Entire | Entire | 1 cycle | Yes | Yes | No | No |
| Cortex-M4 | ARMv7E-M | Harvard | Entire | Entire | 1 cycle | Yes | Yes | Yes | Optional |

ARMv7 M (Thumb-2) features
• Mix of 16 and 32b instructions
• 1.2 CPI
• 26% higher code density than ARM32
• 25% speed improvement over Thumb16

Thumb-2
• Mixes 16 and 32 bits instructions
  – Enhancements with respect to traditional ARMv4T: e.g. UDIV, SDIV division, bit-field operators UBFX, BFC, BFE
  – No need to mode switch, can be mixed freely
• Not backwards binary compatible
  – But porting is «easy»

Cortex-M4 Processor Overview
• Cortex-M4 Processor
  – Introduced in 2010
  – Designed with a large variety of highly efficient signal processing features
  – Features extended single-cycle multiply accumulate instructions, optimized SIMD arithmetic, saturating arithmetic and an optional Floating Point Unit.

Performance Computer Architecture • Benchmarking and Averaging

CIS 501 Computer Architecture, Unit 2: Performance. Slides originally developed by Amir Roth with contributions by Milo Martin at University of Pennsylvania with sources that included University of Wisconsin slides by Mark Hill, Guri Sohi, Jim Smith, and David Wood. (CIS 501 (Martin/Roth): Performance)

This Unit
• Metrics
  • Latency and throughput
  • Reporting performance
  • Benchmarking and averaging
• CPU performance equation

Readings
• Chapter 1.2-1.4 of MA:FSPTCM

Performance: Latency vs. Throughput
• Latency (execution time): time to finish a fixed task
• Throughput (bandwidth): number of tasks in fixed time
• Different: exploit parallelism for throughput, not latency (e.g., bread)
• Often contradictory (latency vs. throughput)
  • Will see many examples of this
• Choose definition of performance that matches your goals
  • Scientific program: latency; web server: throughput?
• Example: move people 10 miles
  • Car: capacity = 5, speed = 60 miles/hour
  • Bus: capacity = 60, speed = 20 miles/hour
  • Latency: car = 10 min, bus = 30 min
  • Throughput: car = 15 PPH (count return trip), bus = 60 PPH

Processor Performance and Workloads
• Q: what does latency(ChipA) or throughput(ChipA) mean?
• A: nothing, there must be some associated workload
• Workload: set of tasks someone (you) cares about
• Benchmarks:

Comparing Performance
• A is X times faster than B if
  • Latency(A) = Latency(B) / X
  • Throughput(A) = Throughput(B) * X
• A is X% faster than B if
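The car-versus-bus numbers in the excerpt above can be reproduced with a short calculation. This is a sketch, not part of the slides: the function names are hypothetical, and throughput is assumed to count the empty return trip, as the "count return trip" remark suggests.

```python
def latency_minutes(distance_miles: float, speed_mph: float) -> float:
    """One-way travel time in minutes for a single trip."""
    return distance_miles / speed_mph * 60.0


def throughput_pph(capacity: int, distance_miles: float, speed_mph: float) -> float:
    """People delivered per hour, counting the return trip for each load."""
    round_trip_hours = 2.0 * distance_miles / speed_mph
    return capacity / round_trip_hours


if __name__ == "__main__":
    distance = 10.0  # miles, from the excerpt
    vehicles = {"car": (5, 60.0), "bus": (60, 20.0)}  # (capacity, speed in mph)
    for name, (capacity, speed) in vehicles.items():
        print(f"{name}: latency = {latency_minutes(distance, speed):.0f} min, "
              f"throughput = {throughput_pph(capacity, distance, speed):.0f} PPH")
```

The car wins on latency and the bus wins on throughput, which is the point of the slide: the better choice depends on which metric matches the goal.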

ISA Background Processor Performance Growth

ISA Background

Processor performance growth
[Figure: processor performance growth] The fact that there are no updates since 2015 is in itself diagnostic.

Improvements: Moore’s Law
• Second most audacious extrapolation I know, behind Hubble’s Law. [Figure: Hubble data]
• Number of transistors per cost-effective integrated circuit doubles every N months (12 ≤ N ≤ 24).
• Increasing performance is driven by use of extra gates to, for example, add cores.
• Clock speed flattens around 2000: power limits.
• Moore’s law still applicable.

Improvements: Moore’s Law (old numbers, from about the time clock speed flattened)
• Clock rate. VAX 11/780: 5 MHz. Current rate: 3.5 GHz. Ratio about 700.
• Moore’s Law about 100,000.
• Performance about 10,000. Not all about clock rate – architecture drives the rest. Multi-core complicates this analysis.
• VAX 11/780 about £150,000. Intel server about £1000. Price ratio about 150.
• So performance per price about 1,000,000. Some effect of numbers sold.
• Last thirty years clock rate has driven improvements (500:10).
• What is the effect of Moore’s Law? Double transistor count. Small units, shorter connection paths, faster switching. Higher clock speed. More transistors – more complex architecture, more memory.
• Clock speed is not computer speed.

Word
Computer memory consists of a set of bits, units which can have a value of 0 or 1; these bits are organised into words. The word length is a decision for the system architect. Must the word length be a power of two? PDP 8 had 12 bit words.
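The rough ratios quoted in the excerpt above can be recomputed as a sanity check. This is only a sketch: the variable and function names are hypothetical, and the inputs are the approximate figures from the slides (5 MHz vs. 3.5 GHz, about £150,000 vs. about £1000, performance ratio about 10,000).

```python
def ratio(new_value: float, old_value: float) -> float:
    """Simple improvement ratio between a new and an old figure."""
    return new_value / old_value


if __name__ == "__main__":
    clock_ratio = ratio(3.5e9, 5e6)        # current clock rate vs. VAX 11/780
    price_ratio = ratio(150_000, 1_000)    # VAX price vs. Intel server price
    performance_ratio = 10_000             # approximate figure from the slides
    # Performance per unit price improves by the product of both ratios.
    performance_per_price = performance_ratio * price_ratio
    print(f"clock ratio: about {clock_ratio:.0f}")
    print(f"price ratio: about {price_ratio:.0f}")
    print(f"performance per price: about {performance_per_price:,.0f}")
```

The product comes out at about 1.5 million, which matches the slide's "about 1,000,000" to the order of magnitude; the clock ratio of about 700 accounts for only part of the 10,000-fold performance gain.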