Zero-Shot Learning Problem Definition Semantic Labels Visual Feature Space

Total Pages: 16

File Type: pdf, Size: 1020 Kb

Recommended publications

-

Australian Adaptive Mountain Biking Guidelines

AUSTRALIAN ADAPTIVE MOUNTAIN BIKING GUIDELINES A detailed guide to help land managers, trail builders, event directors, mountain bike clubs, charities and associations develop inclusive mountain bike trails, events and programs for people with disabilities in Australia. Australian Adaptive Mountain Biking Guidelines AUSTRALIAN ADAPTIVE MOUNTAIN BIKING GUIDELINES Version 1.0.0 Proudly supported and published by: Mountain Bike Australia Queensland Government Acknowledgements: The authors of this document acknowledge the contribution of volunteers in the preparation and development of the document’s content. The authors would also like to extend their gratitude to the following contributors: Denise Cox (Mountain Bike Australia), Talya Wainstein, Clinton Beddall, Richard King, Cameron McGavin and Ivan Svenson (Kalamunda Mountain Bike Collective). Photography by Kerry Halford, Travis Deane, Emily Dimozantos, Matt Devlin and Leanne Rees. Editing and Graphics by Ripe Designs Graphics by Richard Morrell COPYRIGHT 2018: © BREAK THE BOUNDARY INC. This document is copyright protected apart from any use as permitted under the Australian Copyright Act 1968, no part may be reproduced by any process without prior written permission from the Author. Requests and inquiries concerning reproduction should be addressed to the Author at www.breaktheboundary.com Fair-use policy By using this document, the user agrees to this fair-use policy. This document is a paid publication and as such only for use by the said paying person, members and associates of mountain bike and adaptive sporting communities, clubs, groups or associations. Distribution or duplication is strictly prohibited without the written consent of the Author. 
The license includes online access to the latest revision of this document and resources at no additional cost and can be obtained from: www.breaktheboundary.com Hard copies can be obtained from: www.mtba.asn.au 3 Australian Adaptive Mountain Biking Guidelines Australian Adaptive Mountain Biking Guidelines CONTENTS 1. -

32 Minis Newsletter Bicycles

Academy Art Museum academyartmuseum.org MINIS AT HOME volume 1, issue 32 Greetings kids, parents, grandparents, guardians, friends and neighbors! Welcome to issue 32 of Minis at Home newsletter. This is our last newsletter of the school year. We hope you have enjoyed reading the newsletters as much as we have enjoyed preparing them for you. Remember that exploring the natural world, working on projects together with your family, and making time to read are all important activities to keep mind and body healthy. This week’s theme = Bicycles It's that time of year when we are heading outside more and more as the weather becomes warmer and our days become longer. Some of us might enjoy a walk, some of us might ride horses, and some of us may play soccer, but many of us like to ride bikes and scooters best. Bicycle comes from “bi," which means two, and “cycle," which means circle. Wheels, of course, are shaped like circles. Bicycles have 2 wheels, 2 peddles, a frame and handlebars. There is a chain that is connected to cogs. When you push the pedals with your legs, this turns the chain, which then turns the back wheel. You steer the front wheel with the handlebars. The short name for “bicycle” is “bike.” It takes a little practice to balance on a bike. Children who are just learning to balance on a bike may use training wheels that keep the bike from falling over. Very young children can ride a tricycle (or “trike”), which has three wheels and can’t tip at all. -

Keeping St. Pete Special Thrown by Tom St. Petersburg Police Mounted Patrol Unit

JUL/AUG 2016 St. Petersburg, FL Est. September 2004 St. Petersburg Police Mounted Patrol Unit Jeannie Carlson In July, 2009 when the Boston Police t. Petersburg cannot be called a ‘one-horse Department was in the process of disbanding town.’ In fact, in addition to the quaint their historic mounted units, they donated two Shorse-drawn carriages along Beach Drive, of their trained, Percheron/Thoroughbred-cross the city has a Mounted Police Unit that patrols horses to the St. Petersburg Police Department. the downtown waterfront parks and The last time the St. Petersburg Police entertainment district. The Mounted Unit, Department employed a mounted unit was back which consists of two officers and two horses, in 1927. The donation of these horses facilitated is part of the Traffic Division of the St. Petersburg a resurgence of the unit in the bustling little Police Department as an enhancement to the metropolis that is now the City of St. Petersburg police presence downtown, particularly during in the 21st century. weekends and special events. Continued on page 28 Tom Davis and some of his finished pottery Thrown by Tom Linda Dobbs ranada Terrace resident Tom Davis is a true craftsman – even an artist. Craftsmen work with their hands and Gheads, but artists also work with their hearts according to St. Francis of Assisi. Well, Tom certainly has heart – he spends 4-6 hours per day at the Morean Center for Clay totally immersed in pottery. He even sometimes helps with the firing of ceramic creations. Imagine that in Florida’s summer heat. Tom first embraced pottery in South Korea in 1971-72 where he was stationed as a captain in the US Air Force. -

Touring Bike Buyers Guide What's in a Wheel?

TOURING BIKE BUYERS GUIDE 11 WHAT’S IN A 20 WHEEL? 1X DRIVETRAIN ROUNDUP 28 TIPS FOR CREATING YOUR 32 OWN ROUTE ILLUSTRATION BY LEVI BOUGHN 2020 MARCH ADVENTURE CYCLIST 10 TOURING BIKE BUYERS GUIDE you’re looking for a new touring bike buying advice in a more theoretical way. We in 2020, you’re in luck — a proliferation believe that the more cyclists can name their IF of highly capable rides offers options needs and understand the numbers that work that would have been pure fiction even a for them, the more empowered they are to get few years ago. But with that flood of options the right bike whether that’s with a helping comes a head-spinning (and sometimes head- hand from the pros at their local bike shop, a scratching) granularity in bikes called things direct-to-consumer order over the internet, like X-Road and All-Road and Endurance Road or even a parking lot Craigslist transaction. and Adventure and Gravel. Knowledge is (buying) power. While the naming might be silly, what’s But with the sheer volume of suitable new certain is the bike industry has come around bikes available, for 2020 we’re playing it very, to what touring cyclists have known for years: very straight. If you’re shopping for a new namely, that tire clearance, a little luggage bike this year, we’ve compiled what we think capability, and comfortable geometry make for are some of the very best across a number bikes that do anything and go anywhere. The of categories to suit the dyed-in-the-wool 23mm tire is nearly dead, and we’re happy to traditionalist, the new-school bikepacker, and pedal a nice 47mm with room left for fenders even the battery assisted. -

January 1978

VOLUME $ NO. 1 ~ERL! JAN. 197.8 ( 20509 Negaunee O.f!icia.l Organ UNICYCLING SOCIETY OF A.MERICA Inc. @1978 ill Rts Res. Red.ford, MI 48240 Yearly Member:,hip $5 Includes Newsletter (4) ID Ca.rd - See Blank Pg.16 OFFICERS Your Secretary-Treasurer a nd Editor wish to say fflANK YOU!!ff to Pres. Brett Shockley BILL JENA~K for alt of the help he has given them as they took on V.Pres. R. Tschudin the responslbflltles of their new jobs. And it ts very comforting S.Treas. Joyce Jones to know that hts support and assistance will be avatlabte throughout their terms of office. lOUNDER MEMBERS Bernard Crandall DATES TO REMEMBER Paul & Nancy Fox Peter Hangach APRIL 29, 1978 - Indoor Meet Patricia Herron sponsored by Bill Jenack SMILING FACES of Findlay, O. Gordon Kruse for more inforutton writes Steve McP~ak Jan Layne, Director Fr. Jas. J. Moran 514 Defiance Avenue Dr. Miles s. Rogers Findlay, Ohio 45840 Charlotte Fox Rogere or call ·dy Rubel t-4t9-433-8Q59 _,r. Claude Shannon Jim Snith Dr. Jack Wiley JULY 22 and 2l NOOLETTm EDITCR NAT IONAL UNICYCLE MEET Carol Brichford to be held Ins 244(:o cy.ndon MINNEAPOLIS, MINNE$ OT A Redford, Mich.48239 CONTRIBUTING EDITm Bill Jenack PROPJLES - BRETT SHOCKLEY 2-3 i----,E't' YOUR-------------~------~ OFP!CERS 3-4 t------OUND THE WOOLD---- ON A UNI- •- WALLY--~--~lt,._..._ WATTS 4 ___ ., ,_____ _____________ .,. __,_ __ i-----OUSTING ---------------~ON UNICYCLES 7 - 1977 NATIONAL MEET PICTURES 8-9 SMILING FACES 4-H UNICYCLE CLUB 10 ---------=---..a10,11,14 ------------------·--- jORDER BLANKS , Mbshp,Bks,Back Issues 15-16 13~.:"T''f S•JC~ ,(I.C::Y - Wnrld 1s highest unicyclF.: c,3,o1.,, U11ly 1r;, 1·n7) Page 2 i.tHC"f'!LTW1 S~IE'l'Y OF A.:~ERICA , INC . -

Bicycle and Personal Transportation Devices

a Virginia Polytechnic Institute and State University Bicycle and Personal Transportation Devices 1.0 Purpose NO. 5005 Virginia Tech promotes the safe use of bicycles and personal transportation devices as forms of alternative transportation, which enhances the university’s goals for a more Policy Effective Date: sustainable campus. This policy establishes responsibilities and procedures to ensure 6/10/2009 pedestrian safety, proper vehicular operation, and enforcement of bicycles, unicycles, skateboards, E-scooters, in-line skates, roller skates, mopeds, motor scooters and electronic Last Revision Date: personal assistance mobility devices (EPAMDs) on campus, as well as parking regulations. 7/1/2019 This policy applies to Virginia Tech faculty, staff, students, and visitors to the Blacksburg campus. Policy Owner: Sherwood Wilson 2.0 Policy Policy Author: (Contact Person) All persons operating a bicycle, unicycle, skateboard, E-scooter, in-line skates, roller Kayla Smith skates, moped, motor scooter or EPAMD on university property are to comply with all applicable Virginia state statutes and university policies, and all traffic control devices. Affected Parties: On the roadway bicyclists, motor scooter, E-scooter, and moped operators must obey all Undergraduate the laws of vehicular traffic. Pedestrians have the right of way and bicyclists, Graduate skateboarders, E-scooters, in-line skaters, roller skaters, and EPAMD users on pathways Faculty and sidewalks must be careful of and courteous to pedestrians. Three or four wheel All- Staff Terrain Vehicles (ATVs) are prohibited from use on the main campus grounds or Other roadways. See University Policy 5501 Electric/Gas Utility-type Vehicles (EGUVs) (http://www.policies.vt.edu/5501.pdf ) for guidelines on use of golf carts and other 1.0 Purpose EGUVs. -

Road Test: Burley Canto

Issue No. 71 Recumbent Sept./ Oct. 2002 CyclistNews The New ZOX Tandem What’s Inside 2 Editorial License 19 Road Test: Burley Canto 4 Recumbent News 22 Rec-Tech: TerraCycle Fold- Forward Stem 6 Letters 24 On Getting ’Bent 9 Road Test: Penninger Trike 28 Rec-Equipment: Burley Nomad 15 Road Test: Burley Hepcat Trailer Editorial License if it was OK. I then told Jeff about my current test recumbent. Now, Jeff isn’t exactly the world’s biggest recumbent fan. He has a 50-year-old wooden sailboat that he owns (or perhaps owns him) and sails, but he does ride his mountain bike around town for transportation. He likes to tease me about my fleet of weird bikes. But when I we walked into the door of PT Cycles and he saw the Greenspeed GTT tandem tricycle, his eyes lit up. He decided to ride the tandem in the parade with his daughter Emma, who is six. We decided that Jeff needed a GTT piloting course. We rolled the GTT out the door. I told Jeff to hang on, and we were ripping down Water Street. Jeff reminded me that he needed to unlock his Stumpjumper so Amy could ride it home, so I pulled a quick U-turn in the middle of Water Street and headed back into town. Jeff and Emma Kelety ride the Greenspeed GTT in the Port Townsend Once that duty was taken care of, we took Rhody Parade (photo by Deborah Carroll) a left on Water as we started Jeff on a crash course of Tandem Trike Piloting 101. -

! Ch Ro=~ Check of $2,000

The Recumbent Cyclist 9 S.F. TO L.A. LIGHTNING-FAST On April 28, 1991 Pete Penseyres took the Lightning X-2 slightly furrowed brow. out for a Sunday spin, shattering the San Francisco to Los Angeles HPV record by more than three hours in the Pete was actually shooting for a 17 hour 45 minute time. He process and making a strong would have easily done opening bid for the $5,000 this had he not fallen at a JohnPaulMitchellSystems' stoplight before he could top prize. Pete started his get a foot down to the quad-centuryjaun tat about ground. Occuring early 3:30 a.m. at San Francisco in the ride, the fall dam City Hall and pulled into aged the Kevlar fairing's Los Angeles City Hall at "landing gear" flaps used 9:34 p.m., 18 hours and 4 to put his feet down. This minutes later. The Light forced him to ride most of ning X-2 was equipped for the distance with the the run with a powerful doors flapping wide open Night Sun headlight, and in a decidedly non-aero the heavy battery had to dynamic configuration, at light a tail light and tum least for the one-time 64 signals as well. Riding most m.p.h. X-2. When he fell, of the way on Interstate 5, Pete was penalized 30 Pete pedalled the over minutes for receiving as weight bicycle up Pacheco sistance from a concerned Pass and Tejon Pass to an onlookerfrumored to be a altitude of 4,144 feet, seemingly effortlessly except for a certain "T. -

Richard's 21St Century Bicycl E 'The Best Guide to Bikes and Cycling Ever Book Published' Bike Events

Richard's 21st Century Bicycl e 'The best guide to bikes and cycling ever Book published' Bike Events RICHARD BALLANTINE This book is dedicated to Samuel Joseph Melville, hero. First published 1975 by Pan Books This revised and updated edition first published 2000 by Pan Books an imprint of Macmillan Publishers Ltd 25 Eccleston Place, London SW1W 9NF Basingstoke and Oxford Associated companies throughout the world www.macmillan.com ISBN 0 330 37717 5 Copyright © Richard Ballantine 1975, 1989, 2000 The right of Richard Ballantine to be identified as the author of this work has been asserted by him in accordance with the Copyright, Designs and Patents Act 1988. • All rights reserved. No part of this publication may be reproduced, stored in or introduced into a retrieval system, or transmitted, in any form, or by any means (electronic, mechanical, photocopying, recording or otherwise) without the prior written permission of the publisher. Any person who does any unauthorized act in relation to this publication may be liable to criminal prosecution and civil claims for damages. 1 3 5 7 9 8 6 4 2 A CIP catalogue record for this book is available from the British Library. • Printed and bound in Great Britain by The Bath Press Ltd, Bath This book is sold subject to the condition that it shall nor, by way of trade or otherwise, be lent, re-sold, hired out, or otherwise circulated without the publisher's prior consent in any form of binding or cover other than that in which it is published and without a similar condition including this condition being imposed on the subsequent purchaser. -

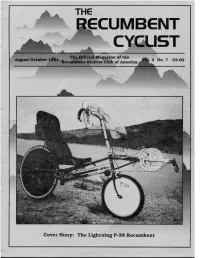

The Lightning P-38 Recumbent 2 the Recumbent Cyclist the LIGHTNING P-38 RECUMBENT by Robert J

Cover Story: The Lightning P-38 Recumbent 2 The Recumbent Cyclist THE LIGHTNING P-38 RECUMBENT by Robert J. Bryant When the subject of high performance recumbents is waterbottle mounts, a pump peg and dropout eyelets. The presented, the Lightning P-38 is sure to come to mind. Lightning's paint is among the finest found on any recum- Considering all of the recumbents currently marketed in bent. It is electrostatic baked on urethane powder and the USA only a few are really built for performance and comes in red, or royal blue. This finish is extremely durable only two designs really have the speed records to back up and looks great. We have found powdercoat much more the claims. These qualities are not an after thought with durable than lmron, but not as glossy a finish. The the Lightning, but the purpose in which the bike is de- difference becomes apparent when the bike is a year or so signed. It is apparent through our interviews with RBCA old and the Powdercoat still looks new. The new aluminum member/ Lightning owners that they know what they want seat frame is anodized silver and much lighter than the in a recumbent bicycle. Lightning owners seem to be previous chro-molly seat frame. among the most loyal owners of any brand of recumbent that we've tested. When Tim shipped the test bike he also included some of Lightning's new custom cast lugs. The lugs were part of the AEROSPACE BEGINNINGS new seat design. The heat treated anodized aluminum Tim Brummer and Company build the Lightnings in seat bolts onto the lugs at the frame and to the seat stays Lompoc California. -

January 2021 - Diy Plans Catalog

JANUARY 2021 - DIY PLANS CATALOG Anyone Can Do This! Our DIY Plans detail every aspect of the building process using easy to follow instructions with high resolution pictures and diagrams. Even if this is your first attempt at building something yourself, you will be able to succeed with our plans, as no previous expertise is assumed. Detailed Plans – Instant Download! We use real build photos and detailed diagrams instead of complex drawings, so anyone with a desire to build can succeed. Our bike and trike plans are not engineering blueprints, and do not call for hard-to-find parts or expensive tools. DIY Means Building Yourself a Better Life. Unleash your creativity, and turn your drawing board ideas into reality. You don't need a fancy garage full of tools or an unlimited budget to build anything shown on our DIY site, you just need the desire to do it yourself. Take pride in your home built projects, and create something completely unique from nothing more than recycled parts. AURORA DIY SUSPENSION TRIKE PLAN The Aurora Delta Recumbent Trike merges speed, handling, and comfort into one great looking ride! With under seat steering, and rear suspension, you will be enjoying your laid-back country cruises in pure comfort and style. Of course, The Aurora DIY Trike is also a hot performer, and will get you around as fast as your leg powered engine feels like working. This DIY Plan Contains 198 Pages and 203 Photos. Click above for more information. WARRIOR LOW RACER TADPOLE TRIKE PLAN The Warrior is one fast and great looking DIY Tadpole Trike that you can make using only basic tools and parts. -

LINK-Liste … Thema "Velomobile"

LINK-Liste … Thema "Velomobile" ... Elektromobilität Aerorider | Electric tricycle | Velomobiel Akkurad, Hersteller und Händler von Elektroleichtfahrzeugen BentBlog.de - Neuigkeiten aus der Liegerad, Trike und Velomobilszene bike-revolution - Velomobile, Österreich Birk Butterfly - alltagstaugliches Speed-HPV Blue Sky Design - HPV Blue Sky Design kit - HPV BugE Dreirad USA, Earth for Earthlings Cab-bike-D CARBIKE GmbH - "S-Pedelec-Auto" Collapsible Recumbent Tricycle Design (ICECycles) - YouTube Drymer - HPV mit Neigetechnik, NL ecoWARRIOR – 4WHEELER – eBike-Konzept von "mint-slot" ecoWARRIOR – e-Mobility – Spirit & Product Ein Fahrrad-Auto-Mobil "Made in Osnabrück" | NDR.de - Nachrichten - Niedersachsen - Osnabrück/Emsland ELF - Die amerikanische Antwort auf das Twike Elf - Wo ein Fahrrad aufs Auto trifft - USA fahrradverkleidung.de - Liegeradverkleidung, Trikeverkleidung, Dreiradverkleidung FN-Trike mit Neigetecchnik Future Cycles are Pedal-Powered Vehicles Ready to Inherit Carless Road - PSFK GinzVelo - futuristisches eBike aus den USA - Pedelecs und E-Bikes Go-One Velomobiles Goone3 - YouTube HP Velotechnik Liegeräder, Dreirad und recumbent Infos - Willkommen / Welcome HPV Sverige: Velomobil Archives ICLETTA - The culture of cycling Katanga s.r.o | WAW LEIBA - Startseite Leitra Light Electric Vehicle Association MARVELO - HPV Canada Mehrspurige ergonomische Fahrräder Mensch-Maschine-Hybrid – TWIKE - Blixxm Milan Velomobil - Die Zukunft der Mobilität Ocean Cycle HPV - GB - Manufacturer of high quality Velomobiles and recumbent bike