A Perception-Based Color Space for Illumination-Invariant Image Processing

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Image Processing Terms Used in This Section Working with Different Screen Bit Describes How to Determine the Screen Bit Depth of Your Depths (P

13 Color

This section describes the toolbox functions that help you work with color image data. Note that “color” includes shades of gray; therefore much of the discussion in this chapter applies to grayscale images as well as color images. Topics covered include:

Terminology: Provides definitions of image processing terms used in this section.

Working with Different Screen Bit Depths: Describes how to determine the screen bit depth of your system and provides recommendations if you can change the bit depth.

Reducing the Number of Colors in an Image: Describes how to use imapprox and rgb2ind to reduce the number of colors in an image, including information about dithering.

Converting to Other Color Spaces: Defines the concept of image color space and describes how to convert images between color spaces.

Terminology

An understanding of the following terms will help you to use this chapter.

Approximation: The method by which the software chooses replacement colors in the event that direct matches cannot be found. The methods of approximation discussed in this chapter are colormap mapping, uniform quantization, and minimum variance quantization.

Indexed image: An image whose pixel values are direct indices into an RGB colormap. In MATLAB, an indexed image is represented by an array of class uint8, uint16, or double. The colormap is always an m-by-3 array of class double. We often use the variable name X to represent an indexed image in memory, and map to represent the colormap.

Intensity image: An image consisting of intensity (grayscale) values.

Poisson Image Editing

Poisson Image Editing. Patrick Pérez, Michel Gangnet, Andrew Blake. Microsoft Research UK.

Abstract: Using generic interpolation machinery based on solving Poisson equations, a variety of novel tools are introduced for seamless editing of image regions. The first set of tools permits the seamless importation of both opaque and transparent source image regions into a destination region. The second set is based on similar mathematical ideas and allows the user to modify the appearance of the image seamlessly, within a selected region. These changes can be arranged to affect the texture, the illumination, and the color of objects lying in the region, or to make tileable a rectangular selection.

CR Categories: I.3.3 [Computer Graphics]: Picture/Image Generation: Display algorithms; I.3.6 [Computer Graphics]: Methodology and Techniques: Interaction techniques; I.4.3 [Image Processing and Computer Vision]: Enhancement: Filtering;

[...] able effect. Conversely, the second-order variations extracted by the Laplacian operator are the most significant perceptually. Secondly, a scalar function on a bounded domain is uniquely defined by its values on the boundary and its Laplacian in the interior. The Poisson equation therefore has a unique solution and this leads to a sound algorithm. So, given methods for crafting the Laplacian of an unknown function over some domain, and its boundary conditions, the Poisson equation can be solved numerically to achieve seamless filling of that domain. This can be replicated independently in each of the channels of a color image. Solving the Poisson equation also has an alternative interpretation as a minimization problem: it computes the function whose gradient is the closest, in the L2-norm, to some prescribed vector field (the guidance vector field) under given boundary conditions.
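The uniqueness claim in the excerpt above (a function is fixed by its boundary values and its interior Laplacian) can be illustrated with a small numerical sketch. This is not the authors' solver: it assumes a plain 4-neighbour discrete Laplacian and Gauss-Seidel iteration on a tiny grid, and the names `discrete_laplacian` and `solve_poisson` are hypothetical helpers introduced only for illustration.

```python
def discrete_laplacian(img):
    """4-neighbour Laplacian of a 2D list; the outer ring is left at 0."""
    h, w = len(img), len(img[0])
    lap = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            lap[y][x] = (img[y - 1][x] + img[y + 1][x]
                         + img[y][x - 1] + img[y][x + 1] - 4.0 * img[y][x])
    return lap


def solve_poisson(laplacian, boundary, iterations=500):
    """Gauss-Seidel solve of the discrete Poisson equation (assumed scheme).

    Only the outer ring of `boundary` is used (Dirichlet condition);
    interior values are recomputed from the prescribed Laplacian.
    """
    h, w = len(boundary), len(boundary[0])
    f = [[float(v) for v in row] for row in boundary]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            f[y][x] = 0.0  # discard interior values, keep only the boundary
    for _ in range(iterations):
        for y in range(1, h - 1):
            for x in range(1, w - 1):
                neighbours = f[y - 1][x] + f[y + 1][x] + f[y][x - 1] + f[y][x + 1]
                # neighbours - 4 f = laplacian  =>  f = (neighbours - laplacian) / 4
                f[y][x] = (neighbours - laplacian[y][x]) / 4.0
    return f


# A synthetic 6x6 "image": its interior is recovered from boundary + Laplacian alone.
source = [[float(x * x + 3 * y) for x in range(6)] for y in range(6)]
recovered = solve_poisson(discrete_laplacian(source), source)
max_error = max(abs(recovered[y][x] - source[y][x])
                for y in range(6) for x in range(6))
print(max_error)
```

For a color image, the same solve would be run once per channel, as the excerpt notes.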

Digital Backgrounds Using GIMP

Digital Backgrounds using GIMP GIMP is a free image editing program that rivals the functions of the basic versions of industry leaders such as Adobe Photoshop and Corel Paint Shop Pro. For information on which image editing program is best for your needs, please refer to our website, digital-photo- backgrounds.com in the FAQ section. This tutorial was created to teach you, step-by-step, how to remove a subject (person or item) from its original background and place it onto a Foto*Fun Digital Background. Upon completing this tutorial, you will have the skills to create realistic portraits plus photographic collages and other useful functions. If you have any difficulties with any particular step in the process, feel free to contact us anytime at digital-photo-backgrounds.com. How to read this tutorial It is recommended that you use your own image when following the instructions. However, one has been provided for you in the folder entitled "Tutorial Images". This tutorial uses screenshots to visually show you each step of the process. Each step will be numbered which refers to a number within the screenshot image to indicate the location of the function or tool being described in that particular step. Written instructions are first and refer to the following screenshot image. Keyboard shortcuts are shown after most of the described functions and are in parentheses as in the following example (ctrl+T); which would indicate that the "ctrl" key and the letter "T" key should be pressed at the same time. These are keyboard shortcuts and can be used in place of the described procedure; not in addition to the described procedure. -

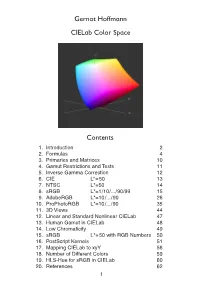

Cielab Color Space

Gernot Hoffmann. CIELab Color Space.

Contents: 1. Introduction; 2. Formulas; 3. Primaries and Matrices; 4. Gamut Restrictions and Tests; 5. Inverse Gamma Correction; 6. CIE L*=50; 7. NTSC L*=50; 8. sRGB L*=/0/.../90/99; 9. AdobeRGB L*=0/.../90; 10. ProPhotoRGB L*=0/.../90; 11. 3D Views; 12. Linear and Standard Nonlinear CIELab; 13. Human Gamut in CIELab; 14. Low Chromaticity; 15. sRGB L*=50 with RGB Numbers; 16. PostScript Kernels; 17. Mapping CIELab to xyY; 18. Number of Different Colors; 19. HLS-Hue for sRGB in CIELab; 20. References.

1.1 Introduction

CIE XYZ is an absolute color space (not device dependent). Each visible color has non-negative coordinates X, Y, Z. CIE xyY, the horseshoe diagram as shown below, is a perspective projection of XYZ coordinates onto a plane xy. The luminance is missing. CIELab is a nonlinear transformation of XYZ into coordinates L*, a*, b*. The gamut for any RGB color system is a triangle in the CIE xyY chromaticity diagram, here shown for the CIE primaries, the NTSC primaries, the Rec.709 primaries (which are also valid for sRGB and therefore for many PC monitors) and the non-physical working space ProPhotoRGB. The white points are individually defined for the color spaces. The CIELab color space was intended for equal perceptual differences for equal changes in the coordinates L*, a* and b*. Color differences deltaE are defined as Euclidean distances in CIELab. This document shows color charts in CIELab for several RGB color spaces.
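The nonlinear XYZ-to-CIELab transformation and the Euclidean deltaE mentioned in the excerpt above can be sketched as follows. The excerpt does not reproduce the formulas, so this uses the standard CIE 1976 definitions; the D65 reference white and the function names `xyz_to_lab` and `delta_e` are assumptions made for illustration, not taken from the source.

```python
import math

# Assumed reference white (D65, 2-degree observer, Y scaled to 100).
D65_WHITE = (95.047, 100.0, 108.883)


def _f(t):
    """Cube root with the standard linear segment near black."""
    delta = 6.0 / 29.0
    if t > delta ** 3:
        return t ** (1.0 / 3.0)
    return t / (3.0 * delta ** 2) + 4.0 / 29.0


def xyz_to_lab(xyz, white=D65_WHITE):
    """Convert CIE XYZ to CIELab (L*, a*, b*) relative to a reference white."""
    fx, fy, fz = (_f(c / w) for c, w in zip(xyz, white))
    return (116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz))


def delta_e(lab1, lab2):
    """Color difference as the Euclidean distance in CIELab (CIE76)."""
    return math.dist(lab1, lab2)


print(xyz_to_lab(D65_WHITE))
print(delta_e((50.0, 0.0, 0.0), (50.0, 3.0, 4.0)))
```

Passing a different `white` tuple adapts the sketch to color spaces whose white point is not D65.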

Real-Time Supervised Detection of Pink Areas in Dermoscopic Images of Melanoma: Importance of Color Shades, Texture and Location

Missouri University of Science and Technology, Scholars' Mine. Electrical and Computer Engineering Faculty Research & Creative Works, 01 Nov 2015. Real-Time Supervised Detection of Pink Areas in Dermoscopic Images of Melanoma: Importance of Color Shades, Texture and Location. Ravneet Kaur, P. P. Albano, Justin G. Cole, Jason R. Hagerty, et al. For a complete list of authors, see https://scholarsmine.mst.edu/ele_comeng_facwork/3045

Recommended citation: R. Kaur et al., "Real-Time Supervised Detection of Pink Areas in Dermoscopic Images of Melanoma: Importance of Color Shades, Texture and Location," Skin Research and Technology, vol. 21, no. 4, pp. 466-473, John Wiley & Sons, Nov 2015. The definitive version is available at https://doi.org/10.1111/srt.12216. Published in final edited form as: Skin Res Technol. 2015 November; 21(4): 466–473. doi:10.1111/srt.12216.

Ravneet Kaur, MS, Department of Electrical and Computer Engineering, Southern Illinois University Edwardsville, Campus Box 1801, Edwardsville, IL 62026-1801, Telephone: 618-210-6223. Peter P.

Accurately Reproducing Pantone Colors on Digital Presses

Accurately Reproducing Pantone Colors on Digital Presses By Anne Howard Graphic Communication Department College of Liberal Arts California Polytechnic State University June 2012 Abstract Anne Howard Graphic Communication Department, June 2012 Advisor: Dr. Xiaoying Rong The purpose of this study was to find out how accurately digital presses reproduce Pantone spot colors. The Pantone Matching System is a printing industry standard for spot colors. Because digital printing is becoming more popular, this study was intended to help designers decide on whether they should print Pantone colors on digital presses and expect to see similar colors on paper as they do on a computer monitor. This study investigated how a Xerox DocuColor 2060, Ricoh Pro C900s, and a Konica Minolta bizhub Press C8000 with default settings could print 45 Pantone colors from the Uncoated Solid color book with only the use of cyan, magenta, yellow and black toner. After creating a profile with a GRACoL target sheet, the 45 colors were printed again, measured and compared to the original Pantone Swatch book. Results from this study showed that the profile helped correct the DocuColor color output, however, the Konica Minolta and Ricoh color outputs generally produced the same as they did without the profile. The Konica Minolta and Ricoh have much newer versions of the EFI Fiery RIPs than the DocuColor so they are more likely to interpret Pantone colors the same way as when a profile is used. If printers are using newer presses, they should expect to see consistent color output of Pantone colors with or without profiles when using default settings. -

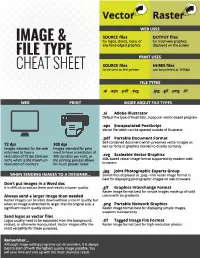

Image & File Type Cheat Sheet

Image & File Type Cheat Sheet

Vector. Web uses: source files for logos, charts, icons, or any hard-edged graphics. Print uses: source files to be sent to the printer.

Raster. Web uses: output files for most web graphics displayed on the screen. Print uses: hi-res files can be printed at 300 dpi.

File types: .ai .eps .pdf .svg .jpg .gif .png .tif

Web (72 dpi): Images intended for the web only need to have a resolution of 72 dpi (dots per inch), which is the maximum resolution of monitors.

Print (300 dpi): Images intended for print need to have a resolution of 300 dpi (dots per inch), as the printing process allows for much greater detail.

More about file types

.ai, Adobe Illustrator: Default file type of Illustrator, a popular vector-based program.

.eps, Encapsulated Postscript: Vector file which can be opened outside of Illustrator.

.pdf, Portable Document Format: Self-contained document which preserves vector images as well as fonts or graphics needed to display correctly.

.svg, Scaleable Vector Graphics: XML-based vector image format supported by modern web browsers.

.jpg, Joint Photographic Experts Group: Sometimes displayed as .jpeg, this raster image format is best for displaying photographic images on web browsers.

.gif, Graphics Interchange Format: Raster image format best for simple images made up of solid colors with no gradients.

.png, Portable Network Graphics

When sending images to a designer

Don't put images in a Word doc. It is difficult to extract them and results in lower quality.

Always send a larger image than needed. Raster images can be sized down without a loss in quality, but when an image is stretched to larger than its original size, a significant loss in quality occurs.

Predictability of Spot Color Overprints

Predictability of Spot Color Overprints. Robert Chung, Michael Riordan, and Sri Prakhya. Rochester Institute of Technology, School of Print Media, 69 Lomb Memorial Drive, Rochester, NY 14623, USA.

Keywords: spot color, overprint, color management, portability, predictability

Abstract: Pre-media software packages, e.g., Adobe Illustrator, do amazing things. They give designers endless choices of how line, area, color, and transparency can interact with one another while providing the display that simulates printed results. Most prepress practitioners are thrilled with pre-media software when working with process colors. This research encountered a color management gap in pre-media software’s ability to predict spot color overprint accurately between display and print. In order to understand the problem, this paper (1) describes the concepts of color portability and color predictability in the context of color management, (2) describes an experimental set-up whereby display and print are viewed under bright viewing surround, (3) conducts display-to-print comparison of process color patches, (4) conducts display-to-print comparison of spot color solids, and, finally, (5) conducts display-to-print comparison of spot color overprints. In doing so, this research points out why the display-to-print match works for process colors and fails for spot color overprints. Like a genie out of the bottle, there is neither turning back nor a quick fix to reconcile the problem with predictability of spot color overprints in pre-media software for some time to come.

1. Introduction

Color portability is a key concept in ICC color management.

Preparing Images for Delivery

TECHNICAL PAPER: Preparing Images for Delivery

Table of contents: How to prepare RGB files for CMYK; Soft proofing and gamut warning; Final image sizing; Image sharpening; Converting to CMYK; What about providing RGB files?; The proof; Marking your territory; File formats for delivery; Check list for file delivery; Additional resources.

So, you’ve done a great job for your client. You’ve created a nice image that you both agree meets the requirements of the layout. Now what do you do? You deliver it (so you can bill it!). But, in this digital age, how you prepare an image for delivery can make or break the final reproduction. Guess who will get the blame if the image’s reproduction is less than satisfactory? Do you even need to guess?

What should photographers do to ensure that their images reproduce well in print? Take some precautions and learn the lingo so you can communicate, because a lack of crystal-clear communication is at the root of most every problem on press.

It should be no surprise that knowing what the client needs is a requirement of professional photographers. But does that mean a photographer in the digital age must become a prepress expert? Kind of, if only to know exactly what to supply your clients.

There are two perfectly legitimate approaches to the problem of supplying digital files for reproduction. One approach is to supply RGB files, and the other is to take responsibility for supplying CMYK files. Either approach is valid, each with positives and negatives.

Dalmatian's Definitions

The Black & White Papers: Dalmatian’s Definitions

8 BIT: Bit depth determines the number of colors or tones that can be assigned to each pixel. In 8 bit files, 1 of 256 tones is assigned to each pixel. 8-bit JPEG is the default setting for most cameras; whenever possible set the camera to RAW capture for the best image capture possible.

16 BIT: In 16 bit files, 1 of 65,536 tones is assigned to each pixel. 16 bit files have the greatest range of tonalities and a more realistic interpretation of continuous tone. Note the increase in tonalities from 8 bit to 16 bit. If your aim is the quality of the image, then 16 bit is a must.

ADOBE 1998 COLOR SPACE: Adobe ‘98 is the preferred RGB color space that places the captured and therefore printable colors within larger parameters, rendering approximately 50% of the visible color space of the human eye. Whenever possible, change the camera’s default sRGB setting to Adobe 1998 in order to increase available color capture. When an image is captured in sRGB, it is best to leave the color space unchanged so color rendering is unaffected.

ARCHIVAL: Archival materials are those with a neutral pH balance that will not degrade over time and are resistant to UV fading.

Specification of Srgb

How to interpret the sRGB color space (specified in IEC 61966-2-1) for ICC profiles

A. Key sRGB color space specifications (see IEC 61966-2-1 https://webstore.iec.ch/publication/6168 for more information).

1. Chromaticity co-ordinates of primaries: R: x = 0.64, y = 0.33, z = 0.03; G: x = 0.30, y = 0.60, z = 0.10; B: x = 0.15, y = 0.06, z = 0.79. Note: These are defined in ITU-R BT.709 (the television standard for HDTV capture).

2. Reference display ‘Gamma’: Approximately 2.2 (see precise specification of color component transfer function below).

3. Reference display white point chromaticity: x = 0.3127, y = 0.3290, z = 0.3583 (equivalent to the chromaticity of CIE Illuminant D65).

4. Reference display white point luminance: 80 cd/m² (includes veiling glare). Note: The reference display white point tristimulus values are: Xabs = 76.04, Yabs = 80, Zabs = 87.12.

5. Reference veiling glare luminance: 0.2 cd/m² (this is the reference viewer-observed black point luminance). Note: The reference viewer-observed black point tristimulus values are assumed to be: Xabs = 0.1901, Yabs = 0.2, Zabs = 0.2178. These values are not specified in IEC 61966-2-1, and are an additional interpretation provided in this document.

6. Tristimulus value normalization: The CIE 1931 XYZ values are scaled from 0.0 to 1.0. Note: The following scaling equations can be used. These equations are not provided in IEC 61966-2-1, and are an additional interpretation provided in this document.

$$X_N = \frac{76.04\,(X_{abs} - 0.1901)}{80\,(76.04 - 0.1901)} = 0.0125313\,(X_{abs} - 0.1901)$$

$$Y_N = \frac{Y_{abs} - 0.2}{80 - 0.2} = 0.0125313\,(Y_{abs} - 0.2)$$

$$Z_N = \frac{87.12\,(Z_{abs} - 0.2178)}{80\,(87.12 - 0.2178)} = 0.0125313\,(Z_{abs} - 0.2178)$$

7.
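The tristimulus normalization in item 6 above can be checked numerically with a short sketch. It assumes the scaling has the form white × (value − black) / (Y_white × (white − black)) per channel, which reproduces the 0.0125313 coefficient quoted in the excerpt; the function name `normalize_xyz` and the constant names are hypothetical.

```python
# Reference display white and viewer-observed black points quoted in the excerpt.
WHITE_ABS = (76.04, 80.0, 87.12)
BLACK_ABS = (0.1901, 0.2, 0.2178)


def normalize_xyz(x_abs, y_abs, z_abs):
    """Scale absolute XYZ so that black maps to 0 and white to Y = 1 (assumed form)."""
    y_white = WHITE_ABS[1]
    scaled = []
    for value, white, black in zip((x_abs, y_abs, z_abs), WHITE_ABS, BLACK_ABS):
        scaled.append(white * (value - black) / (y_white * (white - black)))
    return tuple(scaled)


# Per-channel coefficient; each should be close to the quoted 0.0125313.
coefficients = tuple(w / (WHITE_ABS[1] * (w - b)) for w, b in zip(WHITE_ABS, BLACK_ABS))
print(coefficients)
print(normalize_xyz(*WHITE_ABS))
print(normalize_xyz(*BLACK_ABS))
```

Under this form the white point normalizes to roughly (0.9505, 1.0, 1.089), the familiar D65 values with Y = 1.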

Computational RYB Color Model and Its Applications

IIEEJ Transactions on Image Electronics and Visual Computing, Vol.5 No.2 (2017), Special Issue on Application-Based Image Processing Technologies. Computational RYB Color Model and its Applications. Junichi SUGITA† (Member), Tokiichiro TAKAHASHI†† (Member). †Tokyo Healthcare University, ††Tokyo Denki University/UEI Research

Summary: The red-yellow-blue (RYB) color model is a subtractive model based on pigment color mixing and is widely used in art education. In the RYB color model, red, yellow, and blue are defined as the primary colors. In this study, we apply this model to computers by formulating a conversion between the red-green-blue (RGB) and RYB color spaces. In addition, we present a class of compositing methods in the RYB color space. Moreover, we prescribe the appropriate uses of these compositing methods in different situations. By using RYB color compositing, paint-like compositing can be easily achieved. We also verified the effectiveness of our proposed method by using several experiments and demonstrated its application on the basis of RYB color compositing.

Keywords: RYB, RGB, CMY(K), color model, color space, color compositing

1. Introduction

Most people have had the experience of creating an arbitrary color by mixing different color pigments on a palette or a canvas. The red-yellow-blue (RYB) color model proposed

[...] man perception system and computer displays, most computer applications use the red-green-blue (RGB) color model3); however, this model is not comprehensible for many people who are not trained in the RGB color model because of its use of additive color mixing. As shown in Fig.