The Continuum Hypothesis and Forcing

Recommended publications

Axiomatic Set Theory P.D.Welch

Axiomatic Set Theory. P.D. Welch. August 16, 2020.
Contents
1 Axioms and Formal Systems
  1.1 Introduction
  1.2 Preliminaries: axioms and formal systems
    1.2.1 The formal language of ZF set theory; terms
    1.2.2 The Zermelo-Fraenkel Axioms
  1.3 Transfinite Recursion
  1.4 Relativisation of terms and formulae
2 Initial segments of the Universe
  2.1 Singular ordinals: cofinality
    2.1.1 Cofinality
    2.1.2 Normal Functions and closed and unbounded classes
    2.1.3 Stationary Sets
  2.2 Some further cardinal arithmetic
  2.3 Transitive Models
  2.4 The H sets
    2.4.1 H - the hereditarily finite sets
    2.4.2 H - the hereditarily countable sets
  2.5 The Montague-Levy Reflection theorem
    2.5.1 Absoluteness
    2.5.2 Reflection Theorems
  2.6 Inaccessible Cardinals
    2.6.1 Inaccessible cardinals
    2.6.2 A menagerie of other large cardinals
3 Formalising semantics within ZF
  3.1 Definite terms and formulae
    3.1.1 The non-finite axiomatisability of ZF
  3.2 Formalising syntax
  3.3 Formalising the satisfaction relation
  3.4 Formalising definability: the function Def.
  3.5 More on correctness and consistency
    3.5.1 Incompleteness and Consistency Arguments
4 The Constructible Hierarchy
  4.1 The L -hierarchy
  4.2 The Axiom of Choice in L
  4.3 The Axiom of Constructibility
  4.4 The Generalised Continuum Hypothesis in L. -

Singular Cardinals: from Hausdorff's Gaps to Shelah's Pcf Theory

SINGULAR CARDINALS: FROM HAUSDORFF’S GAPS TO SHELAH’S PCF THEORY Menachem Kojman 1 PREFACE The mathematical subject of singular cardinals is young and many of the mathematicians who made important contributions to it are still active. This makes writing a history of singular cardinals a somewhat riskier mission than writing the history of, say, Babylonian arithmetic. Yet exactly the discussions with some of the people who created the 20th century history of singular cardinals made the writing of this article fascinating. I am indebted to Moti Gitik, Ronald Jensen, István Juhász, Menachem Magidor and Saharon Shelah for the time and effort they spent on helping me understand the development of the subject and for many illuminations they provided. A lot of what I thought about the history of singular cardinals had to change as a result of these discussions. Special thanks are due to István Juhász, for his patient reading for me from the Russian text of Alexandrov and Urysohn’s Memoirs, to Salma Kuhlmann, who directed me to the definition of singular cardinals in Hausdorff’s writing, and to Stefan Geschke, who helped me with the German texts I needed to read and sometimes translate. I am also indebted to the Hausdorff project in Bonn, for publishing a beautiful annotated volume of Hausdorff’s monumental Grundzüge der Mengenlehre and for Springer Verlag, for rushing to me a free copy of this book; many important details about the early history of the subject were drawn from this volume. The wonderful library and archive of the Institute Mittag-Leffler are a treasure for anyone interested in mathematics at the turn of the 20th century; a particularly pleasant duty for me is to thank the institute for hosting me during my visit in September of 2009, which allowed me to verify various details in the early research literature, as well as providing me the company of many set theorists and model theorists who are interested in the subject. -

Universes for Category Theory

Universes for category theory Zhen Lin Low 28 November 2014 (arXiv:1304.5227v2 [math.CT], 28 Nov 2014) Abstract The Grothendieck universe axiom asserts that every set is a member of some set-theoretic universe U that is itself a set. One can then work with entities like the category of all U-sets or even the category of all locally U-small categories, where U is an “arbitrary but fixed” universe, all without worrying about which set-theoretic operations one may legitimately apply to these entities. Unfortunately, as soon as one allows the possibility of changing U, one also has to face the fact that universal constructions such as limits or adjoints or Kan extensions could, in principle, depend on the parameter U. We will prove this is not the case for adjoints of accessible functors between locally presentable categories (and hence, limits and Kan extensions), making explicit the idea that “bounded” constructions do not depend on the choice of U. Introduction In category theory it is often convenient to invoke a certain set-theoretic device commonly known as a ‘Grothendieck universe’, but we shall say simply ‘universe’, so as to simplify exposition and proofs by eliminating various circumlocutions involving cardinal bounds, proper classes etc. In [SGA 4a, Exposé I, §0], the authors adopt the following universe axiom: For each set x, there exists a universe U with x ∈ U. One then introduces a universe parameter U and speaks of U-sets, locally U-small categories, and so on, with the proviso that U is “arbitrary”. -

The Axiom of Choice. Cardinals and Cardinal Arithmetic

Set Theory (MATH 6730) The Axiom of Choice. Cardinals and Cardinal Arithmetic
1. The Axiom of Choice
We will discuss several statements that are equivalent (in ZF) to the Axiom of Choice.
Definition 1.1. Let A be a set of nonempty sets. A choice function for A is a function f with domain A such that f(a) ∈ a for all a ∈ A.
Theorem 1.2. In ZF the following statements are equivalent:
AC (The Axiom of Choice) For any set A of pairwise disjoint, nonempty sets there exists a set C which has exactly one element from every set in A.
CFP (Choice Function Principle) For any set A of nonempty sets there exists a choice function for A.
WOP (Well-Ordering Principle) For every set B there exists a well-ordering (B, ≺).
ZLm (Zorn's Lemma) If (D, <) is a partial order such that (∗) every subset of D that is linearly ordered by < has an upper bound in D, then (D, <) has a maximal element. (Footnote: Condition (∗) for the empty subset of D is equivalent to requiring that D ≠ ∅.)
Corollary 1.3. ZFC ⊢ CFP, WOP, ZLm.
Proof of Theorem 1.2. AC ⇒ CFP: Assume AC, and let A be a set of nonempty sets. By the Axiom of Replacement, Ā = {{a} × a : a ∈ A} is a set. Hence, by AC, there is a set C which has exactly one element from every set in Ā. C is a choice function for A.
CFP ⇒ WOP: Assume CFP, and let B be a set. -
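The AC ⇒ CFP step in the excerpt above can be sketched for finite families, where no choice axiom is actually needed. This is only an illustration: the function names `disjointify`, `selector`, and `choice_function` are hypothetical, and `selector` stands in for the set C that AC would supply.

```python
from itertools import product


def disjointify(family):
    """Map each member a of the family to its tagged copy {a} x a.

    The tagged copies are pairwise disjoint even when the original
    sets overlap, because every pair carries its source set a.
    """
    return {a: frozenset(product([a], a)) for a in family}


def selector(disjoint_sets):
    """Pick exactly one element from each set.

    For a finite family this is trivial; it plays the role of the
    set C whose existence AC asserts (an assumption of this sketch).
    """
    return {min(s, key=repr) for s in disjoint_sets}


def choice_function(family):
    """Build a choice function for a finite family of nonempty sets."""
    tagged = disjointify(family)
    c = selector(tagged.values())
    # c is a set of pairs (a, x) with x in a, one pair per a,
    # so it is the graph of a function defined on the family.
    return dict(c)


family = [frozenset({1, 2}), frozenset({2, 3}), frozenset({2})]
f = choice_function(family)
print(all(f[a] in a for a in family))
```

The point of the tagging step is that the sets in the family may overlap, while AC as stated in the excerpt only applies to pairwise disjoint sets.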

Georg Cantor English Version

GEORG CANTOR (March 3, 1845 – January 6, 1918) by HEINZ KLAUS STRICK, Germany There is hardly another mathematician whose reputation among his contemporary colleagues reflected such a wide disparity of opinion: for some, GEORG FERDINAND LUDWIG PHILIPP CANTOR was a corruptor of youth (KRONECKER), while for others, he was an exceptionally gifted mathematical researcher (DAVID HILBERT 1925: Let no one be allowed to drive us from the paradise that CANTOR created for us.) GEORG CANTOR’s father was a successful merchant and stockbroker in St. Petersburg, where he lived with his family, which included six children, in the large German colony until he was forced by ill health to move to the milder climate of Germany. In Russia, GEORG was instructed by private tutors. He then attended secondary schools in Wiesbaden and Darmstadt. After he had completed his schooling with excellent grades, particularly in mathematics, his father acceded to his son’s request to pursue mathematical studies in Zurich. GEORG CANTOR could equally well have chosen a career as a violinist, in which case he would have continued the tradition of his two grandmothers, both of whom were active as respected professional musicians in St. Petersburg. When in 1863 his father died, CANTOR transferred to Berlin, where he attended lectures by KARL WEIERSTRASS, ERNST EDUARD KUMMER, and LEOPOLD KRONECKER. On completing his doctorate in 1867 with a dissertation on a topic in number theory, CANTOR did not obtain a permanent academic position. He taught for a while at a girls’ school and at an institution for training teachers, all the while working on his habilitation thesis, which led to a teaching position at the university in Halle. -

Elements of Set Theory

Elements of set theory. April 1, 2014.
Contents
1 Zermelo–Fraenkel axiomatization
  1.1 Historical context
  1.2 The language of the theory
  1.3 The most basic axioms
  1.4 Axiom of Infinity
  1.5 Axiom schema of Comprehension
  1.6 Functions
  1.7 Axiom of Choice
  1.8 Axiom schema of Replacement
  1.9 Axiom of Regularity
2 Basic notions
  2.1 Transitive sets
  2.2 Von Neumann's natural numbers
  2.3 Finite and infinite sets
  2.4 Cardinality
  2.5 Countable and uncountable sets
3 Ordinals
  3.1 Basic definitions
  3.2 Transfinite induction and recursion
  3.3 Applications with choice
  3.4 Applications without choice
  3.5 Cardinal numbers
4 Descriptive set theory
  4.1 Rational and real numbers
  4.2 Topological spaces
  4.3 Polish spaces
  4.4 Borel sets
  4.5 Analytic sets
  4.6 Lebesgue's mistake
5 Formal logic
  5.1 Propositional logic
    5.1.1 Propositional logic: syntax
    5.1.2 Propositional logic: semantics
    5.1.3 Propositional logic: completeness
  5.2 First order logic
    5.2.1 First order logic: syntax
    5.2.2 First order logic: semantics
    5.2.3 Completeness theorem
6 Model theory
  6.1 Basic notions
  6.2 Ultraproducts and nonstandard analysis
  6.3 Quantifier elimination and the real closed fields -

Notes on Set Theory, Part 2

§11 Regular cardinals In what follows, κ, λ, µ, ν, ρ always denote cardinals. A cardinal κ is said to be regular if κ is infinite, and the union of fewer than κ sets, each of whose cardinality is less than κ, is of cardinality less than κ. In symbols: κ is regular if κ is infinite, and for any set I with |I| < κ and any family ⟨A_i⟩_{i∈I} of sets such that |A_i| < κ for all i ∈ I, we have |⋃_{i∈I} A_i| < κ. (Among finite cardinals, only 0, 1 and 2 satisfy the displayed condition; it is not worth including these among the cardinals that we want to call "regular".) To see two examples, we know that ℵ_0 is regular: this is just to say that the union of finitely many finite sets is finite. We have also seen that ℵ_1 is regular: the meaning of this is that the union of countably many countable sets is countable. For future use, let us note the simple fact that the ordinal-least-upper-bound of any set of cardinals is a cardinal; for any set I, and cardinals λ_i for i ∈ I, lub_{i∈I} λ_i is a cardinal. Indeed, if α = lub_{i∈I} λ_i, and β < α, then for some i ∈ I, β < λ_i. If we had β ∼ α, then by β < λ_i ≤ α, and Cantor-Bernstein, we would have β ∼ λ_i, contradicting the facts that λ_i is a cardinal and β < λ_i. -

The Continuum Hypothesis

Mathematics As A Liberal Art Math 105 Fall 2015 Fowler 302 MWF 10:40am-11:35am BY: 2015 Ron Buckmire http://sites.oxy.edu/ron/math/105/15/ Class 22: Monday October 26 Aleph One (ℵ_1) and All That The Continuum Hypothesis: Introducing ℵ_1 (Aleph One) We can show that the power set of the natural numbers has a cardinality greater than the cardinality of the natural numbers. This result was proven in an 1891 paper by German mathematician Georg Cantor (1845-1918) who used something called a diagonalization argument in his proof. Using our formula for the cardinality of the power set, |P(N)| = 2^{ℵ_0} = ℵ_1. The cardinality of the power set of the natural numbers is called ℵ_1. THEOREM (Cantor's Power Set Theorem) For any set S (finite or infinite), the cardinality of the power set of S, i.e. P(S), is always strictly greater than the cardinality of S. Mathematically, this says that |P(S)| > |S|. THEOREM c > ℵ_0. The cardinality of the real numbers is greater than the cardinality of the natural numbers. DEFINITION: Uncountable. An infinite set is said to be uncountable (or nondenumerable) if it has a cardinality that is greater than ℵ_0, i.e. it has more elements than the set of natural numbers. PROOF Let's use Cantor's diagonal process to show that the set of real numbers between 0 and 1 is uncountable. THEOREM The Continuum Hypothesis, i.e. ℵ_1 = c. The continuum hypothesis is that the cardinality of the continuum (i.e. -
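The diagonalization idea behind the power set theorem quoted in the excerpt above can be checked exhaustively on a small finite set. The sketch below is an illustration only, not the construction from the class notes: the names `power_set`, `diagonal_set`, and `no_surjection` are hypothetical. For each map f from S to P(S) it forms the diagonal set D = {x in S : x not in f(x)} and confirms that D is never a value of f.

```python
from itertools import combinations, product


def power_set(s):
    """Return all subsets of s as frozensets."""
    items = sorted(s)
    return [
        frozenset(c)
        for r in range(len(items) + 1)
        for c in combinations(items, r)
    ]


def diagonal_set(s, f):
    """Cantor's diagonal set D = {x in S : x not in f(x)}."""
    return frozenset(x for x in s if x not in f[x])


def no_surjection(s):
    """Check that every map f: S -> P(S) misses its own diagonal set."""
    items = sorted(s)
    subsets = power_set(s)
    # Enumerate all |P(S)| ** |S| maps by choosing an image for each element.
    for images in product(subsets, repeat=len(items)):
        f = dict(zip(items, images))
        if diagonal_set(s, f) in images:
            return False
    return True


print(no_surjection({0, 1, 2}))
```

A finite check proves nothing about infinite sets, but the same definition of D is what makes the general argument work: if D were f(x) for some x, then x would belong to D exactly when it does not.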

The Continuum Hypothesis and Its Relation to the Lusin Set

THE CONTINUUM HYPOTHESIS AND ITS RELATION TO THE LUSIN SET CLIVE CHANG Abstract. In this paper, we prove that the Continuum Hypothesis is equivalent to the existence of a subset of R called a Lusin set and the property that every subset of R with cardinality < c is of first category. Additionally, we note an interesting consequence of the measure of a Lusin set, specifically that it has measure zero. We introduce the concepts of ordinals and cardinals, as well as discuss some basic point-set topology.
Contents
1. Introduction
2. Ordinals
3. Cardinals and Countability
4. The Continuum Hypothesis and Aleph Numbers
5. The Topology on R
6. Meagre (First Category) and Fσ Sets
7. The existence of a Lusin set, assuming CH
8. The Lebesgue Measure on R
9. Additional Property of the Lusin set
10. Lemma: CH is equivalent to R being representable as an increasing chain of countable sets
11. The Converse: Proof of CH from the existence of a Lusin set and a property of R
12. Closing Comments
Acknowledgments
References
1. Introduction Throughout much of the early and middle twentieth century, the Continuum Hypothesis (CH) served as one of the premier problems in the foundations of mathematical set theory, attracting the attention of countless famous mathematicians, most notably, Gödel, Cantor, Cohen, and Hilbert. The conjecture, first advanced by Cantor in 1877, makes a claim about the relationship between the cardinality of the continuum (R) and the cardinality of the natural numbers (N), in relation to infinite set hierarchy. -

Aaboe, Asger Episodes from the Early History of Mathematics QA22 .A13 Abbott, Edwin Abbott Flatland: a Romance of Many Dimensions QA699 .A13 1953 Abbott, J

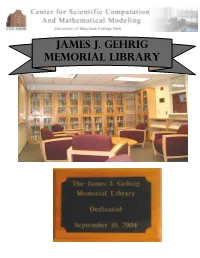

James J. Gehrig Memorial Library
Table of Contents
Section I.
  Cover Page
  Table of Contents
  Biography of James Gehrig
Section II. - Library
  Author’s Last Name beginning with ‘A’
  Author’s Last Name beginning with ‘B’
  Author’s Last Name beginning with ‘C’
  Author’s Last Name beginning with ‘D’
  Author’s Last Name beginning with ‘E’
  Author’s Last Name beginning with ‘F’
  Author’s Last Name beginning with ‘G’
  Author’s Last Name beginning with ‘H’
  Author’s Last Name beginning with ‘I’
  Author’s Last Name beginning with ‘J’
  Author’s Last Name beginning with ‘K’
  Author’s Last Name beginning with ‘L’
  Author’s Last Name beginning with ‘M’
  Author’s Last Name beginning with ‘N’
  Author’s Last Name beginning with ‘O’
  Author’s Last Name beginning with ‘P’
  Author’s Last Name beginning with ‘Q’
  Author’s Last Name beginning with ‘R’
  Author’s Last Name beginning with ‘S’
  Author’s Last Name beginning with ‘T’
  Author’s Last Name beginning with ‘U’
  Author’s Last Name beginning -

2.5. INFINITE SETS Now That We Have Covered the Basics of Elementary

2.5. INFINITE SETS Now that we have covered the basics of elementary set theory in the previous sections, we are ready to turn to infinite sets and some more advanced concepts in this area. Shortly after Georg Cantor laid out the core principles of his new theory of sets in the late 19th century, his work led him to a trove of controversial and groundbreaking results related to the cardinalities of infinite sets. We will explore some of these extraordinary findings, including Cantor’s eponymous theorem on power sets and his famous diagonal argument, both of which imply that infinite sets come in different “sizes.” We also present one of the grandest problems in all of mathematics – the Continuum Hypothesis, which posits that the cardinality of the continuum (i.e. the set of all points on a line) is equal to that of the power set of the set of natural numbers. Lastly, we conclude this section with a foray into transfinite arithmetic, an extension of the usual arithmetic with finite numbers that includes operations with so-called aleph numbers – the cardinal numbers of infinite sets. If all of this sounds rather outlandish at the moment, don’t be surprised. The properties of infinite sets can be highly counter-intuitive and you may likely be in total disbelief after encountering some of Cantor’s theorems for the first time. Cantor himself said it best: after deducing that there are just as many points on the unit interval (0,1) as there are in n-dimensional space, he wrote to his friend and colleague Richard Dedekind: “I see it, but I don’t believe it!” The Tricky Nature of Infinity Throughout the ages, human beings have always wondered about infinity and the notion of uncountability. -

Set.1 the Power of the Continuum

set.1 The Power of the Continuum In second-order logic we can quantify over subsets of the domain, but not over sets of subsets of the domain. To do this directly, we would need third-order logic. For instance, if we wanted to state Cantor's Theorem that there is no injective function from the power set of a set to the set itself, we might try to formulate it as “for every set X, and every set P, if P is the power set of X, then not P ⪯ X”. And to say that P is the power set of X would require formalizing that the elements of P are all and only the subsets of X, so something like ∀Y (P(Y) ↔ Y ⊆ X). The problem lies in P(Y): that is not a formula of second-order logic, since only terms can be arguments to one-place relation variables like P. We can, however, simulate quantification over sets of sets, if the domain is large enough. The idea is to make use of the fact that a two-place relation R relates elements of the domain to elements of the domain. Given such an R, we can collect all the elements to which some x is R-related: {y ∈ |M| : R(x, y)} is the set “coded by” x. Conversely, if Z ⊆ ℘(|M|) is some collection of subsets of |M|, and there are at least as many elements of |M| as there are sets in Z, then there is also a relation R ⊆ |M|² such that every Y ∈ Z is coded by some x using R.
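The coding trick described above can be made concrete on a finite domain. The following is a minimal sketch, not taken from the source: `coded_set` and `code_family` are hypothetical names, and the particular assignment of codes (pairing sorted domain elements with sorted family members) is just one arbitrary choice among many.

```python
def coded_set(relation, x):
    """Return {y : R(x, y)}, the set coded by x under the relation R."""
    return frozenset(y for (u, y) in relation if u == x)


def code_family(domain, family):
    """Build R, a set of pairs over the domain, coding every set in the family.

    Each member Y of the family is assigned its own code x, which
    requires at least as many domain elements as sets in the family.
    """
    codes = sorted(domain)
    members = sorted(family, key=sorted)
    if len(members) > len(codes):
        raise ValueError("domain too small to code the family")
    # Pair the i-th code with the i-th set and relate it to that set's elements.
    return {(x, y) for x, ys in zip(codes, members) for y in ys}


domain = {0, 1, 2, 3}
family = {frozenset({0, 1}), frozenset({2}), frozenset()}
relation = code_family(domain, family)
print(all(any(coded_set(relation, x) == ys for x in domain) for ys in family))
```

Note that any x with no R-successors codes the empty set, so unused domain elements all code the empty set under this scheme; that is harmless when the goal is only that every set in the family has some code.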