Synchronized Real Time Audio Streaming Over Ethernet in Embedded Systems


Recommended publications


On TTEthernet for Integrated Fault-Tolerant Spacecraft Networks

Andrew Loveless, NASA Johnson Space Center, Houston, TX, 77058

There has recently been a push for adopting integrated modular avionics (IMA) principles in designing spacecraft architectures. This consolidation of multiple vehicle functions to shared computing platforms can significantly reduce spacecraft cost, weight, and design complexity. Ethernet technology is attractive for inclusion in more integrated avionic systems due to its high speed, flexibility, and the availability of inexpensive commercial off-the-shelf (COTS) components. Furthermore, Ethernet can be augmented with a variety of quality of service (QoS) enhancements that enable its use for transmitting critical data. TTEthernet introduces a decentralized clock synchronization paradigm enabling the use of time-triggered Ethernet messaging appropriate for hard real-time applications. TTEthernet can also provide two forms of event-driven communication, therefore accommodating the full spectrum of traffic criticality levels required in IMA architectures. This paper explores the application of TTEthernet technology to future IMA spacecraft architectures as part of the Avionics and Software (A&S) project chartered by NASA's Advanced Exploration Systems (AES) program.

Nomenclature
A&S = Avionics and Software Project
AA2 = Ascent Abort 2
AES = Advanced Exploration Systems Program
ANTARES = Advanced NASA Technology Architecture for Exploration Studies
API = Application Program Interface
ARM = Asteroid Redirect Mission

Data Center Ethernet 2

Data Center Ethernet
Raj Jain, Washington University in Saint Louis, Saint Louis, MO 63130, [email protected]
These slides and audio/video recordings of this class lecture are at: http://www.cse.wustl.edu/~jain/cse570-15/
©2015 Raj Jain

Overview
1. Residential vs. Data Center Ethernet
2. Review of Ethernet Addresses, devices, speeds, algorithms
3. Enhancements to Spanning Tree Protocol
4. Virtual LANs
5. Data Center Bridging Extensions

Quiz: True or False?
Which of the following statements are generally true?
• Ethernet is a local area network (Local < 2km)
• Token ring, Token Bus, and CSMA/CD are the three most common LAN access methods.
• Ethernet uses CSMA/CD.
• Ethernet bridges use spanning tree for packet forwarding.
• Ethernet frames are 1518 bytes.
• Ethernet does not provide any delay guarantees.
• Ethernet has no congestion control.
• Ethernet has strict priorities.

Residential vs. Data Center Ethernet
• Distance: up to 200m (residential); no limit (data center)
• Scale: few MAC addresses, 4096 VLANs (residential); millions of MAC addresses, millions of VLANs, Q-in-Q (data center)
• Protection: spanning tree (residential); rapid spanning tree, … (gives 1s, need 50ms) (data center)
• Path: determined by spanning tree (residential); traffic engineered path (data center)
• Service: simple service (residential); Service Level Agreement (data center)

Study of the Time Triggered Ethernet Dataflow

Institutionen för datavetenskap / Department of Computer and Information Science
Final thesis: Study of the Time Triggered Ethernet Dataflow, by Niclas Rosenvik
LIU-IDA/LITH-EX-G--15/011--SE, 2015-07-08
Linköpings universitet, SE-581 83 Linköping, Sweden
Supervisor: Unmesh Bordoloi
Examiner: Petru Eles

Abstract
In recent years Ethernet has caught the attention of the real-time community. The main reason for this is that it has a high data throughput, 10Mbit/s and higher, and good EMI characteristics. As a protocol that might be used in real-time environments such as control systems for cars etc, it seems to fulfil the requirements. TTEthernet is a TDMA extension to normal Ethernet, designed to meet the hard deadlines required by real-time networks. This thesis describes how TTEthernet handles frames and the mathematical formulas to calculate shuffle delay of frames in such a network. Open problems related to TTEthernet are also discussed.

Contents
1 Introduction
2 Ethernet
2.1 Switching
2.2 Ethernet frame format
2.3 The need for TTEthernet
3 TTEthernet
3.1 TTEthernet spec
3.1.1 Protocol control frames
3.1.2 Time-triggered frames
3.1.3 Rate constrained frames
3.1.4 Best effort frames
3.2 Integration Algorithms
3.2.1 Shuffling
3.2.2 Preemption

PROFINET for Network Geeks

PROFINET for Network Geeks (and those who want to be)

Introduction
PROFINET is an open Industrial Ethernet standard. It is a communication protocol that exchanges data between automation controllers and devices. With over 25 million installed nodes (as of 2018), PROFINET is one of the most widely used Industrial Ethernet standards worldwide. But even though millions of users are familiar with PROFINET, not all users understand how it works. This white paper starts with a brief overview of Ethernet and the 7-layer ISO-OSI model. Then, it describes how PROFINET's 3 communication channels fit in the model: TCP/IP and UDP/IP, Real-Time (RT), and Isochronous Real-Time (IRT).

1 Ethernet
The transition from using 4-20 mA analog signals for I/O communication to digital fieldbuses provided the benefits of reduced wiring, access to network data, and robust diagnostics. The later transition from digital fieldbuses to Ethernet was similarly a shift to a more modern technology. Ethernet incorporated and improved upon the benefits of fieldbuses. Ethernet is ubiquitous and PROFINET uses standard Ethernet. Ethernet gives PROFINET the ability to provide faster updates, more bandwidth, larger messages, an unlimited address space, and even more diagnostic capabilities. Also, as commercial Ethernet evolves, PROFINET can take advantage of these physical layer improvements.

Figure 1: ISO-OSI Model

The ISO-OSI Model
Ethernet-based communications can be represented by a seven-layer model: the ISO/OSI Reference Model. The model generically describes the means and methods used to transmit data. Each layer has a specific name and function, as shown in Figure 1.

Generating Synthetic VoIP Traffic for Analyzing Redundant OpenBSD

UNIVERSITY OF OSLO, Department of Informatics
Generating Synthetic VoIP Traffic for Analyzing Redundant OpenBSD-Firewalls
Master Thesis, Maurice David Woernhard, May 23, 2006

Abstract
Voice over IP (VoIP) is among the fastest growing broadband technologies in the private and commercial sector. Compared to the Plain Old Telephone System (POTS), Internet telephony has reduced availability, measured in uptime guarantees per given time period. This thesis makes a contribution towards proper quantitative statements about network availability when using two redundant, state-synchronized computers acting as firewalls between the Internet (WAN) and the local area network (LAN).

First, methods for generating adequate VoIP traffic volumes for loading a Gigabit Ethernet link are examined, with the goal of using a minimal set of hardware, namely one regular desktop computer. pktgen, the Linux kernel UDP packet generator, was chosen for generating synthetic/artificial traffic, reflecting the common VoIP packet characteristics: packet size, changing sender and receiver address, as well as typical UDP-port usage. pktgen's three main parameters influencing the generation rate are fixed inter-packet delay, packet size and total packet count. It was sought to relate these to the more user-friendly values of number of simultaneous calls, voice codec employed and call duration. The proposed method fails to model VoIP traffic accurately, mostly due to the currently unstable nature of pktgen. However, it is suited for generating enough packets for testing the firewalls.

Second, the traffic forwarding limit and failover behavior of the redundant, state-synchronized firewalls were examined.

Žilinská univerzita v Žiline, Elektrotechnická fakulta, Katedra telekomunikácií

University of Žilina (Žilinská univerzita v Žiline), Faculty of Electrical Engineering, Department of Telecommunications
Theoretical Design and Implementation of a Network Node Based on the 802.1Q, IP and BGP Protocols
Diploma thesis, Pavol Križan, Žilina, 2006
Field of study: Telecommunications
Thesis supervisor: Ing. Peter Zuberec
Qualification level: engineer (Ing.)
Thesis submission date: 19.05.2006

Abstract
This diploma thesis describes the basic operation of Virtual Local Area Networks (VLANs), the protocols used in those networks, and the basics of routing protocols, in particular the Border Gateway Protocol (BGP). It also covers the creation of a model network node that provides routing using BGP, VLAN support, and high redundancy of devices or links.

Annotation record (diploma thesis)
Surname, first name: Križan Pavol
Academic year: 2005/2006
Thesis title: Theoretical Design and Implementation of a Network Node Based on the 802.1Q, IP and BGP Protocols
Number of pages: 50
Number of figures: 23
Number of tables: 0
Number of graphs: 0
Number of appendices: 0
References used: 16

AES67-101: The Basics of AES67

AES67-101: The Basics of AES67 Anthony P. Kuzub IP Audio Product Manager [email protected] www.Ward-Beck.Systems TorontoAES.org Vice-Chair Ward-Beck.Systems - Audio Domains PREAMPS.audio FILTERING.audio PATCHING.audio MUTING.audio DB25.audio VUMETERS.audio PANELS.audio MATRIXING.audio BUSSING.audio XLR.audio SUMMING.audio AMPLIFY.audio CONSOLES.audio GROUNDING.audio The least you SHOULD know about networking: The physical datalink networks transported sessions presented by the application TX RX DATA 7 - Application DATA PATCHING.audio AES67.audio MATRIXING.audio 2110-30.AES67.audio 6 - Presentation BUSSING.audio 5 - Session 4 - Transport ROUTING.audio IGMP.audio 3 - Network SWITCHING.audio CLOCKING.audio 2 - Data Link MULTICASTING.audio 1 - Physical RJ45.audio The Road to Incompatibility… Dante RAVENNA QLAN Livewire Control & Monitoring Proprietary HTTP, Ember+ TCP, HTTP HTTP, Proprietary Proprietary Proprietary Discovery Bonjour Proprietary Connection Proprietary RTSP, SIP, IGMP Proprietary Proprietary, HTTP, IGMP Management Session Description Proprietary SDP Proprietary Channel # Transport Proprietary, IPv4 RTP, IPv4 RTP, IPv4 RTP, IPv4 Quality of Service DiffServ DiffServ DiffServ DiffServ/802.1pq Encoding & Streaming L16-32, ≤4 ch/flow L16-32, ≤64 cha/str 32B-FP, ≤16 ch/str L24, st, surr Synchronization PTP1588-2002 PTP1588-2008 PTP1588-2008 Proprietary Media Clock 44.1kHz, 192kHz 44.1kHz – 384kHz 48kHz 48kHz AES67-2013 Standard for audio applications of networks: High-performance streaming audio-over-IP interoperability References -

Merging Technologies

Merging Technologies More than 25 years of independence www.merging.com For 3D audio - Audio IP - Monitoring Industries that (could) use 3D audio Cinema Gaming Broadcast (TV/Radio – Live) Opera / Theatre / Musicals VOD / streaming (Netflix etc ) Music production (live) Events / Museum / Exhibition Retail / Installations / shopping malls Medical industry Car industry Audio Immersive / 3D audio / OBA (object based audio) My studio needs to be flexible What infrastructure ? What applications ? What formats ? Ressources ? DAW 1 22.2 BINAURAL PROCESS OBA Creative for LIVE AURO 7.1 ATMOS STEREO 7.1.4 LIVE YOU and your RADIO TV CINEMA studio (s) EVENTS DAW 2 10.2 MIXING Panner DAW 1 22.2 BINAURAL PROCESS OBA Creative for LIVE AURO 7.1 ATMOS STEREO 7.1.4 LIVE YOU and your RADIO TV CINEMA studio (s) EVENTS DAW 2 AES 67 10.2 MIXING INTERCONNECTION Panner AES67 – open protocol • ST 2110 • RAVENNA • Dante AES67 • Multiple sample Rate • High track counts • Interoperability RAVENNA / AES67 Core Audio IO vs. ASIO IO Count & Linux RAVENNA CORE AUDIO IO COUNT RAVENNA ASIO IO COUNT • 128 I/O @ 1fs (44.1kHz / 48kHz) • 128 I/O @ 1fs (44.1kHz / 48kHz) • 128 I/O @ 2fs (88.2 kHz / 96kHz) • 64 I/O @ 2fs (88.2 kHz / 96kHz) • 128 I/O @ 4fs (176.4 kHz / 192 kHz) • 32 I/O @ 4fs (176.4 kHz / 192 kHz) • 16 I/O @ 8fs (352.8 kHz / 384 kHz) Note: The number of I/Os can be configured to less if the application does not support these numbers. Compatibility ANEMAN for MAC & Windows • 6x expansion slots for 8 channel Mic/Line AD boards or 8 channel DA boards - 8 channels -

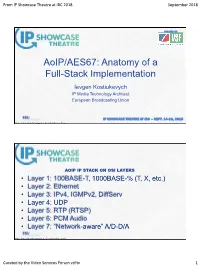

AoIP/AES67: Anatomy of a Full-Stack Implementation Ievgen Kostiukevych IP Media Technology Architect European Broadcasting Union

From IP Showcase Theatre at IBC 2018, September 2018. Curated by the Video Services Forum (vsf.tv).
AoIP/AES67: Anatomy of a Full-Stack Implementation
Ievgen Kostiukevych, IP Media Technology Architect, European Broadcasting Union
IP Showcase Theatre at IBC, Sept. 14-18, 2018
©Ievgen Kostiukevych, [email protected], Special for IP Showcase Theatre

AoIP IP stack on OSI layers
• Layer 1: 100BASE-T, 1000BASE-% (T, X, etc.)
• Layer 2: Ethernet
• Layer 3: IPv4, IGMPv2, DiffServ
• Layer 4: UDP
• Layer 5: RTP (RTSP)
• Layer 6: PCM Audio
• Layer 7: "Network-aware" A/D-D/A

Audio over IP implementation anatomy
• Audio over IP protocols are packet-based
• They use UDP, a connectionless, unreliable protocol
• They require additional protocols:
  • e.g. DiffServ to maintain reliable performance
  • e.g. IEEE 1588 to keep a stable clock and synchronization
  • e.g. IGMP to use the network properly and efficiently

Developments in Audio Networking Protocols By: Mel Lambert

TECHNICAL FOCUS: SOUND
Copyright Lighting&Sound America November 2014 http://www.lightingandsoundamerica.com/LSA.html
Developments in Audio Networking Protocols
By: Mel Lambert

It's an enviable dream: the ability to connect any piece of audio equipment to other system components and seamlessly transfer digital material in real time from one device to another using the long-predicted convergence between AV and IT. And with recent developments in open industry standards and plug-and-play operability available from several well-advanced proprietary systems, that dream is fast becoming a reality. Beyond relaying digital-format signals via conventional AES/EBU two-channel and MADI-format multichannel connections, which require dedicated, wired links, system operators are […]

[…] prominent of these current offerings, with an emphasis on their applicability within live sound environments.

OSI layer-based model for AV networks
To understand how AV networks work, it is worth briefly reviewing the OSI layer-based model, which divides protocols into a number of smaller elements that accomplish a specific sub-task, and interact with one another in specific, carefully defined ways. Layering allows the parts of a protocol to be designed and tested more easily, simplifying each design stage.

[…]ular protocol and the basis for Internet-based systems: IP, the Internet protocol, handles the exchange of data between routers using unique IP addresses that can hence select paths for network traffic; while TCP ensures that the data is transmitted reliably and without errors. Popular Ethernet-based protocols are covered by a series of IEEE 802.3 standards running at a variety of data-transfer speeds and media, including familiar CAT-5/6 copper and fiber-optic cables.

All AV networking involves two primary roles: control, including configur[…]

The Essential Guide to Audio Over IP for Broadcasters

The Essential Guide To Audio Over IP FOR BROADCASTERS
Powerful Performance | Powerful Control | Powerful Savings

1. Why IP for Broadcast Audio?
Reasons to Migrate to Audio over IP
1. Flexibility
2. Cost
3. Scalability
4. Reliability (yes really!)
5. Availability
6. Control and Monitoring
7. Network Consolidation

AES67 Standard and What It Means for the AV Industry

TECH TALK, Systems Integration Asia, August - September 2015
About AES67 Standard And What It Means For The AV Industry
AES67 is not intended to replace existing solutions, but to offer means for interoperability among them

Many of you would have heard about the AES67 standard that was developed by the Audio Engineering Society and published in September 2013. To promote the adoption of AES67, The Media Networking Alliance (MNA) was formed in October 2014. SI Asia speaks to Andreas Hildebrand, Senior Product Manager at ALC NetworX GmbH, the company that is responsible for RAVENNA networking technologies and also a member of MNA, to know more about AES67.

What is AES67 all about? What loophole or space is this meant to fill which was not previously available?
AES67 is a standard published by the Audio Engineering Society on September 11th, 2013, addressing "High-performance Streaming Audio-over-IP Interoperability". It defines a set of guidelines which provide a basis for achieving interoperability between […] guidelines. A prerequisite was not to invent yet another, completely new solution, but to try to identify commonalities among the existing solutions and use available technology standards and protocols already employed. The idea was to allow current solution providers to adopt AES67 with as little effort as possible and provide AES67 interoperability either via a special mode of operation […]

In the overall audio eco-system, how does AES67 and the solutions fit into the picture?
The advantage of having an interoperability standard for various solutions is obvious: while there may be a sound ecosystem of products already available for individual solutions, none of these solutions can fit all application areas.