Recommended publications

Aneuploidy: Using Genetic Instability to Preserve a Haploid Genome?

Health Science Campus. FINAL APPROVAL OF DISSERTATION. Doctor of Philosophy in Biomedical Science (Cancer Biology). Aneuploidy: Using genetic instability to preserve a haploid genome? Submitted by: Ramona Ramdath, in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Biomedical Science. Examination Committee (Signature/Date): Major Advisor: David Allison, M.D., Ph.D. Academic Advisory Committee: James Trempe, Ph.D.; David Giovanucci, Ph.D.; Randall Ruch, Ph.D.; Ronald Mellgren, Ph.D. Senior Associate Dean, College of Graduate Studies: Michael S. Bisesi, Ph.D. Date of Defense: April 10, 2009.

Aneuploidy: Using genetic instability to preserve a haploid genome? Ramona Ramdath, University of Toledo, Health Science Campus, 2009.

Dedication. I dedicate this dissertation to my grandfather who died of lung cancer two years ago, but who always instilled in us the value and importance of education. And to my mom and sister, both of whom have been pillars of support and stimulating conversations. To my sister, Rehanna, especially: I hope this inspires you to achieve all that you want to in life, academically and otherwise.

Acknowledgements. As we go through these academic journeys, there are so many along the way that make an impact not only on our work, but on our lives as well, and I would like to say a heartfelt thank you to all of those people: My Committee members, Dr. James Trempe, Dr. David Giovanucchi, Dr. Ronald Mellgren and Dr. Randall Ruch, for their guidance, suggestions, support and confidence in me. My major advisor, Dr. David Allison, for his constructive criticism and positive reinforcement.

Differential Gene Expression in ADAM10 and Mutant ADAM10 Transgenic Mice

BMC Genomics, BioMed Central. Research article, Open Access. Differential gene expression in ADAM10 and mutant ADAM10 transgenic mice. Claudia Prinzen1, Dietrich Trümbach2, Wolfgang Wurst2, Kristina Endres1, Rolf Postina1 and Falk Fahrenholz*1. Address: 1Johannes Gutenberg-University, Institute of Biochemistry, Mainz, Johann-Joachim-Becherweg 30, 55128 Mainz, Germany and 2Helmholtz Zentrum München – German Research Center for Environmental Health, Institute for Developmental Genetics, Ingolstädter Landstraße 1, 85764 Neuherberg, Germany. * Corresponding author: Falk Fahrenholz. Published: 5 February 2009. Received: 19 June 2008. Accepted: 5 February 2009. BMC Genomics 2009, 10:66 doi:10.1186/1471-2164-10-66. This article is available from: http://www.biomedcentral.com/1471-2164/10/66. © 2009 Prinzen et al; licensee BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract. Background: In a transgenic mouse model of Alzheimer disease (AD), cleavage of the amyloid precursor protein (APP) by the α-secretase ADAM10 prevented amyloid plaque formation and alleviated cognitive deficits. Furthermore, ADAM10 overexpression increased cortical synaptogenesis. These results suggest that upregulation of ADAM10 in the brain has beneficial effects on AD pathology. Results: To assess the influence of ADAM10 on the gene expression profile in the brain, we performed a microarray analysis using RNA isolated from brains of five-month-old mice overexpressing either the α-secretase ADAM10, or a dominant-negative mutant (dn) of this enzyme.

Identification of Genomic Targets of Krüppel-Like Factor 9 in Mouse Hippocampal

Identification of Genomic Targets of Krüppel-like Factor 9 in Mouse Hippocampal Neurons: Evidence for a role in modulating peripheral circadian clocks, by Joseph R. Knoedler. A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy (Neuroscience) in the University of Michigan, 2016. Doctoral Committee: Professor Robert J. Denver, Chair; Professor Daniel Goldman; Professor Diane Robins; Professor Audrey Seasholtz; Associate Professor Bing Ye. © Joseph R. Knoedler, All Rights Reserved, 2016.

To my parents, who never once questioned my decision to become the other kind of doctor, and to Lucy, who has pushed me to be a better person from day one.

Acknowledgements. I have a huge number of people to thank for having made it to this point, so in no particular order:

- I would like to thank my adviser, Dr. Robert J. Denver, for his guidance, encouragement, and patience over the last seven years; his mentorship has been indispensable for my growth as a scientist
- I would also like to thank my committee members, Drs. Audrey Seasholtz, Dan Goldman, Diane Robins and Bing Ye, for their constructive feedback and their willingness to meet in a frequently cold, windowless room across campus from where they work
- I am hugely indebted to Pia Bagamasbad and Yasuhiro Kyono for teaching me almost everything I know about molecular biology and bioinformatics, and to Arasakumar Subramani for his tireless work during the home stretch to my dissertation
- I am grateful for the Neuroscience Program leadership and staff, in particular

Supporting Information

Supporting Information. Friedman et al. 10.1073/pnas.0812446106

SI Results and Discussion

General Phenotype of Dicer-PCKO Mice. Dicer-PCKO mice had many defects in addition to inner ear defects. Many of them died around birth, and although they were born at a similar size to their littermate heterozygote siblings, after a few weeks the surviving mutants were smaller than their heterozygote siblings (see Fig. 1A) and exhibited typical defects, which enabled their identification even before genotyping, including typical alopecia (in particular on the nape of the neck), partially closed eyelids [supporting information (SI) Fig. S1 A and C], eye defects, and weakness of the rear legs that were twisted backwards (data not shown). However, while all of the mutant mice tested exhibited similar deafness and stereocilia malformation in inner ear HCs, other defects were variable in their severity.

… intronic miR genes in these protein-coding genes. Because in many cases the exact borders of the protein-coding genes are unknown, we searched for miR genes up to 10 kb from the hosting-gene ends. Out of the 488 mouse miR genes included in miRBase release 12.0, 192 mouse miR genes were found as located inside (distance 0) or in the vicinity of the protein-coding genes that are expressed in the P2 cochlear and vestibular SE (Table S2). Some coding genes include huge clusters of miRNAs (e.g., Sfmbt2). Other genes listed in Table S2 as coding genes are actually predicted, as their transcript was detected in cells, but the predicted encoded protein has not been identified yet, and some of them may be noncoding RNAs. Only a single protein-coding gene that is differentially expressed in the cochlear and

UC San Diego Electronic Theses and Dissertations

UC San Diego Electronic Theses and Dissertations. Title: Cardiac Stretch-Induced Transcriptomic Changes are Axis-Dependent. Permalink: https://escholarship.org/uc/item/7m04f0b0. Author: Buchholz, Kyle Stephen. Publication Date: 2016. Peer reviewed | Thesis/dissertation. eScholarship.org, powered by the California Digital Library, University of California.

UNIVERSITY OF CALIFORNIA, SAN DIEGO. Cardiac Stretch-Induced Transcriptomic Changes are Axis-Dependent. A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Bioengineering by Kyle Stephen Buchholz. Committee in Charge: Professor Jeffrey Omens, Chair; Professor Andrew McCulloch, Co-Chair; Professor Ju Chen; Professor Karen Christman; Professor Robert Ross; Professor Alexander Zambon. 2016. Copyright Kyle Stephen Buchholz, 2016. All rights reserved.

Signature Page. The Dissertation of Kyle Stephen Buchholz is approved and it is acceptable in quality and form for publication on microfilm and electronically: Co-Chair, Chair. University of California, San Diego, 2016.

Dedication. To my beautiful wife, Rhia.

Table of Contents: Signature Page; Dedication; Table of Contents; List of Figures

Association of Chromosome 19 to Lung Cancer Genotypes and Phenotypes

Cancer Metastasis Rev. DOI 10.1007/s10555-015-9556-2. Association of chromosome 19 to lung cancer genotypes and phenotypes. Xiangdong Wang1 & Yong Zhang1 & Carol L. Nilsson2 & Frode S. Berven3 & Per E. Andrén4 & Elisabet Carlsohn5 & Johan Malm7 & Manuel Fuentes7 & Ákos Végvári6,8 & Charlotte Welinder6,9 & Thomas E. Fehniger6,10 & Melinda Rezeli8 & Goutham Edula11 & Sophia Hober12 & Toshihide Nishimura13 & György Marko-Varga6,8,13. © Springer Science+Business Media New York 2015.

Abstract. The Chromosome 19 Consortium, a part of the Chromosome-Centric Human Proteome Project (C-HPP, http://www.C-HPP.org), is tasked with understanding chromosome 19 functions at the gene and protein levels, as well as their roles in lung oncogenesis. Comparative genomic hybridization (CGH) studies revealed chromosome aberration in lung cancer subtypes, including ADC, SCC, LCC, and SCLC. The most common abnormality is 19p loss and 19q gain. Sixty-four aberrant genes identified in previous genomic studies and their encoded protein functions were further validated in the neXtProt database (http://www.nextprot.org/). Among those, the loss of tumor suppressor genes STK11, MUM1, KISS1R (19p13.3), and BRG1 (19p13.13) is … aberrations include translocation t(15, 19) (q13, p13.1) fusion oncogene BRD4-NUT, DNA repair genes (ERCC1, ERCC2, XRCC1), TGFβ1 pathway activation genes (TGFB1, LTBP4), Dyrk1B, and potential oncogenesis protector genes such as NFkB pathway inhibition genes (NFKBIB, PPP1R13L) and EGLN2. In conclusion, neXtProt is an effective resource for the validation of gene aberrations identified in genomic studies. It promises to enhance our understanding of lung cancer oncogenesis.

Keywords: Proteins, Genes, Antibodies, mRNA, Mass spectrometry

Endurance Training Remodels Sperm-Borne Small RNA Expression and Methylation at Neurological Gene Hotspots

Ingerslev et al. Clinical Epigenetics (2018) 10:12. DOI 10.1186/s13148-018-0446-7. SHORT REPORT, Open Access. Endurance training remodels sperm-borne small RNA expression and methylation at neurological gene hotspots. Lars R. Ingerslev1†, Ida Donkin1†, Odile Fabre1, Soetkin Versteyhe1, Mie Mechta1, Pattarawan Pattamaprapanont1, Brynjulf Mortensen2, Nikolaj Thure Krarup1 and Romain Barrès1,3*

Abstract. Remodeling of the sperm epigenome by lifestyle factors before conception could account for altered metabolism in the next generation offspring. Here, we hypothesized that endurance training changes the epigenome of human spermatozoa. Using small RNA (sRNA) sequencing and reduced representation bisulfite sequencing (RRBS), we investigated sRNA expression and DNA methylation, respectively, in pure fractions of motile spermatozoa collected from young healthy individuals before training, after 6 weeks of endurance training, and after 3 months without exercise. Expression of 8 PIWI-interacting RNAs was changed by exercise training. RRBS analysis revealed 330 differentially methylated regions (DMRs) after training and 303 DMRs after the detraining period, which were, in both conditions, enriched in close vicinity of transcription start sites. Ontology analysis of genes located in proximity of DMRs returned terms related to neurological function at the trained state and, to a much lesser extent, at the detrained state. Our study reveals that short-term endurance training induces marked remodeling of the sperm epigenome, and identifies genes related to the development of the central nervous system as potential hot spots for epigenetic variation upon environmental stress.

Introduction. Gametes contain epigenetic information that plays a fundamental role in embryonic development [1]. … established that environmental factors can remodel the sperm epigenome at the level of post-translational modifi…

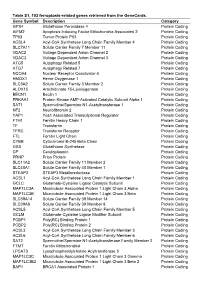

Table S1. 103 Ferroptosis-Related Genes Retrieved from GeneCards

Table S1. 103 ferroptosis-related genes retrieved from GeneCards.

| Gene Symbol | Description | Category |
|---|---|---|
| GPX4 | Glutathione Peroxidase 4 | Protein Coding |
| AIFM2 | Apoptosis Inducing Factor Mitochondria Associated 2 | Protein Coding |
| TP53 | Tumor Protein P53 | Protein Coding |
| ACSL4 | Acyl-CoA Synthetase Long Chain Family Member 4 | Protein Coding |
| SLC7A11 | Solute Carrier Family 7 Member 11 | Protein Coding |
| VDAC2 | Voltage Dependent Anion Channel 2 | Protein Coding |
| VDAC3 | Voltage Dependent Anion Channel 3 | Protein Coding |
| ATG5 | Autophagy Related 5 | Protein Coding |
| ATG7 | Autophagy Related 7 | Protein Coding |
| NCOA4 | Nuclear Receptor Coactivator 4 | Protein Coding |
| HMOX1 | Heme Oxygenase 1 | Protein Coding |
| SLC3A2 | Solute Carrier Family 3 Member 2 | Protein Coding |
| ALOX15 | Arachidonate 15-Lipoxygenase | Protein Coding |
| BECN1 | Beclin 1 | Protein Coding |
| PRKAA1 | Protein Kinase AMP-Activated Catalytic Subunit Alpha 1 | Protein Coding |
| SAT1 | Spermidine/Spermine N1-Acetyltransferase 1 | Protein Coding |
| NF2 | Neurofibromin 2 | Protein Coding |
| YAP1 | Yes1 Associated Transcriptional Regulator | Protein Coding |
| FTH1 | Ferritin Heavy Chain 1 | Protein Coding |
| TF | Transferrin | Protein Coding |
| TFRC | Transferrin Receptor | Protein Coding |
| FTL | Ferritin Light Chain | Protein Coding |
| CYBB | Cytochrome B-245 Beta Chain | Protein Coding |
| GSS | Glutathione Synthetase | Protein Coding |
| CP | Ceruloplasmin | Protein Coding |
| PRNP | Prion Protein | Protein Coding |
| SLC11A2 | Solute Carrier Family 11 Member 2 | Protein Coding |
| SLC40A1 | Solute Carrier Family 40 Member 1 | Protein Coding |
| STEAP3 | STEAP3 Metalloreductase | Protein Coding |
| ACSL1 | Acyl-CoA Synthetase Long Chain Family Member 1 | Protein … |

Genome-Wide RNA-Sequencing Analysis Identifies a Distinct

www.nature.com/scientificreports OPEN. Genome-wide RNA-Sequencing analysis identifies a distinct fibrosis gene signature in the conjunctiva after glaucoma surgery. Received: 16 March 2017. Accepted: 27 June 2017. Published: xx xx xxxx. Cynthia Yu-Wai-Man1,2, Nicholas Owen2, Jonathan Lees3, Aristides D. Tagalakis4, Stephen L. Hart4, Andrew R. Webster1,2, Christine A. Orengo3 & Peng T. Khaw1,2

Fibrosis-related events play a part in most blinding diseases worldwide. However, little is known about the mechanisms driving this complex multifactorial disease. Here we have carried out the first genome-wide RNA-Sequencing study in human conjunctival fibrosis. We isolated 10 primary fibrotic and 7 non-fibrotic conjunctival fibroblast cell lines from patients with and without previous glaucoma surgery, respectively. The patients were matched for ethnicity and age. We identified 246 genes that were differentially expressed by over two-fold and p < 0.05, of which 46 genes were upregulated and 200 genes were downregulated in the fibrotic cell lines compared to the non-fibrotic cell lines. We also carried out detailed gene ontology, KEGG, disease association, pathway commons, WikiPathways and protein network analyses, and identified distinct pathways linked to smooth muscle contraction, inflammatory cytokines, immune mediators, extracellular matrix proteins and oncogene expression. We further validated 11 genes that were highly upregulated or downregulated using real-time quantitative PCR and found a strong correlation between the RNA-Seq and qPCR results. Our study demonstrates that there is a distinct fibrosis gene signature in the conjunctiva after glaucoma surgery and provides new insights into the mechanistic pathways driving the complex fibrotic process in the eye and other tissues.

Association of PPP1R13L and CD3EAP Polymorphisms with Risk and Survival of Non-Small Cell Lung Cancer in Chinese Non-Smoking Females

www.impactjournals.com/oncotarget/ Oncotarget, 2017, Vol. 8, (No. 45), pp: 78749-78756. Research Paper. Association of PPP1R13L and CD3EAP polymorphisms with risk and survival of non-small cell lung cancer in Chinese non-smoking females. Xu Feng1,2, Xue Fang1,3, Lingzi Xia1,3, Yangwu Ren1,3, Xuelian Li1,3, Xiaowei Quan1,3 and Baosen Zhou1,3. 1Department of Epidemiology, School of Public Health, China Medical University, Liaoning, China. 2Department of Health Statistics, School of Public Health, Shenyang Medical College, Liaoning, China. 3Key Laboratory of Cancer Etiology and Prevention (China Medical University), Liaoning Provincial Department of Education, Liaoning, China. Correspondence to: Baosen Zhou. Keywords: PPP1R13L, CD3EAP, single nucleotide polymorphism, non-small cell lung cancer. Received: April 11, 2017. Accepted: July 19, 2017. Published: August 12, 2017. Copyright: Feng et al. This is an open-access article distributed under the terms of the Creative Commons Attribution License 3.0 (CC BY 3.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

ABSTRACT. PPP1R13L and CD3EAP were confirmed to play important roles in transcription and apoptosis. SNPs in PPP1R13L and CD3EAP may be associated with lung cancer risk and survival. This study investigated the association of PPP1R13L rs1005165 and CD3EAP rs967591 with non-small cell lung cancer (NSCLC) risk and survival in Chinese non-smoking females. 442 NSCLC cases and 480 cancer-free controls were included in the case-control study, and 283 cases were included in the cohort study. Genotype was determined by Taqman real-time PCR.

Gene Section Mini Review

Atlas of Genetics and Cytogenetics in Oncology and Haematology. OPEN ACCESS JOURNAL AT INIST-CNRS. Gene Section, Mini Review. PPP1R13L (protein phosphatase 1, regulatory (inhibitor) subunit 13 like). Ulla Vogel, National Research Centre for the Working Environment, Lerso Parkalle 105, DK-2100 Copenhagen O, Denmark. Published in Atlas Database: December 2006. Online updated version: http://AtlasGeneticsOncology.org/Genes/PPP1R13LID42997ch19q13.html DOI: 10.4267/2042/38407. This work is licensed under a Creative Commons Attribution-Non-commercial-No Derivative Works 2.0 France Licence. © 2007 Atlas of Genetics and Cytogenetics in Oncology and Haematology

Identity. Hugo: PPP1R13L. Other names: RAI (relA associated inhibitor); IASPP (Inhibitor of ASPP protein). Location: 19q13.32

DNA/RNA. Description: 26,674 bp, 13 exons. Transcription: 3,076 bps.

Protein. Description: 828 amino acids. Function: PPP1R13L was originally named RAI, an acronym for RelA associated inhibitor.

… increased resistance towards cisplatin and UV-induced apoptosis. This indicates that RAI inhibits apoptosis. Several studies provide evidence that PPP1R13L expression is increased in tumor tissue. In a study of colorectal adenomas and colorectal cancers, PPP1R13L expression was found to be substantially higher in lesions than in the normal tissue from the same patient. PPP1R13L expression has also been found to be increased in breast carcinomas and in blood cells in patients with acute leukemia. In a prospective study of lung cancer among 265 lung cancer cases and 272 controls nested within the population based 'Diet, Cancer and Health study', PPP1R13L expression in mononuclear blood cells (isolated by buffy coat) was not associated with risk of lung cancer. mRNA levels were found to be 41% higher in women than in men.

Mutations. Note: Genetic Epidemiology: The most frequently studied polymorphism in

Atlas Journal

Atlas of Genetics and Cytogenetics in Oncology and Haematology. Atlas Journal. Atlas Journal versus Atlas Database: the accumulation of the issues of the Journal constitutes the body of the Database/Text-Book.

TABLE OF CONTENTS. Volume 11, Number 2, Apr-Jun 2007

Genes

BOK (BCL2-related ovarian killer) (2q37.3). Alexander G Yakovlev. Atlas Genet Cytogenet Oncol Haematol 2007; 11 (2): 119-123. URL: http://AtlasGeneticsOncology.org/Genes/BOKID824ch2q37.html

BIRC6 (Baculoviral IAP repeat-containing 6) (2p22). Christian Pohl, Stefan Jentsch. Atlas Genet Cytogenet Oncol Haematol 2007; 11 (2): 124-129. URL: http://AtlasGeneticsOncology.org/Genes/BIRC6ID798ch2p22.html

AKAP12 (A kinase (PRKA) anchor protein 1) (6q25). Irwin H Gelman. Atlas Genet Cytogenet Oncol Haematol 2007; 11 (2): 130-136. URL: http://AtlasGeneticsOncology.org/Genes/AKAP12ID607ch6q25.html

TRIM 24 (tripartite motif-containing 24) (7q34). Jean Loup Huret. Atlas Genet Cytogenet Oncol Haematol 2007; 11 (2): 137-141. URL: http://AtlasGeneticsOncology.org/Genes/TRIM24ID504ch7q34.html

RUNX 2 (Runt-related transcription factor 2) (6p21). Athanasios G Papavassiliou, Panos Ziros. Atlas Genet Cytogenet Oncol Haematol 2007; 11 (2): 142-147. URL: http://AtlasGeneticsOncology.org/Genes/RUNX2ID42183ch6p21.html

PTPRG (protein tyrosine phosphatase, receptor type, G) (3p14.2). Cornelis P Tensen, Remco van Doorn.