Statistical Reasoning and Conjecturing According to Peirce



Recommended publications

-

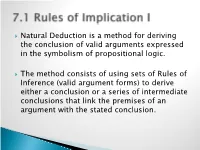

7.1 Rules of Implication I

Natural Deduction is a method for deriving the conclusion of valid arguments expressed in the symbolism of propositional logic. The method consists of using sets of Rules of Inference (valid argument forms) to derive either a conclusion or a series of intermediate conclusions that link the premises of an argument with the stated conclusion.

The First Four Rules of Inference:
◦ Modus Ponens (MP): p ⊃ q, p / q
◦ Modus Tollens (MT): p ⊃ q, ~q / ~p
◦ Pure Hypothetical Syllogism (HS): p ⊃ q, q ⊃ r / p ⊃ r
◦ Disjunctive Syllogism (DS): p v q, ~p / q

Common strategies for constructing a proof involving the first four rules:
◦ Always begin by attempting to find the conclusion in the premises. If the conclusion is not present in its entirety in the premises, look at the main operator of the conclusion. This will provide a clue as to how the conclusion should be derived.
◦ If the conclusion contains a letter that appears in the consequent of a conditional statement in the premises, consider obtaining that letter via modus ponens.
◦ If the conclusion contains a negated letter and that letter appears in the antecedent of a conditional statement in the premises, consider obtaining the negated letter via modus tollens.
◦ If the conclusion is a conditional statement, consider obtaining it via pure hypothetical syllogism.
◦ If the conclusion contains a letter that appears in a disjunctive statement in the premises, consider obtaining that letter via disjunctive syllogism.

Four Additional Rules of Inference:
◦ Constructive Dilemma (CD): (p ⊃ q) • (r ⊃ s), p v r / q v s
◦ Simplification (Simp): p • q / p
◦ Conjunction (Conj): p, q / p • q
◦ Addition (Add): p / p v q

Common strategies involving the additional rules of inference:
◦ If the conclusion contains a letter that appears in a conjunctive statement in the premises, consider obtaining that letter via simplification.
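Each rule of inference named above is a valid argument form, and that can be checked mechanically with a truth table: no assignment of truth values may make every premise true and the conclusion false. The sketch below assumes the usual truth-functional reading of the conditional. The names `implies`, `is_valid`, `RULES`, and `AFFIRMING_THE_CONSEQUENT` are illustrative and do not come from the source.

```python
from itertools import product


def implies(a, b):
    """Material conditional: false only when a is true and b is false."""
    return (not a) or b


def is_valid(n_vars, premises, conclusion):
    """Return True if no truth assignment makes all premises true
    and the conclusion false."""
    for values in product([True, False], repeat=n_vars):
        if all(prem(*values) for prem in premises) and not conclusion(*values):
            return False  # found a counterexample row
    return True


# Each entry: (number of letters, list of premises, conclusion).
RULES = {
    "MP": (2, [lambda p, q: implies(p, q), lambda p, q: p],
           lambda p, q: q),
    "MT": (2, [lambda p, q: implies(p, q), lambda p, q: not q],
           lambda p, q: not p),
    "HS": (3, [lambda p, q, r: implies(p, q), lambda p, q, r: implies(q, r)],
           lambda p, q, r: implies(p, r)),
    "DS": (2, [lambda p, q: p or q, lambda p, q: not p],
           lambda p, q: q),
    "CD": (4, [lambda p, q, r, s: implies(p, q) and implies(r, s),
               lambda p, q, r, s: p or r],
           lambda p, q, r, s: q or s),
    "Simp": (2, [lambda p, q: p and q], lambda p, q: p),
    "Conj": (2, [lambda p, q: p, lambda p, q: q], lambda p, q: p and q),
    "Add": (2, [lambda p, q: p], lambda p, q: p or q),
}

# A well-known invalid form, included for contrast: p ⊃ q, q / p.
AFFIRMING_THE_CONSEQUENT = (
    2, [lambda p, q: implies(p, q), lambda p, q: q], lambda p, q: p
)

for name, rule in RULES.items():
    print(name, "valid:", is_valid(*rule))
print("Affirming the consequent valid:", is_valid(*AFFIRMING_THE_CONSEQUENT))
```

A truth table only confirms that a form is valid. Natural deduction uses the rules to build a step-by-step derivation instead, so the check complements the proof method rather than replacing it.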

Chapter 10: Symbolic Trails and Formal Proofs of Validity, Part 2

Essential Logic, Ronald C. Pine. CHAPTER 10: SYMBOLIC TRAILS AND FORMAL PROOFS OF VALIDITY, PART 2. Introduction: In the previous chapter there were many frustrating signs that something was wrong with our formal proof method that relied on only nine elementary rules of validity. Very simple, intuitive valid arguments could not be shown to be valid. For instance, the following intuitively valid arguments cannot be shown to be valid using only the nine rules. Somalia and Iran are both foreign policy risks. Therefore, Iran is a foreign policy risk. Symbolized: S • I / I. Either Obama or McCain was President of the United States in 2009. McCain was not President in 2010. So, Obama was President of the United States in 2010. Symbolized: (O v C) • ~(O • C), ~C / O. (Footnote: This "or" statement is obviously exclusive, so note the translation.) If the computer networking system works, then Johnson and Kaneshiro will both be connected to the home office. Therefore, if the networking system works, Johnson will be connected to the home office. Symbolized: N ⊃ (J • K) / N ⊃ J. Either the Start II treaty is ratified or this landmark treaty will not be worth the paper it is written on. Therefore, if the Start II treaty is not ratified, this landmark treaty will not be worth the paper it is written on. Symbolized: R v ~W / ~R ⊃ ~W. If the light is on, then the light switch must be on. So, if the light switch is not on, then the light is not on. Symbolized: L ⊃ S / ~S ⊃ ~L. Thus, the nine elementary rules of validity covered in the previous chapter must be only part of a complete system for constructing formal proofs of validity.

Aristotle's Theory of the Assertoric Syllogism

Aristotle’s Theory of the Assertoric Syllogism. Stephen Read. June 19, 2017. Abstract: Although the theory of the assertoric syllogism was Aristotle’s great invention, one which dominated logical theory for the succeeding two millennia, accounts of the syllogism evolved and changed over that time. Indeed, in the twentieth century, doctrines were attributed to Aristotle which lost sight of what Aristotle intended. One of these mistaken doctrines was the very form of the syllogism: that a syllogism consists of three propositions containing three terms arranged in four figures. Yet another was that a syllogism is a conditional proposition deduced from a set of axioms. There is even unclarity about what the basis of syllogistic validity consists in. Returning to Aristotle’s text, and reading it in the light of commentary from late antiquity and the middle ages, we find a coherent and precise theory which shows all these claims to be based on a misunderstanding and misreading. 1 What is a Syllogism? Aristotle’s theory of the assertoric, or categorical, syllogism dominated much of logical theory for the succeeding two millennia. But as logical theory developed, its connection with Aristotle became more tenuous, and doctrines and intentions were attributed to him which are not true to what he actually wrote. Looking at the text of the Analytics, we find a much more coherent theory than some more recent accounts would suggest. Robin Smith (2017, §3.2) rightly observes that the prevailing view of the syllogism in modern logic is of three subject-predicate propositions, two premises and a conclusion, whether or not the conclusion follows from the premises.

An Introduction to Critical Thinking and Symbolic Logic: Volume 1 Formal Logic

An Introduction to Critical Thinking and Symbolic Logic: Volume 1 Formal Logic. Rebeka Ferreira and Anthony Ferrucci. (An Introduction to Critical Thinking and Symbolic Logic: Volume 1 Formal Logic is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.) Preface: This textbook has developed over the last few years of teaching introductory symbolic logic and critical thinking courses. It has been truly a pleasure to have benefited from such great students and colleagues over the years. As we have become increasingly frustrated with the costs of traditional logic textbooks (though many of them deserve high praise for their accuracy and depth), the move to open source has become more and more attractive. We're happy to provide it free of charge for educational use. With that being said, there are always improvements to be made here and we would be most grateful for constructive feedback and criticism. We have chosen to write this text in LaTeX and have adopted certain conventions with symbols. Certainly many important aspects of critical thinking and logic have been omitted here, including historical developments and key logicians, and for that we apologize. Our goal was to create a textbook that could be provided to students free of charge and still contain some of the more important elements of critical thinking and introductory logic. To that end, an additional benefit of providing this textbook as an Open Education Resource (OER) is that we will be able to provide newer updated versions of this text more frequently, and without any concern about increased charges each time.

The Logicist Manifesto: at Long Last Let Logic-Based Artificial Intelligence Become a Field Unto Itself∗

The Logicist Manifesto: At Long Last Let Logic-Based Artificial Intelligence Become a Field Unto Itself. Selmer Bringsjord, Rensselaer AI & Reasoning (RAIR) Lab, Department of Cognitive Science, Department of Computer Science, Rensselaer Polytechnic Institute (RPI), Troy NY 12180 USA, [email protected], version 9.18.08. Contents: 1 Introduction. 2 Background: 2.1 Logic-Based AI Encapsulated (2.1.1 LAI is Ambitious; 2.1.2 LAI is Based on Logical Systems; 2.1.3 LAI is a Top-Down Enterprise); 2.2 Ignoring the "Strong" vs. "Weak" Distinction; 2.3 A Slice in the Day of a Life of a LAI Agent (2.3.1 Knowledge Representation in Elementary Logic; 2.3.2 Deductive Reasoning; 2.3.3 A Note on Nonmonotonic Reasoning; 2.3.4 Beyond Elementary Logical Systems); 2.4 Examples of Logic-Based Cognitive Systems. 3 Factors Supporting Logicist AI as an Independent Field: 3.1 History Supports the Divorce; 3.2 The Advent of the Web; 3.3 The Remarkable Effectiveness of Logic; 3.4 Logic Top to Bottom Now Possible; 3.5 Learning and Denial; 3.6 Logic is an Antidote to "Cheating" in AI; 3.7 Logic Our Only Hope Against the Dark AI Future. 4 Objections; Rebuttals: 4.1 "But you are trapped in a fundamental dilemma: your position is either redundant, or false."; 4.2 "But you're neglecting probabilistic AI."; 4.3 "But we now know that the mind, contra logicists, is continuous, and hence dynamical, not logical, systems are superior."

Transpositions Familiar to Artists, This Book Shows How Moves Can Be Made Between Established Positions and Completely New Ground

Research leads to new insights rupturing the existent fabric of knowledge. Situated in the still evolving field of artistic research, this book investigates a fundamental quality of this process. Building on the lessons of deconstruction, artistic research invents new modes of epistemic relationships that include aesthetic dimensions. Under the heading transposition, seventeen artists, musicians, and theorists explain how one thing may turn into another in a spatio-temporal play of identity and difference that has the power to expand into the unknown. By connecting materially concrete positions in a way familiar to artists, this book shows how moves can be made between established positions and completely new ground. In doing so, research changes from a process that expands knowledge to one that creatively reinvents it. Michael Schwab is the founding editor-in-chief of the Journal for Artistic Research (JAR). He is senior researcher of MusicExperiment21 (Orpheus Institute, Ghent) and joint project leader of Transpositions: Artistic Data Exploration (University of Music and Performing Arts Graz; University of Applied Arts Vienna). Transpositions: Aesthetico-Epistemic Operators in Artistic Research. Edited by Michael Schwab. Orpheus Institute Series. ISBN 9789462701410.

MGF1121 Course Outline

MGF1121 Course Outline. Introduction to Logic (3) (P). Description: This course is a study of both the formal and informal nature of human thought. It includes an examination of informal fallacies, sentential symbolic logic and deductive proofs, categorical propositions, syllogistic arguments and sorites. General Education Learning Outcome: The primary General Education Learning Outcome (GELO) for this course is Quantitative Reasoning, which is to understand and apply mathematical concepts and reasoning, and analyze and interpret various types of data. The GELO will be assessed through targeted questions on either the comprehensive final or an outside assignment. Prerequisite: MFG1100, MAT1033, or MAT1034 with a grade of “C” or better, OR the equivalent. Rationale: In order to function effectively and productively in an increasingly complex democratic society, students must be able to think for themselves to make the best possible decisions in the many and varied situations that will confront them. Knowledge of the basic concepts of logical reasoning, as offered in this course, will give students a firm foundation on which to base their decision-making processes. Students wishing to major in computer science, philosophy, mathematics, engineering and most natural sciences are required to have a working knowledge of symbolic logic and its applications. Impact Assessment: Introduction to Logic provides students with critical insight

Form and Content: an Introduction to Formal Logic

Connecticut College, Digital Commons @ Connecticut College. Open Educational Resources, 2020. Form and Content: An Introduction to Formal Logic. Derek D. Turner, Connecticut College, [email protected] Follow this and additional works at: https://digitalcommons.conncoll.edu/oer Recommended Citation: Turner, Derek D., "Form and Content: An Introduction to Formal Logic" (2020). Open Educational Resources. 1. https://digitalcommons.conncoll.edu/oer/1 This Book is brought to you for free and open access by Digital Commons @ Connecticut College. It has been accepted for inclusion in Open Educational Resources by an authorized administrator of Digital Commons @ Connecticut College. For more information, please contact [email protected]. The views expressed in this paper are solely those of the author. Form and Content: An Introduction to Formal Logic. Derek Turner, Connecticut College, 2020. Susanne K. Langer. This bust is in Shain Library at Connecticut College, New London, CT. Photo by the author. ©2020 Derek D. Turner. This work is published in 2020 under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. You may share this text in any format or medium. You may not use it for commercial purposes. If you share it, you must give appropriate credit. If you remix, transform, add to, or modify the text in any way, you may not then redistribute the modified text. A Note to Students: For many years, I had relied on one of the standard popular logic textbooks for my introductory formal logic course at Connecticut College. It is a perfectly good book, used in countless logic classes across the country.

1. Introduction Recall That a Topological Space X Is Extremally Disconnected If the Closure of Every Open Set Is Open

Pré-Publicações do Departamento de Matemática, Universidade de Coimbra, Preprint Number 20-03. ON INFINITE VARIANTS OF DE MORGAN LAW IN LOCALE THEORY. IGOR ARRIETA. Abstract: A locale, being a complete Heyting algebra, satisfies De Morgan law $(a \vee b)^* = a^* \wedge b^*$ for pseudocomplements. The dual De Morgan law $(a \wedge b)^* = a^* \vee b^*$ (here referred to as the second De Morgan law) is equivalent to, among other conditions, $(a \vee b)^{**} = a^{**} \vee b^{**}$, and characterizes the class of extremally disconnected locales. This paper presents a study of the subclasses of extremally disconnected locales determined by the infinite versions of the second De Morgan law and its equivalents. Math. Subject Classification (2020): 18F70 (primary), 06D22, 54G05, 06D30 (secondary). Received January 24, 2020. 1. Introduction. Recall that a topological space $X$ is extremally disconnected if the closure of every open set is open. The point-free counterpart of this notion is that of an extremally disconnected locale, that is, of a locale $L$ satisfying $(a \wedge b)^* = a^* \vee b^*$ for every $a, b \in L$ (ED) or, equivalently, $(a \vee b)^{**} = a^{**} \vee b^{**}$ for every $a, b \in L$ (ED′). Extremal disconnectedness is a well-established topic both in classical and point-free topology (see, for example, Section 3.5 and the following ones in Johnstone's monograph [18]), and it admits various characterizations (cf. Proposition 2.4 below, or Proposition 2.3 in [12]). The goal of the present paper is to study infinite versions of conditions (ED) and (ED′) (cf. Proposition 2.4 (i) and (iii)). We show that, when considered in the infinite case,
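The contrast between the two De Morgan laws in the abstract can be seen in a small finite case. The sketch below is an illustration, not taken from the preprint: it assumes a hypothetical three-point space whose open sets form the lattice, computes the pseudocomplement of an open set as the largest open set disjoint from it, and then tests both laws on every pair. The names `OPENS`, `pseudocomplement`, `first_law_holds`, and `second_law_failures` are made up for this example.

```python
from itertools import product

# Hypothetical example: the open sets of a topology on the points a, b, c.
# Joins are unions and meets are intersections of open sets.
OPENS = [frozenset(s) for s in ("", "a", "b", "ab", "abc")]


def pseudocomplement(u):
    """Largest open set disjoint from u (the union of all such open sets)."""
    result = frozenset()
    for v in OPENS:
        if not (u & v):
            result |= v
    return result


def first_law_holds():
    """Check (a v b)* == a* ^ b* for every pair of open sets."""
    return all(
        pseudocomplement(a | b) == pseudocomplement(a) & pseudocomplement(b)
        for a, b in product(OPENS, repeat=2)
    )


def second_law_failures():
    """Collect the pairs for which (a ^ b)* != a* v b*."""
    return [
        (a, b)
        for a, b in product(OPENS, repeat=2)
        if pseudocomplement(a & b) != pseudocomplement(a) | pseudocomplement(b)
    ]


print("First De Morgan law holds:", first_law_holds())
for a, b in second_law_failures():
    print("Second law fails for", sorted(a), "and", sorted(b))
```

In this space the second law fails for the open sets {a} and {b}: their meet is empty, so its pseudocomplement is the whole space, while the join of their pseudocomplements is only {a, b}. This matches the classical description, since the closure of {a} is {a, c}, which is not open.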

Homework 8 Due Wednesday, 8 April

CIS 194: Homework 8. Due Wednesday, 8 April.

Propositional Logic. In this section, you will prove some theorems in Propositional Logic, using the Haskell compiler to verify your proofs. The Curry-Howard isomorphism states that types are equivalent to theorems and programs are equivalent to proofs. There is no need to run your code or even write test cases; once your code type checks, the proof will be accepted!

Implication. We will begin by defining some logical connectives. The first logical connective is implication. Luckily, implication is already built in to the Haskell type system in the form of arrow types. The type p -> q is equivalent to the logical statement P → Q. We can now prove a very simple theorem called Modus Ponens. Modus Ponens states that if P implies Q and P is true, then Q is true. It is often written as: P → Q, P, therefore Q (Figure 1: Modus Ponens). We can write this down in Haskell as well:

    modus_ponens :: (p -> q) -> p -> q
    modus_ponens pq p = pq p

Notice that the type of modus_ponens is identical to the Prelude function ($). We can therefore simplify the proof (footnote 1: This is a very interesting observation. The logical theorem Modus Ponens is exactly equivalent to function application!):

    modus_ponens :: (p -> q) -> p -> q
    modus_ponens = ($)

Disjunction. The disjunction (also known as “or”) of the propositions P and Q is often written as P ∨ Q. In Haskell, we will define disjunction as follows:

    -- Logical Disjunction
    data p \/ q = Left p | Right q

The disjunction has two constructors, Left and Right.

Aristotelian Syllogisms

“Up to University” EU funded Project (Up2U) – “University as a Hub” sub-project (U-Hub). Learning Path: "Education towards Critical Thinking" (ECT). Texts choice and remixing: Giovanni Toffoli and Stefano Lariccia – Sapienza Università di Roma, DigiLab and LINK srl. Content revision and English translation: Ingrid Barth – Tel Aviv University (TAU), Division of Foreign Languages. Most texts are inspired by: “Educazione al pensiero critico” (Editoriale Scientifica) by Francesco Piro – Università degli studi di Salerno, Dipartimento di Scienze Umane, Filosofiche e della Formazione. Some feedback has been provided by Vindice Deplano, Pragma srl, who has taken care of the transposition of the path into interactive learning units. Most translations between English and Italian have been drafted with GoogleTranslate and www.DeepL.com/Translator. UNIT 4.2 – Aristotelian syllogisms. From propositional calculus to predicate calculus: We already noticed that the propositional calculus does not consider how an elementary statement is built internally. It does not enter into the merits of what the statement says, nor does it take into consideration what connection exists between the elements of discourse and the objects outside the discourse to which they refer. We could also say that the propositional calculus is not interested in the meaning of statements, but only in the form of complex statements. If we tried to express the reasoning "All men are mortal, Socrates is a man, so Socrates is mortal" using only the connectives of propositional logic, we would have to represent it as follows: p, q ├ r. This formula is obviously not correct, because in general no arbitrary third statement can be derived from two statements. We also note that this formula does not reflect the fact that the word Socrates occurs in two of the statements, as does the word man (if we disregard the singular-plural inflection).
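For comparison, the same reasoning is usually rendered in predicate calculus along the following lines. This is a standard textbook formalization offered as a sketch, not the unit's own notation; the predicate names Man and Mortal and the constant s (standing for Socrates) are assumptions chosen for illustration.

```latex
\[
\forall x\,\bigl(\mathrm{Man}(x) \rightarrow \mathrm{Mortal}(x)\bigr),\quad
\mathrm{Man}(s) \;\vdash\; \mathrm{Mortal}(s)
\]
```

Unlike p, q ├ r, this form records that the same name and the same predicates recur across the statements, and that shared structure is what licenses the conclusion.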

Modal Propositional Logic

6 Modal Propositional Logic 1. INTRODUCTION In this book we undertook — among other things — to show how metaphysical talk of possible worlds and propositions can be used to make sense of the science of deductive logic. In chapter 1, we used that talk to explicate such fundamental logical concepts as those of contingency, noncontingency, implication, consistency, inconsistency, and the like. Next, in chapter 2, while resisting the attempt to identify propositions with sets of possible worlds, we suggested that identity-conditions for the constituents of propositions (i.e., for concepts), can be explicated by making reference to possible worlds and showed, further, how appeal to possible worlds can help us both to disambiguate proposition-expressing sentences and to refute certain false theories. In chapter 3 we argued the importance of distinguishing between, on the one hand, the modal concepts of the contingent and the noncontingent (explicable in terms of possible worlds) and, on the other hand, the epistemic concepts of the empirical and the a priori (not explicable in terms of possible worlds). In chapter 4 we showed how the fundamental methods of logic — analysis and valid inference — are explicable in terms of possible worlds and argued for the centrality within logic as a whole of modal logic in general and S5 in particular. Then, in chapter 5, we tried to make good our claim that modal concepts are needed in order to make sound philosophical sense even of that kind of propositional logic — truth-functional logic — from which they seem conspicuously absent. Now in this, our last chapter, we concentrate our attention on the kind of propositional logic — modal propositional logic — within which modal concepts feature overtly.