Form and Content: an Introduction to Formal Logic

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Gibbardian Collapse and Trivalent Conditionals

Gibbardian Collapse and Trivalent Conditionals Paul Égré* Lorenzo Rossi† Jan Sprenger‡ arXiv:2006.08746v1 [math.LO] 15 Jun 2020 Abstract This paper discusses the scope and significance of the so-called triviality result stated by Allan Gibbard for indicative conditionals, showing that if a conditional operator satisfies the Law of Import-Export, is supraclassical, and is stronger than the material conditional, then it must collapse to the material conditional. Gibbard’s result is taken to pose a dilemma for a truth-functional account of indicative conditionals: give up Import-Export, or embrace the two-valued analysis. We show that this dilemma can be averted in trivalent logics of the conditional based on Reichenbach and de Finetti’s idea that a conditional with a false antecedent is undefined. Import-Export and truth-functionality hold without triviality in such logics. We unravel some implicit assumptions in Gibbard’s proof, and discuss a recent generalization of Gibbard’s result due to Branden Fitelson. Keywords: indicative conditional; material conditional; logics of conditionals; trivalent logic; Gibbardian collapse; Import-Export 1 Introduction The Law of Import-Export denotes the principle that a right-nested conditional of the form A → (B → C) is logically equivalent to the simple conditional (A ∧ B) → C where both antecedents are united by conjunction. The Law holds in classical logic for material implication, and if there is a logic for the indicative conditional of ordinary language, it appears Import-Export ought to be a part of it. For instance, to use an example from (Cooper 1968, 300), the sentences “If Smith attends and Jones attends then a quorum *Institut Jean-Nicod (CNRS/ENS/EHESS), Département de philosophie & Département d’études cognitives, Ecole normale supérieure, PSL University, 29 rue d’Ulm, 75005, Paris, France. -
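The claim in this excerpt that Import-Export holds in classical logic for material implication can be checked by brute force over the eight two-valued assignments. The sketch below is only an illustration of that classical fact; the function names `implies` and `import_export_holds` are hypothetical and do not come from the paper, and nothing here models the trivalent logics the authors discuss.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Material conditional: false only when p is true and q is false."""
    return (not p) or q


def import_export_holds() -> bool:
    """Compare A -> (B -> C) with (A and B) -> C on every classical valuation."""
    return all(
        implies(a, implies(b, c)) == implies(a and b, c)
        for a, b, c in product([False, True], repeat=3)
    )


print(import_export_holds())
```

The two formulas agree on all valuations, which is what logical equivalence means for the two-valued material conditional.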

Chrysippus's Dog As a Case Study in Non-Linguistic Cognition

Chrysippus’s Dog as a Case Study in Non-Linguistic Cognition Michael Rescorla Abstract: I critique an ancient argument for the possibility of non-linguistic deductive inference. The argument, attributed to Chrysippus, describes a dog whose behavior supposedly reflects disjunctive syllogistic reasoning. Drawing on contemporary robotics, I urge that we can equally well explain the dog’s behavior by citing probabilistic reasoning over cognitive maps. I then critique various experimentally-based arguments from scientific psychology that echo Chrysippus’s anecdotal presentation. §1. Language and thought Do non-linguistic creatures think? Debate over this question tends to calcify into two extreme doctrines. The first, espoused by Descartes, regards language as necessary for cognition. Modern proponents include Brandom (1994, pp. 145-157), Davidson (1984, pp. 155-170), McDowell (1996), and Sellars (1963, pp. 177-189). Cartesians may grant that ascribing cognitive activity to non-linguistic creatures is instrumentally useful, but they regard such ascriptions as strictly speaking false. The second extreme doctrine, espoused by Gassendi, Hume, and Locke, maintains that linguistic and non-linguistic cognition are fundamentally the same. Modern proponents include Fodor (2003), Peacocke (1997), Stalnaker (1984), and many others. Proponents may grant that non-linguistic creatures entertain a narrower range of thoughts than us, but they deny any principled difference in kind. An intermediate position holds that non-linguistic creatures display cognitive activity of a fundamentally different kind than human thought. Hobbes and Leibniz favored this intermediate position. Modern advocates include Bermudez (2003), Carruthers (2002, 2004), Dummett (1993, pp. 147-149), Malcolm (1972), and Putnam (1992, pp. 28-30). -

Frontiers of Conditional Logic

City University of New York (CUNY) CUNY Academic Works All Dissertations, Theses, and Capstone Projects Dissertations, Theses, and Capstone Projects 2-2019 Frontiers of Conditional Logic Yale Weiss The Graduate Center, City University of New York How does access to this work benefit you? Let us know! More information about this work at: https://academicworks.cuny.edu/gc_etds/2964 Discover additional works at: https://academicworks.cuny.edu This work is made publicly available by the City University of New York (CUNY). Contact: [email protected] Frontiers of Conditional Logic by Yale Weiss A dissertation submitted to the Graduate Faculty in Philosophy in partial fulfillment of the requirements for the degree of Doctor of Philosophy, The City University of New York 2019 © 2018 Yale Weiss All Rights Reserved This manuscript has been read and accepted by the Graduate Faculty in Philosophy in satisfaction of the dissertation requirement for the degree of Doctor of Philosophy. Professor Gary Ostertag Date Chair of Examining Committee Professor Nickolas Pappas Date Executive Officer Professor Graham Priest Professor Melvin Fitting Professor Edwin Mares Professor Gary Ostertag Supervisory Committee The City University of New York Abstract Frontiers of Conditional Logic by Yale Weiss Adviser: Professor Graham Priest Conditional logics were originally developed for the purpose of modeling intuitively correct modes of reasoning involving conditional (especially counterfactual) expressions in natural language. While the debate over the logic of conditionals is as old as propositional logic, it was the development of worlds semantics for modal logic in the past century that catalyzed the rapid maturation of the field. -

Chapter 5: Methods of Proof for Boolean Logic

Chapter 5: Methods of Proof for Boolean Logic § 5.1 Valid inference steps Conjunction elimination Sometimes called simplification. From a conjunction, infer any of the conjuncts. • From P ∧ Q, infer P (or infer Q). Conjunction introduction Sometimes called conjunction. From a pair of sentences, infer their conjunction. • From P and Q, infer P ∧ Q. § 5.2 Proof by cases This is another valid inference step (it will form the rule of disjunction elimination in our formal deductive system and in Fitch), but it is also a powerful proof strategy. In a proof by cases, one begins with a disjunction (as a premise, or as an intermediate conclusion already proved). One then shows that a certain consequence may be deduced from each of the disjuncts taken separately. One concludes that that same sentence is a consequence of the entire disjunction. • From P ∨ Q, and from the fact that S follows from P and S also follows from Q, infer S. The general proof strategy looks like this: if you have a disjunction, then you know that at least one of the disjuncts is true—you just don’t know which one. So you consider the individual “cases” (i.e., disjuncts), one at a time. You assume the first disjunct, and then derive your conclusion from it. You repeat this process for each disjunct. So it doesn’t matter which disjunct is true—you get the same conclusion in any case. Hence you may infer that it follows from the entire disjunction. In practice, this method of proof requires the use of “subproofs”—we will take these up in the next chapter when we look at formal proofs. -

Critical Thinking STARS Handout Finalx

Critical Thinking "It is the mark of an educated mind to be able to entertain a thought without accepting it." - Aristotle "The important thing is not to stop questioning." - Albert Einstein What is critical thinking? Critical thinking is the intellectually disciplined process of actively and skillfully conceptualizing, applying, analyzing, synthesizing, and/or evaluating information gathered from, or generated by, observation, experience, reflection, reasoning, or communication, as a guide to belief and action. In its exemplary form, it is based on universal intellectual values that transcend subject matter divisions: clarity, accuracy, precision, consistency, relevance, sound evidence, good reasons, depth, breadth, and fairness. A Critical Thinker: Asks pertinent questions; Assesses statements and arguments; Is able to admit a lack of understanding or information; Has a sense of curiosity; Is interested in finding new solutions; Examines beliefs, assumptions and opinions and weighs them against facts; Listens carefully to others and can give effective feedback; Suspends judgment until all facts have been gathered and considered; Looks for evidence to support assumptions or beliefs; Is able to adjust beliefs when new information is found; Examines problems closely; Is able to reject information that is irrelevant or incorrect. Critical Thinking Standards and Questions: The most significant thinking (intellectual) standards/questions: • Clarity o Could you elaborate further on that point? o Could you give me an example? o Could you express -

Glossary for Logic: the Language of Truth

Glossary for Logic: The Language of Truth This glossary contains explanations of key terms used in the course. (These terms appear in bold in the main text at the point at which they are first used.) To make this glossary more easily searchable, each entry heading has ‘::’ (two colons) before it. So, for example, if you want to find the entry for ‘truth-value’ you should search for ‘:: truth-value’. :: Ambiguous, Ambiguity : An expression or sentence is ambiguous if and only if it can express two or more different meanings. In logic, we are interested in ambiguity relating to truth-conditions. Some sentences in natural languages express more than one claim. Read one way, they express a claim which has one set of truth-conditions. Read another way, they express a different claim with different truth-conditions. :: Antecedent : The first clause in a conditional is its antecedent. In ‘(P ➝ Q)’, ‘P’ is the antecedent. In ‘If it is raining, then we’ll get wet’, ‘It is raining’ is the antecedent. (See ‘Conditional’ and ‘Consequent’.) :: Argument : An argument is a set of claims (equivalently, statements or propositions) made up from premises and conclusion. An argument can have any number of premises (from 0 to indefinitely many) but has only one conclusion. (Note: This is a somewhat artificially restrictive definition of ‘argument’, but it will help to keep our discussions sharp and clear.) We can consider any set of claims (with one claim picked out as conclusion) as an argument: arguments will include sets of claims that no-one has actually advanced or put forward. -

On Basic Probability Logic Inequalities †

mathematics Article On Basic Probability Logic Inequalities † Marija Boričić Joksimović Faculty of Organizational Sciences, University of Belgrade, Jove Ilića 154, 11000 Belgrade, Serbia; [email protected] † The conclusions given in this paper were partially presented at the European Summer Meetings of the Association for Symbolic Logic, Logic Colloquium 2012, held in Manchester on 12–18 July 2012. Citation: Boričić Joksimović, M. On Basic Probability Logic Inequalities. Mathematics 2021, 9, 1409. Abstract: We give some simple examples of applying some of the well-known elementary probability theory inequalities and properties in the field of logical argumentation. A probabilistic version of the hypothetical syllogism inference rule is as follows: if propositions A, B, C, A → B, and B → C have probabilities a, b, c, r, and s, respectively, then for probability p of A → C, we have f(a, b, c, r, s) ≤ p ≤ g(a, b, c, r, s), for some functions f and g of given parameters. In this paper, after a short overview of known rules related to conjunction and disjunction, we proposed some probabilized forms of the hypothetical syllogism inference rule, with the best possible bounds for the probability of conclusion, covering simultaneously the probabilistic versions of both modus ponens and modus tollens rules, as already considered by Suppes, Hailperin, and Wagner. Keywords: inequality; probability logic; inference rule MSC: 03B48; 03B05; 60E15; 26D20; 60A05 1. Introduction The main part of probabilization of logical inference rules is defining the corresponding best possible bounds for probabilities of propositions. Some of them, connected with conjunction and disjunction, can be obtained immediately from the well-known Boole’s -

Critical Thinking for the Military Professional

Document created: 17 Jun 04 Critical Thinking For The Military Professional Col W. Michael Guillot “Any complex activity, if it is to be carried on with any degree of virtuosity, calls for appropriate gifts of intellect and temperament …Genius consists in a harmonious combination of elements, in which one or the other ability may predominate, but none may be in conflict with the rest.”1 In a previous article on strategic leadership I described the strategic environment as volatile, uncertain, complex, and ambiguous (VUCA). Additionally, that writing introduced the concept of strategic competency.2 This article will discuss the most important essential skill for strategic leaders: critical thinking. It is hard to imagine a strategic leader today who does not think critically or at least use the concept in making decisions. Critical thinking helps the strategic leader master the challenges of the strategic environment. It helps one understand how to bring stability to a volatile world. Critical thinking leads to more certainty and confidence in an uncertain future. This skill helps simplify complex scenarios and brings clarity to the ambiguous lens. Critical thinking is the kind of mental attitude required for success in the strategic environment. In essence, critical thinking is about learning how to think and how to judge and improve the quality of thinking, both yours and others’. Lest you feel you are already a great critical thinker, consider this: in a recent study supported by the Kellogg Foundation, only four percent of the U.S. organizational -

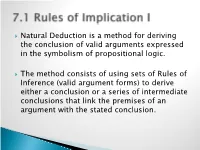

7.1 Rules of Implication I

Natural Deduction is a method for deriving the conclusion of valid arguments expressed in the symbolism of propositional logic. The method consists of using sets of Rules of Inference (valid argument forms) to derive either a conclusion or a series of intermediate conclusions that link the premises of an argument with the stated conclusion.

The First Four Rules of Inference:
◦ Modus Ponens (MP): p ⊃ q, p ∴ q
◦ Modus Tollens (MT): p ⊃ q, ~q ∴ ~p
◦ Pure Hypothetical Syllogism (HS): p ⊃ q, q ⊃ r ∴ p ⊃ r
◦ Disjunctive Syllogism (DS): p v q, ~p ∴ q

Common strategies for constructing a proof involving the first four rules:
◦ Always begin by attempting to find the conclusion in the premises. If the conclusion is not present in its entirety in the premises, look at the main operator of the conclusion. This will provide a clue as to how the conclusion should be derived.
◦ If the conclusion contains a letter that appears in the consequent of a conditional statement in the premises, consider obtaining that letter via modus ponens.
◦ If the conclusion contains a negated letter and that letter appears in the antecedent of a conditional statement in the premises, consider obtaining the negated letter via modus tollens.
◦ If the conclusion is a conditional statement, consider obtaining it via pure hypothetical syllogism.
◦ If the conclusion contains a letter that appears in a disjunctive statement in the premises, consider obtaining that letter via disjunctive syllogism.

Four Additional Rules of Inference:
◦ Constructive Dilemma (CD): (p ⊃ q) • (r ⊃ s), p v r ∴ q v s
◦ Simplification (Simp): p • q ∴ p
◦ Conjunction (Conj): p, q ∴ p • q
◦ Addition (Add): p ∴ p v q

Common Misapplications

Common strategies involving the additional rules of inference:
◦ If the conclusion contains a letter that appears in a conjunctive statement in the premises, consider obtaining that letter via simplification. -
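Because these rules of inference are described as valid argument forms, each one can be confirmed by searching the truth table for a row with all premises true and the conclusion false. The following is a minimal sketch under that reading; `is_valid`, `RULES`, and the encoding of each rule as Python lambdas are illustrative assumptions, not part of the excerpted text.

```python
from itertools import product


def implies(p, q):
    """Material conditional (the horseshoe)."""
    return (not p) or q


def is_valid(premises, conclusion, n_vars):
    """Valid means: no valuation makes every premise true and the conclusion false."""
    for vals in product([False, True], repeat=n_vars):
        if all(prem(*vals) for prem in premises) and not conclusion(*vals):
            return False
    return True


# Each entry: (list of premises, conclusion, number of statement letters).
RULES = {
    "MP": ([lambda p, q: implies(p, q), lambda p, q: p], lambda p, q: q, 2),
    "MT": ([lambda p, q: implies(p, q), lambda p, q: not q], lambda p, q: not p, 2),
    "HS": ([lambda p, q, r: implies(p, q), lambda p, q, r: implies(q, r)],
           lambda p, q, r: implies(p, r), 3),
    "DS": ([lambda p, q: p or q, lambda p, q: not p], lambda p, q: q, 2),
    "CD": ([lambda p, q, r, s: implies(p, q) and implies(r, s),
            lambda p, q, r, s: p or r], lambda p, q, r, s: q or s, 4),
    "Simp": ([lambda p, q: p and q], lambda p, q: p, 2),
    "Conj": ([lambda p, q: p, lambda p, q: q], lambda p, q: p and q, 2),
    "Add": ([lambda p, q: p], lambda p, q: p or q, 2),
}

# A classic invalid form (affirming the consequent), included for contrast.
AFFIRMING_CONSEQUENT = ([lambda p, q: implies(p, q), lambda p, q: q],
                        lambda p, q: p, 2)

for name, (premises, conclusion, n) in RULES.items():
    print(name, is_valid(premises, conclusion, n))
print("affirming the consequent", is_valid(*AFFIRMING_CONSEQUENT))
```

The contrast case shows why the checker is informative: it accepts the eight rules but rejects an argument form that merely resembles modus ponens.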

Application: Digital Logic Circuits

SECTION 2.4 Application: Digital Logic Circuits Copyright © Cengage Learning. All rights reserved. Switches “in series” Switches “in parallel” What changes when closed and on are replaced by T, and open and off are replaced by F? • More complicated circuits correspond to more complicated logical expressions. • This correspondence has been used extensively in the design and study of circuits. • Electrical engineers use the language of logic when they refer to the values of signals produced by an electronic switch as being “true” or “false.” • The only difference is that the symbols 1 and 0 are used. • The symbols 0 and 1 are called bits, short for binary digits. • This terminology was introduced in 1946 by the statistician John Tukey. Black Boxes and Gates • Circuits transform combinations of signal bits (1’s and 0’s) into other combinations of signal bits (1’s and 0’s). • Computer engineers and digital system designers treat basic circuits as black boxes. • They ignore the inside of a black box (the detailed implementation of the circuit) • and focus on the relation between the input and the output signals. • The operation of a black box is completely specified by constructing an input/output table that lists all its possible input signals together with their corresponding output signals. One possible correspondence of input to output signals is as follows: An Input/Output Table An efficient method for designing more complicated circuits is to build them by connecting less complicated black box circuits. Gates can be combined into circuits in a variety of ways. If the rules shown on the next page are obeyed, the result is a combinational circuit, one whose output at any time is determined entirely by its input at that time without regard to previous inputs. -
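The idea that a black box is fully specified by its input/output table, and that gates compose into combinational circuits, can be sketched in a few lines. The gate functions, the helper `io_table`, and the three-input `circuit` below are hypothetical examples chosen for illustration; the slides' own circuit and table are not reproduced in this excerpt.

```python
from itertools import product


def AND(p, q):
    """Output 1 only if both inputs are 1 (switches in series)."""
    return p & q


def OR(p, q):
    """Output 1 if at least one input is 1 (switches in parallel)."""
    return p | q


def NOT(p):
    """Invert a single bit."""
    return 1 - p


def circuit(p, q, r):
    """A made-up combinational circuit: (P AND Q) OR (NOT R)."""
    return OR(AND(p, q), NOT(r))


def io_table(box, n_inputs):
    """List every input combination with the output the black box produces."""
    return [(bits, box(*bits)) for bits in product([0, 1], repeat=n_inputs)]


for bits, out in io_table(circuit, 3):
    print(bits, "->", out)
```

Since `circuit` keeps no state between calls, its output depends only on the current inputs, which is the defining property of a combinational circuit.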

Shalack V.I. Semiotic Foundations of Logic

Semiotic foundations of logic Vladimir I. Shalack abstract. The article offers a look at combinatory logic as the logic of sign operations in the most general sense. To this end, it is proposed to reformulate it slightly in terms of introducing and replacing definitions. Keywords: combinatory logic, semiotics, definition, logic foundations 1 Language selection Let’s imagine for a moment what classical logic would be like if we had studied it not in the language of negation, conjunction, disjunction and implication, but in the language of the Sheffer stroke. I recall that it can be defined with the help of negation and conjunction as follows: A | B =Df ¬(A ∧ B). In turn, all connectives can be defined with the help of the Sheffer stroke in the following manner: ¬A =Df (A | A); A ∧ B =Df (A | B) | (A | B); A ∨ B =Df (A | A) | (B | B); A ⊃ B =Df A | (B | B). The modus ponens rule takes the following form: A; A | (B | B) ∴ B. We can go further and, following the ideas of M. Schönfinkel, define a two-argument infix quantifier ‘|ˣ’: A |ˣ B =Df ∀x(A | B). Now we can use it to define the Sheffer stroke and the quantifiers. A | B =Df A |ˣ B, where the variable x is not free in the formulas A and B; ∀xA =Df (A |ʸ A) |ˣ (A |ʸ A), where the variable y is not free in the formula A; ∃xA =Df (A |ˣ A) |ʸ (A |ˣ A), where the variable y is not free in the formula A. -
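The propositional definitions in this excerpt can be checked mechanically: each connective defined through the Sheffer stroke should agree with its usual truth table, and the stroke form of modus ponens should never lead from true premises to a false conclusion. This is a small sketch of that check; the names `stroke`, `definitions_agree`, and `stroke_modus_ponens_valid` are illustrative, and the quantifier definitions are not covered.

```python
from itertools import product


def stroke(a: bool, b: bool) -> bool:
    """Sheffer stroke: A | B is defined as not (A and B)."""
    return not (a and b)


def definitions_agree() -> bool:
    """Check the four stroke definitions against the ordinary connectives."""
    for a, b in product([False, True], repeat=2):
        if stroke(a, a) != (not a):                              # negation
            return False
        if stroke(stroke(a, b), stroke(a, b)) != (a and b):      # conjunction
            return False
        if stroke(stroke(a, a), stroke(b, b)) != (a or b):       # disjunction
            return False
        if stroke(a, stroke(b, b)) != ((not a) or b):            # implication
            return False
    return True


def stroke_modus_ponens_valid() -> bool:
    """From A and A | (B | B), B follows on every valuation."""
    return all(
        b
        for a, b in product([False, True], repeat=2)
        if a and stroke(a, stroke(b, b))
    )


print(definitions_agree(), stroke_modus_ponens_valid())
```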

Tables of Implications and Tautologies from Symbolic Logic

Tables of Implications and Tautologies from Symbolic Logic Dr. Robert B. Heckendorn Computer Science Department, University of Idaho March 17, 2021 Here are some tables of logical equivalents and implications that I have found useful over the years. Where there are classical names for things I have included them. “Isolation by Parts” is my own invention. By tautology I mean equivalent left and right hand side and by implication I mean the left hand expression implies the right hand. I use the tilde and overbar interchangeably to represent negation e.g. ∼x is the same as x̄. Enjoy!

Table 1: Properties of All Two-bit Operators. The Comm. is short for commutative and Assoc. is short for associative. Iff is short for “if and only if”.

| Truth Table | Name | Comm./Assoc. | Binary Op | And/Or/Not | Nands Only |
|---|---|---|---|---|---|
| 0000 | False | CA | 0 | 0 | (a ↑ (a ↑ a)) ↑ (a ↑ (a ↑ a)) |
| 0001 | And | CA | a ∧ b | a ∧ b | (a ↑ b) ↑ (a ↑ b) |
| 0010 | Minus | | b − a | ∼a ∧ b | (b ↑ (a ↑ a)) ↑ (a ↑ (a ↑ a)) |
| 0011 | B | A | b | b | b |
| 0100 | Minus | | a − b | a ∧ ∼b | (a ↑ (a ↑ a)) ↑ (a ↑ (a ↑ b)) |
| 0101 | A | A | a | a | a |
| 0110 | Xor/NotEqual | CA | a ⊕ b | (a ∧ ∼b) ∨ (∼a ∧ b); (a ∨ b) ∧ (∼a ∨ ∼b) | (b ↑ (a ↑ a)) ↑ (a ↑ (a ↑ b)) |
| 0111 | Or | CA | a ∨ b | a ∨ b | (a ↑ a) ↑ (b ↑ b) |
| 1000 | Nor | C | a ↓ b | ∼a ∧ ∼b | ((a ↑ a) ↑ (b ↑ b)) ↑ ((a ↑ a) ↑ a) |
| 1001 | Iff/Equal | CA | a ↔ b | (∼a ∨ b) ∧ (a ∨ ∼b); (a ∧ b) ∨ (∼a ∧ ∼b) | ((a ↑ a) ↑ (b ↑ b)) ↑ (a ↑ b) |
| 1010 | Not A | | ∼a | ∼a | a ↑ a |
| 1011 | Imply | | a → b | ∼a ∨ b | a ↑ (a ↑ b) |
| 1100 | Not B | | ∼b | ∼b | b ↑ b |
| 1101 | Imply | | b → a | a ∨ ∼b | b ↑ (a ↑ a) |
| 1110 | Nand | C | a ↑ b | ∼a ∨ ∼b | a ↑ b |
| 1111 | True | CA | 1 | 1 | (a ↑ a) ↑ a |

Table 2: Tautologies (Logical Identities) Commutative Property: p ∧ q ↔ q
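The NAND-only column of Table 1 can be verified by evaluating each expression on all four inputs and comparing the result with the row's truth-table string. The sketch below assumes that the four digits are listed for (a, b) = (0, 0), (1, 0), (0, 1), (1, 1), an ordering inferred from the rows named A (0101) and B (0011) rather than stated in the excerpt; `nand`, `NAND_FORMS`, and `truth_string` are hypothetical names.

```python
def nand(a: int, b: int) -> int:
    """NAND on bits: 0 only when both inputs are 1."""
    return 1 - (a & b)


# Truth-table string -> the NAND-only expression from the table.
NAND_FORMS = {
    "0000": lambda a, b: nand(nand(a, nand(a, a)), nand(a, nand(a, a))),
    "0001": lambda a, b: nand(nand(a, b), nand(a, b)),
    "0010": lambda a, b: nand(nand(b, nand(a, a)), nand(a, nand(a, a))),
    "0011": lambda a, b: b,
    "0100": lambda a, b: nand(nand(a, nand(a, a)), nand(a, nand(a, b))),
    "0101": lambda a, b: a,
    "0110": lambda a, b: nand(nand(b, nand(a, a)), nand(a, nand(a, b))),
    "0111": lambda a, b: nand(nand(a, a), nand(b, b)),
    "1000": lambda a, b: nand(nand(nand(a, a), nand(b, b)), nand(nand(a, a), a)),
    "1001": lambda a, b: nand(nand(nand(a, a), nand(b, b)), nand(a, b)),
    "1010": lambda a, b: nand(a, a),
    "1011": lambda a, b: nand(a, nand(a, b)),
    "1100": lambda a, b: nand(b, b),
    "1101": lambda a, b: nand(b, nand(a, a)),
    "1110": lambda a, b: nand(a, b),
    "1111": lambda a, b: nand(nand(a, a), a),
}


def truth_string(f) -> str:
    """Evaluate f with b as the slow-changing input, a as the fast-changing one."""
    return "".join(str(f(a, b)) for b in (0, 1) for a in (0, 1))


mismatches = [key for key, f in NAND_FORMS.items() if truth_string(f) != key]
print(mismatches)
```

An empty list of mismatches indicates that every NAND-only expression computes the operator its row names under the assumed input ordering.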