Building Parallel Monolingual Gan Chinese Dialects Corpus

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

The Ecological Protection Research Based on the Cultural Landscape Space Construction in Jingdezhen

Available online at www.sciencedirect.com Procedia Environmental Sciences 10 ( 2011 ) 1829 – 1834 2011 3rd International Conference on Environmental Science and InformationConference Application Title Technology (ESIAT 2011) The Ecological Protection Research Based on the Cultural Landscape Space Construction in Jingdezhen Yu Bina*, Xiao Xuan aGraduate School of ceramic aesthetics, Jingdezhen ceramic institute, Jingdezhen, CHINA bGraduate School of ceramic aesthetics, Jingdezhen ceramic institute, Jingdezhen, CHINA [email protected] Abstract As a historical and cultural city, Jingdezhen is now faced with new challenges that exhausted porcelain clay resources restricted economic development. This paper will explore the rich cultural landscape resources from the viewpoint of the cultural landscape space, conclude that Jingdezhen is an active state diversity and animacy cultural landscape space which is composed of ceramics cultural landscape as the main part, and integrates with tea, local opera, natural ecology, architecture, folk custom, religion cultural landscape, and study how to build an mechanism of Jingdezhen ecological protection. © 2011 Published by Elsevier Ltd. Selection and/or peer-review under responsibility of Conference © 2011 Published by Elsevier Ltd. Selection and/or peer-review under responsibility of [name organizer] ESIAT2011 Organization Committee. Keywords: Jingdezhen, cultural landscape space, Poyang Lake area, ecological economy. ĉ. Introduction In 2009, Jingdezhen was one of the resource-exhausted cities listed -

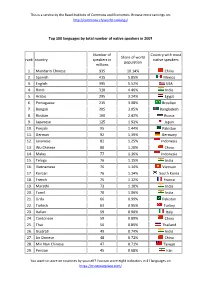

Top 100 Languages by Total Number of Native Speakers in 2007 Rank

This is a service by the Basel Institute of Commons and Economics. Browse more rankings on: http://commons.ch/world-rankings/ Top 100 languages by total number of native speakers in 2007 Number of Country with most Share of world rank country speakers in native speakers population millions 1. Mandarin Chinese 935 10.14% China 2. Spanish 415 5.85% Mexico 3. English 395 5.52% USA 4. Hindi 310 4.46% India 5. Arabic 295 3.24% Egypt 6. Portuguese 215 3.08% Brasilien 7. Bengali 205 3.05% Bangladesh 8. Russian 160 2.42% Russia 9. Japanese 125 1.92% Japan 10. Punjabi 95 1.44% Pakistan 11. German 92 1.39% Germany 12. Javanese 82 1.25% Indonesia 13. Wu Chinese 80 1.20% China 14. Malay 77 1.16% Indonesia 15. Telugu 76 1.15% India 16. Vietnamese 76 1.14% Vietnam 17. Korean 76 1.14% South Korea 18. French 75 1.12% France 19. Marathi 73 1.10% India 20. Tamil 70 1.06% India 21. Urdu 66 0.99% Pakistan 22. Turkish 63 0.95% Turkey 23. Italian 59 0.90% Italy 24. Cantonese 59 0.89% China 25. Thai 56 0.85% Thailand 26. Gujarati 49 0.74% India 27. Jin Chinese 48 0.72% China 28. Min Nan Chinese 47 0.71% Taiwan 29. Persian 45 0.68% Iran You want to score on countries by yourself? You can score eight indicators in 41 languages on https://trustyourplace.com/ This is a service by the Basel Institute of Commons and Economics. -

Deciphering the Spatial Structures of City Networks in the Economic Zone of the West Side of the Taiwan Strait Through the Lens of Functional and Innovation Networks

sustainability Article Deciphering the Spatial Structures of City Networks in the Economic Zone of the West Side of the Taiwan Strait through the Lens of Functional and Innovation Networks Yan Ma * and Feng Xue School of Architecture and Urban-Rural Planning, Fuzhou University, Fuzhou 350108, Fujian, China; [email protected] * Correspondence: [email protected] Received: 17 April 2019; Accepted: 21 May 2019; Published: 24 May 2019 Abstract: Globalization and the spread of information have made city networks more complex. The existing research on city network structures has usually focused on discussions of regional integration. With the development of interconnections among cities, however, the characterization of city network structures on a regional scale is limited in the ability to capture a network’s complexity. To improve this characterization, this study focused on network structures at both regional and local scales. Through the lens of function and innovation, we characterized the city network structure of the Economic Zone of the West Side of the Taiwan Strait through a social network analysis and a Fast Unfolding Community Detection algorithm. We found a significant imbalance in the innovation cooperation among cities in the region. When considering people flow, a multilevel spatial network structure had taken shape. Among cities with strong centrality, Xiamen, Fuzhou, and Whenzhou had a significant spillover effect, which meant the region was depolarizing. Quanzhou and Ganzhou had a significant siphon effect, which was unsustainable. Generally, urbanization in small and midsize cities was common. These findings provide support for government policy making. Keywords: city network; spatial organization; people flows; innovation network 1. -

Research on the Relationship Between Green Development and Fdi in Jiangxi Province

2019 International Seminar on Education, Teaching, Business and Management (ISETBM 2019) Research on the Relationship between Green Development and Fdi in Jiangxi Province Zejie Liu1, a, Xiaoxue Lei2, b, Wenhui Lai1, c, Zhenyue Lu1, d, Yuanyuan Yin1, e 1School of Business, Jiangxi Normal University, Nanchang 330022, China 2 School of Financial and Monetary, Jiangxi Normal University, Nanchang 330022, China Keywords: Jiangxi province, City, Green development, Fdi Abstract: Firstly, based on factor analysis method, this paper obtained the green development comprehensive index and its changing trend of 11 prefecture level cities in Jiangxi Province. Secondly, by calculating Moran's I index, it made an empirical analysis of the spatial correlation of FDI between 11 prefecture level cities in Jiangxi Province, both globally and locally. Finally, this paper further tested the relationship between FDI and green development index by establishing multiple linear regression equation. The results show that the overall green development level of Jiangxi Province shows a steady upward trend, but the development gap between 11 prefecture level cities in Jiangxi Province is large, and the important green development indicators have a significant positive impact on FDI. 1. Introduction As one of the important provinces rising in Central China, Jiangxi has made remarkable achievements in the protection of ecological environment and the promotion of economic benefits while vigorously promoting green development and actively introducing FDI. Data shows that since the first batch of FDI was introduced in 1984, Jiangxi Province has attracted 12.57 billion US dollars of FDI in 2018, with an average annual growth rate of 19.42%. By the first three quarters of 2019, Jiangxi's actual use of FDI amounted to 9.79 billion US dollars, an increase of 8.1%, ranking at the top of the country. -

20200316 Factory List.Xlsx

Country Factory Name Address BANGLADESH AMAN WINTER WEARS LTD. SINGAIR ROAD, HEMAYETPUR, SAVAR, DHAKA.,0,DHAKA,0,BANGLADESH BANGLADESH KDS GARMENTS IND. LTD. 255, NASIRABAD I/A, BAIZID BOSTAMI ROAD,,,CHITTAGONG-4211,,BANGLADESH BANGLADESH DENITEX LIMITED 9/1,KORNOPARA, SAVAR, DHAKA-1340,,DHAKA,,BANGLADESH JAMIRDIA, DUBALIAPARA, VALUKA, MYMENSHINGH BANGLADESH PIONEER KNITWEARS (BD) LTD 2240,,MYMENSHINGH,DHAKA,BANGLADESH PLOT # 49-52, SECTOR # 08 , CEPZ, CHITTAGONG, BANGLADESH HKD INTERNATIONAL (CEPZ) LTD BANGLADESH,,CHITTAGONG,,BANGLADESH BANGLADESH FLAXEN DRESS MAKER LTD MEGHDUBI, WARD: 40, GAZIPUR CITY CORP,,,GAZIPUR,,BANGLADESH BANGLADESH NETWORK CLOTHING LTD 228/3,SHAHID RAWSHAN SARAK, CHANDANA,,,GAZIPUR,DHAKA,BANGLADESH 521/1 GACHA ROAD, BOROBARI,GAZIPUR CITY BANGLADESH ABA FASHIONS LTD CORPORATION,,GAZIPUR,DHAKA,BANGLADESH VILLAGE- AMTOIL, P.O. HAT AMTOIL, P.S. SREEPUR, DISTRICT- BANGLADESH SAN APPARELS LTD MAGURA,,JESSORE,,BANGLADESH BANGLADESH TASNIAH FABRICS LTD KASHIMPUR NAYAPARA, GAZIPUR SADAR,,GAZIPUR,,BANGLADESH BANGLADESH AMAN KNITTINGS LTD KULASHUR, HEMAYETPUR,,SAVAR,DHAKA,BANGLADESH BANGLADESH CHERRY INTIMATE LTD PLOT # 105 01,DEPZ, ASHULIA, SAVAR,DHAKA,DHAKA,BANGLADESH COLOMESSHOR, POST OFFICE-NATIONAL UNIVERSITY, GAZIPUR BANGLADESH ARRIVAL FASHION LTD SADAR,,,GAZIPUR,DHAKA,BANGLADESH VILLAGE-JOYPURA, UNION-SHOMBAG,,UPAZILA-DHAMRAI, BANGLADESH NAFA APPARELS LTD DISTRICT,DHAKA,,BANGLADESH BANGLADESH VINTAGE DENIM APPARELS LIMITED BOHERARCHALA , SREEPUR,,,GAZIPUR,,BANGLADESH BANGLADESH KDS IDR LTD CDA PLOT NO: 15(P),16,MOHORA -

Did the Establishment of Poyang Lake Eco-Economic Zone Increase Agricultural Labor Productivity in Jiangxi Province, China?

Article Did the Establishment of Poyang Lake Eco-Economic Zone Increase Agricultural Labor Productivity in Jiangxi Province, China? Tao Wu 1 and Yuelong Wang 2,* Received: 10 December 2015; Accepted: 21 December 2015; Published: 24 December 2015 Academic Editor: Marc A. Rosen 1 School of Economics, Jiangxi University of Finance and Economics, 169 Shuanggang East Road, Nanchang 330013, China; [email protected] 2 Center for Regulation and Competition, Jiangxi University of Finance and Economics, 169 Shuanggang East Road, Nanchang 330013, China * Correspondence: [email protected]; Tel.: +86-0791-83816310 Abstract: In this paper, we take the establishment of Poyang Lake Eco-Economic Zone in 2009 as a quasi-natural experiment, to evaluate its influence on the agricultural labor productivity in Jiangxi Province, China. The estimation results of the DID method show that the establishment of the zone reduced agricultural labor productivity by 3.1%, lowering farmers’ net income by 2.5% and reducing the agricultural GDP by 3.6%. Furthermore, this negative effect has increased year after year since 2009. However, the heterogeneity analysis implies that the agricultural labor productivities of all cities in Jiangxi Province will ultimately converge. We find that the lack of agricultural R&D activities and the abuse of chemical fertilizers may be the main reasons behind the negative influence of the policy, by examining two possible transmission channels—the R&D investment and technological substitution. Corresponding policy implications are also provided. Keywords: Poyang Lake Eco-Economic Zone; agricultural labor productivity; DID method; R&D on agriculture 1. Introduction Poyang Lake, which is located in the northern part of Jiangxi Province and connects to the lower Yangtze River, is the largest freshwater lake in China. -

On the Development of Syllable-Initial Voiced Obstruents and Its Interplay with Tones in North-Western Gan Chinese Dialects

Shared innovation and the variability: On the development of syllable-initial voiced obstruents and its interplay with tones in north-western Gan Chinese dialects It is generally agreed that there were four tones in Middle Chinese, namely Ping (‘level’), Shang (‘rising’), Qu (‘departing’), and Ru (‘entering’), and at the same time, there was a three-way distinction of syllable-initial obstruents into voiced, voiceless unaspirated, and voiceless aspirated (Karlgren 1915-1926). The development of syllable-initial voiced obstruents and tonal split are two major sound changes from Middle Chinese to Modern Chinese and its dialects, which actually determines the profile of modern phonologies of Chinese dialects and contributed to the diversity of tones. Ideally, tones split according to the voicing condition of initial consonants, namely high tones, in general, are associated with voiceless initials and low tones with voiced initials. Regarding the development of obstruents, only Wu dialects and the old varieties of Xiang dialects retain the three-way distinction. In the other dialects, voiced obstruents merged with voiceless ones in various ways. For instance, voiced stops and affricates became voiceless unaspirated in Min dialects, but voiceless aspirated in Hakka and Gan dialects, in general. And in Mandarin dialects, voiced stops and affricates became voiceless aspirated in level-toned syllables and voiceless unaspirated in non-level-toned syllables. The complex interplay between the development of voiced obstruents and tones thus contributed to a highly diversified phonology of consonants and tones in modern Chinese dialects. This paper focuses on north-western Gan dialects. As in the other Gan dialects, voiced stops and affricates merged with their voiceless aspirated counterparts in north-western Gan dialects. -

Deciphering the Spatial Structures of City Networks in the Economic Zone of the West Side of the Taiwan Strait Through the Lens of Functional and Innovation Networks

Article Deciphering the Spatial Structures of City Networks in the Economic Zone of the West Side of the Taiwan Strait Through the Lens of Functional and Innovation Networks Yan Ma 1,* and Feng Xue 2 School of Architecture and Urban Planning, Fuzhou University, Fuzhou 350108, Fujian, China; [email protected] * Correspondence: [email protected] Received: 17 April 2019; Accepted: 21 May 2019; Published: 24 May 2019 Abstract: Globalization and the spread of information have made city networks more complex. The existing research on city network structures has usually focused on discussions of regional integration. With the development of interconnections among cities, however, the characterization of city network structures on a regional scale is limited in the ability to capture a network’s complexity. To improve this characterization, this study focused on network structures at both regional and local scales. Through the lens of function and innovation, we characterized the city network structure of the Economic Zone of the West Side of the Taiwan Strait through a social network analysis and a Fast Unfolding Community Detection algorithm. We found a significant imbalance in the innovation cooperation among cities in the region. When considering people flow, a multilevel spatial network structure had taken shape. Among cities with strong centrality, Xiamen, Fuzhou, and Whenzhou had a significant spillover effect, which meant the region was depolarizing. Quanzhou and Ganzhou had a significant siphon effect, which was unsustainable. Generally, urbanization in small and midsize cities was common. These findings provide support for government policy making. Keywords: city network; spatial organization; people flows; innovation network 1. -

The Neolithic Ofsouthern China-Origin, Development, and Dispersal

The Neolithic ofSouthern China-Origin, Development, and Dispersal ZHANG CHI AND HSIAO-CHUN HUNG INTRODUCTION SANDWICHED BETWEEN THE YELLOW RIVER and Mainland Southeast Asia, southern China1 lies centrally within eastern Asia. This geographical area can be divided into three geomorphological terrains: the middle and lower Yangtze allu vial plain, the Lingnan (southern Nanling Mountains)-Fujian region,2 and the Yungui Plateau3 (Fig. 1). During the past 30 years, abundant archaeological dis coveries have stimulated a rethinking of the role ofsouthern China in the prehis tory of China and Southeast Asia. This article aims to outline briefly the Neolithic cultural developments in the middle and lower Yangtze alluvial plain, to discuss cultural influences over adjacent regions and, most importantly, to examine the issue of southward population dispersal during this time period. First, we give an overview of some significant prehistoric discoveries in south ern China. With the discovery of Hemudu in the mid-1970s as the divide, the history of archaeology in this region can be divided into two phases. The first phase (c. 1920s-1970s) involved extensive discovery, when archaeologists un earthed Pleistocene human remains at Yuanmou, Ziyang, Liujiang, Maba, and Changyang, and Palaeolithic industries in many caves. The major Neolithic cul tures, including Daxi, Qujialing, Shijiahe, Majiabang, Songze, Liangzhu, and Beiyinyangying in the middle and lower Yangtze, and several shell midden sites in Lingnan, were also discovered in this phase. During the systematic research phase (1970s to the present), ongoing major ex cavation at many sites contributed significantly to our understanding of prehis toric southern China. Additional early human remains at Wushan, Jianshi, Yun xian, Nanjing, and Hexian were recovered together with Palaeolithic assemblages from Yuanmou, the Baise basin, Jianshi Longgu cave, Hanzhong, the Li and Yuan valleys, Dadong and Jigongshan. -

THE MEDIA's INFLUENCE on SUCCESS and FAILURE of DIALECTS: the CASE of CANTONESE and SHAAN'xi DIALECTS Yuhan Mao a Thesis Su

THE MEDIA’S INFLUENCE ON SUCCESS AND FAILURE OF DIALECTS: THE CASE OF CANTONESE AND SHAAN’XI DIALECTS Yuhan Mao A Thesis Submitted in Partial Fulfillment of the Requirements for the Degree of Master of Arts (Language and Communication) School of Language and Communication National Institute of Development Administration 2013 ABSTRACT Title of Thesis The Media’s Influence on Success and Failure of Dialects: The Case of Cantonese and Shaan’xi Dialects Author Miss Yuhan Mao Degree Master of Arts in Language and Communication Year 2013 In this thesis the researcher addresses an important set of issues - how language maintenance (LM) between dominant and vernacular varieties of speech (also known as dialects) - are conditioned by increasingly globalized mass media industries. In particular, how the television and film industries (as an outgrowth of the mass media) related to social dialectology help maintain and promote one regional variety of speech over others is examined. These issues and data addressed in the current study have the potential to make a contribution to the current understanding of social dialectology literature - a sub-branch of sociolinguistics - particularly with respect to LM literature. The researcher adopts a multi-method approach (literature review, interviews and observations) to collect and analyze data. The researcher found support to confirm two positive correlations: the correlative relationship between the number of productions of dialectal television series (and films) and the distribution of the dialect in question, as well as the number of dialectal speakers and the maintenance of the dialect under investigation. ACKNOWLEDGMENTS The author would like to express sincere thanks to my advisors and all the people who gave me invaluable suggestions and help. -

The Diminutive Word Tsa42 in the Xianning Dialect: A

The Diminutive Word tsa42 in the Xianning Dialect: A Cognitive Approach* Xiaolong Lu University of Arizona [email protected] This study reports on the syntactic distribution and semantic features of a spoken word tsa42 as a form of diminutive address in the Xianning dialect. Two research questions are examined: what are the distributional patterns of the dialectal word tsa42 regarding its different functions in the Xianning dialect? And when this word serves as a suffix of nouns, why can it be widely used as a diminutive marker in the dialect? Based on interview data, I argue that (1) there are restrictions in using the diminutive word tsa42 together with adjectives and nouns, and certain countable nouns denoting huge and fierce animals, or large transportation tools, etc., cannot co-occur with the diminutive word tsa42; and (2) the markedness theory (Shi 2005) is used to explain that the diminutive suffix tsa42 can be used to highlight nouns denoting things of small sizes. The revised model of Radial Category (Jurafsky 1996) helps account for different categorizations metaphorically and metonymically extended from the prototype “child” in terms of tsa42, etc. This case study implies that a typological model for diminution needs to be built to connect the analysis of other diminutive words in Chinese dialects and beyond. 1. Introduction Showing loveliness or affection to small things is taken to be a kind of human nature, and the “diminutive function is among the grammatical primitives which seem to occur universally or near-universally in world languages” (Jurafsky 1996: 534). The term “diminutive” is expressed as a kind of word that has been modified to convey a slighter degree of its root * I want to give my thanks to Dr. -

The Donkey Rider As Icon: Li Cheng and Early Chinese Landscape Painting Author(S): Peter C

The Donkey Rider as Icon: Li Cheng and Early Chinese Landscape Painting Author(s): Peter C. Sturman Source: Artibus Asiae, Vol. 55, No. 1/2 (1995), pp. 43-97 Published by: Artibus Asiae Publishers Stable URL: http://www.jstor.org/stable/3249762 . Accessed: 05/08/2011 12:40 Your use of the JSTOR archive indicates your acceptance of the Terms & Conditions of Use, available at . http://www.jstor.org/page/info/about/policies/terms.jsp JSTOR is a not-for-profit service that helps scholars, researchers, and students discover, use, and build upon a wide range of content in a trusted digital archive. We use information technology and tools to increase productivity and facilitate new forms of scholarship. For more information about JSTOR, please contact [email protected]. Artibus Asiae Publishers is collaborating with JSTOR to digitize, preserve and extend access to Artibus Asiae. http://www.jstor.org PETER C. STURMAN THE DONKEY RIDER AS ICON: LI CHENG AND EARLY CHINESE LANDSCAPE PAINTING* he countryis broken,mountains and rivers With thesefamous words that lamentthe "T remain."'I 1T catastropheof the An LushanRebellion, the poet Du Fu (712-70) reflectedupon a fundamental principle in China:dynasties may come and go, but landscapeis eternal.It is a principleaffirmed with remarkablepower in the paintingsthat emergedfrom the rubbleof Du Fu'sdynasty some two hundredyears later. I speakof the magnificentscrolls of the tenth and eleventhcenturies belonging to the relativelytightly circumscribedtradition from Jing Hao (activeca. 875-925)to Guo Xi (ca. Ooo-9go)known todayas monumentallandscape painting. The landscapeis presentedas timeless. We lose ourselvesin the believabilityof its images,accept them as less the productof humanminds and handsthan as the recordof a greatertruth.