Toni Heittola Automatic Classification of Music Signals Master of Science

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Young Americans to Emotional Rescue: Selected Meetings

YOUNG AMERICANS TO EMOTIONAL RESCUE: SELECTING MEETINGS BETWEEN DISCO AND ROCK, 1975-1980 Daniel Kavka A Thesis Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of MASTER OF MUSIC August 2010 Committee: Jeremy Wallach, Advisor Katherine Meizel © 2010 Daniel Kavka All Rights Reserved iii ABSTRACT Jeremy Wallach, Advisor Disco-rock, composed of disco-influenced recordings by rock artists, was a sub-genre of both disco and rock in the 1970s. Seminal recordings included: David Bowie’s Young Americans; The Rolling Stones’ “Hot Stuff,” “Miss You,” “Dance Pt.1,” and “Emotional Rescue”; KISS’s “Strutter ’78,” and “I Was Made For Lovin’ You”; Rod Stewart’s “Do Ya Think I’m Sexy“; and Elton John’s Thom Bell Sessions and Victim of Love. Though disco-rock was a great commercial success during the disco era, it has received limited acknowledgement in post-disco scholarship. This thesis addresses the lack of existing scholarship pertaining to disco-rock. It examines both disco and disco-rock as products of cultural shifts during the 1970s. Disco was linked to the emergence of underground dance clubs in New York City, while disco-rock resulted from the increased mainstream visibility of disco culture during the mid seventies, as well as rock musicians’ exposure to disco music. My thesis argues for the study of a genre (disco-rock) that has been dismissed as inauthentic and commercial, a trend common to popular music discourse, and one that is linked to previous debates regarding the social value of pop music. -

“My Girl”—The Temptations (1964) Added to the National Registry: 2017 Essay by Mark Ribowsky (Guest Post)*

“My Girl”—The Temptations (1964) Added to the National Registry: 2017 Essay by Mark Ribowsky (guest post)* The Temptations, c. 1964 The Temptations’ 1964 recording of “My Girl” came at a critical confluence for the group, the Motown label, and a culture roiling with the first waves of the British invasion of popular music. The five-man cell of disparate souls, later to be codified by black disc jockeys as the “tall, tan, talented, titillating, tempting Temptations,” had been knocking around Motown’s corridors and studio for three years, cutting six failed singles before finally scoring on the charts that year with Smokey Robinson’s cleverly spunky “The Way You Do the Things You Do” that winter. It rose to number 11 on the pop chart and to the top of the R&B chart, an important marker on the music landscape altered by the Beatles’ conquest of America that year. Having Smokey to guide them was incalculably advantageous. Berry Gordy, the former street hustler who had founded Motown as a conduit for Detroit’s inner-city voices in 1959, invested a lot of trust in the baby-faced Robinson, who as front man of the Miracles delivered the company’s seminal number one R&B hit and million-selling single, “Shop Around.” Four years later, in 1964, he wrote and produced Mary Wells’ “My Guy,” Motown’s second number one pop hit. Gordy conquered the black urban market but craved the broader white pop audience. The Temptations were riders on that train. Formed in 1959 by Otis Williams, a leather-jacketed street singer, their original lineup consisted of Williams, Elbridge “Al” Bryant, bass singer Melvin Franklin and tenors Eddie Kendricks and Paul Williams. -

Teo Moritz, Superhuit Vient Aujourd'hui Grossir Les Rangs D'une Scène House Locale Qui Ne Comptait Jusqu'ici Qu'un Seul Représentant, Rotax, Créé Fin 1996

Reviews (France) MUSIQUE INFO HEBDO - February 4th 2000 - #109 Superhuit, le label deep-house lyonnais La région Rhône-Alpes est réputée pour être un bastion techno, une quinzaine de labels y produisant le gros de la production techno hexagonale. Fondé par Teo Moritz, Superhuit vient aujourd'hui grossir les rangs d'une scène house locale qui ne comptait jusqu'ici qu'un seul représentant, Rotax, créé fin 1996. Comme ce dernier, Superhuit se destine avant tout à la production de vinyles destinés aux DJ's. Signé sur Pamplemousse (alias Boogymann) et sur Brif Records, Teo Moritz publiait le premier maxi de Superhuit à l'automne 1999. Il décide aujourd'hui de passer à la vitesse supérieure. "Notre objectif à court terme est de produire un vinyle tous les deux mois", confie-t-il. "J'ai un nouveau maxi du lyonnais Ron Hayes. Il est sorti la semaine dernière, et nous et nous avons déjà vendu la totalité des copies pressées en quelques jours. Nous le repressons en raison de la demande. La couleur dominante de Superhuit sera la deep-house, même si chaque vinyle inclut des titres house plus classiques, ou des plages downtempo. Je développerai dans un premier temps Teo Moritz et Ron Hayes, mais je cherche de nouvelles signatures." La distribution est assurée par Vénus. (Y2M) MUSIQUE INFO HEBDO - March 23th 2001 - #160 Les fusions jazz de Teo Moritz Deux ans après la création de son label Superhuit, Teo Moritz produit son premier album, distribué par Vénus. Avec ce LP vinyle, le jeune DJ et producteur lyonnais rejoint le groupe d’artistes français explorant les mélanges entre jazz et musique électronique. -

Starr-Waterman American Popular Music Chapter 11: the 1970S: Rock Music, Disco, and the Popular Mainstream Key People Allman

Starr-Waterman American Popular Music Chapter 11: The 1970s: Rock Music, Disco, and the Popular Mainstream Key People Allman Brothers Band: Most important southern rock band of the late 1960s and early 1970s who reconnected the generative power of the blues to the mainstream of rock music. Barry White (1944‒2004): Multitalented African American singer, songwriter, arranger, conductor, and producer who achieved success as an artist in the 1970s with his Love Unlimited Orchestra; perhaps best known for his full, deep voice. Carlos Santana (b. 1947): Mexican-born rock guitarist who combined rock, jazz, and Afro-Latin elements on influential albums like Abraxas. Carole King (b. 1942): Singer-songwriter who recorded influential songs in New York’s Brill Building and later recorded the influential album Tapestry in 1971. Charlie Rich (b. 1932): Country performer known as the “Silver Fox” who won the Country Music Association’s Entertainer of the Year award in 1974 for his song “The Most Beautiful Girl.” Chic: Disco group who recorded the hit “Good Times.” Chicago: Most long-lived and popular jazz rock band of the 1970s, known today for anthemic love songs such as “If You Leave Me Now” (1976), “Hard to Say I’m Sorry” (1982), and “Look Away” (1988). David Bowie (1947‒2016): Glam rock pioneer who recorded the influential album The Rise and Fall of Ziggy Stardust and the Spiders from Mars in 1972. Dolly Parton (b. 1946): Country music star whose flexible soprano voice, songwriting ability, and carefully crafted image as a cheerful sex symbol combined to gain her a loyal following among country fans. -

Funku�....�14 � 2.1� Počátek� 20

-4 OBSAH ÚVOD ...............................................................................................................................6 I ČÁST 1 HUDEBNÍKULTURANAÚZEMÍSPOJENÝCHSTÁTŮ ...............................8 1.1 AFRICKÉ KOŘENY ................................................................................................8 1.2 EVROPSKÁ VERSUS AFRICKÁ HUDBA .................................................................10 1.3 VZÁJEMNÉ PROPOJOVÁNÍ ..................................................................................12 2 HUDEBNÍSTYLYSPŘÍMÝMVLIVEMNAVZNIKA VÝVOJFUNKU ....14 2.1 POČÁTEK 20. STOLETÍ AŽ DVACÁTÁ LÉTA : GOSPEL , BLUES , RAGTIME , NEW ORLEANS – KOLÉBKA JAZZU , JAZZ ....................................................................16 2.2 TŘICÁTÁ A ČTYŘICÁTÁ LÉTA : SWING , BE BOP , RHYTHM &BLUES .......................23 2.3 PADESÁTÁ A ŠEDESÁTÁ LÉTA : ROCK &ROLL , SOUL ............................................28 2.4 SEDMDESÁTÁ LÉTA : JAZZ ROCK , DISKO , HIP -HOP ..............................................31 II ČÁST 3 HISTORICKÝKONTEXT VZNIKUFUNKU ...................................................36 3.1 OBČANSKÉ NEPOKOJE .......................................................................................37 3.2 RASOVÁ PROBLEMATIKA ...................................................................................38 3.3 HUDEBNÍ SOUVISLOST .......................................................................................40 4 FUNK .......................................................................................................................41 -

Traditional Funk: an Ethnographic, Historical, and Practical Study of Funk Music in Dayton, Ohio

University of Dayton eCommons Honors Theses University Honors Program 4-26-2020 Traditional Funk: An Ethnographic, Historical, and Practical Study of Funk Music in Dayton, Ohio Caleb G. Vanden Eynden University of Dayton Follow this and additional works at: https://ecommons.udayton.edu/uhp_theses eCommons Citation Vanden Eynden, Caleb G., "Traditional Funk: An Ethnographic, Historical, and Practical Study of Funk Music in Dayton, Ohio" (2020). Honors Theses. 289. https://ecommons.udayton.edu/uhp_theses/289 This Honors Thesis is brought to you for free and open access by the University Honors Program at eCommons. It has been accepted for inclusion in Honors Theses by an authorized administrator of eCommons. For more information, please contact [email protected], [email protected]. Traditional Funk: An Ethnographic, Historical, and Practical Study of Funk Music in Dayton, Ohio Honors Thesis Caleb G. Vanden Eynden Department: Music Advisor: Samuel N. Dorf, Ph.D. April 2020 Traditional Funk: An Ethnographic, Historical, and Practical Study of Funk Music in Dayton, Ohio Honors Thesis Caleb G. Vanden Eynden Department: Music Advisor: Samuel N. Dorf, Ph.D. April 2020 Abstract Recognized nationally as the funk capital of the world, Dayton, Ohio takes credit for birthing important funk groups (i.e. Ohio Players, Zapp, Heatwave, and Lakeside) during the 1970s and 80s. Through a combination of ethnographic and archival research, this paper offers a pedagogical approach to Dayton funk, rooted in the styles and works of the city’s funk legacy. Drawing from fieldwork with Dayton funk musicians completed over the summer of 2019 and pedagogical theories of including black music in the school curriculum, this paper presents a pedagogical model for funk instruction that introduces the ingredients of funk (instrumentation, form, groove, and vocals) in order to enable secondary school music programs to create their own funk rooted in local history. -

Crossing Over: from Black Rhythm Blues to White Rock 'N' Roll

PART2 RHYTHM& BUSINESS:THE POLITICAL ECONOMY OF BLACKMUSIC Crossing Over: From Black Rhythm Blues . Publishers (ASCAP), a “performance rights” organization that recovers royalty pay- to WhiteRock ‘n’ Roll ments for the performance of copyrighted music. Until 1939,ASCAP was a closed BY REEBEEGAROFALO society with a virtual monopoly on all copyrighted music. As proprietor of the com- positions of its members, ASCAP could regulate the use of any selection in its cata- logue. The organization exercised considerable power in the shaping of public taste. Membership in the society was generally skewed toward writers of show tunes and The history of popular music in this country-at least, in the twentieth century-can semi-serious works such as Richard Rodgers and Lorenz Hart, Cole Porter, George be described in terms of a pattern of black innovation and white popularization, Gershwin, Irving Berlin, and George M. Cohan. Of the society’s 170 charter mem- which 1 have referred to elsewhere as “black roots, white fruits.’” The pattern is built bers, six were black: Harry Burleigh, Will Marion Cook, J. Rosamond and James not only on the wellspring of creativity that black artists bring to popular music but Weldon Johnson, Cecil Mack, and Will Tyers.’ While other “literate” black writers also on the systematic exclusion of black personnel from positions of power within and composers (W. C. Handy, Duke Ellington) would be able to gain entrance to the industry and on the artificial separation of black and white audiences. Because of ASCAP, the vast majority of “untutored” black artists were routinely excluded from industry and audience racism, black music has been relegated to a separate and the society and thereby systematically denied the full benefits of copyright protection. -

“Bo Diddley” and “I'm a Man” (1955)

“Bo Diddley” and “I’m a Man” (1955) Added to the National Registry: 2011 Essay by Ed Komara (guest post)* Bo Diddley While waiting in Bo Diddley’s house to conduct an interview for the February 12, 1987 issue of “Rolling Stone,” journalist Kurt Loder noticed a poster. “If You Think Rock and Roll Started With Elvis,” it proclaimed, “You Don’t Know Diddley.” This statement seems exaggerated, but upon listening to Diddley’s April 1955 debut 78 on Checker 814, “Bo Diddley” backed with “I’m A Man,” it becomes apt, perhaps even understated. Bo Diddley (1928-2008) described his own place in music history to Loder. “People wouldn’t even bother with no stuff like ‘Bo Diddley’ and ‘I’m A Man’ and stuff like that ten years earlier [circa 1945] or even a year earlier [1954]. Then Leonard and Phil Chess decided to take a chance, and suddenly a whole different scene, a different kind of music, came in. And that was the beginning of rock and roll.” The composer credit for Checker 814 reads “E. McDaniels,” and there begins the tale. Bo Diddley was born Ellas Otha Bates in McComb, Mississippi on December 30, 1928 to a teenage mother and her local boyfriend. He was raised, however, by his maternal first cousin, Gussie McDaniel, to whom he was taken to Chicago, and given her surname McDaniel. He grew up on the South Side of the city, where he learned violin, trombone and, at age 12, the guitar. Before long, he was playing for change on the local streets. -

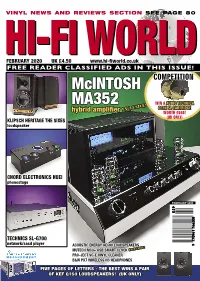

Mcintosh MA352 HYBRID VALVE AMPLIFIER 10 Transistor Power but with Valve Sound

VINYL NEWS AND REVIEWS SECTION SEE PAGE 80 HI-FIHI-FIFEBRUARY 2020 UK £4.50 WORLDWORLDwww.hi-fiworld.co.uk FREE READER CLASSIFIED ADS IN THIS ISSUE! McINTOSH COMPETITION MA352 WIN A AUDIO TECHNICA ! Exclusive OC9X SL CARTRIDGE hybrid amplifier WORTH £660! (UK ONLY) KLIPSCH HERITAGE THE SIXES loudspeaker CHORD ELECTRONICS HUEI phonostage FEBRUARY 2020 TECHNICS SL-G700 network/sacd player ACOUSTIC ENERGY AE500 LOUDSPEAKERS SIVE! MUTECH MC3+ USB SMART CLOCK EXCLU PRO-JECT VC-E VINYL CLEANER MEASUREMENT B&W PX7 WIRELESS NC HEADPHONES FIVE PAGES OF LETTERS - THE BEST WINS A PAIR OF KEF Q150 LOUDSPEAKERS! (UK ONLY) [master] The definitive version. Ultimate performance, bespoke audiophile cable collection Chord Company Signature, Sarum and ChordMusic form the vanguard of our cable ranges. A master collection that features our latest conductor, insulation and connector technology. High-end audiophile quality at a realistic price and each one built to your specific requirements. Arrange a demonstration with your nearest Chord Company retailer and hear the difference for yourself. Designed and hand-built in England since 1985 by a dedicated team of music-lovers. Find more information and a dealer locator at: www.chord.co.uk chordco-ad-HFW-MAR19-MASTER-002a.indd 1 07/05/2019 13:57:32 welcome EDITOR alves can be a bit troublesome. If you’re lucky big ones responsible Noel Keywood for producing power will last a few thousand hours, but then need e-mail: [email protected] replacing. However, small ones that don’t dissipate power will sol- dier on past 10,000 hours – and what’s more they cost little, in the DESIGN EDITOR £10-£20 region. -

Motown the Musical

EDUCATIONAL GUIDE C1 Kevin MccolluM Doug Morris anD Berry gorDy Present Book by Music and Lyrics from Berry gorDy The legenDary MoTown caTalog BaseD upon The BooK To Be loveD: Music By arrangeMenT wiTh The Music, The Magic, The MeMories sony/aTv Music puBlishing of MKoevinTown B yM Bcerrycollu gorDyM Doug Morris anD Berry gorDy MoTown® is a regisTereD TraPresentDeMarK of uMg recorDings, inc. Starring BranDon vicTor Dixon valisia leKae charl Brown Bryan Terrell clarK Book by Music and Lyrics from TiMoThy J. alex Michael arnolD nicholas chrisTopher reBecca e. covingTon ariana DeBose anDrea Dora presTBonerry w. Dugger g iiior Dwyil Kie ferguson iii TheDionne legen figgins DaryMarva M hicoKsT ownTiffany c JaaneneTalog howarD sasha huTchings lauren liM JacKson Jawan M. JacKson Morgan JaMes John Jellison BaseD upon The BooK To Be loveD: Music By arrangeMenT wiTh crysTal Joy Darius KaleB grasan KingsBerry JaMie laverDiere rayMonD luKe, Jr. Marielys Molina The Music, The Magic, The MeMories sony/aTv Music puBlishing syDney MorTon Maurice Murphy Jarran Muse Jesse nager MilTon craig nealy n’Kenge DoMinic nolfi of MoTown By Berry gorDy saycon sengBloh ryan shaw JaMal sTory eric laJuan suMMers ephraiM M. syKes ® JMuliusoTown Tho isM asa regisiii TereDanielD Tra DJ.eM waraTTK sof uMDgonal recorD wDeingsBBer, i, ncJr.. Scenic Design Costume Design LighStarringting Design Sound Design Projection Design DaviD Korins esosa BranDnonaTasha vic TKoraTz Dixon peTer hylensKi Daniel BroDie Casting Hair & Wig Design valisia leKae Associate Director Assistant Choreographer Telsey + coMpany charlcharles Brown g. lapoinTe scheleBryan willia TerrellMs clarK Brian h. BrooKs BeThany Knox,T icsaMoThy J. alex Michael arnolD nicholas chrisTopher reBecca e. -

The Social and Cultural Changes That Affected the Music of Motown Records from 1959-1972

Columbus State University CSU ePress Theses and Dissertations Student Publications 2015 The Social and Cultural Changes that Affected the Music of Motown Records From 1959-1972 Lindsey Baker Follow this and additional works at: https://csuepress.columbusstate.edu/theses_dissertations Part of the Music Commons Recommended Citation Baker, Lindsey, "The Social and Cultural Changes that Affected the Music of Motown Records From 1959-1972" (2015). Theses and Dissertations. 195. https://csuepress.columbusstate.edu/theses_dissertations/195 This Thesis is brought to you for free and open access by the Student Publications at CSU ePress. It has been accepted for inclusion in Theses and Dissertations by an authorized administrator of CSU ePress. The Social and Cultural Changes that Affected the Music of Motown Records From 1959-1972 by Lindsey Baker A Thesis Submitted in Partial Fulfillment of Requirements of the CSU Honors Program for Honors in the degree of Bachelor of Music in Performance Schwob School of Music Columbus State University Thesis Advisor Date Dr. Kevin Whalen Honors Committee Member ^ VM-AQ^A-- l(?Yy\JcuLuJ< Date 2,jbl\5 —x'Dr. Susan Tomkiewicz Dean of the Honors College ((3?7?fy/L-Asy/C/7^ ' Date Dr. Cindy Ticknor Motown Records produced many of the greatest musicians from the 1960s and 1970s. During this time, songs like "Dancing in the Street" and "What's Going On?" targeted social issues in America and created a voice for African-American people through their messages. Events like the Mississippi Freedom Summer and Bloody Thursday inspired the artists at Motown to create these songs. Influenced by the cultural and social circumstances of the Civil Rights Movement, the musical output of Motown Records between 1959 and 1972 evolved from a sole focus on entertainment in popular culture to a focus on motivating social change through music. -

Brijean Signs to Ghostly International & Shares New

July 22, 2020 For Immediate Release Brijean Signs To Ghostly International & Shares New Single "Moody" Photo by Jack Bool Brijean - the Oakland-based duo of Brijean Murphy and Doug Stuart - announces signing to Ghostly International and shares new single “Moody.” The easy-grooving, ephemeral cut “Moody” captures Brijean’s signature sound in just over two minutes: the dazzling, golden-hued haze of percussive beats and honeyed vocals. Stuart says the track is “a quick gentle trip” that started in a living room — recording with Murphy’s drum mentor Pepe Jacobo — windows open, stream-of-consciousness lyrics flowing. Murphy, an accomplished DJ, session and live player in Oakland’s diverse music scene has emerged as one of indie’s most in-demand percussionists (Poolside, Toro Y Moi, U.S. Girls). In 2018, she began recording songs with multi-instrumentalist and producer Stuart, who shares a background in jazz and pop in bands such as Bells Atlas, Meernaa, and Luke Temple. Murphy’s musical talents are family heirlooms: her father, percussionist and engineer Patrick Murphy, taught Brijean her first patterns on a pair of congas that she inherited from the late Trinidadian steel drum legend Vince Charles (Neil Diamond). Growing up in LA’s Glassell Park, Murphy was raised by a cadre of honorary aunts and uncles – a deep bench of jazz, latin and soul musicians in their own rites. This meant she grew up regaled by musical lore – larger than life tales of jazz luminaries, psychedelic trips and obscure cultural enclaves – sampling some of those family stories and weaving them into her work.