P189 Machine Learning Algorithms for Tropical Cyclone Center Fixing and Eye Detection

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Lecture 15 Hurricane Structure

MET 200 Lecture 15 Hurricanes: Structure and Climatology • What is the structure or anatomy of a hurricane? • How to build a hurricane? - hurricane energy • Hurricane climatology - when and where Last Lecture: Atmospheric Optics The amazing variety of optical phenomena observed in the atmosphere can be explained by four physical mechanisms. • Scattering • Reflection • Refraction • Diffraction Hurricanes: Useful Websites http://www.wunderground.com/hurricane/ http://www.nrlmry.navy.mil/tc_pages/tc_home.html http://tropic.ssec.wisc.edu http://www.nhc.noaa.gov Hurricanes are much broader than they are tall. Image slides: Hurricane Katrina; Colorado Flood Damage; Hurricane Alberto; Hurricane Raymond; Hurricane Raymond: wind shear; Typhoon Francisco; Typhoon Lekima; Hurricane Priscilla; Hurricane Isaac: August 2012; NASA VIIRS Day-Night Band. Hurricanes are Tropical Cyclones Hurricanes are members of a family of cyclones called Tropical Cyclones. West of the dateline these storms are called Typhoons. In India and Australia they are called simply Cyclones. Characteristics of Tropical Cyclones • Low pressure systems that don’t have fronts • Cyclonic winds (counter clockwise in Northern Hemisphere) • Anticyclonic outflow (clockwise in NH) at upper levels • Warm at their center or core • Wind speeds decrease with height • Symmetric structure about clear "eye" • Latent heat from condensation in clouds primary energy source • Form over warm tropical and subtropical oceans Differences between hurricanes and midlatitude storms: – energy source (latent heat vs temperature gradients) - Winter storms have cold and warm fronts (asymmetric). -

Investigation and Prediction of Hurricane Eyewall

INVESTIGATION AND PREDICTION OF HURRICANE EYEWALL REPLACEMENT CYCLES By Matthew Sitkowski A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy (Atmospheric and Oceanic Sciences) at the UNIVERSITY OF WISCONSIN-MADISON 2012 Date of final oral examination: 4/9/12 The dissertation is approved by the following members of the Final Oral Committee: James P. Kossin, Affiliate Professor, Atmospheric and Oceanic Sciences Daniel J. Vimont, Professor, Atmospheric and Oceanic Sciences Steven A. Ackerman, Professor, Atmospheric and Oceanic Sciences Jonathan E. Martin, Professor, Atmospheric and Oceanic Sciences Gregory J. Tripoli, Professor, Atmospheric and Oceanic Sciences Abstract Flight-level aircraft data and microwave imagery are analyzed to investigate hurricane secondary eyewall formation and eyewall replacement cycles (ERCs). This work is motivated by the need to provide forecasters with new guidance for predicting and better understanding the impacts of ERCs. A Bayesian probabilistic model that determines the likelihood of secondary eyewall formation and a subsequent ERC is developed. The model is based on environmental and geostationary satellite features. A climatology of secondary eyewall formation is developed and is also utilized by the model; it shows a 13% chance of secondary eyewall formation when a hurricane is located over water. The model has been installed at the National Hurricane Center and has skill in forecasting secondary eyewall formation out to 48 h. Aircraft reconnaissance data from 24 ERCs are examined to develop a climatology of flight-level structure and intensity changes associated with ERCs. Three phases are identified based on the behavior of the maximum intensity of the hurricane: intensification, weakening and reintensification. -

Extratropical Cyclones and Anticyclones

© Jones & Bartlett Learning, LLC. NOT FOR SALE OR DISTRIBUTION Courtesy of Jeff Schmaltz, the MODIS Rapid Response Team at NASA GSFC/NASA 10 Extratropical Cyclones and Anticyclones CHAPTER OUTLINE INTRODUCTION A TIME AND PLACE OF TRAGEDY A LIFE CYCLE OF GROWTH AND DEATH DAY 1: BIRTH OF AN EXTRATROPICAL CYCLONE ■ Typical Extratropical Cyclone Paths DAY 2: WITH THE FITZ ■ Portrait of the Cyclone as a Young Adult ■ Cyclones and Fronts: On the Ground ■ Cyclones and Fronts: In the Sky ■ Back with the Fitz: A Fateful Course Correction ■ Cyclones and Jet Streams DAY 3: THE MATURE CYCLONE ■ Bittersweet Badge of Adulthood: The Occlusion Process ■ Hurricane West Wind ■ One of the Worst . ■ “Nosedive” DAY 4 (AND BEYOND): DEATH ■ The Cyclone ■ The Fitzgerald ■ The Sailors THE EXTRATROPICAL ANTICYCLONE HIGH PRESSURE, HIGH HEAT: THE DEADLY EUROPEAN HEAT WAVE OF 2003 PUTTING IT ALL TOGETHER ■ Summary ■ Key Terms ■ Review Questions ■ Observation Activities AFTER COMPLETING THIS CHAPTER, YOU SHOULD BE ABLE TO: • Describe the different life-cycle stages in the Norwegian model of the extratropical cyclone, identifying the stages when the cyclone possesses cold, warm, and occluded fronts and life-threatening conditions • Explain the relationship between a surface cyclone and winds at the jet-stream level and how the two interact to intensify the cyclone • Differentiate between extratropical cyclones and anticyclones in terms of their birthplaces, life cycles, relationships to air masses and jet-stream winds, threats to life and property, and their appearance on satellite images INTRODUCTION What do you see in the diagram to the right: a vase or two faces? This classic psychology experiment exploits our amazing ability to recognize visual patterns. -

ESSENTIALS OF METEOROLOGY (7th ed.) GLOSSARY

ESSENTIALS OF METEOROLOGY (7th ed.) GLOSSARY Chapter 1 Aerosols Tiny suspended solid particles (dust, smoke, etc.) or liquid droplets that enter the atmosphere from either natural or human (anthropogenic) sources, such as the burning of fossil fuels. Sulfur-containing fossil fuels, such as coal, produce sulfate aerosols. Air density The ratio of the mass of a substance to the volume occupied by it. Air density is usually expressed as g/cm3 or kg/m3. Also see Density. Air pressure (atmospheric pressure) The pressure exerted by the mass of air above a given point, usually expressed in millibars (mb), inches of mercury (Hg) or in hectopascals (hPa). Atmosphere The envelope of gases that surround a planet and are held to it by the planet's gravitational attraction. The earth's atmosphere is mainly nitrogen and oxygen. Carbon dioxide (CO2) A colorless, odorless gas whose concentration is about 0.039 percent (390 ppm) in a volume of air near sea level. It is a selective absorber of infrared radiation and, consequently, it is important in the earth's atmospheric greenhouse effect. Solid CO2 is called dry ice. Climate The accumulation of daily and seasonal weather events over a long period of time. Front The transition zone between two distinct air masses. Hurricane A tropical cyclone having winds in excess of 64 knots (74 mi/hr). Ionosphere An electrified region of the upper atmosphere where fairly large concentrations of ions and free electrons exist. Lapse rate The rate at which an atmospheric variable (usually temperature) decreases with height. (See Environmental lapse rate.) Mesosphere The atmospheric layer between the stratosphere and the thermosphere. -

Airborne Radar Observations of Eye Configuration Changes, Bright

UDC 661.616.22:661.M)9.61: 661.601.81 Airborne Radar Observations of Eye Configuration Changes, Bright Band Distribution, and Precipitation Tilt During the 1969 Multiple Seeding Experiments in Hurricane Debbie' PETER G. BLACK-National Hurricane Research Laboratory, Environmental Research Laboratories, NOAA, Miami, Fla. HARRY V. SENN and CHARLES L. COURTRIGHT-Radar Meteorology Laboratory, Rosenstiel School of Marine and Atmospheric Sciences, University of Miami, Coral Gables, Fla. ABSTRACT-Project Stormfury radar precipitation data gathered before, during, and after the multiple seedings of the eyewall region of hurricane Debbie on Aug. 18 and 20, 1969, are used to study changes in the eye configuration, the characteristics of the radar bright band, and the precipitation tilt. Increases in the echo-free area within the eye followed each of the five seedings on the 18th, but followed only one seeding on the 20th. Changes in major axis orientation followed only one seeding on the 18th, but followed each seeding on the 20th. Similar studies conducted recently on unmodified storms suggest that such changes do not occur naturally. ... radius of maximum winds follow closely the changes in eyewall radius. It is suggested that the different results on the 2 days might be attributable to seeding beyond the radius of maximum winds on the 18th and inside the outer radius of maximum winds on the 20th. The bright band is found in all quadrants of the storm within 100 n.mi. of the eye, sloping slightly upward near the eyewall. The inferred shears are directed outward and slightly down band with height in both layers studied. The hurricane Debbie bright band and precipitation tilt data compared favorably with those gathered in Betsy of -

What Is Storm Surge?

INTRODUCTION TO STORM SURGE National Hurricane Center Storm Surge Unit BOLIVAR PENINSULA IN TEXAS AFTER HURRICANE IKE (2008) What is Storm Surge? Storm surge is an abnormal rise of water generated by a storm, over and above the predicted astronomical tide. • It’s the change in the water level that is due to the presence of the storm • Since storm surge is a difference between water levels, it does not have a reference level Storm tide is the water level rise during a storm due to the combination of storm surge and the astronomical tide. • Since storm tide is the combination of surge and tide, it does require a reference level • A 15 ft. storm surge on top of a high tide that is 2 ft. above mean sea level produces a 17 ft. storm tide. Inland Extent Storm surge can penetrate well inland from the coastline. During Hurricane Ike, the surge moved inland nearly 30 miles in some locations in southeastern Texas and southwestern Louisiana. Vulnerability All locations along the U.S. East and Gulf coasts are vulnerable to storm surge. This figure shows the areas that could be inundated by water in any given category 4 hurricane. What causes Storm Surge? Storm surge is caused primarily by the strong winds in a hurricane or tropical storm. The low pressure of the storm has minimal contribution! The wind circulation around the eye of a hurricane (left above) blows on the ocean surface and produces a vertical ... Once the hurricane reaches shallower waters near the coast, the vertical circulation in the ocean becomes ... In general, storm surge occurs where winds are blowing onshore. -
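The surge and storm-tide definitions in the excerpt above reduce to simple arithmetic, which can be sketched in a few lines of Python. This is only an illustration: the function names `storm_tide_ft` and `storm_surge_ft` are hypothetical and do not come from the National Hurricane Center material, and the only numbers used are the 15 ft, 2 ft and 17 ft from the excerpt's own example.

```python
def storm_tide_ft(surge_ft: float, tide_above_datum_ft: float) -> float:
    """Storm tide relative to a datum (e.g. mean sea level).

    Storm tide needs a reference level: it is the astronomical tide
    height above the datum plus the storm surge on top of it.
    """
    return tide_above_datum_ft + surge_ft


def storm_surge_ft(observed_level_ft: float, predicted_tide_ft: float) -> float:
    """Storm surge as a difference between two water levels.

    Both inputs must use the same datum; the datum cancels in the
    subtraction, which is why surge itself has no reference level.
    """
    return observed_level_ft - predicted_tide_ft


# The excerpt's example: 15 ft surge on a high tide 2 ft above mean sea level.
print(storm_tide_ft(15.0, 2.0))    # 17.0
print(storm_surge_ft(17.0, 2.0))   # 15.0
```

Keeping the two quantities as separate functions mirrors the distinction drawn in the excerpt: one is a level tied to a datum, the other a difference that is independent of it.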

Talking Points for GOES-R Hurricane Intensity Estimate Baseline Product

Talking points for GOES-R Hurricane Intensity Estimate baseline product 1. GOES-R Hurricane Intensity Estimate baseline product 2. Learning Objectives: (1) To provide a brief background of the Hurricane Intensity Estimate algorithm. (2) To understand the strengths and limitations, including measurement range and accuracy. (3) To use an example to become familiar with the text product format. 3. Product Overview: The Hurricane Intensity Estimate algorithm is a GOES-R baseline product. In this algorithm, estimates of tropical cyclone intensity are generated in real-time using infrared window channel satellite imagery. This can be used by forecasters to help assess current intensity trends throughout the life of the storm, which is particularly important when direct aircraft reconnaissance measurements are unavailable. The official product is an estimate of the maximum sustained wind speed. Comparing this estimate to tropical cyclone models can identify which model is best capturing current conditions. GOES-R channel 13 imagery with a central wavelength of 10.35 µm is used, with full disk coverage both day and night. The measurement range of the Dvorak hurricane intensity scale is 1 to 9, corresponding to wind speeds of roughly 25 to 200 kt. Measurement accuracy and precision are just under 10 and 16 kt. 4. The Algorithm: The HIE algorithm is derived from the Advanced Dvorak Technique that is currently in use operationally. It is based on the relationship of convective cloud patterns and cloud-top temperatures to current tropical cyclone intensity. As cloud regions become more organized with stronger convection releasing greater amounts of latent heat, there is a reduction in storm central pressure and increased wind speeds. -

8A.4 The Advanced Objective Dvorak Technique (AODT) – Continuing the Journey

8A.4 THE ADVANCED OBJECTIVE DVORAK TECHNIQUE (AODT) – CONTINUING THE JOURNEY Timothy L. Olander* and Christopher S. Velden University of Wisconsin - CIMSS, Madison, Wisconsin 1. INTRODUCTION In the early 1970’s, scientists at NOAA/NESDIS pioneered a technique to estimate tropical cyclone (TC) intensity using geostationary satellite data. These efforts were led by Vern Dvorak, who continued to advance the technique in the 1980’s (Dvorak 1984). The method of equating satellite cloud signatures and brightness temperature values to TC intensity became known as the Dvorak Technique (DT). The DT has been used at TC forecast centers worldwide since that time, and is the primary tool for determining TC intensity where aircraft reconnaissance measurements are not available (a large majority of global TC basins). The main shortcoming of the DT is the inherent subjectivity stemming from the experience and judgment of the TC forecaster using it. ... regions in the IR imagery to determine scene types. This scheme eventually defined four primary scene types: Eye, Central Dense Overcast (CDO), Embedded Center (EMBC), and Shear. By using these four scene type designations, a proper “branch” in the DT logic tree could be followed to more accurately produce an objective intensity estimate. The creation of a “history file” allowed for previous intensity estimates, and their related analysis parameters, to be stored and utilized by subsequent image interrogations by the ODT algorithm. A time-averaged T-number replaced the Data-T number, and was effective in removing much of the fictitious short-term variability in the intensity estimates. In addition, specific DT rules, such as the “EIR Rule 9” controlling the weakening rate of a TC after maximum intensity, were implemented to more closely follow the DT -

Tropical Cyclones

Chapter 24: Tropical Cyclones • Hurricane Naming, Track, Structure • Tropical Cyclone Development Tropical Cyclones vs. Mid-latitude Storms Tropical cyclones The tropical cyclone is a low-pressure system which derives its energy primarily from evaporation from the sea in the presence of high winds and lowered surface pressure. It has associated condensation in convective clouds concentrated near its center. Mid-latitude storms Mid-latitude storms are low pressure systems associated with cold fronts, warm fronts, and occluded fronts. They primarily get their energy from the horizontal temperature gradients that exist in the atmosphere. An Overview of Tropical Cyclone Secondary Circulation • Boundary layer inflow • Eyewall ascending • Upper tropospheric outflow converting thermal energy from ocean to kinetic energy Primary Circulation • Axisymmetric circulation • Conserving angular momentum • Balanced flow Driven by the Secondary Circulation Secondary Circulation: A Carnot Cycle (Carnot Heat Engine) (Kerry Emanuel 1988) A heat engine acts by transferring energy from a warm region to a cool region of space and, in the process, converting some of that energy to mechanical work. The Carnot cycle is a theoretical thermodynamic cycle and can be shown to be the most efficient cycle for converting a given amount of thermal energy into work, or conversely, creating a temperature difference (e.g. refrigeration) by doing a given amount of work. Two Circulation Components of Tropical Cyclone Secondary Circulation Centrifugal and Coriolis forces are not in perfect equilibrium with the pressure gradient. Air is forced toward the center and then rises. Conservation of Angular Momentum As air moves inward to a smaller radius, its speed must increase, which increases the rotational speed of the tropical cyclone. -
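The angular momentum argument in the excerpt above can be written as a short worked relation. The symbols are assumptions introduced here for illustration and do not appear in the excerpt: $M$ is the absolute angular momentum per unit mass, $r$ the radius from the storm center, $v$ the tangential wind, and $f$ the Coriolis parameter. The relation holds only for frictionless, axisymmetric flow.

```latex
% Absolute angular momentum per unit mass (assumed notation):
M = r\,v + \tfrac{1}{2} f r^{2}

% If M is conserved following an inflowing air parcel, solve for v:
v = \frac{M}{r} - \tfrac{1}{2} f r

% As r decreases, M/r grows and f r / 2 shrinks, so v increases.
```

In a real storm, surface friction removes some angular momentum from the inflowing air, so this should be read as an upper-bound sketch of the spin-up rather than a forecast of actual wind speed.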

In the Eye of the Storm: Perspective from an Engineer, Meteorologist and Storm Chaser

2/16/2017 In the Eye of the Storm: Perspective From an Engineer, Meteorologist and Storm Chaser by Tim Marshall P.E. Meteorologist Presented at 2017 Tornado Summit Oklahoma City, OK February 14, 2017 1957 Began to position chase vehicles April 21, 1967 Oak Lawn, IL Tornado 1967 - Oak Lawn, IL news Oak Lawn Tornado Damage April 21, 1967 Destroyed mobile home park April 21, 1967 barograph trace from Albright Middle School Oak Lawn Tornado Track Rated F4 Traveled 16.2 miles @ 60 mph 33 deaths >1000 homes damaged Marvin Zeaman Drops in pressure with Belvidere and Oak Lawn tornadoes. http://www.weather.gov/media/lot/events/1967_Apr_Tornadoes/OakLawn_Tornado_survey.pdf 1969 - Won top award for tornado exhibit at science fair. 1970 – Lubbock, TX Tornado 1970 - Dr. Fujita surveyed the tornado damage http://www.lubbocktornado1970.com/pdf/LubbockTornadoes_TetsuyaFujita.pdf Institute for Disaster Research was formed at TTU https://swco-ir.tdl.org/swco-ir/handle/10605/261888 Kiesling, Peterson, Mehta, Minor, McDonald 1971 - Fujita Scale DR. TED FUJITA and the SuperOutbreak I attended his lecture at the Univ. of Chicago 11-12-74 1974 - F5 Xenia, OH Tornado April 3-4, 1974 319 fatalities, 5,484 injuries Worst damage occurred on Marshall Street ! 1974 – N.I.U. Meteorology Prof. Jack R. Villmow 1976 – First Tornado Damage Survey 1976 – Lemont, IL Tornado I walked the damage path 1978 – TTU Graduate School Saw a tornado first day in TX Edmonson, TX – May 27, 1978 April 10, 1979 - Dr. -

A Glossary for Biometeorology

Int J Biometeorol DOI 10.1007/s00484-013-0729-9 ICB 2011 - STUDENTS / NEW PROFESSIONALS A glossary for biometeorology Simon N. Gosling & Erin K. Bryce & P. Grady Dixon & Katharina M. A. Gabriel & Elaine Y. Gosling & Jonathan M. Hanes & David M. Hondula & Liang Liang & Priscilla Ayleen Bustos Mac Lean & Stefan Muthers & Sheila Tavares Nascimento & Martina Petralli & Jennifer K. Vanos & Eva R. Wanka Received: 30 October 2012 / Revised: 22 August 2013 / Accepted: 26 August 2013 # The Author(s) 2013. This article is published with open access at Springerlink.com Abstract Here we present, for the first time, a glossary of biometeorological terms. The glossary aims to address the need for a reliable source of biometeorological definitions, thereby facilitating communication and mutual understanding in this rapidly expanding field. A total of 171 terms are defined, with reference to 234 citations. It is anticipated that the glossary will be revisited in coming years, updating terms and adding new terms, as appropriate. The glossary is intended to provide a useful resource to the biometeorology community, and to this end, readers are encouraged to contact the lead author to suggest additional terms for inclusion in later versions of the glossary as a result of new and emerging developments in the field. S. N. Gosling (*) School of Geography, University of Nottingham, Nottingham NG7 2RD, UK e-mail: [email protected] E. K. Bryce Department of Anthropology, University of Toronto, Toronto, ON, Canada P. G. Dixon L. Liang Department of Geography, University of Kentucky, Lexington, KY, USA P. A. Bustos Mac Lean Department of Animal Science, Universidade Estadual de Maringá (UEM), Maringa, Paraná, Brazil S. -

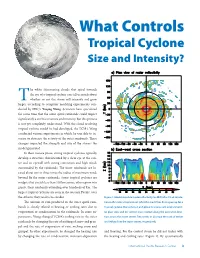

What Controls Tropical Cyclone Size and Intensity?

What Controls Tropical Cyclone Size and Intensity? The white shimmering clouds that spiral towards the eye of a tropical cyclone can tell us much about whether or not the storm will intensify and grow larger, according to computer modeling experiments conducted by IPRC’s Yuqing Wang. Scientists have speculated for some time that the outer spiral rainbands could significantly affect a storm’s structure and intensity, but this process is not yet completely understood. With the cloud-resolving tropical cyclone model he had developed, the TCM4, Wang conducted various experiments in which he was able to increase or decrease the activity of the outer rainbands. These changes affected the strength and size of the storms the model generated. In their mature phase, strong tropical cyclones typically develop a structure characterized by a clear eye at the center and an eyewall with strong convection and high winds surrounded by the rainbands. The inner rainbands are located about two to three times the radius of maximum wind; beyond lie the outer rainbands. Some tropical cyclones are midgets that stretch less than 200 km across, others grow into giants, their rainbands extending over hundreds of km. The largest tropical cyclones are seen in the western Pacific; over the Atlantic they tend to be smaller. The amount of rain produced in the outer spiral rainbands is closely related to heating or cooling rates due to evaporation or condensation in the rainbands. Figure 1. Model-simulated radar reflectivity (in dBZ) after 9 h of simulation in the control experiment (after the model has been spun up for a tropical cyclone-like vortex on an f-plane in a quiescent environment):