Entity Set Expansion Using Topic Information

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


L'elysée Montmartre Ticket Reservation

A member of X-Japan in France! Ra:IN in Paris on the 5th of May 2005. We have the pleasure to announce that the solo band of PATA (ex-X-Japan), Ra:IN, will perform live at the Elysee Montmartre in Paris (France) on the 5th of May 2005. "Ra:IN" (pronounced "rah'een") is an amazing, unique, powerful new rock trio consisting of PATA (guitar: Tomoaki Ishizuka), one of the guitarists from the legendary Japanese rock band X Japan, michiaki (bass: Michiaki Suzuki), originally from another legendary Yokohama-based rock band, TENSAW, and Tetsu Mukaiyama (drums), who has supported various bands and musicians such as Red Warriors and CoCCo. The uniqueness of "Ra:IN" comes from its musical eloquence. The three-piece band mainly delves into instrumental works, and the band's combination of colorful tones and sounds, along with the tight playing only possible with top-notch players, provides an overwhelming power and energy that is what "ROCK" is all about. Just cool and heavy rhythms. But above all, don't miss the first opportunity to see on stage in Europe a member of X-Japan, PATA (one of the last still performing), one of those whose music has, for years, warmed the hearts of millions of fans in Japan and across the world. Ra:IN live in Paris website: http://parisvisualprod.free.fr Ra:IN website: http://www.rain-web.com Ticket Reservation Europe: Pre-Sales on Official Event Website: http://parisvisualprod.free.fr Japan: For Fan tour, please contact: [email protected] Worldwide (from 1st of March 2005): TICKETNET

“PRESENCE” of JAPAN in KOREA's POPULAR MUSIC CULTURE by Eun-Young Ju

TRANSNATIONAL CULTURAL TRAFFIC IN NORTHEAST ASIA: THE “PRESENCE” OF JAPAN IN KOREA’S POPULAR MUSIC CULTURE by Eun-Young Jung M.A. in Ethnomusicology, Arizona State University, 2001 Submitted to the Graduate Faculty of School of Arts and Sciences in partial fulfillment of the requirements for the degree of Doctor of Philosophy University of Pittsburgh 2007 UNIVERSITY OF PITTSBURGH SCHOOL OF ARTS AND SCIENCES This dissertation was presented by Eun-Young Jung It was defended on April 30, 2007 and approved by Richard Smethurst, Professor, Department of History Mathew Rosenblum, Professor, Department of Music Andrew Weintraub, Associate Professor, Department of Music Dissertation Advisor: Bell Yung, Professor, Department of Music Copyright © by Eun-Young Jung 2007 TRANSNATIONAL CULTURAL TRAFFIC IN NORTHEAST ASIA: THE “PRESENCE” OF JAPAN IN KOREA’S POPULAR MUSIC CULTURE Eun-Young Jung, PhD University of Pittsburgh, 2007 Korea’s nationalistic antagonism towards Japan and “things Japanese” has mostly been a response to the colonial annexation by Japan (1910-1945). Despite their close economic relationship since 1965, their conflicting historic and political relationships and deep-seated prejudice against each other have continued. The Korean government’s official ban on the direct import of Japanese cultural products existed until 1997, but various kinds of Japanese cultural products, including popular music, found their way into Korea through various legal and illegal routes and influenced contemporary Korean popular culture. Since 1998, under Korea’s Open-Door Policy, legally available Japanese popular cultural products became widely consumed, especially among young Koreans fascinated by Japan’s quintessentially postmodern popular culture, despite lingering resentments towards Japan.

Press Release – Global X Japan Launches Global X

Press Release Global X Japan launches Global X Digital Innovation Japan ETF, its first engagement with Solactive 27 January 2021 Global X Japan, a joint venture between Global X ETFs and Daiwa Securities established in 2020, launched its new Global X Digital Innovation Japan ETF [Ticker: 2626] on January 27th, 2021, on the Tokyo Stock Exchange. This release marks Global X’s first engagement with Solactive on Japanese ground after Global X, a sub-brand of Mirae Asset Global Investments, issued multiple successful ETFs tracking Solactive indices in HK, Europe, and the US. The Global X Digital Innovation Japan ETF tracks Solactive’s Digital Innovation Japan Index, which is powered by ARTIS®, Solactive’s proprietary big data and natural language processing algorithm. The index includes an ESG screening carried out by ESG data provider Minerva Analytics Ltd. The screening excludes companies not compliant with the UN Global Compact and businesses affiliated with controversial industries. Japan is pushing for digitalization. The country’s plan to establish a new governmental Digitalization Agency by September 2021 owes to the fact that the state’s current lack of digitalization could burden its economy with an annual cost of, according to some estimates, 12 trillion yen (about 116 billion USD). Pioneering companies in this field could benefit from the resulting surge in demand and political initiatives. The Index The Solactive Digital Innovation Japan Index includes Japanese companies that are pioneers in digital innovation and, therefore, represents companies that are likely to contribute to the Japanese societal transformation plans.
The Solactive Digital Innovation Japan Index includes eleven sub-themes of digital innovation: Cloud Computing; Cyber Security; Remote Communications; Online Project and Document Management; Online Healthcare and Telemedicine; Digital, Online, and Video Gaming; Video and Media streaming; Online Education; Social Networks; E-commerce; and E-payments.

A Multi-Dimensional Study of the Emotion in Current Japanese Popular Music

Acoust. Sci. & Tech. 34, 3 (2013) © 2013 The Acoustical Society of Japan PAPER A multi-dimensional study of the emotion in current Japanese popular music Ryo Yoneda and Masashi Yamada Graduate School of Engineering, Kanazawa Institute of Technology, 7–1 Ohgigaoka, Nonoich, 921–8501 Japan (Received 22 March 2012, Accepted for publication 5 September 2012) Abstract: Musical psychologists illustrated musical emotion with various numbers of dimensions ranging from two to eight. Most of them concentrated on classical music. Only a few researchers studied emotion in popular music, but the number of pieces they used was very small. In the present study, perceptual experiments were conducted using large sets of popular pieces. In Experiment 1, ten listeners rated musical emotion for 50 J-POP pieces using 17 SD scales. The results of factor analysis showed that the emotional space was constructed by three factors, “evaluation,” “potency” and “activity.” In Experiment 2, three musicians and eight non-musicians rated musical emotion for 169 popular pieces. The set of pieces included not only J-POP tunes but also Enka and western popular tunes. The listeners also rated the suitability for several listening situations. The results of factor analysis showed that the emotional space for the 169 pieces was spanned by the three factors, “evaluation,” “potency” and “activity,” again. The results of multiple-regression analyses suggested that the listeners like to listen to a “beautiful” tune with their lovers and a “powerful” and “active” tune in a situation where people were around them. Keywords: Musical emotion, Popular music, J-POP, Semantic differential method, Factor analysis PACS number: 43.75.Cd [doi:10.1250/ast.34.166]

Get Ebook « Visual Kei Artists

QDKRCRTPLZU6 \ PDF « Visual kei artists Visual kei artists Filesize: 4.12 MB Reviews This pdf is indeed gripping and interesting. It is definitely simplistic but shocks within the 50 percent of your book. Once you begin to read the book, it is extremely difficult to leave it before concluding. (Michael Spinka) DISCLAIMER | DMCA ZVKPJW8WNMMZ // Kindle \\ Visual kei artists VISUAL KEI ARTISTS Reference Series Books LLC Apr 2013, 2013. Taschenbuch. Book Condition: Neu. 246x189x7 mm. Neuware - Source: Wikipedia. Pages: 121. Chapters: X Japan, Buck-Tick, Dir En Grey, Alice Nine, Miyavi, Hide, Nightmare, The Gazette, Glacier, Merry, Luna Sea, Ayabie, Rentrer en Soi, Versailles, D'espairsRay, Malice Mizer, Girugamesh, Psycho le Cému, An Cafe, Penicillin, Laputa, Vidoll, Phantasmagoria, Mucc, Plastic Tree, Onmyo- Za, Panic Channel, Blood, The Piass, Megamasso, Tinc, Kagerou, Sid, Uchuu Sentai NOIZ, Fanatic Crisis, Moi dix Mois, Pierrot, Zi:Kill, Kagrra, Doremidan, Anti Feminism, Eight, Sug, Kuroyume, 12012, LM.C, Sadie, Baiser, Strawberry song orchestra, Unsraw, Charlotte, Dué le Quartz, Baroque, Silver Ash, Schwarz Stein, Inugami Circus-dan, Cali Gari, GPKism, Guniw Tools, Luci'fer Luscious Violenoue, Mix Speakers, Inc, Aion, Lareine, Ghost, Luis-Mary, Raphael, Vistlip, Deathgaze, Duel Jewel, El Dorado, Skin, Exist Trace, Cascade, The Dead Pop Stars, D'erlanger, The Candy Spooky Theater, Aliene Ma'riage, Matenrou Opera, Blam Honey, Kra, Fairy Fore, BIS, Lynch, Shazna, Die in Cries, Color, D=Out, By-Sexual, Rice, Dio - Distraught Overlord, Kaya, Jealkb, Genkaku Allergy, Karma Shenjing, L'luvia, Devil Kitty, Nheira. Excerpt: X Japan Ekkusu Japan ) is a Japanese heavy metal band founded in 1982 by Toshimitsu 'Toshi' Deyama and Yoshiki Hayashi. -

Visual Kei Artists

JUCMSA3KJKEX # Kindle # Visual kei artists Visual kei artists Filesize: 6.52 MB Reviews Merely no terms to spell out. We have read through and i also am confident that i will gonna read yet again again in the future. You will not sense monotony at anytime of your own time (that's what catalogs are for about should you question me). (Pasquale Larkin I) DISCLAIMER | DMCA RTERDIYRDXPD ~ PDF \\ Visual kei artists VISUAL KEI ARTISTS Reference Series Books LLC Apr 2013, 2013. Taschenbuch. Book Condition: Neu. 246x189x7 mm. Neuware - Source: Wikipedia. Pages: 121. Chapters: X Japan, Buck-Tick, Dir En Grey, Alice Nine, Miyavi, Hide, Nightmare, The Gazette, Glacier, Merry, Luna Sea, Ayabie, Rentrer en Soi, Versailles, D'espairsRay, Malice Mizer, Girugamesh, Psycho le Cému, An Cafe, Penicillin, Laputa, Vidoll, Phantasmagoria, Mucc, Plastic Tree, Onmyo- Za, Panic Channel, Blood, The Piass, Megamasso, Tinc, Kagerou, Sid, Uchuu Sentai NOIZ, Fanatic Crisis, Moi dix Mois, Pierrot, Zi:Kill, Kagrra, Doremidan, Anti Feminism, Eight, Sug, Kuroyume, 12012, LM.C, Sadie, Baiser, Strawberry song orchestra, Unsraw, Charlotte, Dué le Quartz, Baroque, Silver Ash, Schwarz Stein, Inugami Circus-dan, Cali Gari, GPKism, Guniw Tools, Luci'fer Luscious Violenoue, Mix Speakers, Inc, Aion, Lareine, Ghost, Luis-Mary, Raphael, Vistlip, Deathgaze, Duel Jewel, El Dorado, Skin, Exist Trace, Cascade, The Dead Pop Stars, D'erlanger, The Candy Spooky Theater, Aliene Ma'riage, Matenrou Opera, Blam Honey, Kra, Fairy Fore, BIS, Lynch, Shazna, Die in Cries, Color, D=Out, By-Sexual, Rice, Dio - Distraught Overlord, Kaya, Jealkb, Genkaku Allergy, Karma Shenjing, L'luvia, Devil Kitty, Nheira. Excerpt: X Japan Ekkusu Japan ) is a Japanese heavy metal band founded in 1982 by Toshimitsu 'Toshi' Deyama and Yoshiki Hayashi. -

JPM-X Overview for Japan

JPM-X Japan Disclosure Effective date: 1 September 2021 JPMorgan Securities Japan Co., Ltd. JPMorgan Securities Japan Co., Ltd. operates a liquidity pool in Japan for TSE equity market securities through its crossing service (JPM-X). The JPM-X service operates under the ‘internal system’ definition of Article 70-II-7 under Cabinet Office Ordinance on Financial Instruments Business Operators. This overview is prepared to provide details relating to the operations of JPM-X. Clients can contact their J.P. Morgan representative for further information on external liquidity pools and matching and other information relating to them. Participant Summary: JPM-X participants (professional investors only under Japanese regulations) are assigned to tiers, at J.P. Morgan’s sole discretion. For Japan JPM-X the tiers are: Institutional clients; Broker-Dealer Flow (Aggregator); Electronic liquidity providers; JPM Principal Activity. Participation Criteria: Clients can access JPM-X through various algorithmic and smart order routing (SOR) execution services as well as send orders specifically for participation in JPM-X (Directed Orders). Clients may choose to opt out of interacting with one or more tiers. In addition, J.P. Morgan may further limit the tiers with which an order interacts in efforts to enhance execution performance of JPM-X; accordingly, a client may be prevented from interacting with a tier with which the client has not opted out of interacting. Clients’ on-boarding requests are reviewed at J.P. Morgan’s sole discretion. Order Types: JPM-X accepts limit orders and market orders, including pegged orders. JPM-X also supports DAY and IOC time in force values.

Hide Pdf, Epub, Ebook

HIDE PDF, EPUB, EBOOK Lisa Gardner | 451 pages | 20 May 2008 | Random House USA Inc | 9780553588088 | English | New York, United States Hide PDF Book What Does 'Eighty-Six' Mean? Universal Music. Watch how it works X. Retrieved December 12, Prior to his death, Hide and Yoshiki talked about restarting X Japan with a new vocalist in the year What Features are included in all plans? Comments on hide What made you want to look up hide? And he scarcely bothered to hide his chief ambition: to lead his country as prime minister. Z in See more words from the same century Thesaurus Entries near hide hidden hidden tax hidden taxes hide hideaway hideaways hidebound. Tim Evans Geraldine Singer Trailers and Videos. Spread Beaver guitarist K. Approximately 50, people who attended his funeral at Tsukiji Hongan-ji on May 7, where 56 people were hospitalized and people received medical treatment in first aid tents due to a mixture of emotional exhaustion and heat, with the funeral taking place on the warmest day of the year at that point, at 27 degrees Celsius about Archived from the original on January 25, Archived from the original on October 25, While they never achieved mainstream success in the United States one of their songs was included on the soundtrack for Heavy Metal Test Your Vocabulary. Main article: Hide discography. Retrieved May 1, He was the lead guitarist of the rock band X Japan from onward, and a solo artist from onward. Main article: X Japan. Retrieved September 12, June 18, He's talked about suicide in his records for five years. -

Global X Japan Global Leaders ESG ETF 2641 As of 8/31/2021

Global X Japan Global Leaders ESG ETF 2641 As of 8/31/2021 FUND OBJECTIVE The Global X Japan Global Leaders ESG ETF seeks to provide investment results that correspond generally to the price and yield performance, before fees and expenses, of the FactSet Japan Global Leaders ESG Index. FUND DETAILS Inception Date: 6/21/21. Underlying Index: FactSet Japan Global Leaders ESG Index. Number of Holdings: 20. Assets Under Management: ¥31.25 (100mil). NAV (per 100 units): ¥196,728. Management Fee: 0.3025% per annum (0.275% before tax). Distribution Frequency: Semi-Annually. Closing date: 6/24, 12/24. KEY FEATURES Global Leaders: The Global X Japan Global Leaders ESG ETF offers exposure to Japanese companies with high overseas sales ratio and high overseas customer relationship ratio. ESG Investing: The Global X Japan Global Leaders ESG ETF applies ESG to the weighting scheme. ETF Efficiency: In a single trade, The Global X Japan Global Leaders ESG ETF delivers access to dozens of companies with high exposure to the Global Leaders. TRADING DETAILS Securities code: 2641. ISIN: JP3049330008. Exchange: Tokyo Stock Exchange. Bloomberg INAV Ticker: 2641IV. Index Ticker: FDSJGLP. [Chart: Assets Under Management / Fund Performance (6/21/2021~8/31/2021); series: Assets Under Management (100mil Yen), FactSet Japan Global Leaders ESG Index, Fund at NAV (reinvestment, Yen).] FUND DISTRIBUTIONS per 100 units, before taxes (Yen)

“This Was the Reason I Was Born”

LUND UNIVERSITY • CENTRE FOR EAST AND SOUTH-EAST ASIAN STUDIES “This was the reason I was born” Contextualizing Issues and Choices of a Japanese Woman Musician Author: Alessandra Cognetta Supervisor: Annika Pissin Master’s Programme in Asian Studies Spring semester 2017 Abstract This thesis investigates the Japanese music industry from a liberal feminist perspective, as academic literature rarely discusses Japanese music – especially niche genres like metal. After pinpointing a gap in the literature on the subject, and after outlining issues in the Japanese music industry, this study sheds light on the reasons that lead Japanese women musicians to thrive outside of major Japanese record companies. The direct experience of a Japanese power metal singer, who has worked both in her home country and in Europe, generates the main body of data. Specific aspects such as gender stereotypes, musical genres and language barrier are taken into account as parameters affecting women musicians’ choices. The analysis focuses on an in-depth semi-structured interview with the singer, to obtain her insights on these aspects and to inquire on her choices as an independent female musician in Japan. The aim of this article is to contextualize a specific case within the Japanese music scene, instead of generalizing the experience of a single female musician as applicable to all female musicians. The main findings of this research project highlight issues for further research: economic support for independent artists and the lack of inclusive spaces for female musicians. Keywords: Japanese metal music, Japanese music industry, Female musicians, Feminist theory, Choice feminism ACKNOWLEDGEMENTS This thesis is the result of a massive personal effort, but it has also come to life thanks to the contributions of many inspiring individuals.

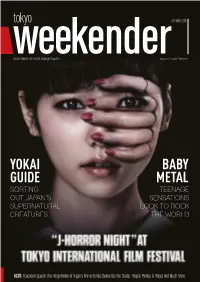

Yokai Guide Baby Metal

OCTOBER 2015 Japan’s number one English language magazine. Image © 2015 “Gekijorei” Film Partners. YOKAI GUIDE: SORTING OUT JAPAN’S SUPERNATURAL CREATURES. BABY METAL: TEENAGE SENSATIONS LOOK TO ROCK THE WORLD. ALSO: Education Special, the Hoop Nation of Nippon, Marie Kondo Cleans Out the Clutter, People, Parties, & Places and Much More. www.tokyoweekender.com CONTENTS 15 MASTERS OF J-HORROR: The Tokyo International Film Festival brings plenty of screams to the screen (© 2015 “Gekijorei” Film Partners). 14 BABYMETAL: Awww...These teenagers are on a rock-fueled musical rampage. 16 YOKAI GUIDE: Don’t know your kappa from your kirin? Turn to page 16, dear friends. 20 EDUCATION SPECIAL: Autumn is filled with new developments at Tokyo’s international schools. 6 The Guide: Our recommendations for hot fall looks, plus a snack that’s worth a train ride. 8 Gallery Guide: Edo period adult art, the College Women’s Association of Japan Print Show, and more. 10 Japan Hoops It Up: Lace ‘em up and get fired up for the coming season of NBA action. 13 Akita-Style Teppanyaki: Get ready for some of Japan’s finest ingredients, artfully prepared at Gomei. 28 Marie Kondo: Reclaiming joy by getting rid of clutter? Sounds like a win-win. 30 People, Parties, Places: A very French soirée, a tasty evening for Peru, and Bill back in the Studio 54 days. 34 Previews: The back story of Peter Pan, Keanu takes revenge, and the Not-So-Fantastic Four. 36 Agenda: Shimokita Curry Festival, Tokyo Design Week, and other October happenings. 38 Back in the Day: Looking back at a look ahead to the CWAJ Print Show, circa 1974. THIS MONTH IN THE WEEKENDER and domestic for decades, and this year the Tokyo International Film Festival has decided to take a walk on the spooky side, celebrating the work of three masters of “J-Horror,” including Hideo Nakata, whose upcoming release “Ghost Theater” gets the cover this month.

TRANSCULTURAL SPACES in SUBCULTURE: an Examination of Multicultural Dynamics in the Japanese Visual Kei Movement

TRANSCULTURAL SPACES IN SUBCULTURE: An examination of multicultural dynamics in the Japanese visual kei movement Hayley Maxime Bhola 5615A031-9 January 10, 2017 A master’s thesis submitted to the Graduate School of International Culture and Communication Studies Waseda University in partial fulfillment of the requirements for the degree of Master of Arts Abstract of the Dissertation The purpose of this dissertation was to examine Japanese visual kei subculture through the theoretical lens of transculturation. Visual kei (ヴィジュアル系) is a music-based subculture that formed in the late 1980s in Japan with bands like X Japan, COLOR and Glay. Bands are recognized by their flamboyant (often androgynous) appearances as well as their theatrical performances. Transculturation is a term originally coined by ethnographer Fernando Ortiz in response to the cultural exchange that took place during the era of colonization in Cuba. It describes the process of cultural exchange in a way that implies mutual action and a more even distribution of power and control over the process itself. This thesis looked at transculturation as it relates to visual kei in two main parts. The first was expositional: looking at visual kei and the musicians that fall under the genre as a product of transculturation between Japanese and non-Japanese culture. The second part was an effort to label visual kei as a transcultural space that is able to continue the process of transculturation by fostering cultural exchange and development among members within the subculture in Japan. Chapter 1 gave a brief overview of the thesis and explained the motivation behind conducting the research.